Enhancement of Multiple Sensor Images using Joint Image Fusion and Blind Restoration

Nikolaos Mitianoudis, Tania Stathaki

Communications and Signal Processing group, Imperial College London,

Exhibition Road, SW7 2AZ London, UK

Abstract

Image fusion systems aim at transferring “interesting” information from the input sensor images to the fused image. The common assumption for most fusion approaches is the existence of a high-quality reference image signal for all image parts in all input sensor images. In the case that there are common degraded areas, i.e. areas that are degraded in all of the input images, the fusion algorithms cannot improve the information provided there, but simply convey a combination of this degraded information to the output. In this study, the authors propose a combined spatial-domain method of fusion and restoration in order to identify these common degraded areas in the fused image and use a regularised restoration approach to enhance the content in these areas. The proposed approach was tested on both multi-focus and multi-modal image sets and produced interesting results.

Key words: Spatial-domain Image Fusion, Image Restoration.


1 Introduction

Data fusion is defined as the process of combining data from sensors and related information from several databases, so that the performance of the system can be improved, while the accuracy of the results can also be increased. Essentially, fusion is a procedure of incorporating essential information from several sensors into a composite result that will be more comprehensive and thus more useful for a human operator or other computer vision tasks.

Image fusion can be similarly viewed as the process of combining information in the form of images, obtained from various sources, in order to construct an artificial image that contains all “useful” information that exists in the input images. Each image has been acquired using different sensor modalities or capture techniques, and therefore it has different features, such as type of degradation, thermal and visual characteristics. The main concept behind all image fusion algorithms is to detect strong salient features in the input sensor images and fuse these details into the synthetic image. The resulting synthetic image is usually referred to as the fused image.

Preprint submitted to Elsevier Science 22 October 2007

Let $x_1(\mathbf{r}), \ldots, x_T(\mathbf{r})$ represent $T$ images of size $M_1 \times M_2$ capturing the same scene, where $\mathbf{r} = (i, j)$ refers to pixel coordinates $(i, j)$ in the image. Each image has been acquired using a different sensor; the sensors are placed relatively close to each other and observe the same scene. Ideally, the images acquired by these sensors should be similar. However, some points of the observed scene may not correspond exactly across the images, due to the different sensor viewpoints. Image registration is the process of establishing point-by-point correspondence between a number of images describing the same scene. In this study, the input images are assumed to have negligible registration problems, or the transformation matrix between the sensors' viewpoints is assumed to be known. Thus, the objects in all images can be considered geometrically aligned.

As already mentioned, the process of combining the important features from the original $T$ images to form a single enhanced image $y(\mathbf{r})$ is usually referred to as image fusion. Fusion techniques can be divided into spatial-domain and transform-domain techniques [5]. In spatial-domain techniques, the input images are fused in the spatial domain, i.e. using localised spatial features. Assuming that $g(\cdot)$ represents the “fusion rule”, i.e. the method that combines features from the input images, the spatial-domain techniques can be summarised as follows:

$$ y(\mathbf{r}) = g\big(x_1(\mathbf{r}), \ldots, x_T(\mathbf{r})\big) \tag{1} $$
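One simple, hypothetical instance of the fusion rule $g$ in (1) is sketched below in Python with NumPy: at each pixel, copy the input whose local gradient energy is largest, a common heuristic for multi-focus images. This is not the fusion rule proposed in this paper; the function names `local_activity` and `fuse_spatial` and the 3×3 window (`radius=1`) are illustrative assumptions.

```python
import numpy as np

def local_activity(x, radius=1):
    """Sum of squared forward differences over a (2*radius+1)^2 window around each pixel."""
    gi = np.zeros_like(x)
    gj = np.zeros_like(x)
    gi[:-1, :] = x[1:, :] - x[:-1, :]
    gj[:, :-1] = x[:, 1:] - x[:, :-1]
    energy = gi**2 + gj**2
    # Box-filter the energy; edge padding keeps the output at size M1 x M2.
    padded = np.pad(energy, radius, mode="edge")
    out = np.zeros_like(x)
    k = 2 * radius + 1
    for di in range(k):
        for dj in range(k):
            out += padded[di:di + x.shape[0], dj:dj + x.shape[1]]
    return out

def fuse_spatial(images, radius=1):
    """Hypothetical rule g: per pixel, copy the input with the highest local activity."""
    stack = np.stack([np.asarray(im, dtype=float) for im in images])  # shape (T, M1, M2)
    activity = np.stack([local_activity(im, radius) for im in stack])
    choice = np.argmax(activity, axis=0)  # index of the most "active" input at each r
    return np.take_along_axis(stack, choice[None, ...], axis=0)[0]

# Usage: a flat (featureless) input and a textured input of the same scene size.
rng = np.random.default_rng(0)
flat = np.full((16, 16), 0.5)
textured = rng.random((16, 16))
fused = fuse_spatial([flat, textured])  # every pixel is taken from the textured input
```

Because the rule only compares local statistics pixel by pixel, it needs no forward or inverse transform, which is the computational advantage of spatial-domain fusion discussed later in this section.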

Moving to a transform domain enables the use of a framework where the image's salient features are more clearly depicted than in the spatial domain. Let $\mathcal{T}\{\cdot\}$ represent a transform operator and $g(\cdot)$ the applied fusion rule. Transform-domain fusion techniques can then be outlined as follows:

$$ y(\mathbf{r}) = \mathcal{T}^{-1}\Big\{ g\big(\mathcal{T}\{x_1(\mathbf{r})\}, \ldots, \mathcal{T}\{x_T(\mathbf{r})\}\big) \Big\} \tag{2} $$
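To make (2) concrete, the sketch below uses a single-level orthonormal 2-D Haar transform as a stand-in for the transform operator in (2) (it is not one of the transforms cited in this paper) and a hypothetical fusion rule $g$ that averages the coarse band and keeps, at each position, the detail coefficient of largest magnitude. Image sides are assumed to be even; `haar2`, `ihaar2` and `fuse_transform` are illustrative names.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def haar2(x):
    """One-level orthonormal 2-D Haar transform; x must have even height and width.
    Returns the coarse band and a tuple of three detail bands."""
    a = (x[0::2, :] + x[1::2, :]) / SQRT2   # vertical pairwise sums
    d = (x[0::2, :] - x[1::2, :]) / SQRT2   # vertical pairwise differences
    ll = (a[:, 0::2] + a[:, 1::2]) / SQRT2
    lh = (a[:, 0::2] - a[:, 1::2]) / SQRT2
    hl = (d[:, 0::2] + d[:, 1::2]) / SQRT2
    hh = (d[:, 0::2] - d[:, 1::2]) / SQRT2
    return ll, (lh, hl, hh)

def ihaar2(ll, details):
    """Exact inverse of haar2."""
    lh, hl, hh = details
    a = np.empty((ll.shape[0], 2 * ll.shape[1]))
    d = np.empty_like(a)
    a[:, 0::2] = (ll + lh) / SQRT2
    a[:, 1::2] = (ll - lh) / SQRT2
    d[:, 0::2] = (hl + hh) / SQRT2
    d[:, 1::2] = (hl - hh) / SQRT2
    x = np.empty((2 * a.shape[0], a.shape[1]))
    x[0::2, :] = (a + d) / SQRT2
    x[1::2, :] = (a - d) / SQRT2
    return x

def fuse_transform(images):
    """Inverse transform of g applied to the transformed inputs, with a hypothetical g:
    mean of the coarse bands, max-absolute selection in each detail band."""
    coeffs = [haar2(np.asarray(im, dtype=float)) for im in images]
    ll = np.mean([c[0] for c in coeffs], axis=0)
    fused = []
    for band in range(3):
        stack = np.stack([c[1][band] for c in coeffs])   # shape (T, M1/2, M2/2)
        idx = np.argmax(np.abs(stack), axis=0)            # strongest coefficient per position
        fused.append(np.take_along_axis(stack, idx[None, ...], axis=0)[0])
    return ihaar2(ll, tuple(fused))
```

The max-absolute rule reflects the idea that large detail coefficients mark salient edges, while averaging the coarse band preserves the overall brightness of the inputs. The two transform steps per image are the overhead that a purely spatial-domain method avoids.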

Several transformations have been proposed for image fusion, including the Dual-Tree Wavelet Transform [5,7,12], Pyramid Decomposition [14] and image-trained Independent Component Analysis bases [10,9]. All these transformations project the input images onto localised bases that model sharp and abrupt transitions (edges), and therefore describe the image using a more meaningful representation that can be used to detect and emphasise the salient features important for image fusion. In essence, these transformations can discriminate between salient information (strong edges and texture) and constant or non-textured background, and can also evaluate the quality of the provided salient information. Consequently, one can select the required information from the input images in the transform domain to construct the “fused” image, following the criteria presented earlier.

In the case of multi-focus image fusion, an alternative spatial-domain approach has been proposed that exploits error estimation methods to identify high-quality edge information [6]. Fusion is performed by minimising an error between the fused and input images, using one of several proposed error norms in the spatial domain. A potential benefit of a spatial-domain approach is reduced computational complexity, since it avoids the forward and inverse transformation steps required by a transform-domain method.

In addition, within a spatial-domain fusion framework, one can also benefit from currently available spatial-domain image enhancement techniques and incorporate a restoration step to enhance areas that exhibit distorted information in all input images. Current fusion approaches cannot enhance areas that are degraded, in any sense, in all input images: for a fusion algorithm to produce a high-quality output, undistorted information must exist for every part of the image in at least one of the input images.

In this work, we propose to reformulate and extend the spatial-domain approach of Jones and Vorontsov [6] to fuse the non-degraded common parts of the sensor images. A novel approach is used to identify the areas of common degradation in all input sensor images. A double-regularised image restoration approach using robust functionals is then applied to the estimated common degraded area to enhance it in the “fused” image. The overall fusion result is superior to any traditional fusion approach, since the proposed approach goes beyond the concept of transferring useful information to a thorough fusion-enhancement approach.

2 Robust Error Estimation Theory

Let the image $y(\mathbf{r})$ be a recovered version of a degraded observed image $x(\mathbf{r})$, where $\mathbf{r} = (i, j)$ are pixel coordinates. To estimate the recovered image $y(\mathbf{r})$, one can minimise an error functional $E(y)$ that expresses the difference between the original image and the estimated one, in terms of $y$. The error functional can be defined by:

$$ E(y) = \int_{\Omega} \rho\big(\mathbf{r}, y(\mathbf{r}), |\nabla y(\mathbf{r})|\big)\, d\mathbf{r} \tag{3} $$

where $\Omega$ is the image support and $\nabla y(\mathbf{r})$ is the image gradient. The function $\rho(\cdot)$ is termed the error norm and is defined according to the application, i.e. the type of degradation or the desired task. For example, a least-squares error norm can be appropriate to remove additive Gaussian noise from a degraded image.
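On a pixel grid, the integral in (3) becomes a sum over pixels and the gradient can be approximated by finite differences. The minimal sketch below assumes forward differences with a zero difference at the last row and column, unit pixel area, and an error norm passed as a Python function of the pixel value and $|\nabla y|$ (explicit dependence on $\mathbf{r}$ is dropped). The names `grad_magnitude`, `energy` and `least_squares` are hypothetical; the example norm is the least-squares norm $\tfrac{1}{2}|\nabla y|^2$ of Section 2.1.

```python
import numpy as np

def grad_magnitude(y):
    """|grad y| from forward differences; the last row/column use a zero difference."""
    gi = np.zeros_like(y, dtype=float)
    gj = np.zeros_like(y, dtype=float)
    gi[:-1, :] = y[1:, :] - y[:-1, :]
    gj[:, :-1] = y[:, 1:] - y[:, :-1]
    return np.sqrt(gi**2 + gj**2)

def energy(y, rho):
    """Discrete version of (3): sum of rho(y, |grad y|) over all pixels (unit pixel area)."""
    y = np.asarray(y, dtype=float)
    return float(np.sum(rho(y, grad_magnitude(y))))

def least_squares(y, s):
    """Least-squares error norm 0.5 * |grad y|^2; it ignores the pixel value y."""
    return 0.5 * s**2

ramp = np.tile(np.arange(4.0), (4, 1))   # y[i, j] = j: unit slope along columns
print(energy(ramp, least_squares))       # prints 6.0 (12 unit differences, each giving 0.5)
print(energy(np.ones((4, 4)), least_squares))  # prints 0.0 (a constant image has no gradient)
```

Passing the norm as a function mirrors the text: the same discrete energy is reused with whatever $\rho(\cdot)$ suits the degradation at hand.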

The extremum of the functional in (3) can be estimated using the Euler-Lagrange equation. The Euler-Lagrange equation is an equation satisfied by a function $f$ of a parameter $t$ which extremises the functional:

$$ E(f) = \int F\big(t, f(t), f'(t)\big)\, dt \tag{4} $$

where $F$ is a given function with continuous first partial derivatives. The Euler-Lagrange equation is the following ordinary differential equation, i.e. a relation that contains functions of only one independent variable and one or more of their derivatives with respect to that variable, whose solution $f(t)$ extremises the above functional [21]:

$$ \frac{\partial}{\partial f(t)} F\big(t, f(t), f'(t)\big) - \frac{d}{dt} \frac{\partial}{\partial f'(t)} F\big(t, f(t), f'(t)\big) = 0 \tag{5} $$
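The Euler-Lagrange condition (5) can be checked numerically on a discretised functional. In the sketch below, which is an illustration rather than part of the method, $F = \tfrac{1}{2} f'(t)^2$, so $\partial F/\partial f = 0$ and $\partial F/\partial f' = f'$, and the left-hand side of (5) is $-f''(t)$. With unit sample spacing, the gradient of the discrete energy with respect to each interior sample should equal minus the second difference of $f$, i.e. the discretised left-hand side of (5). The helper names are hypothetical.

```python
import numpy as np

def dirichlet_energy(f):
    """Discrete 1-D version of (4) with F = 0.5 * f'(t)**2 and unit sample spacing."""
    return 0.5 * np.sum(np.diff(f) ** 2)

def numerical_gradient(func, f, h=1e-6):
    """Central finite-difference gradient of func with respect to each sample of f."""
    g = np.zeros_like(f)
    for k in range(f.size):
        step = np.zeros_like(f)
        step[k] = h
        g[k] = (func(f + step) - func(f - step)) / (2 * h)
    return g

rng = np.random.default_rng(0)
f = rng.standard_normal(10)
grad = numerical_gradient(dirichlet_energy, f)
euler_lagrange_lhs = -np.diff(f, n=2)  # 0 - d/dt f'(t), discretised at the interior samples
print(np.allclose(grad[1:-1], euler_lagrange_lhs, atol=1e-6))  # prints True
```

Because the energy is quadratic, the central difference is exact up to rounding, so the agreement reflects the Euler-Lagrange structure rather than a lucky step size. Setting this gradient to zero gives $f'' = 0$, i.e. the straight line that minimises the energy between fixed end points.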

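Applying the above rule to derive the extremum of (3), the following Euler-Lagrange equation is derived:

$$ \frac{\partial \rho}{\partial y} - \nabla \cdot \left( \frac{\partial \rho}{\partial \nabla y} \right) = 0 \tag{6} $$

where $\nabla \cdot$ denotes the divergence operator. Since $\rho(\cdot)$ is a function of $|\nabla y|$ and not of $\nabla y$, we perform the substitution

$$ \partial \nabla y = \frac{\partial |\nabla y|}{\operatorname{sgn}(\nabla y)} = \frac{|\nabla y|}{\nabla y}\, \partial |\nabla y| \tag{7} $$

where $\operatorname{sgn}(y) = y/|y|$, so that $\partial \rho / \partial \nabla y = \big(1/|\nabla y|\big)\big(\partial \rho / \partial |\nabla y|\big) \nabla y$. Consequently, the Euler-Lagrange equation is given by:

$$ \frac{\partial \rho}{\partial y} - \nabla \cdot \left( \frac{1}{|\nabla y|} \frac{\partial \rho}{\partial |\nabla y|} \nabla y(\mathbf{r}) \right) = 0 \tag{8} $$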

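Obtaining a closed-form solution $y(\mathbf{r})$ from (8) is not straightforward. Hence, one can use numerical optimisation methods to estimate $y$. Gradient-descent optimisation can be applied to estimate $y(\mathbf{r})$ iteratively. Since the time derivative $\partial y(\mathbf{r}, t)/\partial t$ defined in (10) is the negative of the left-hand side of (8), i.e. the negative gradient of $E$, the estimate is moved along it using the following update rule:

$$ y(\mathbf{r}, t) \leftarrow y(\mathbf{r}, t-1) + \eta \frac{\partial y(\mathbf{r}, t)}{\partial t} \tag{9} $$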

where $t$ is the time evolution parameter, $\eta$ is the optimisation step size and

$$ \frac{\partial y(\mathbf{r}, t)}{\partial t} = -\frac{\partial \rho}{\partial y} + \nabla \cdot \left( \frac{1}{|\nabla y|} \frac{\partial \rho}{\partial |\nabla y|} \nabla y(\mathbf{r}, t) \right) \tag{10} $$

Starting with the initial condition $y(\mathbf{r}, 0) = x(\mathbf{r})$, the iteration of (10) continues until the minimisation criterion is satisfied, i.e. $|\partial y(\mathbf{r}, t)/\partial t| < \epsilon$, where $\epsilon$ is a small constant ($\epsilon \sim 0.0001$). In practice, only a finite number of iterations is performed to achieve visually satisfactory results [6]. The choice of the error norm $\rho(\cdot)$ in the Euler-Lagrange equation is the next topic of discussion.
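A minimal NumPy sketch of the iteration in (9) and (10) is given below for an error norm that depends on $y$ and $|\nabla y|$ only. Assumptions: unit pixel spacing; forward differences for $\nabla$ and the matching backward-difference divergence with zero flux across the image border; explicit steps along (10), i.e. $y \leftarrow y + \eta\,\partial y/\partial t$, which decreases $E$ because (10) is minus the left-hand side of (8); and the stopping rule $\max_{\mathbf{r}} |\partial y/\partial t| < \epsilon$. The callables `drho_ds` (for $\partial\rho/\partial|\nabla y|$) and `drho_dy` (for $\partial\rho/\partial y$), the defaults `eta=0.2` and `max_iter=500`, and all function names are hypothetical.

```python
import numpy as np

def forward_grad(y):
    """Forward differences; zero at the last row/column (zero flux across the border)."""
    gi = np.zeros_like(y)
    gj = np.zeros_like(y)
    gi[:-1, :] = y[1:, :] - y[:-1, :]
    gj[:, :-1] = y[:, 1:] - y[:, :-1]
    return gi, gj

def backward_div(fi, fj):
    """Backward-difference divergence matched to forward_grad."""
    div = np.zeros_like(fi)
    div[0, :] = fi[0, :]
    div[1:, :] = fi[1:, :] - fi[:-1, :]
    div[:, 0] += fj[:, 0]
    div[:, 1:] += fj[:, 1:] - fj[:, :-1]
    return div

def dy_dt(y, drho_ds, drho_dy=None):
    """Right-hand side of (10) for an error norm rho(y, s) with s = |grad y|."""
    gi, gj = forward_grad(y)
    s = np.sqrt(gi**2 + gj**2)
    # (1/|grad y|) * d rho / d|grad y|; set to 0 where grad y = 0 (the flux vanishes there anyway)
    g = np.divide(drho_ds(s), s, out=np.zeros_like(s), where=s > 0)
    rhs = backward_div(g * gi, g * gj)
    if drho_dy is not None:
        rhs -= drho_dy(y)
    return rhs

def diffuse(x, drho_ds, drho_dy=None, eta=0.2, eps=1e-4, max_iter=500):
    """Gradient descent on (3): start from y(r, 0) = x(r) and step along (10)."""
    y = np.asarray(x, dtype=float).copy()
    for _ in range(max_iter):
        update = dy_dt(y, drho_ds, drho_dy)
        if np.max(np.abs(update)) < eps:  # minimisation criterion |dy/dt| < eps
            break
        y += eta * update
    return y

# Usage with the least-squares norm of Section 2.1, for which d rho / d|grad y| = |grad y|.
rng = np.random.default_rng(1)
noisy = rng.random((12, 10))
smoothed = diffuse(noisy, lambda s: s, eta=0.2, max_iter=50)
```

With `drho_ds = lambda s: s` the divergence term reduces to the familiar 5-point Laplacian, and for that case a step of $\eta \le 0.25$ keeps this explicit scheme stable. Other robust norms only change the callable, not the loop.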

2.1 Isotropic diffusion

As mentioned previously, one candidate error norm $\rho(\cdot)$ is the least-squares error norm. This norm is given by:

$$ \rho\big(\mathbf{r}, |\nabla y(\mathbf{r})|\big) = \frac{1}{2} |\nabla y(\mathbf{r})|^2 \tag{11} $$

The above error norm smooths Gaussian noise and depends only on the image gradient $\nabla y(\mathbf{r})$, not explicitly on the image $y(\mathbf{r})$ itself. If the least-squares error norm is substituted into the time evolution equation (10), we get the following update:

$$ \frac{\partial y(\mathbf{r}, t)}{\partial t} = \nabla^2 y(\mathbf{r}, t) \tag{12} $$

which is the isotropic diffusion equation, with the following analytic solution [2]:

$$ y(\mathbf{r}, t) = G(\mathbf{r}, t) * x(\mathbf{r}) \tag{13} $$

where $*$ denotes the convolution of the initial data $x(\mathbf{r})$ with the Gaussian kernel $G(\mathbf{r}, t) = (4\pi t)^{-1} \exp\big(-|\mathbf{r}|^2 / (4t)\big)$, whose standard deviation is $\sqrt{2t}$ in each coordinate. The solution specifies that the time evolution in (12) is a convolution process performing Gaussian smoothing.
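The step from (10) to (12) can be written out explicitly. For the norm (11), $\rho$ does not depend on $y$ and $\partial\rho/\partial|\nabla y| = |\nabla y|$, so the factor $1/|\nabla y|$ in (10) cancels (at $\nabla y = 0$ the flux is zero either way):

$$
\frac{\partial \rho}{\partial y} = 0, \qquad \frac{\partial \rho}{\partial |\nabla y|} = |\nabla y|
\quad\Longrightarrow\quad
\frac{\partial y(\mathbf{r}, t)}{\partial t} = \nabla \cdot \left( \frac{1}{|\nabla y|}\, |\nabla y|\, \nabla y(\mathbf{r}, t) \right) = \nabla \cdot \nabla y(\mathbf{r}, t) = \nabla^2 y(\mathbf{r}, t)
$$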

However, as the time evolution progresses, $y(\mathbf{r}, t)$ becomes the convolution of the input image with a Gaussian of constantly increasing variance, which will finally produce a constant value. In addition, it has been shown that isotropic diffusion not only smooths edges, but may also cause the actual edges in the image to drift from their true positions, because of the Gaussian filtering (smoothing) [2,13]. These are two disadvantages that need to be seriously considered when using isotropic diffusion.
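The smoothing behaviour described above can be observed with a short 1-D experiment. The sketch below, an illustration under stated assumptions rather than the authors' code, iterates an explicit discretisation of (12) with unit spacing, step $\eta = 0.25$ and zero-flux ends on an ideal step edge. As the number of iterations grows, the largest jump between neighbouring samples shrinks (the edge is smeared), the mean is preserved, and the standard deviation decays towards zero (the signal tends to a constant). `heat_step_1d` and `diffuse_1d` are hypothetical names.

```python
import numpy as np

def heat_step_1d(y, eta=0.25):
    """One explicit Euler step of (12) in 1-D, unit spacing, zero-flux ends (stable for eta <= 0.5)."""
    padded = np.pad(y, 1, mode="edge")         # replicate end values -> zero flux at the ends
    lap = padded[2:] - 2.0 * y + padded[:-2]   # discrete second derivative
    return y + eta * lap

def diffuse_1d(x, n_iter, eta=0.25):
    """Apply n_iter explicit steps of isotropic diffusion starting from x."""
    y = np.asarray(x, dtype=float).copy()
    for _ in range(n_iter):
        y = heat_step_1d(y, eta)
    return y

step = np.concatenate([np.zeros(20), np.ones(20)])   # ideal step edge between samples 19 and 20
for n in (10, 100, 1000, 20000):
    y = diffuse_1d(step, n)
    print(n, "max |dy| =", np.abs(np.diff(y)).max(), "mean =", y.mean(), "std =", y.std())
```

The printed maximum jump quantifies how quickly the sharp edge is lost, which is why a norm that diffuses less across strong gradients is preferable when edges must be preserved.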


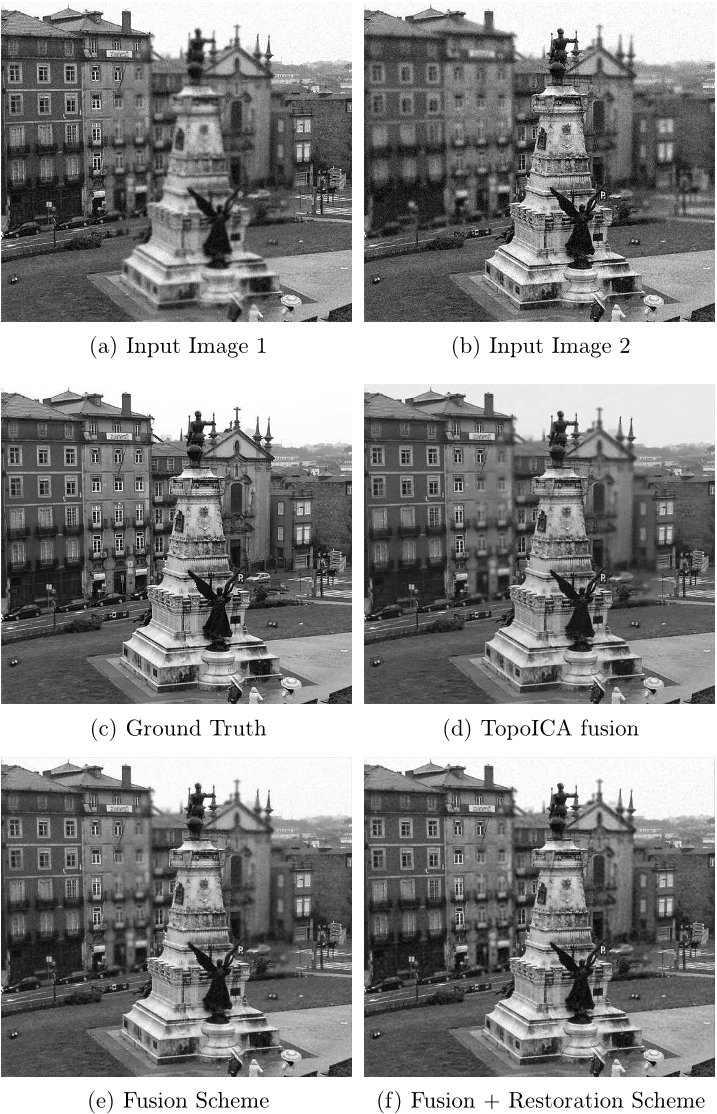

![Fig. 1. An out-of-focus fusion example using the “Disk” dataset available from the Image Fusion server [3]. We compare the TopoICA-based fusion approach and the proposed Diffusion scheme.](/figures/fig-1-an-out-of-focus-fusion-example-using-the-disk-dataset-1dofi6y6.png)

![Table 1 Performance evaluation of the Diffusion approach and the TopoICA-based fusion approach using Petrovic [18] and Piella’s [15] metrics.](/figures/table-1-performance-evaluation-of-the-diffusion-approach-and-3cgndre5.png)