
![Table 2: Topic milestone papers (top-10 papers) for Sentiment Analysis from [8].](/figures/table-2-topic-milestone-papers-top-10-papers-for-sentiment-riuw03tb.png)

38 citations

13 citations

...Lu et al. (2014) proposed a topic model which uses authorship, published venues, and citation relations among scientific documents to detect topics and identify the most notable works in the corpus....

[...]

...The collective topic model (CTM) proposed by Lu et al. (2014) simultaneously discovers topics and related milestone papers in the corpus by modeling papers, authors, and published venues as a bag of citations based on the PLSA model....
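The snippet above says CTM builds on PLSA by treating papers (and authors and venues) as bags of citations. As an illustration, here is a minimal sketch of plain PLSA fitted with EM to a hypothetical document-by-cited-paper count matrix. It is not the CTM of Lu et al. (2014), which also models authorship and venues. The function name `plsa` and its parameters are assumptions made for this example.

```python
import random

def plsa(counts, n_topics, n_iter=50, seed=0):
    """Plain PLSA fitted with EM (illustrative sketch, not CTM itself).

    counts[d][c] = how often citing document d cites paper c (a "bag of citations").
    Returns (p_z_d, p_c_z): P(z | d) per document and P(c | z) per topic.
    """
    rng = random.Random(seed)
    n_docs, n_cited = len(counts), len(counts[0])

    def normalize(row):
        total = sum(row)
        if total == 0:  # e.g. a document with no citations: fall back to uniform
            return [1.0 / len(row)] * len(row)
        return [x / total for x in row]

    # Random positive initialisation of both distributions
    p_z_d = [normalize([rng.random() for _ in range(n_topics)]) for _ in range(n_docs)]
    p_c_z = [normalize([rng.random() for _ in range(n_cited)]) for _ in range(n_topics)]

    for _ in range(n_iter):
        exp_z_d = [[0.0] * n_topics for _ in range(n_docs)]
        exp_c_z = [[0.0] * n_cited for _ in range(n_topics)]
        for d in range(n_docs):
            for c in range(n_cited):
                n = counts[d][c]
                if n == 0:
                    continue
                # E-step: P(z | d, c) is proportional to P(z | d) * P(c | z)
                post = [p_z_d[d][z] * p_c_z[z][c] for z in range(n_topics)]
                total = sum(post)
                if total == 0:
                    continue
                for z in range(n_topics):
                    r = n * post[z] / total  # expected count of (d, c) assigned to z
                    exp_z_d[d][z] += r
                    exp_c_z[z][c] += r
        # M-step: renormalise the expected counts
        p_z_d = [normalize(row) for row in exp_z_d]
        p_c_z = [normalize(row) for row in exp_c_z]
    return p_z_d, p_c_z
```

For example, `plsa([[3, 2, 0, 0], [2, 3, 0, 0], [0, 0, 4, 1], [0, 0, 1, 4]], n_topics=2)` returns topic proportions for the four citing documents and, for each topic, a distribution over the four cited papers.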

[...]

3 citations

...In our model, unlike [6, 17], we use topics extracted from textual information....

[...]

...Lu et al. [6] extend the method by considering additional factors that influence the importance of papers, such as authorship and published venues....

[...]

...Thus, the topics described in [6, 17] are too general and imprecise....

[...]

...Although [6, 17] use “topic” in the description of their methods, the topic defined in [6, 17] is actually a cluster of documents....

[...]

...In [6, 17], the references of a document are determined by sampling cited documents according to the topic-document distribution....
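To make the sampling step concrete, here is a minimal generative sketch. It assumes that a citing document has topic proportions `theta_d` and that each topic `z` has a distribution `phi[z]` over citable documents. The names and this two-stage parameterization are illustrative assumptions. The snippet does not give the exact model used in [6, 17].

```python
import random

def sample_references(theta_d, phi, n_refs, seed=None):
    """Sample cited documents for one citing document (hypothetical sketch).

    theta_d[z] : P(z | d), topic proportions of the citing document
    phi[z][c]  : P(c | z), probability that topic z leads to citing document c
    Returns a list of n_refs indices of cited documents.
    """
    rng = random.Random(seed)
    refs = []
    for _ in range(n_refs):
        # First draw a topic from the citing document's topic distribution...
        z = rng.choices(range(len(theta_d)), weights=theta_d, k=1)[0]
        # ...then draw a cited document from that topic's citation distribution.
        c = rng.choices(range(len(phi[z])), weights=phi[z], k=1)[0]
        refs.append(c)
    return refs
```

With `theta_d = [1.0, 0.0]` and `phi = [[0, 0.5, 0.5], [1, 0, 0]]`, every sampled reference comes from topic 0, so only documents 1 and 2 can be drawn.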

[...]

2 citations

...Topic models are a common technique for studying the evolution of research themes [31,32] and for discovering high-quality papers [33]....

[...]

2 citations

...Further, topic models have been employed for retrieving relevant papers [7]....

[...]

332 citations

...Experiments on a real dataset, ANN, show that our model can better evaluate the impact of papers and that its results are not biased against new publications....

[...]

...The ACL Anthology Network (ANN) [7] was used in our experiments....

[...]

144 citations

...Some citation recommendation systems have been designed to recommend appropriate citations for academic works [5, 1]....

[...]

...For example, [5] designed a translation model between citation contexts and reference words, and recommended a list of citations by using long queries such as sentences or a manuscript....

[...]

...Previous work exists in citation recommendation [5, 1]; i....

[...]

140 citations

...Some citation recommendation systems have been designed to recommend appropriate citations for academic works [5, 1]....

[...]

...Bethard and Jurafsky [1] designed a feature-based learning model for literature retrieval....

[...]

...Previous work exists in citation recommendation [5, 1]; i....

[...]

...[1] S. Bethard and D. Jurafsky....

[...]

54 citations

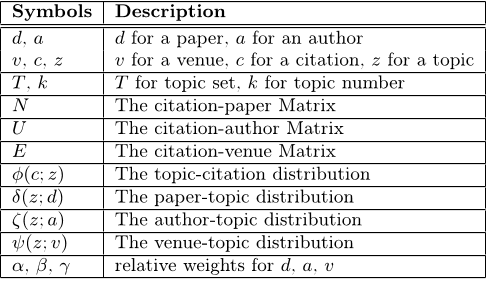

...Table 2: Topic milestone papers (top-10 papers) for Sentiment Analysis from [8]....

[...]

...The top-2 paper in our model is ranked higher than in [8] (top-4)....

[...]

...This dataset was also used in previous work [8], which allows us to make direct comparisons with [8]....

[...]

...However, [8] only considered co-citation relations for topic milestone paper discovery....
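Co-citation means that two papers appear together in the reference list of the same citing paper. The sketch below counts co-citations from a hypothetical mapping of citing-paper IDs to cited-paper IDs. The input format and function name are assumptions. The snippet does not describe how [8] builds or uses these counts.

```python
from collections import Counter
from itertools import combinations

def cocitation_counts(references):
    """Count how often each pair of papers is cited together (illustrative sketch).

    references: dict mapping a citing paper ID to the list of IDs it cites.
    Returns a Counter keyed by sorted (paper_a, paper_b) tuples.
    """
    counts = Counter()
    for cited in references.values():
        # Deduplicate and sort so each unordered pair has one canonical key
        for a, b in combinations(sorted(set(cited)), 2):
            counts[(a, b)] += 1
    return counts
```

For `{"p1": ["A", "B", "C"], "p2": ["A", "B"], "p3": ["B", "C"]}`, papers A and B are co-cited twice, B and C twice, and A and C once.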

[...]

...In order to compare with previous work [8], we use the top-10 papers for the topic Sentiment Analysis as an example....
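If a model yields a per-topic distribution over papers, P(c | z), one simple way to produce a "top-10 papers for a topic" list is to rank papers by that probability. The sketch below assumes such a distribution is available. The function name and inputs are hypothetical, and the cited works may rank papers differently.

```python
import heapq

def top_k_papers(p_c_z, topic, paper_ids, k=10):
    """Return the IDs of the k papers with the highest P(c | z) for one topic.

    p_c_z[z][c] : probability of paper c under topic z (assumed model output)
    paper_ids   : list mapping column index c to a paper identifier
    """
    scores = p_c_z[topic]
    ranked = heapq.nlargest(k, range(len(scores)), key=scores.__getitem__)
    return [paper_ids[i] for i in ranked]
```

For example, with `p_c_z = [[0.1, 0.5, 0.4]]` and IDs `["a", "b", "c"]`, the top two papers for topic 0 are `"b"` and then `"c"`.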

[...]

17 citations