Autonomous Robots 16, 95–116, 2004

© 2004 Kluwer Academic Publishers. Manufactured in The Netherlands.

A Color Vision-Based Lane Tracking System for Autonomous Driving on Unmarked Roads

MIGUEL ANGEL SOTELO AND FRANCISCO JAVIER RODRIGUEZ

Department of Electronics, University of Alcala, Alcalá de Henares, Madrid, Spain

michael@depeca.uah.es

fjrs@depeca.uah.es

LUIS MAGDALENA

Department of Applied Mathematics, Technical University, Madrid, Spain

llayos@mat.upm.es

LUIS MIGUEL BERGASA AND LUCIANO BOQUETE

Department of Electronics, University of Alcala, Alcalá de Henares, Madrid, Spain

bergasa@depeca.uah.es

luciano@depeca.uah.es

Abstract. This work describes a color vision-based system intended to perform stable autonomous driving on unmarked roads. This requires an accurate road surface detection system that ensures vehicle stability. Although this topic has already been documented in the technical literature by different research groups, the vast majority of existing Intelligent Transportation Systems are devoted to assisted driving of vehicles on marked extraurban roads and highways. The complete system was tested on the BABIECA prototype vehicle, which was autonomously driven for hundreds of kilometers, accomplishing different navigation missions on a private circuit that emulates an urban quarter. During the tests, the navigation system demonstrated its robustness with regard to shadows, road texture, weather, and changing illumination conditions.

Keywords: color vision-based lane tracker, unmarked roads, unsupervised segmentation

1. Introduction

The main issue addressed in this work is the design of a vision-based algorithm for autonomous vehicle driving on unmarked roads.

1.1. Motivation for Autonomous Driving Systems

The deployment of Autonomous Driving Systems is a challenging topic that has attracted the interest of research institutions all over the world since the mid-1980s. Apart from the obvious safety benefits, such as reduced accident rates and lives saved, automatic driving offers other clear advantages. On the one hand, vehicles that automatically keep a short but reliable safety distance increase the capacity of roads and highways, which leads to a more efficient use of infrastructure. On the other hand, notable fuel savings can be achieved by automatically controlling vehicle velocity so as to keep a smooth acceleration profile. Likewise, automatic cooperative driving of vehicle fleets transporting heavy loads can lead to notable industrial cost reductions.

1.2. Autonomous Driving on Highways and Extraurban Roads

Although the basic goal of this work is the development of an Autonomous Driving System for unmarked roads, the techniques deployed for lane tracking in this kind of scenario are similar to those developed for road tracking on highways and structured roads, since both face common problems. Nonetheless, most of the research groups currently working on this topic focus their efforts on autonomously navigating vehicles on structured roads, i.e., marked roads. This reduces the navigation problem to the localization of lane markers painted on the road surface. That is the case of some well-known and prestigious systems such as RALPH (Rapid Adapting Lateral Position Handler) (Pomerleau and Jockem, 1996), developed on the Navlab vehicle at the Robotics Institute of Carnegie Mellon University; the impressive unmanned vehicles developed during the last decade by the research groups at the UBM (Dickmanns et al., 1994; Lutzeler and Dickmanns, 1998) and Daimler-Benz (Franke et al., 1998); and the GOLD system (Bertozzi and Broggi, 1998; Broggi et al., 1999), implemented on the ARGO autonomous vehicle at the Università di Parma. All these systems have widely proved their validity in extensive tests covering thousands of kilometers of autonomous driving on structured highways and extraurban roads. The effectiveness of these results on structured roads has led to the commercialization of some of these systems as driving aid products that provide warning signals upon lane departure.

Some research groups have also tackled the problem of autonomous vision-based navigation on completely unstructured roads. Among them are the SCARF and UNSCARF systems (Thorpe, 1990), designed to extract the road shape from the study of homogeneous regions in a color image. The ALVINN (Autonomous Land Vehicle In a Neural Net) system (Pomerleau, 1993) is also able to follow unmarked roads after a proper training phase on the particular roads where the vehicle must navigate. The group at the Universität der Bundeswehr, Munich, headed by E. Dickmanns, has also produced a remarkable number of works on this topic since the early 1980s. Autonomous guidance of vehicles on either marked or unmarked roads showed its first results in Dickmanns and Zapp (1986) and Dickmanns and Mysliwetz (1992), where nine road and vehicle parameters were recursively estimated following the 4D approach on 3D scenes. More recently, a combination of on- and off-road driving was achieved in Gregor et al. (2001) using the EMS-Vision (Expectation-based Multifocal Saccadic vision) system, which showed a wide range of maneuvering capabilities. Likewise, a similar system can be found in Lutzeler and Dickmanns (2000) and Gregor et al. (2002), where a real autonomous system for intelligent navigation in a network of unmarked roads and intersections is designed and implemented using edge detectors for lane tracking. The vehicle, also based on the EMS-Vision system, is equipped with a four-camera vision system and can be considered the first completely autonomous vehicle capable of successfully performing some kind of global mission in an urban-like environment.

On the other hand, the work of the Department of Electronics at the University of Alcala (UAH) in the field of autonomous vehicle driving started in 1993 with the design of a vision-based algorithm for outdoor environments (Rodriguez et al., 1998), implemented on an industrial forklift truck autonomously operated on the UAH campus. After that, the development of a vision-based system (Sotelo et al., 2001; De Pedro et al., 2001) for autonomous vehicle driving on unmarked roads was undertaken, leading to the results presented in this paper. The complete navigation system was implemented on BABIECA, an electric Citroën Berlingo commercial prototype, depicted in Fig. 1. The vehicle is equipped with a color camera, a DGPS receiver, two computers, and the electronic equipment needed for automatic actuation on the steering wheel and the brake and accelerator pedals, so that both lateral and longitudinal control are fully automatic during navigation. Real tests were carried out on a private circuit emulating an urban quarter, composed of streets and intersections (crossroads), located at the Instituto de Automática Industrial del CSIC in Madrid, Spain. Additionally, a live demonstration of the system's autonomous driving capabilities was carried out during the IEEE Conference on Intelligent Vehicles 2002, on a private circuit located at Satory (Versailles), France.

The remainder of this paper is organized as follows: Section 2 describes the color vision-based algorithm for lane tracking, Section 3 provides some global results, and concluding remarks are presented in Section 4.


Figure 1. Babieca autonomous vehicle.

2. Lane Tracking

As described in the previous section, the main goal of this work is to robustly track the lane of any kind of road, structured or not. This includes non-structured roads, i.e., roads without lane markers painted on them.

2.1. Region of Interest

The original 480 × 512 image acquired by a color camera is rescaled in real time to a low-resolution 60 × 64 image by means of the system hardware. This inevitably reduces pixel resolution, and the loss must be assessed: the maximum resolution of direct measurements ranges from 4 cm at a distance of 10 m to 8 cm at 20 m.
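The two quoted figures grow linearly with distance, which is what a pinhole camera looking over flat ground predicts: one image column spans roughly Z/f metres at depth Z, where f is the focal length in pixels. The sketch below checks this arithmetic. The helper `metres_per_pixel` is hypothetical, and `FOCAL_PX_LOWRES` is an assumed value back-computed from the "4 cm at 10 m" figure, not a calibration reported for BABIECA.

```python
def metres_per_pixel(distance_m: float, focal_px: float) -> float:
    """Lateral road width covered by one image column at a given distance.

    Pinhole model: a lateral offset dX at depth Z projects to f * dX / Z pixels,
    so one pixel spans Z / f metres (lens distortion and camera pitch ignored).
    """
    return distance_m / focal_px


# Hypothetical focal length in pixels of the 60 x 64 image: 10 m / 0.04 m.
# Assumed for illustration only, not a calibrated value.
FOCAL_PX_LOWRES = 250.0

for z in (10.0, 20.0):
    cm = 100.0 * metres_per_pixel(z, FOCAL_PX_LOWRES)
    print(f"{z:.0f} m -> {cm:.1f} cm per pixel")
```

With this single assumed focal length the model reproduces both quoted values. Since the image is downscaled by a factor of 8 in each direction, the same camera would have an equivalent focal length of about 2000 pixels at the full 480 × 512 resolution.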

Nonetheless, temporal filtering techniques (described in the following sections) make it possible to obtain finer-resolution estimates. As discussed in Bertozzi et al. (2000), owing to the physical and continuity constraints derived from vehicle motion and road design, the analysis of the whole image can be replaced by the analysis of a specific portion of it, namely the region of interest. Within this region, a priori knowledge of the road shape, given by the proposed parabolic road model, keeps the probability of finding the most relevant road features high. Thus, in most cases the region of interest is reduced to some portion of the image surrounding the road edges estimated in the previous iteration of the algorithm. This is a valid assumption for road tracking applications that rely heavily on the detection of lane markers representing the road edges. That is not the case for the work presented in this paper, whose main goal is to navigate autonomously on completely unstructured roads (including rural paths, etc.). As described later, color and shape features are the key characteristics used to distinguish the road from the rest of the elements in the image. This leads to a slightly different concept of region of interest, in which the complete road must be entirely contained in the region under analysis.

Conversely, a narrow focus of attention around the previous road model is ruled out because of the unstable behavior that the segmentation process exhibits with it in practice (a more detailed justification is given in the next sections). Instead, a rectangular region of interest of 36 × 64 pixels covering the nearest 20 m ahead of the vehicle is proposed, as shown in Fig. 2. This restriction removes non-relevant elements such as the sky, trees, and buildings from the image.
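A minimal sketch of the image reduction described in this subsection, assuming NumPy. The text only states that the 480 × 512 frame is rescaled to 60 × 64 by the system hardware, so the 8 × 8 block averaging used here is an assumption, as is taking the 36 × 64 region of interest from the bottom rows of the low-resolution image (the part nearest the vehicle). The function names are hypothetical.

```python
import numpy as np


def downscale_block_mean(img: np.ndarray, factor: int = 8) -> np.ndarray:
    """Shrink an (H, W, 3) image by averaging non-overlapping factor x factor blocks."""
    h, w, c = img.shape
    if h % factor or w % factor:
        raise ValueError("image size must be a multiple of the factor")
    # Element [i, a, j, b, k] of the reshaped array is pixel (i*factor + a, j*factor + b, k).
    blocks = img.reshape(h // factor, factor, w // factor, factor, c)
    return blocks.mean(axis=(1, 3))


def region_of_interest(low_res: np.ndarray, rows: int = 36) -> np.ndarray:
    """Keep the bottom `rows` rows, assumed to be the road area nearest the vehicle."""
    return low_res[-rows:, :, :]


# Synthetic 480 x 512 color frame standing in for a camera image.
frame = np.random.default_rng(0).integers(0, 256, size=(480, 512, 3)).astype(np.float64)
small = downscale_block_mean(frame)   # shape (60, 64, 3)
roi = region_of_interest(small)       # shape (36, 64, 3)
print(small.shape, roi.shape)
```

Averaging, rather than simply keeping every eighth pixel, also smooths sensor noise, which may help a color-based segmentation; whether the original hardware does this is not stated.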

Figure 2. Area of interest.

2.2. Road Features

The combined use of color and shape restrictions provides the essential information required to drive on non-structured roads. Prior to image segmentation, selecting the most suitable color space is an essential part of the process. On the one hand, the RGB color space has been extensively tested and used in previous road tracking applications on non-structured roads (Thorpe, 1990; Crisman and Thorpe, 1991; Rodriguez et al., 1998). Nevertheless, the RGB color space has some well-known disadvantages: it is non-intuitive and non-uniform in color separation, which means that two perceptually close colors can be far apart in RGB space; its components are strongly correlated; and a color cannot easily be imagined from its RGB components. On the other hand, some applications transform the RGB color information into a different color space in which the luminance and chrominance components of the color are clearly separated from each other. This kind of representation benefits from a color description model that is closely oriented to human perception of colors. Additionally, in outdoor environments the change in luminance is very large due to unpredictable and uncontrollable weather conditions, while the change in color, or chrominance, is much less relevant. This makes it highly advisable to use a color space in which intensity (luminance) and color (chrominance) information are clearly separated.

The HSI (Hue, Saturation and Intensity) color space is a good example of this kind of representation, as it describes colors in terms that can be intuitively understood. A human can easily recognize basic color attributes: intensity (luminance or brightness), hue or color, and saturation (Ikonomakis et al., 2000). Hue represents the impression related to the predominant wavelength in the perceived color stimulus. Saturation corresponds to the relative purity of the color; thus, non-saturated colors are gray-scale colors. Intensity is the amount of light in a color: maximum intensity is perceived as pure white, while minimum intensity is pure black. Some of the most relevant advantages of the HSI color space are the following. It is closely related to human perception of colors and has a high power to discriminate colors, especially through the hue component. The difference between colors can be directly quantified using a distance measure. The transformation from the RGB color space to the HSI color space is given by Eqs. (1) and (2), where V1 and V2 are intermediate variables containing the chrominance information of the color.

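$$
\begin{bmatrix} I \\ V_1 \\ V_2 \end{bmatrix}
=
\begin{bmatrix}
\frac{1}{3} & \frac{1}{3} & \frac{1}{3} \\
-\frac{1}{\sqrt{6}} & -\frac{1}{\sqrt{6}} & \frac{2}{\sqrt{6}} \\
\frac{1}{\sqrt{6}} & -\frac{2}{\sqrt{6}} & \frac{1}{\sqrt{6}}
\end{bmatrix}
\cdot
\begin{bmatrix} R \\ G \\ B \end{bmatrix}
\tag{1}
$$

$$
H = \arctan\left(\frac{V_2}{V_1}\right), \qquad S = \sqrt{V_1^2 + V_2^2}
\tag{2}
$$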
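Eqs. (1) and (2) can be sketched per pixel in Python as follows. The function names are hypothetical, and `math.atan2` replaces the plain arctan(V2/V1) of Eq. (2): this is a swapped-in implementation choice that keeps the hue defined over the whole circle and avoids dividing by zero when V1 = 0.

```python
import math

# Rows of the matrix in Eq. (1): intensity, then the two chrominance components.
_M = (
    (1 / 3, 1 / 3, 1 / 3),
    (-1 / math.sqrt(6), -1 / math.sqrt(6), 2 / math.sqrt(6)),
    (1 / math.sqrt(6), -2 / math.sqrt(6), 1 / math.sqrt(6)),
)


def rgb_to_iv1v2(r: float, g: float, b: float) -> tuple:
    """Apply the linear map of Eq. (1) to one RGB pixel; returns (I, V1, V2)."""
    return tuple(row[0] * r + row[1] * g + row[2] * b for row in _M)


def rgb_to_hsi(r: float, g: float, b: float) -> tuple:
    """Return (H, S, I) following Eqs. (1) and (2); H is in radians, in (-pi, pi]."""
    i, v1, v2 = rgb_to_iv1v2(r, g, b)
    h = math.atan2(v2, v1)   # quadrant-aware version of arctan(V2 / V1)
    s = math.hypot(v1, v2)   # sqrt(V1**2 + V2**2)
    return h, s, i


print(rgb_to_hsi(0.5, 0.5, 0.5))   # a gray pixel: saturation ~ 0
print(rgb_to_hsi(0.0, 0.0, 1.0))   # pure blue
```

Because both chrominance rows of the matrix sum to zero, any gray pixel (R = G = B) maps to V1 = V2 = 0 and hence S = 0. Scaling a pixel's RGB values by a common factor, a crude model of a global brightness change, scales I, V1 and V2 by that factor, so H is unchanged while S changes in proportion.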

This transformation describes a geometric approximation that maps the RGB color cube into the HSI color space, as depicted in Fig. 3, where colors are distributed in a cylindrical manner. An alternative currently under consideration is to change the coordinate frame so that it is aligned with the I axis, and then compute one component along the I axis and the other in the plane normal to it. This could save some computing time by avoiding the trigonometric operations.
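The saving mentioned above can be made concrete for chrominance comparisons. Since H and S in Eq. (2) are the polar angle and radius of the point (V1, V2), a chrominance distance computed from hue and saturation with the law of cosines (a common choice in HSI segmentation, assumed here rather than taken from this text) equals the plain Euclidean distance between the two (V1, V2) points, which needs no arctan or cosine. The helper names are hypothetical.

```python
import math


def chroma_distance_polar(h1: float, s1: float, h2: float, s2: float) -> float:
    """Chrominance distance from hue (radians) and saturation via the law of cosines."""
    d2 = s1 * s1 + s2 * s2 - 2.0 * s1 * s2 * math.cos(h1 - h2)
    return math.sqrt(max(d2, 0.0))  # clamp tiny negative rounding errors


def chroma_distance_cartesian(v1a: float, v2a: float, v1b: float, v2b: float) -> float:
    """The same distance computed directly in the (V1, V2) plane, with no trigonometry."""
    return math.hypot(v1a - v1b, v2a - v2b)


# Two chrominance points (V1, V2); their H and S are the polar coordinates of Eq. (2).
a, b = (0.3, -0.4), (-0.1, 0.2)
polar = chroma_distance_polar(math.atan2(a[1], a[0]), math.hypot(*a),
                              math.atan2(b[1], b[0]), math.hypot(*b))
cartesian = chroma_distance_cartesian(*a, *b)
print(polar, cartesian)
```

Both functions return the same value up to rounding; the Cartesian form only needs the linear map of Eq. (1), a subtraction and a square root per pixel.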

Figure 3. Mapping from the RGB cube to the HSI color space.


Although the RGB color space has been successfully used in previous works dealing with road segmentation (Thorpe, 1990; Rodriguez et al., 1998), the HSI color space has exhibited superior performance in image segmentation problems, as reported in Ikonomakis et al. (2000). Accordingly, we propose the use of color features in the HSI color space as the basis for segmenting non-structured roads. A more detailed discussion supporting the use of the HSI color space for image segmentation in outdoor applications is given in Sotelo (2001).

2.3. Road Model

The use of a road model eases the reconstruction of the road geometry and allows the data computed during the feature search process to be filtered. Among the different possibilities found in the literature, models relying on clothoids (Dickmanns et al., 1994) and polynomial expressions have shown high performance in the field of road tracking. More concretely, parabolic functions modeling the projection of the road edges onto the image plane have been proposed and successfully tested in previous works (Schneiderman and Nashman, 1994). Parabolic models do not allow inflection points (changes in the sign of the curvature), which could cause problems on very winding roads. Nonetheless, parabolic models have proved sufficient in practice for autonomous driving on two different test tracks, including curved roads, by using an appropriate lookahead distance, as described in Sotelo (2003). Some of the advantages of a second-order polynomial model are described below.

• Simplicity: a second-order polynomial model has only three adjustable coefficients.

• Physical plausibility: in practice, any real stretch of road can be reasonably approximated by a parabolic function in the image plane. Discontinuities in the road model are only encountered at road intersections and, particularly, at crossroads.

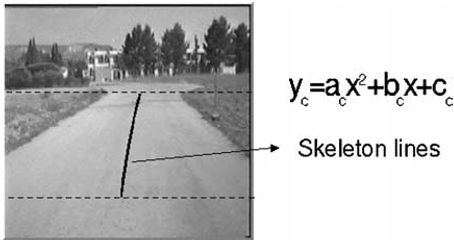

Accordingly, we have adopted second-order polynomial functions for both the edges and the center of the road, as depicted in Fig. 4. The center (skeleton) line serves as the reference trajectory from which the steering angle command is obtained.
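A minimal sketch of the second-order road model, assuming NumPy and assuming each edge is available as image column positions at several image rows, modeled as col = a·row² + b·row + c (this parameterization is an assumption). Each edge is fitted with NumPy's ordinary least-squares `np.polyfit`. Since the midpoint of two parabolas evaluated on the same row is itself a parabola, the center line is obtained here by averaging the edge coefficients. Function names and data are hypothetical.

```python
import numpy as np


def fit_parabola(rows: np.ndarray, cols: np.ndarray) -> np.ndarray:
    """Ordinary least-squares fit of col = a*row**2 + b*row + c; returns [a, b, c]."""
    return np.polyfit(rows, cols, deg=2)


def center_line(left: np.ndarray, right: np.ndarray) -> np.ndarray:
    """Coefficients of the curve lying halfway between two edge parabolas on every row."""
    return (left + right) / 2.0


# Synthetic edge points on rows 24..59 of a 60 x 64 image (made-up values).
rows = np.arange(24, 60, dtype=float)
left = fit_parabola(rows, 0.01 * rows**2 - 0.9 * rows + 30.0)
right = fit_parabola(rows, -0.01 * rows**2 + 0.9 * rows + 20.0)
center = center_line(left, right)
print(center)  # highest power first: approximately [0, 0, 25]
```

With noisy edge points the same call returns the least-squares compromise, which is what makes the three-coefficient model robust to isolated segmentation errors.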

The adjustable parameters of the parabolic functions are continuously updated at each iteration of the algorithm using a well-known least squares estimator, as described later. Likewise, the road width is estimated from the road model under the assumptions of slowly varying width and flat terrain. The joint use of a polynomial road model and these constraints allows a simple mapping between the 2D image plane and the 3D real scene using a single camera.
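Under the flat-terrain assumption, the mapping from the image plane to the road can be sketched with a pinhole camera. The sketch additionally assumes zero camera pitch, so that a road point seen at image row v lies at forward distance Z = f·h / (v − v_h), where h is the camera height and v_h the horizon row. The class name and all camera parameters in the example are hypothetical, not BABIECA's calibration.

```python
from dataclasses import dataclass


@dataclass
class FlatGroundCamera:
    """Hypothetical pinhole camera looking straight ahead (zero pitch) over a flat road."""
    focal_px: float     # focal length in pixels
    height_m: float     # camera height above the road, metres
    horizon_row: float  # image row of the horizon (rows grow downward)
    center_col: float   # image column of the principal point

    def ground_point(self, col: float, row: float) -> tuple:
        """Map a pixel below the horizon to (lateral X, forward Z) on the road, in metres."""
        dv = row - self.horizon_row
        if dv <= 0:
            raise ValueError("pixel is on or above the horizon")
        z = self.focal_px * self.height_m / dv
        x = (col - self.center_col) * z / self.focal_px
        return x, z

    def road_width(self, left_col: float, right_col: float, row: float) -> float:
        """Metric distance between the two road edges found on the same image row."""
        xl, _ = self.ground_point(left_col, row)
        xr, _ = self.ground_point(right_col, row)
        return xr - xl


cam = FlatGroundCamera(focal_px=250.0, height_m=1.6, horizon_row=4.0, center_col=32.0)
x, z = cam.ground_point(32.0, 24.0)
print(z, cam.road_width(12.0, 52.0, 24.0))
```

With these made-up values, row 24 (the top of a 36-row region at the bottom of a 60-row image) lies 20 m ahead, and two edges 40 columns apart on that row are 3.2 m apart on the road. Evaluating the width on several rows and smoothing it over time is one way to exploit the slowly varying width assumption.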

2.4. Road Segmentation

Image segmentation must exploit the cylindrical distribution of color features in the HSI color space, bearing in mind that the separation between road and non-road color characteristics is nonlinear. To better understand which distance measure is most appropriate for the road segmentation problem, consider again the decomposition of a color vector into its three components in the HSI color space, as illustrated in Fig. 3. According to this decomposition, the comparison between a pattern pixel, denoted by $P_p$, and any given pixel $P_i$ can be directly measured in terms of intensity and chrominance distance, as depicted in Fig. 5.
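A minimal sketch of comparing every pixel of the region of interest with a pattern pixel $P_p$ in terms of intensity and chrominance distance, assuming NumPy. The exact distance measure and thresholds are not specified in this paragraph, so the sketch assumes the absolute intensity difference and the Euclidean distance in the (V1, V2) plane of Eq. (1), each compared against a hypothetical threshold. The function name, thresholds and pixel values are illustrative only.

```python
import numpy as np

# Rows of the RGB -> (I, V1, V2) matrix of Eq. (1).
_RGB_TO_IV = np.array([
    [1 / 3, 1 / 3, 1 / 3],
    [-1 / np.sqrt(6), -1 / np.sqrt(6), 2 / np.sqrt(6)],
    [1 / np.sqrt(6), -2 / np.sqrt(6), 1 / np.sqrt(6)],
])


def road_mask(roi_rgb, pattern_rgb, max_intensity_dist, max_chroma_dist):
    """Mark as road every pixel close to the pattern pixel in both intensity and chrominance.

    roi_rgb: (H, W, 3) float array; pattern_rgb: length-3 RGB values.
    """
    iv = roi_rgb @ _RGB_TO_IV.T                               # per pixel: I, V1, V2
    ref = np.asarray(pattern_rgb, dtype=float) @ _RGB_TO_IV.T
    d_int = np.abs(iv[..., 0] - ref[0])                       # intensity distance
    d_chroma = np.hypot(iv[..., 1] - ref[1], iv[..., 2] - ref[2])  # chrominance distance
    return (d_int <= max_intensity_dist) & (d_chroma <= max_chroma_dist)


roi = np.full((2, 2, 3), 0.4)   # gray, asphalt-like pixels
roi[0, 1] = [0.2, 0.6, 0.1]     # one darker, greenish pixel
mask = road_mask(roi, pattern_rgb=[0.42, 0.41, 0.40],
                 max_intensity_dist=0.05, max_chroma_dist=0.05)
print(mask)
```

Keeping two separate thresholds lets the intensity tolerance be relaxed under strong illumination changes without also accepting pixels of a different hue, in line with the luminance/chrominance separation argued for above.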

From the analytical point of view, the difference

between two color vectors in the HSI space can be

Figure 4. Road model.

Figure 5. Color comparison in HSI space.