17 citations

...employ the hue-saturation-intensity (HSI) color space [18]....

[...]

...In the lane segmentation approach, off-line color models are used for classifying the lane pixels from the background [6, 18, 19, 13]....

[...]

17 citations

...In [37,38] a pixel is considered achromatic if its intensity is below 10 or above 90, or if its normalized saturation is under 10, where the saturation and intensity values are normalized from 0 to 100....
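
The achromatic test quoted above can be sketched in a few lines. This is a minimal illustration, not the implementation from [37,38]: the function names are hypothetical, the saturation and intensity formulas are the common textbook HSI definitions (an assumption, since the excerpt does not state which conversion is used), and 8-bit RGB input is assumed. Only the thresholds (10, 90, 10 on a 0 to 100 scale) come from the excerpt.

```python
def rgb_to_saturation_intensity(r, g, b):
    """Return (saturation, intensity), both scaled to 0-100, from 8-bit RGB.

    Assumes the textbook HSI definitions:
      I = (R + G + B) / 3
      S = 1 - 3 * min(R, G, B) / (R + G + B)
    """
    total = r + g + b
    intensity = total / 3.0 / 255.0 * 100.0
    if total == 0:
        # Pure black: saturation is undefined, treat it as zero.
        saturation = 0.0
    else:
        saturation = (1.0 - 3.0 * min(r, g, b) / total) * 100.0
    return saturation, intensity


def is_achromatic(saturation, intensity, low_i=10.0, high_i=90.0, min_s=10.0):
    """Apply the quoted rule: too dark, too bright, or too unsaturated."""
    return intensity < low_i or intensity > high_i or saturation < min_s


# Mid-gray has zero saturation, so it is classified as achromatic.
gray = is_achromatic(*rgb_to_saturation_intensity(128, 128, 128))
# A saturated red of moderate intensity is classified as chromatic.
red = is_achromatic(*rgb_to_saturation_intensity(200, 50, 50))
```

Pixels flagged as achromatic carry an unreliable hue, which is presumably why the quoted rule separates them out before any hue-based classification.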

[...]

16 citations

15 citations

...The performance of these systems is sometimes improved by including constraints such as temporal coherence [5], [6] or road shape restrictions [1] though they cannot solve the problem completely....

[...]

...Common vision-based algorithms for road detection adopt a bottom-up approach whereby low-level pixel or region properties such as color [1], [2] or texture [3], [4] are extracted and grouped according to some similarity measure....

[...]

14 citations

...
• search for features more discriminative than the RGB colors, such as texture [6], depth in stereovision [7], color space robust to shadows [8], [9],
• and model-based approaches using the road shape geometry [10], [11], [12], [13]....

[...]

1,088 citations

...Shadows and brightness on the road are admittedly the greatest difficulty in vision-based systems operating in outdoor environments (Bertozzi and Broggi, 1998)....

[...]

...during the last decade by the research groups at the UBM (Dickmanns et al., 1994; Lutzeler and Dickmanns, 1998) and Daimler-Benz (Franke et al., 1998), or the GOLD system (Bertozzi and Broggi, 1998; Broggi et al., 1999) implemented on the ARGO autonomous vehicle at the Università di Parma....

[...]

...Likewise, automatic cooperative driving of vehicle fleets involved in the transportation of heavy loads can lead to notable industrial cost reductions....

[...]

780 citations

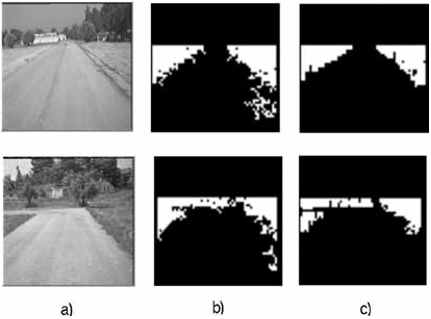

...Although the RGB color space has been successfully used in previous works dealing with road segmentation (Thorpe, 1990; Rodriguez et al., 1998), the HSI color space has exhibited superior performance in image segmentation problems, as demonstrated in Ikonomakis et al. (2000)....

[...]

...On one hand, the RGB color space has been extensively tested and used in previous road tracking applications on non-structured roads (Thorpe, 1990; Crisman and Thorpe, 1991; Rodriguez et al., 1998)....

[...]

...Among them are the SCARF and UNSCARF systems (Thorpe, 1990), designed to extract the road shape based on the study of homogeneous regions from a color image....

[...]

648 citations

...Thus, autonomous guidance of vehicles on either marked or unmarked roads demonstrated its first results in Dickmanns and Zapp (1986) and Dickmanns and Mysliwetz (1992) where nine road and vehicle parameters were recursively estimated following the 4D approach on 3D scenes....

[...]

508 citations

...On the other hand, the normalized blue component is generally predominant over the normalized red and green components, as discussed in Pomerleau (1993)....

[...]

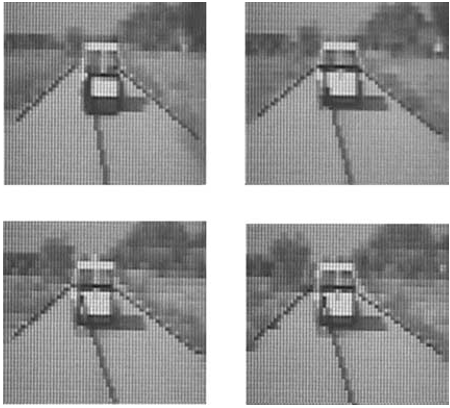

...The ALVINN (Autonomous Land Vehicle In a Neural Net) (Pomerleau, 1993) system is also able to follow unmarked roads after a proper training phase on the particular roads where the vehicle must navigate....

[...]

448 citations

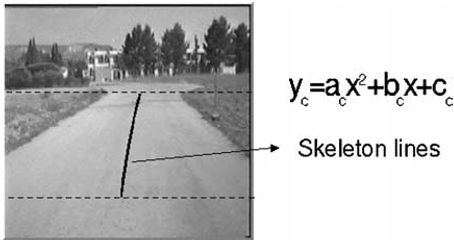

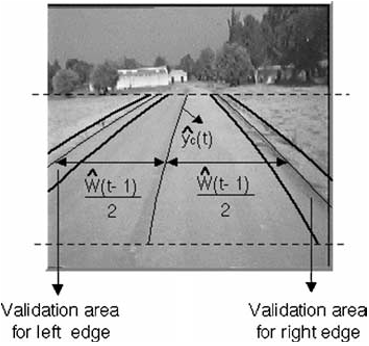

...As discussed in Bertozzi et al. (2000), due to the existence of physical and continuity constraints derived from vehicle motion and road design, the analysis of the whole image can be replaced by the analysis of a specific portion of it, namely the region of interest....

[...]

...In order to deal with this problem, some authors propose to improve the dynamic range of visual cameras (Bertozzi et al., 2000) so as to tackle strong luminance changes, when entering or exiting tunnels for instance, or to enhance the sensitivity of cameras to the blue component of colors....

[...]