SOFTWARE—PRACTICE AND EXPERIENCE, VOL. 1(1), 1–4 (JANUARY 1988)

A Comparison of Approximate String Matching Algorithms

PETTERI JOKINEN, JORMA TARHIO, AND ESKO UKKONEN

Department of Computer Science, P.O. Box 26 (Teollisuuskatu 23), FIN-00014 University of Helsinki, Finland

(email: tarhio@cs.helsinki.fi)

SUMMARY

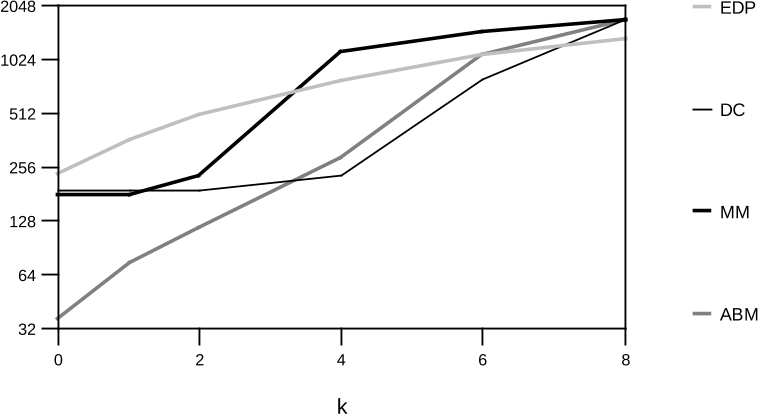

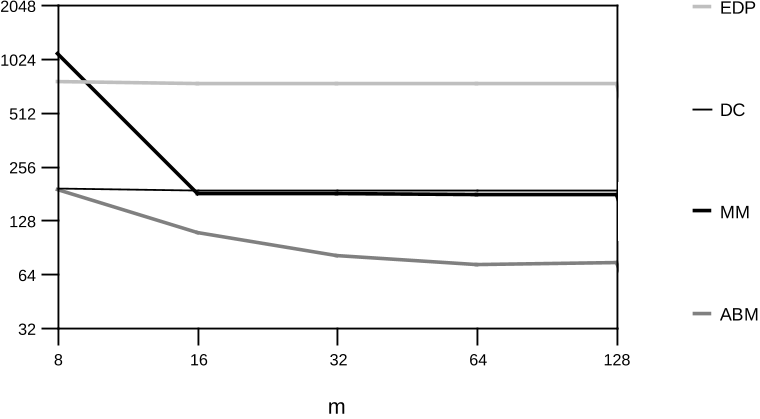

An experimental comparison of the running time of approximate string matching algorithms for the $k$ differences problem is presented. Given a pattern string, a text string, and an integer $k$, the task is to find all approximate occurrences of the pattern in the text with at most $k$ differences (insertions, deletions, changes). We consider seven algorithms based on different approaches, including dynamic programming, Boyer-Moore string matching, suffix automata, and the distribution of characters. It turns out that none of the algorithms is the best for all values of the problem parameters, and the speed differences between the methods can be considerable.

KEY WORDS: String matching; Edit distance; $k$ differences problem

INTRODUCTION

We consider the $k$ differences problem, a version of the approximate string matching problem. Given two strings, text $T = t_1 t_2 \dots t_n$ and pattern $P = p_1 p_2 \dots p_m$, and an integer $k$, the task is to find the end points of all approximate occurrences of $P$ in $T$. An approximate occurrence means a substring $P'$ of $T$ such that at most $k$ editing operations (insertions, deletions, changes) are needed to convert $P'$ to $P$.

Several algorithms have been proposed for this problem; see e.g. the survey of Galil and Giancarlo.$^{1}$ The problem can be solved in time $O(mn)$ by dynamic programming.$^{2,3}$ A very simple improvement giving an $O(kn)$ expected-time solution for random strings is described by Ukkonen.$^{3}$ Later, Landau and Vishkin,$^{4,5}$ Galil and Park,$^{6}$ and Ukkonen and Wood$^{7}$ give different algorithms that consist of preprocessing the pattern in time $O(m^2)$ (or $O(m)$) and scanning the text in worst-case time $O(kn)$. Tarhio and Ukkonen$^{8,9}$ present an algorithm which is based on the Boyer-Moore approach and works in sublinear average time. There are also several other efficient solutions,$^{10\text{–}17}$ and some$^{11\text{–}14}$ of them work in sublinear average time. Currently $O(kn)$ is the best worst-case bound known if the preprocessing time is allowed to be at most $O(m^2)$.

There are also fast algorithms$^{9,17\text{–}20}$ for the $k$ mismatches problem, which is a restricted form of the $k$ differences problem in which a change is the only editing operation allowed.

With such a multitude of different solutions to the same problem, it is difficult to select a proper method for each particular approximate string matching task. The theoretical analyses given in the literature are helpful, but it is important that the theory be complemented by sufficiently extensive experimental comparisons.

CCC 0038–0644/88/010001–04 © 1988 by John Wiley & Sons, Ltd. Received 1 March 1988; revised 25 March 1988


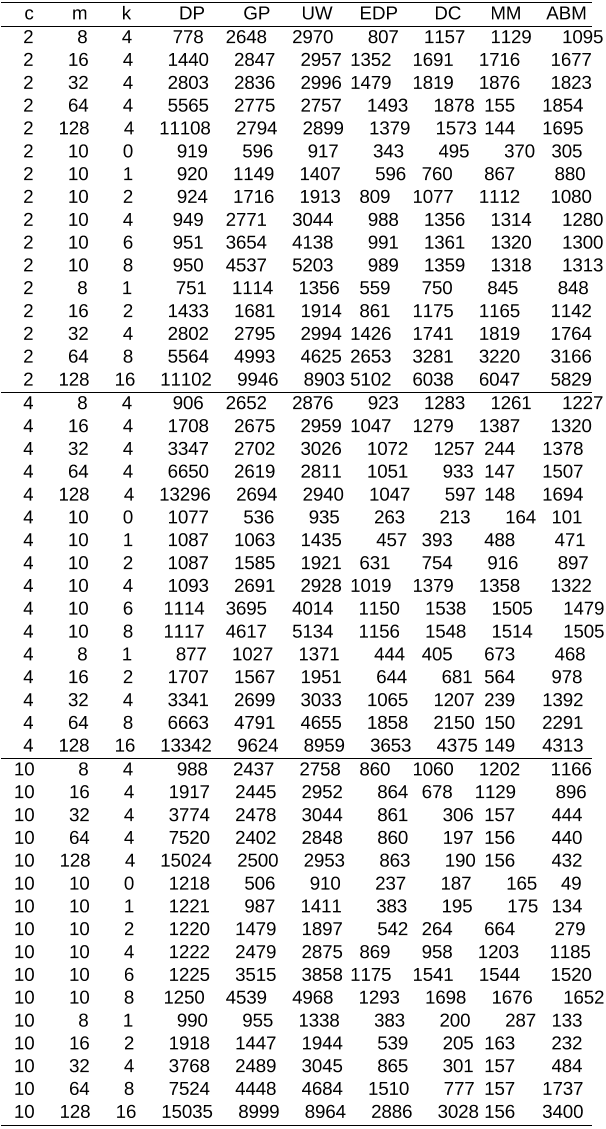

We present an experimental comparison of the running times of seven algorithms for the $k$ differences problem. The tested algorithms are: two dynamic programming methods,$^{2,3}$ the Galil-Park algorithm,$^{6}$ the Ukkonen-Wood algorithm,$^{7}$ an algorithm counting the distribution of characters,$^{18}$ the approximate Boyer-Moore algorithm,$^{9}$ and an algorithm based on maximal matches between the pattern and the text.$^{10}$ (The last algorithm$^{10}$ is very similar to the linear algorithm of Chang and Lawler,$^{11}$ although the two were invented independently.) We give brief descriptions of the algorithms as well as Ada code for their central parts. As our emphasis is on the experiments, the reader is advised to consult the original references for more detailed descriptions of the methods.

The paper is organized as follows. First, the framework based on edit distance is introduced. Then the seven algorithms are presented. Finally, the comparison of the algorithms is presented and its results are summarized.

THE K DIFFERENCES PROBLEM

We use the concept of edit distance$^{21,22}$ to measure the goodness of approximate occurrences of a pattern. The edit distance between two strings $A$ and $B$ in alphabet $\Sigma$ can be defined as the minimum number of editing steps needed to convert $A$ to $B$. Each editing step is a rewriting step of the form $a \rightarrow \varepsilon$ (a deletion), $\varepsilon \rightarrow b$ (an insertion), or $a \rightarrow b$ (a change), where $a$ and $b$ are in $\Sigma$ and $\varepsilon$ is the empty string.
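As an illustration of this definition, the following Python sketch computes the edit distance with the standard dynamic program over prefixes of $A$ and $B$, where every deletion, insertion, and change costs 1. The function name `edit_distance` is our own; the code is not taken from the paper.

```python
def edit_distance(a: str, b: str) -> int:
    """Minimum number of deletions, insertions and changes turning a into b."""
    # prev[j] holds the distance between the current prefix of a and b[:j].
    prev = list(range(len(b) + 1))  # converting "" to b[:j] needs j insertions
    for i, ca in enumerate(a, start=1):
        cur = [i]  # converting a[:i] to "" needs i deletions
        for j, cb in enumerate(b, start=1):
            cur.append(min(
                prev[j] + 1,               # deletion:  ca -> empty
                cur[j - 1] + 1,            # insertion: empty -> cb
                prev[j - 1] + (ca != cb),  # change ca -> cb (free if equal)
            ))
        prev = cur
    return prev[-1]


print(edit_distance("kitten", "sitting"))  # 3
```

Only two rows are kept, so the space is proportional to the length of $B$.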

The $k$ differences problem is, given pattern $P = p_1 p_2 \dots p_m$ and text $T = t_1 t_2 \dots t_n$ in alphabet $\Sigma$ of size , and an integer $k$, to find all $j$ such that the edit distance (i.e., the number of differences) between $P$ and some substring of $T$ ending at $t_j$ is at most $k$. The basic solution of the problem is the following dynamic programming method:$^{2,3}$ Let $D$ be an $(m+1) \times (n+1)$ table such that $D(i,j)$ is the minimum edit distance between $p_1 p_2 \dots p_i$ and any substring of $T$ ending at $t_j$. Then

$$
\begin{aligned}
D(0,j) &= 0, \qquad 0 \le j \le n; \\
D(i,0) &= i, \qquad 0 \le i \le m; \\
D(i,j) &= \min \begin{cases}
D(i-1,j) + 1 \\
D(i-1,j-1) + (\text{if } p_i = t_j \text{ then } 0 \text{ else } 1) \\
D(i,j-1) + 1
\end{cases}
\end{aligned}
$$

Table $D$ can be evaluated column by column in time $O(mn)$. Whenever $D(m,j)$ is found to be at most $k$ for some $j$, there is an approximate occurrence of $P$ ending at $t_j$ with edit distance $D(m,j) \le k$. Hence $j$ is a solution to the $k$ differences problem.
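The recurrence can be transcribed directly. The Python sketch below (our own transcription; the name `k_differences_dp` is hypothetical) fills the whole table with the paper's 1-based indices for $D$, while the strings themselves are indexed from 0. It then lists the end positions $j$ with $D(m,j) \le k$, and for the example of Fig. 1 it reproduces the values shown there.

```python
def k_differences_dp(text: str, pattern: str, k: int):
    """Fill the whole (m+1) x (n+1) table D and report 1-based ends j with D(m, j) <= k."""
    m, n = len(pattern), len(text)
    # Row 0 is all zeros (an occurrence may start anywhere); column 0 is D(i, 0) = i.
    D = [[0] * (n + 1) for _ in range(m + 1)]
    for i in range(1, m + 1):
        D[i][0] = i
    for j in range(1, n + 1):              # column by column
        for i in range(1, m + 1):
            D[i][j] = min(
                D[i - 1][j] + 1,
                D[i - 1][j - 1] + (0 if pattern[i - 1] == text[j - 1] else 1),
                D[i][j - 1] + 1,
            )
    ends = [j for j in range(1, n + 1) if D[m][j] <= k]
    return D, ends


table, ends = k_differences_dp("bcbacbbb", "cacd", 2)
print(ends)      # [5, 6]
print(table[4])  # [4, 4, 3, 3, 3, 2, 2, 3, 3]
```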

Fig. 1 shows table $D$ for $T$ = bcbacbbb and $P$ = cacd. Approximate occurrences of the pattern with at most 2 differences end at positions 5 and 6 of the text.

All the algorithms presented work within this model, but they use different approaches to restricting the number of entries of table $D$ that must be evaluated. Some of the algorithms work in two phases: scanning and checking. The scanning phase searches for potential occurrences of the pattern, and the checking phase verifies whether the suggested occurrences are genuine. The checking is always done using dynamic programming.
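To make the two-phase structure concrete, here is a hedged Python sketch. The scanning filter is an assumption chosen for simplicity and is not claimed to be the filter of any tested algorithm: an occurrence ending at $t_j$ with at most $k$ differences forces the last $m$ text characters to share at least $m - k$ characters (counted with multiplicity) with $P$. The checking phase runs the dynamic programming only over the last $m + k$ text characters, which suffices because such an occurrence is at most $m + k$ characters long. The names `check` and `scan_and_check` are hypothetical.

```python
from collections import Counter


def check(text: str, pattern: str, k: int, j: int) -> bool:
    """Checking phase: True iff an occurrence with <= k differences ends at t_j (1-based j)."""
    m = len(pattern)
    col = list(range(m + 1))                 # D(i, 0) = i at the left edge of the window
    for c in text[max(0, j - m - k):j]:      # only t_{j-m-k+1} .. t_j is needed
        diag, col[0] = col[0], 0             # diag = D(0, previous column); D(0, .) = 0
        for i in range(1, m + 1):
            cost = 0 if pattern[i - 1] == c else 1
            # diag holds D(i-1, previous column); col[i - 1] is already the new column.
            diag, col[i] = col[i], min(col[i] + 1, col[i - 1] + 1, diag + cost)
    return col[m] <= k


def scan_and_check(text: str, pattern: str, k: int) -> list[int]:
    """Scanning phase with an assumed character-count filter, then DP checking."""
    m = len(pattern)
    need = Counter(pattern)
    matches = []
    for j in range(1, len(text) + 1):
        window = Counter(text[max(0, j - m):j])   # last m text characters
        shared = sum(min(cnt, window[ch]) for ch, cnt in need.items())
        if shared >= m - k and check(text, pattern, k, j):
            matches.append(j)
    return matches


print(scan_and_check("bcbacbbb", "cacd", 2))  # [5, 6]
```

Because the filter is only a necessary condition, the result equals that of full dynamic programming; a practical scanner would update the window counts incrementally rather than rebuilding them at every position.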

The comparison was carried out in 1991. Some newer methods are likely to be faster than the tested algorithms for certain values of the problem parameters.


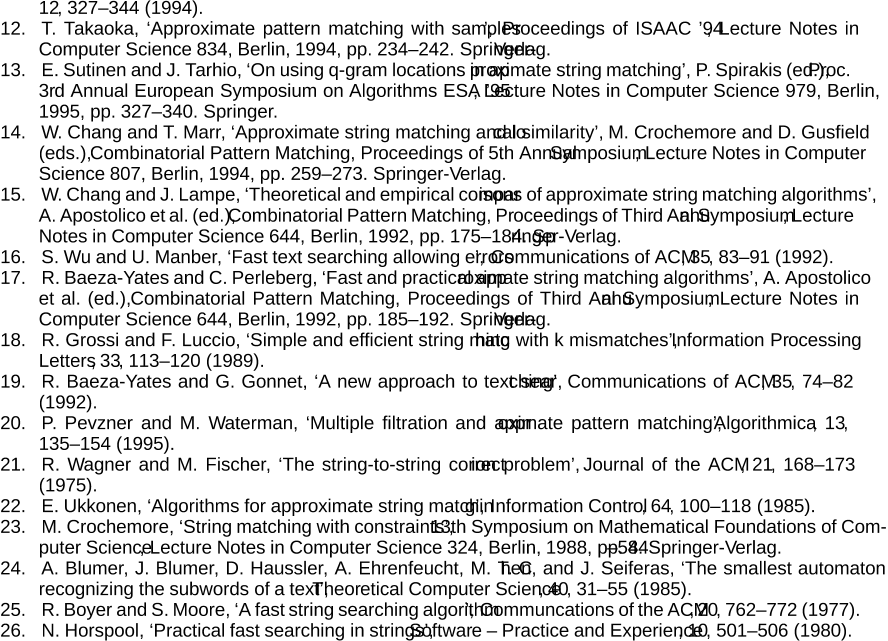

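| $i$ | $p_i$ \ $j$ | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|---|---|---|
| | $t_j$ | | b | c | b | a | c | b | b | b |
| 0 | | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 1 | c | 1 | 1 | 0 | 1 | 1 | 0 | 1 | 1 | 1 |
| 2 | a | 2 | 2 | 1 | 1 | 1 | 1 | 1 | 2 | 2 |
| 3 | c | 3 | 3 | 2 | 2 | 2 | 1 | 2 | 2 | 3 |
| 4 | d | 4 | 4 | 3 | 3 | 3 | 2 | 2 | 3 | 3 |

Figure 1. Table $D$.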

ALGORITHMS

Dynamic programming

We consider two different versions of dynamic programming for the $k$ differences problem.

In the previous section we introduced the trivial solution, which computes all entries of table $D$. The code of this algorithm is straightforward,$^{2,21}$ and we do not present it here. In the following, we refer to this solution as Algorithm DP.

Diagonal $h$ of $D$, for $h = -m, \dots, n$, consists of all entries $D(i,j)$ such that $j - i = h$. Considering computation along diagonals gives a simple way to limit unnecessary computation. It is easy to show that the entries on every diagonal $h$ are monotonically non-decreasing.$^{22}$ Therefore the computation along a diagonal can be stopped when the threshold value $k+1$ is reached, because the rest of the entries on that diagonal will be greater than $k$. This idea leads to Algorithm EDP (Enhanced Dynamic Programming), which works in average time $O(kn)$.$^{3}$
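A worked example read off the table of Fig. 1 ($P$ = cacd, $T$ = bcbacbbb) shows both the monotonicity along diagonals and the resulting cutoff:

$$
\begin{aligned}
h = 1:&\quad D(0,1)=0 \le D(1,2)=0 \le D(2,3)=1 \le D(3,4)=2 \le D(4,5)=2,\\
h = 4:&\quad D(0,4)=0 \le D(1,5)=0 \le D(2,6)=1 \le D(3,7)=2 \le D(4,8)=3.
\end{aligned}
$$

With $k = 1$, the threshold $k + 1 = 2$ is reached on diagonal 1 at $D(3,4)$, so $D(4,5)$ need not be evaluated: it is at least 2 and cannot yield an occurrence.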

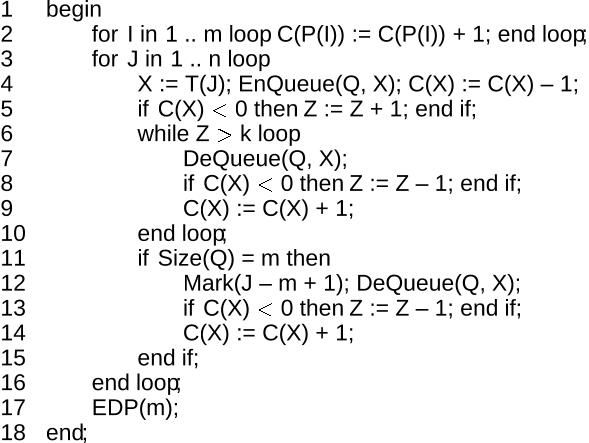

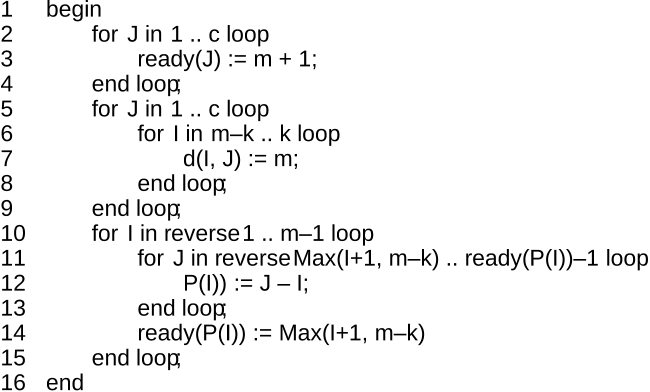

Algorithm EDP is shown in Fig. 2.

In Algorithm EDP, the text and the pattern are stored in tables $T$ and $P$. Table $D$ is evaluated one column at a time. The entries of the current column are stored in table $H$, and the value of $D(i-1, j-1)$ is temporarily stored in variable $C$. A work space of $O(m)$ is enough, because every $D(i,j)$ depends only on the entries $D(i-1,j)$, $D(i,j-1)$, and $D(i-1,j-1)$. Variable Top tells the row where the topmost diagonal still under the threshold value $k+1$ intersects the current column. On line 12 an approximate occurrence is reported when row $m$ is reached.
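The cutoff of Fig. 2 can be sketched in Python as follows. This is our own transcription, not the authors' code: `top` plays the role of variable Top, `h` of table H, and `c` of variable C. As an assumption of the sketch, the initial value of `top` is capped at $m$ so that it also works when $k \ge m$.

```python
def k_differences_edp(text: str, pattern: str, k: int) -> list[int]:
    """Cutoff DP in the manner of Fig. 2: 1-based end positions j with D(m, j) <= k."""
    m = len(pattern)
    h = list(range(m + 1))        # current column of D, initially D(i, 0) = i
    top = min(k + 1, m)           # rows 1 .. top are evaluated in the next column
    matches = []
    for j, tj in enumerate(text, start=1):
        c = 0                     # D(0, j - 1) = 0
        for i in range(1, top + 1):
            if pattern[i - 1] == tj:
                e = c             # D(i - 1, j - 1)
            else:
                e = min(h[i - 1], h[i], c) + 1
            c, h[i] = h[i], e     # old D(i, j - 1) is the diagonal value for row i + 1
        # Rows below top keep stale values, but all of them exceed k.
        while h[top] > k:         # h[0] == 0 <= k, so the loop stops at top >= 0
            top -= 1
        if top == m:
            matches.append(j)     # as on line 12 of Fig. 2
        else:
            top += 1
    return matches


print(k_differences_edp("bcbacbbb", "cacd", 2))  # [5, 6]
```

Entries above the cutoff are not kept exact; the sketch only guarantees that an entry is computed exactly whenever its true value is at most $k$, which is all that reporting requires.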

Galil-Park

The $O(kn)$ algorithm presented by Galil and Park$^{6}$ is based on the diagonalwise monotonicity of the entries of table $D$. It also uses so-called reference triples that represent matching substrings of the pattern and the text; this approach was already used by Landau and Vishkin.$^{4}$ The algorithm evaluates a modified form of table $D$. The core of the algorithm is shown in Fig. 3 as Algorithm GP.

In the preprocessing of pattern $P$ (procedure call Prefixes(P) on line 2), an upper triangular table $\mathit{Prefix}(i,j)$, $1 \le i < j \le m$, is computed, where $\mathit{Prefix}(i,j)$ is the length of the longest common prefix of $p_i \dots p_m$ and $p_j \dots p_m$.
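One simple way to fill this table in $O(m^2)$ time is to work backwards from the end of the pattern, using $\mathit{Prefix}(i,j) = \mathit{Prefix}(i+1,j+1) + 1$ if $p_i = p_j$ and $0$ otherwise. The paper does not say how Prefixes is implemented, so the Python sketch below (with the hypothetical name `prefixes` and a dictionary in place of a triangular array) is only an assumption.

```python
def prefixes(pattern: str) -> dict[tuple[int, int], int]:
    """Prefix(i, j), 1 <= i < j <= m: length of the longest common prefix
    of p_i..p_m and p_j..p_m (1-based indices, as in the text)."""
    m = len(pattern)
    prefix: dict[tuple[int, int], int] = {}
    for i in range(m, 0, -1):              # fill from the end of the pattern
        for j in range(m, i, -1):
            if pattern[i - 1] == pattern[j - 1]:
                # (i+1, j+1) is missing when j = m, i.e. the suffix ends: count 0
                prefix[(i, j)] = prefix.get((i + 1, j + 1), 0) + 1
            else:
                prefix[(i, j)] = 0
    return prefix


print(prefixes("abab")[(1, 3)])  # 2
```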

A reference triple $(u, v, w)$ consists of a start position $u$, an end position $v$, and a diagonal $w$ such that the substring $t_u \dots t_v$ matches the substring $p_{u-w} \dots p_{v-w}$ and $t_{v+1} \neq p_{v+1-w}$. Algorithm GP manipulates several triples; the components of the $r$th triple are denoted $U(r)$, $V(r)$, and $W(r)$.


```ada
 1 begin
 2    Top := Min(k + 1, m);    -- rows 1 .. Top are evaluated; capped at m
 3    for I in 0 .. m loop H(I) := I; end loop;    -- column 0: D(i, 0) = i
 4    for J in 1 .. n loop
 5       C := 0;              -- D(0, j - 1) = 0
 6       for I in 1 .. Top loop
 7          if P(I) = T(J) then E := C;
 8          else E := Min((H(I - 1), H(I), C)) + 1; end if;
 9          C := H(I); H(I) := E;    -- C := old D(i, j - 1) for the next row
10       end loop;
11       while H(Top) > k loop Top := Top - 1; end loop;
12       if Top = m then Report_Match(J);
13       else Top := Top + 1; end if;
14    end loop;
15 end;
```

Figure 2. Algorithm EDP.

For diagonal $d$ and integer $e$, let $C(e,d)$ be the largest column $j$ such that $D(j-d, j) = e$. In other words, the entries of value $e$ on diagonal $d$ of $D$ end at column $C(e,d)$. Now

$$
C(e,d) = \mathit{Col} + \mathit{Jump}(\mathit{Col} + 1 - d,\ \mathit{Col} + 1)
$$

holds, where

$$
\mathit{Col} = \max\{\, C(e-1,d-1) + 1,\ C(e-1,d) + 1,\ C(e-1,d+1) \,\}
$$

and $\mathit{Jump}(i,j)$ is the length of the longest common prefix of $p_i \dots p_m$ and $t_j \dots t_n$ for all $i$, $j$.
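The recurrence can be evaluated diagonal by diagonal for $e = 0, 1, \dots, k$. The Python sketch below is our own illustration and not Algorithm GP: it computes Jump by direct character comparison (so its worst case is not $O(kn)$). For each diagonal it stores the largest column whose entry is at most $e$, which coincides with $C(e,d)$ whenever diagonal $d$ contains an entry of value $e$. It reports $j$ whenever row $m$ is reached. The "at most $e$" convention, the boundary candidates, and the clipping at the table border are assumptions of the sketch; the function name is hypothetical.

```python
def k_differences_diagonal(text: str, pattern: str, k: int) -> list[int]:
    """Diagonal-transition sketch: 1-based end positions j with D(m, j) <= k.

    far[d] is the largest column on diagonal d = j - i whose entry is <= e.
    """
    m, n = len(pattern), len(text)

    def last_col(d: int) -> int:
        return min(m + d, n)               # last cell of diagonal d inside the table

    def slide(col: int, d: int) -> int:
        # col + Jump(col + 1 - d, col + 1), stopping at the border of the table
        while col < last_col(d) and pattern[col - d] == text[col]:
            col += 1
        return col

    far: dict[int, int] = {}               # values for e - 1 (empty before e = 0)
    for e in range(k + 1):
        new: dict[int, int] = {}
        for d in range(-m, n + 1):
            cands = []
            if d >= 0:
                cands.append(d)            # D(0, d) = 0
            elif -d <= e:
                cands.append(0)            # D(-d, 0) = -d
            if d in far:
                cands.append(far[d] + 1)           # next cell on d (change)
            if d - 1 in far:
                cands.append(far[d - 1] + 1)       # insertion: step right from d - 1
            if d + 1 in far:
                cands.append(far[d + 1])           # deletion: step down from d + 1
            if cands:
                # Clip at the border; adjacent entries differ by at most 1,
                # so the border cell is then also <= e.
                new[d] = slide(min(max(cands), last_col(d)), d)
        far = new
    return [m + d for d, col in far.items() if 1 <= m + d <= n and col == m + d]


print(k_differences_diagonal("bcbacbbb", "cacd", 2))  # [5, 6]
```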

Let C-diagonal $g$ consist of the entries $C(e,d)$ such that $e + d = g$. For every C-diagonal, Algorithm GP performs an iteration (lines 7–38) that evaluates it from the two previous C-diagonals. The evaluation of each entry starts with computing the value Col (line 11). The rest of the loop (lines 12–23) effectively finds the value $\mathit{Jump}(\mathit{Col} + 1 - d, \mathit{Col} + 1)$ using the reference triples and table Prefix. A new C-value is stored on line 24.
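The key step of lines 14–17 can be illustrated in isolation. If text position $j$ lies inside a reference triple $(u, v, w)$, then $t_j \dots t_v$ is a copy of $p_{j-w} \dots p_{v-w}$. Comparing $F = v - j + 1$ with $G = \mathit{Prefix}(i, j-w)$ therefore settles $\mathit{Jump}(i,j)$ without reading the text unless $F = G$. In the Python sketch below, the helper names are hypothetical. `pattern_lcp` stands in for table Prefix, and when $F = G$ the sketch simply falls back to character comparison instead of consulting further triples as Fig. 3 does.

```python
def naive_jump(pattern: str, text: str, i: int, j: int) -> int:
    """Jump(i, j): longest common prefix of p_i..p_m and t_j..t_n (1-based)."""
    length = 0
    while (i + length <= len(pattern) and j + length <= len(text)
           and pattern[i + length - 1] == text[j + length - 1]):
        length += 1
    return length


def pattern_lcp(pattern: str, a: int, b: int) -> int:
    """Stand-in for table Prefix: longest common prefix of p_a..p_m and p_b..p_m."""
    length, m = 0, len(pattern)
    while (a + length <= m and b + length <= m
           and pattern[a + length - 1] == pattern[b + length - 1]):
        length += 1
    return length


def jump_with_triple(pattern: str, text: str, triple: tuple[int, int, int],
                     i: int, j: int) -> int:
    """Jump(i, j) for a text position j inside reference triple (u, v, w),
    where t_u..t_v = p_{u-w}..p_{v-w} and t_{v+1} != p_{v+1-w}."""
    u, v, w = triple
    assert u <= j <= v, "j must lie inside the triple"
    f = v - j + 1                          # t_j..t_v equals p_{j-w}..p_{v-w}
    g = pattern_lcp(pattern, i, j - w)     # compare p_i.. with that copy of the text
    if f != g:
        return min(f, g)                   # the first mismatch is known without reading T
    # f == g: the triple says nothing beyond t_v, so continue by comparison
    return f + naive_jump(pattern, text, i + f, j + f)


print(jump_with_triple("abab", "ababb", (1, 4, 0), 1, 3))  # 2
```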

The algorithm maintains an ordered sequence of reference triples, which is updated on lines 28–35. Procedure Within($d$), called on line 14, tests whether text position $d$ lies within the interval of one of the first $k$ reference triples in the sequence. If it does, variable $R$ is set to the index of the reference triple whose interval contains text position $d$. A match is reported on line 26.

Instead of the whole table $C$ defined above, table C of the algorithm contains only three successive C-diagonals. This buffer of three diagonals is managed with the variables B1, B2, and B3.
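The buffer works as a circular rotation of three row indices. Our reading of lines 6, 11, 25, and 37 of Fig. 3, which is an interpretation rather than a statement from the paper, is that B1 holds the current C-diagonal J, B2 holds diagonal J − 1, and B3 holds diagonal J − 2. The Python snippet below (hypothetical names) checks that the rotation on line 37 preserves this assignment while the oldest row is reused for the next diagonal.

```python
def rotate(b1: int, b2: int, b3: int) -> tuple[int, int, int]:
    """Line 37 of Fig. 3: B := B1; B1 := B3; B3 := B2; B2 := B."""
    return b3, b1, b2          # new (B1, B2, B3)


b1, b2, b3 = 0, 1, 2           # line 6
holds = {b1: 0, b2: -1, b3: -2}  # C-diagonal stored in each buffer row at J = 0
for J in range(1, 6):
    b1, b2, b3 = rotate(b1, b2, b3)
    holds[b1] = J              # the row that held diagonal J - 3 is overwritten
    assert holds[b2] == J - 1 and holds[b3] == J - 2
```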

Ukkonen-Wood

Another $O(kn)$ algorithm, given by Ukkonen and Wood,$^{7}$ has an overall structure identical to that of the algorithm of Galil and Park. However, no reference triples are used. Instead, to find the necessary values $\mathit{Jump}(i,j)$, the text is scanned with a modified suffix automaton for


```ada
 1 begin
 2    Prefixes(P);                          -- preprocessing: table Prefix
 3    for I in -1 .. k loop
 4       C(I, 1) := -Infinity; C(I, 2) := -1;
 5    end loop;
 6    B1 := 0; B2 := 1; B3 := 2;            -- rows of C for C-diagonals J, J - 1, J - 2
 7    for J in 0 .. n - m + k loop          -- one iteration per C-diagonal J
 8       C(-1, B1) := J; R := 0;
 9       for E in 0 .. k loop
10          H := J - E;                     -- H is the diagonal d of entry C(E, d)
11          Col := Max((C(E-1, B2) + 1, C(E-1, B3) + 1, C(E-1, B1)));
12          Se := Col + 1; Found := false;
13          while not Found loop            -- advance Col by Jump(Col+1-H, Col+1)
14             if Within(Col + 1) then      -- position inside triple R (sets R)
15                F := V(R) - Col; G := Prefix(Col+1-H, Col+1-W(R));
16                if F = G then Col := Col + F;
17                else Col := Col + Min(F, G); Found := true; end if;
18             else                         -- no triple: compare one character
19                if Col - H < m and then P(Col+1-H) = T(Col+1) then
20                   Col := Col + 1;
21                else Found := true; end if;
22             end if;
23          end loop;
24          C(E, B1) := Min(Col, m+H);      -- store the new C-value
25          if C(E, B1) = H + m and then C(E-1, B2) < m + H then
26             Report_Match(H + m);         -- D(m, H + m) = E
27          end if;
28          if V(E) >= C(E, B1) then        -- update the reference triples
29             if E = 0 then U(E) := J + 1;
30             else U(E) := Max(U(E), V(E-1) + 1); end if;
31          else
32             V(E) := C(E, B1); W(E) := H;
33             if E = 0 then U(E) := J + 1;
34             else U(E) := Max(Se, V(E-1) + 1); end if;
35          end if;
36       end loop;
37       B := B1; B1 := B3; B3 := B2; B2 := B;    -- rotate the three buffers
38    end loop;
39 end;
```

Figure 3. Algorithm GP.