2 Ricardo Carvalho Amorim, João Aguiar Castro, João Rocha da Silva, Cristina Ribeiro

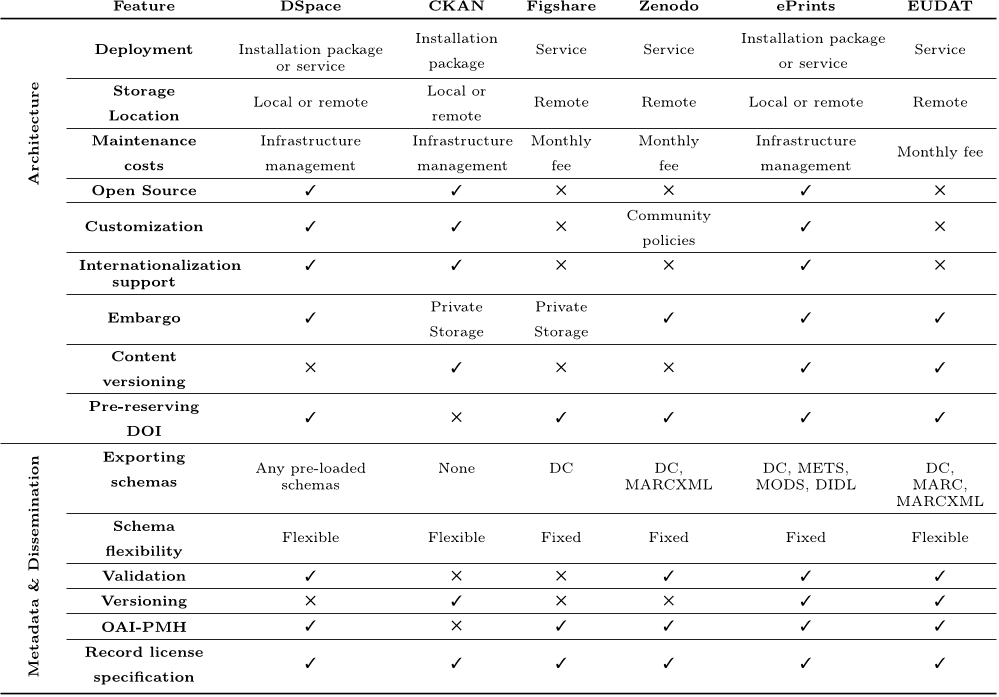

stitutions. Then, focus moves to their fitness to handle research data, namely their domain-specific metadata requirements and preservation guidelines. Implementation costs, architecture, interoperability, content dissemination capabilities, implemented search features and community acceptance are also taken into consideration. When faced with the many alternatives currently available, it can be difficult for institutions to choose a suitable platform to meet their specific requirements. Several comparative studies between existing solutions have already been carried out to evaluate different aspects of each implementation, confirming that this is an issue of increasing importance [16,3,6]. This evaluation considers aspects relevant to the authors' ongoing work, focused on finding solutions for research data management, and takes into consideration their past experience in this field [33]. This experience has provided insights into specific, local needs that can influence the adoption of a platform and therefore the success of its deployment.

It is clear that the effort in creating metadata for research datasets is very different from what is required for research publications. While publications can be accurately described by librarians, good quality metadata for a dataset requires the contribution of the researchers involved in its production. Their knowledge of the domain is required to adequately document the dataset production context so that others can reuse it. Involving the researchers in the deposit stage is a challenge, as the investment in metadata production for data publication and sharing is typically higher than that required for the addition of notes that are only intended for their peers in a research group [7].

Moreover, the authors look at staging platforms, which are especially tailored to capture metadata records as they are produced, offering researchers an integrated environment for their management along with the data. As this is an area with several proposals in active development, two of them will be considered: EUDAT, which includes tools for data staging, and Dendro, a platform proposed for engaging researchers in data description that takes into account the need for data and metadata organisation.

Staging platforms are capable of exporting the enclosed datasets and metadata records to research data repositories. The platforms selected for the analysis that follows are considered candidate research data management repositories for datasets in the long tail of science, as they are designed with sharing and dissemination in mind. Together, staging platforms and research data repositories provide the tools to handle the stages of the research workflow. Long-term preservation imposes further requirements, and other tools may be necessary to satisfy them. However, as datasets become organised and described, their value and their potential for reuse will prompt further preservation actions.
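The hand-off from a staging platform to a repository can be illustrated with a small sketch. It assumes a hypothetical export format, not the actual interface of EUDAT, Dendro or any repository discussed here: the metadata record captured during staging is bundled with a manifest listing every data file, its size and a SHA-256 checksum. The function names `sha256_of` and `build_deposit_package` are illustrative only.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Compute the SHA-256 digest of a file, reading it in 64 KiB chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_deposit_package(data_dir: Path, metadata: dict) -> dict:
    """Bundle a staged dataset's files and its metadata record into one manifest.

    Hypothetical export format (an assumption, not a real platform API): the
    metadata record travels together with size and checksum for each data file.
    """
    files = sorted(p for p in data_dir.rglob("*") if p.is_file())
    return {
        "metadata": metadata,
        "files": [
            {
                "path": p.relative_to(data_dir).as_posix(),
                "size": p.stat().st_size,
                "sha256": sha256_of(p),
            }
            for p in files
        ],
    }


if __name__ == "__main__":
    # Stage a tiny example dataset in a temporary directory and export it.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "readings.csv").write_text("t,value\n0,1.5\n1,2.0\n")
        package = build_deposit_package(root, {"title": "Sensor readings"})
        print(json.dumps(package, indent=2))
```

Recording a checksum per file lets the receiving side detect corrupted or incomplete transfers; the actual ingest format would depend on the target repository.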

2 From publications to data management

The growth in the number of research publications, combined with a strong drive towards open access policies [8,10], continues to foster the development of open-source platforms for managing bibliographic records. While data citation is not yet a widespread practice, the importance of citable datasets is growing. Until a culture of data citation is widely adopted, however, many research groups are opting to publish so-called "data papers", which are more easily citable than datasets. Data papers serve not only as a reference to datasets but also document their production context [9].

As data management becomes an increasingly important part of the research workflow [24], solutions designed for managing research data are being actively developed by both open-source communities and data management-related companies. As with institutional repositories, many of their design and development challenges have to do with description and long-term preservation of research data. There are, however, at least two fundamental differences between publications and datasets: the latter are often purely numeric, making it very hard to derive any type of metadata by simply looking at their contents; also, datasets require detailed, domain-specific descriptions to be correctly interpreted. Metadata requirements can also vary greatly from domain to domain, requiring repository data models to be flexible enough to adequately represent these records [35]. The effort invested in adequate dataset description is worthwhile, since it has been shown that research publications that provide access to their base data consistently yield higher citation rates than those that do not [27].
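The contrast between what can be derived from a numeric dataset by inspection and what only its producers can describe can be made concrete with a small sketch. It assumes a hypothetical record layout (`DatasetRecord`: a fixed core, automatically derived structural fields, and an open-ended dictionary of domain-specific descriptors) and a hypothetical list of descriptors expected by a community; it is not the data model of any platform discussed here.

```python
import csv
import io
from dataclasses import dataclass, field


def derive_structural_metadata(csv_text: str) -> dict:
    """Extract what can be inferred from a purely numeric table by inspection.

    Only shape and value ranges are recoverable; nothing here says what the
    columns measure, in which units, or under which conditions.
    """
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, body = rows[0], rows[1:]
    columns = {}
    for index, name in enumerate(header):
        values = [float(row[index]) for row in body]
        columns[name] = {"min": min(values), "max": max(values)}
    return {"row_count": len(body), "column_count": len(header), "columns": columns}


@dataclass
class DatasetRecord:
    """Hypothetical record: a fixed core plus open-ended, domain-specific fields."""
    title: str
    creator: str
    structural: dict
    # Domain descriptors vary per discipline, so they are kept as free
    # key-value pairs instead of a fixed schema; researchers supply them.
    domain: dict = field(default_factory=dict)

    def missing(self, required: list) -> list:
        """List the descriptors a (hypothetical) community profile expects but lacks."""
        return [key for key in required if key not in self.domain]


if __name__ == "__main__":
    data = "time,reading\n0,1.5\n1,2.0\n2,1.8\n"
    record = DatasetRecord("Sensor readings", "Research group", derive_structural_metadata(data))
    record.domain["reading_unit"] = "degC"
    print(record.missing(["reading_unit", "instrument", "sampling_interval"]))
    # → ['instrument', 'sampling_interval']
```

Keeping domain descriptors as free key-value pairs is one way of giving a data model the flexibility that varying domain requirements call for; the trade-off is that consistency must then be checked against per-domain profiles rather than a single schema.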

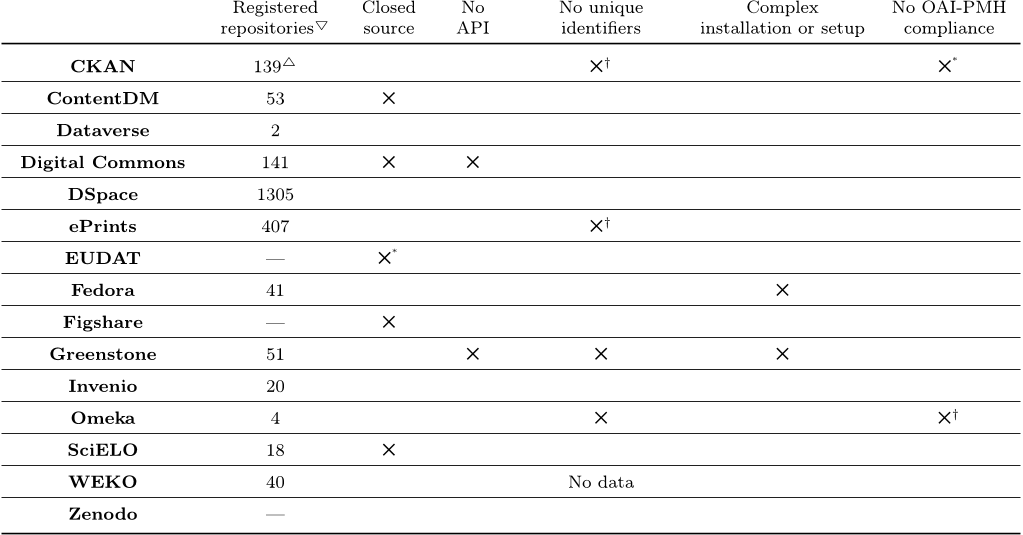

As these repositories deal with a reasonably small set of managed formats for deposit, several reference models, such as the OAIS (Open Archival Information System) [12], are currently in use to ensure preservation and to promote metadata interchange and dissemination. Besides capturing the available metadata during the ingestion process, data repositories often distribute this information to other instances, improving the publications' visibility through specialised research search engines or repository indexers. While the former focus on querying each repository for exposed contents, the latter help users find data repositories that match their needs, such as repositories from a specific domain or storing data from a specific community. Governmental