
49 citations

...Although the Part-All scheme (which is followed by many related works [12], [8], [6], [7], [10], [20]) is able to substantially reduce the WCD of the critical PEs compared to no-Part (Figures 3a and 3b), it also significantly reduces the bandwidth delivered to the noncritical PEs (Figure 4)....

[...]

...As discussed in [12], [20], [9], this bound can then be used either to derive the worst-case execution time of a single real-time task or to perform response-time analysis for a multi-tasking application....

[...]

...[12], [8], [6], [7]) considers DRAM bank partitioning, where banks are partitioned among PEs to reduce bank conflicts and hence improve WCD....

[...]

...Similar to previous work [9], [10], we do not account for the delay from the refresh process because it can often be neglected compared to other delays [9]; otherwise, it can be added as an extra delay term to the execution time of a task using existing methods [11], [12]....

[...]


12 citations

...However, if that is not the case, its ability to effectively exploit the SDRAM is compromised [11]....

[...]

...Such a strategy has been discussed in [11] and is out of the scope of this article....

[...]

...Supporting different granularities, which would be necessary for instance if a DMA engine competes for the SDRAM with cache-relying processors, is out of the scope of this article (as it constitutes an orthogonal challenge already investigated in [11])....

[...]

...We highlight that the same assumption has been made in [11], [12], which also employed a trace-based approach....

[...]

...To address the aforementioned scenario, researchers proposed using a combination of bank privatization and an open-row policy [6], [7], [11]....

[...]


4,039 citations

...For each benchmark, we obtain the memory trace by running the benchmark on the gem5 [3] architecture simulator; we employed a simple in-order timing model using the x86 instruction set architecture as our objective is the evaluation of the memory system rather than detailed core simulation....

[...]

1,864 citations

...However, for simulation results, the other requestors are running the lbm benchmark from the SPEC CPU2006 suite [15], which is highly bandwidth intensive....

[...]

249 citations

...(3) Based on the latency bounds for individual requests, we show how to compute the overall latency suffered by a task running on a fully timing compositional core [34]....

[...]

...Let us assume that the requestor executing the task under analysis is a fully timing compositional core as described in [34] (example: ARM7)....

[...]

244 citations

...Their work is part of a larger effort to develop PTARM [24], a precision-timed (PRET [8, 5]) architecture....

[...]

239 citations

First of all, the authors plan to synthesize and test the proposed controller on an FPGA.

Since AMC was originally described for a slower DDR2 device, the authors recomputed the length of AMC static command groups based on the timing parameters of the employed DDR3 device.

By decomposing the request latency tReq into the two components tAC and tCD, each component can be computed separately, which greatly simplifies the analysis; tReq is then computed as the sum of the two.

tAE: since the authors want to ensure that no command in the global queue is delayed by commands in the refresh sequence, they must wait, after the sequence ends, for the longest timing constraint between an ACT command and any other command.
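As a minimal sketch of how tAE could be obtained, the snippet below takes the maximum over the timing constraints that start at an ACT command. The constraint set and the cycle values are illustrative DDR3-style assumptions, not the figures used by the authors; `compute_t_ae` is a hypothetical helper name.

```python
from typing import Dict

# Illustrative DDR3-style timing constraints (in clock cycles) that start at an
# ACT command. The set of constraints and their values are assumptions.
ACT_CONSTRAINTS: Dict[str, int] = {
    "tRCD": 11,  # ACT -> RD/WR to the same bank
    "tRRD": 5,   # ACT -> ACT to a different bank
    "tRAS": 28,  # ACT -> PRE to the same bank
    "tRC": 39,   # ACT -> ACT to the same bank
}


def compute_t_ae(act_constraints: Dict[str, int]) -> int:
    """Return the longest timing constraint between an ACT and any later command."""
    return max(act_constraints.values())


print(compute_t_ae(ACT_CONSTRAINTS))  # 39
```

Waiting for the maximum is what makes the guarantee hold for every possible next command, at the cost of idling for the full tRC-length gap even when the next command would only need tRCD.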

tIP and tIA represent the worst-case delay between the insertion of a command into the FIFO queue and the issue of that command, and thus capture the interference caused by other requestors.

To derive the total latency for accessing shared data, assume that the task under analysis performs NSL loads and NSS stores to shared data.

By carefully scheduling the static command sequences, the controller can significantly reduce the size of each TDMA slot compared to previous static controllers when handling small requests that do not require interleaving.

The assumption of constant access time in DRAM can lead to highly pessimistic bounds because DRAM is a complex and stateful resource, i.e., the time required to perform one memory request is highly dependent on the history of previous and concurrent requests.

Since memory traces were obtained, no worst-case pattern is needed, because the order of requests is assumed to be known; instead, the authors simply computed the worst-case latency of each request based on the type of the previous request, according to Table 4.
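The trace-based computation can be sketched as a walk over the known request order, looking up each request's bound by (previous type, current type). The table below is a hypothetical stand-in for Table 4 with invented numbers, and treating the first request by taking the worst case over all possible predecessors is an assumption of this sketch.

```python
from typing import Dict, List, Optional, Tuple

REQUEST_TYPES = ("read", "write")

# Hypothetical stand-in for Table 4: worst-case latency (cycles) of a request
# given the type of the previous request. The numbers are invented.
LATENCY_TABLE: Dict[Tuple[str, str], int] = {
    ("read", "read"): 40,
    ("read", "write"): 46,
    ("write", "read"): 52,
    ("write", "write"): 40,
}


def trace_latency(trace: List[str]) -> int:
    """Sum per-request worst-case latencies along a trace whose order is known."""
    total = 0
    prev: Optional[str] = None
    for req in trace:
        if prev is None:
            # No predecessor in the trace: take the worst case over all types.
            total += max(LATENCY_TABLE[(p, req)] for p in REQUEST_TYPES)
        else:
            total += LATENCY_TABLE[(prev, req)]
        prev = req
    return total


print(trace_latency(["read", "write", "write", "read"]))  # 190
```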

Modern memory devices are organized into ranks, and each rank is divided into multiple banks, which can be accessed in parallel provided that no collisions occur on either bus (command or data).

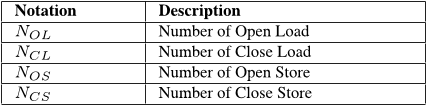

Since the analysis in Section 5 depends on the order of requests, this section shows how to derive a safe worst-case request order given the number of requests of each type.

The tFAW constraint limits the number of banks that can be activated within a time window (at most four ACT commands per tFAW window), which limits the current drawn by the device and prevents overheating.
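A minimal sketch of how tFAW interacts with tRRD when scheduling ACT commands: each ACT must be at least tRRD after the previous one, and a fifth ACT must wait until tFAW after the oldest of the last four. It assumes a single rank and ACTs to distinct banks; the function name and the cycle values in the example are hypothetical.

```python
from collections import deque
from typing import Deque, List


def schedule_acts(ready: List[int], t_rrd: int, t_faw: int) -> List[int]:
    """Earliest issue cycle for each ACT (distinct banks of one rank).

    ready: cycle at which each ACT becomes eligible, in issue order.
    """
    issued: List[int] = []
    last_four: Deque[int] = deque()  # issue cycles of the most recent (up to) 4 ACTs
    for r in ready:
        t = r
        if issued:
            t = max(t, issued[-1] + t_rrd)  # tRRD: minimum gap between consecutive ACTs
        if len(last_four) == 4:
            # tFAW: a fifth ACT must wait until tFAW after the oldest of the last four.
            t = max(t, last_four.popleft() + t_faw)
        last_four.append(t)
        issued.append(t)
    return issued


print(schedule_acts([0] * 6, t_rrd=5, t_faw=24))  # [0, 5, 10, 15, 24, 29]
```

With these values the fifth ACT is pushed from cycle 20 (tRRD alone) to cycle 24 by tFAW, which is the extra delay an analysis must account for when several banks are opened back to back.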

The downside is that the analysis is pessimistic, since it assumes that an interfering requestor could cause the maximum delay on each individual command of the requestor under analysis, while this might not be possible in practice.

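The worst-case latency for a single request to shared data for the task under analysis is then, for a load request:

$$\mathit{tReq}_{\mathit{Shared}}(\mathit{Load}) = \sum_{i=1}^{k-1} \mathit{tReq}_{\mathit{Other},i}(M + s - 1) + \mathit{tReq}_{\mathit{Analysis}}(\mathit{Load}, M + s - 1) \qquad (25)$$

while for a store request it is:

$$\mathit{tReq}_{\mathit{Shared}}(\mathit{Store}) = \sum_{i=1}^{k-1} \mathit{tReq}_{\mathit{Other},i}(M + s - 1) + \mathit{tReq}_{\mathit{Analysis}}(\mathit{Store}, M + s - 1).$$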
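The sketch below evaluates Eq. (25) for a load or a store and then combines the per-request bounds with the counts NSL and NSS. Modelling each bound as a function of the argument M + s − 1, the linear example bounds, and the total NSL · tReqShared(Load) + NSS · tReqShared(Store) are all assumptions made for illustration; the helper names are hypothetical.

```python
from typing import Callable, Sequence

# Hypothetical signatures: bounds as functions of the "M + s - 1" argument of Eq. (25).
OtherBound = Callable[[int], int]
AnalysisBound = Callable[[str, int], int]


def t_req_shared(kind: str, others: Sequence[OtherBound],
                 analysis: AnalysisBound, m: int, s: int) -> int:
    """Eq. (25): bounds of the k-1 other requestors plus that of the requestor under analysis."""
    arg = m + s - 1
    return sum(t_other(arg) for t_other in others) + analysis(kind, arg)


def total_shared_latency(n_sl: int, n_ss: int, others: Sequence[OtherBound],
                         analysis: AnalysisBound, m: int, s: int) -> int:
    """Assumed total: NSL loads and NSS stores, each charged its per-request bound."""
    return (n_sl * t_req_shared("load", others, analysis, m, s)
            + n_ss * t_req_shared("store", others, analysis, m, s))


def other_bound(n: int) -> int:
    return 10 + 2 * n  # made-up bound for one interfering requestor


def analysis_bound(kind: str, n: int) -> int:
    return (30 if kind == "load" else 25) + 2 * n  # made-up bound for the task's own request


others = [other_bound] * 3  # k = 4 requestors, i.e. 3 interferers
print(t_req_shared("load", others, analysis_bound, m=2, s=3))            # 92
print(total_shared_latency(10, 5, others, analysis_bound, m=2, s=3))     # 1355
```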

This is because there is another timing constraint, tRTW , between read command of Request 1 and write command of Request 2, and the write command can only be issued once all applicable constraints are satisfied.
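The rule that a command issues only once all applicable constraints are satisfied amounts to taking a maximum over the constraint deadlines. A minimal sketch follows; the function name, the queue-ready cycle, and the values used for tRTW and tRCD are hypothetical.

```python
from typing import List, Tuple


def earliest_issue(ready: int, constraints: List[Tuple[int, int]]) -> int:
    """Earliest cycle at which a command can issue.

    ready: cycle at which the command becomes eligible in the queue.
    constraints: (issue cycle of an earlier command, timing parameter) pairs
    that apply to this command, e.g. tRTW measured from a preceding read.
    """
    return max([ready] + [t_prev + gap for t_prev, gap in constraints])


# Illustrative numbers: Request 1's read issued at cycle 20 with tRTW = 8;
# Request 2's bank was activated at cycle 15 with tRCD = 11.
print(earliest_issue(ready=22, constraints=[(20, 8), (15, 11)]))  # 28
```

Here tRTW is the binding constraint: tRCD alone would allow the write at cycle 26, but the read-to-write turnaround delays it to cycle 28.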