This is a repository copy of A Morphable Face Albedo Model.

White Rose Research Online URL for this paper:

https://eprints.whiterose.ac.uk/164169/

Version: Accepted Version

Proceedings Paper:

Smith, William Alfred Peter orcid.org/0000-0002-6047-0413, Seck, Alassane, Dee, Hannah

et al. (3 more authors) (Accepted: 2020) A Morphable Face Albedo Model. In: Proceedings

of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2020).

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2020), 14-19

Jun 2020 IEEE . (In Press)

eprints@whiterose.ac.uk

https://eprints.whiterose.ac.uk/

Reuse

Items deposited in White Rose Research Online are protected by copyright, with all rights reserved unless

indicated otherwise. They may be downloaded and/or printed for private study, or other acts as permitted by

national copyright laws. The publisher or other rights holders may allow further reproduction and re-use of

the full text version. This is indicated by the licence information on the White Rose Research Online record

for the item.

Takedown

If you consider content in White Rose Research Online to be in breach of UK law, please notify us by

emailing eprints@whiterose.ac.uk including the URL of the record and the reason for the withdrawal request.

A Morphable Face Albedo Model

William A. P. Smith¹, Alassane Seck²,³, Hannah Dee³, Bernard Tiddeman³, Joshua Tenenbaum⁴, Bernhard Egger⁴

¹ University of York, UK; ² ARM Ltd, UK; ³ Aberystwyth University, UK; ⁴ MIT - BCS, CSAIL & CBMM, USA

william.smith@york.ac.uk, alou.kces@live.co.uk, {hmd1,bpt}@aber.ac.uk, {jbt,egger}@mit.edu

[Figure 1 panel titles, left to right: Statistical Diffuse Albedo Model; Statistical Specular Albedo Model; Combined Model Rendering. Panel labels: Mean; principal components 1, 2, 3; +/-.]

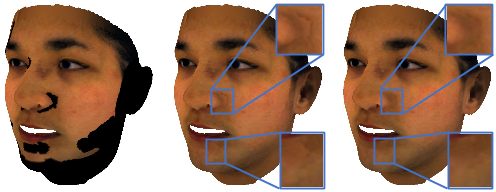

Figure 1: First 3 principal components of our statistical diffuse (left) and specular (middle) albedo models. Both are visualised

in linear sRGB space. Right: rendering of the combined model under frontal illumination in nonlinear sRGB space.
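The distinction between linear and nonlinear sRGB in the caption can be made concrete with the standard sRGB transfer function. The following is a minimal sketch, assuming values normalised to [0, 1]; the function names `linear_to_srgb` and `srgb_to_linear` are illustrative and are not taken from the released model or its tooling.

```python
import numpy as np

def linear_to_srgb(linear):
    """Encode linear sRGB values in [0, 1] with the standard sRGB transfer function."""
    linear = np.clip(np.asarray(linear, dtype=np.float64), 0.0, 1.0)
    low = 12.92 * linear                                  # linear segment near black
    high = 1.055 * np.power(linear, 1.0 / 2.4) - 0.055    # power-law segment
    return np.where(linear <= 0.0031308, low, high)

def srgb_to_linear(encoded):
    """Invert the sRGB transfer function, returning linear values in [0, 1]."""
    encoded = np.clip(np.asarray(encoded, dtype=np.float64), 0.0, 1.0)
    low = encoded / 12.92
    high = np.power((encoded + 0.055) / 1.055, 2.4)
    return np.where(encoded <= 0.04045, low, high)

# Example: a mid-grey linear albedo value appears brighter once encoded for display.
albedo_linear = np.array([0.0, 0.5, 1.0])
albedo_display = linear_to_srgb(albedo_linear)
```

Under this convention, albedo statistics and rendering would be computed on the linear values, with the encoding applied only when an image is written for display.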

Abstract

In this paper, we bring together two divergent strands of

research: photometric face capture and statistical 3D face

appearance modelling. We propose a novel lightstage cap-

ture and processing pipeline for acquiring ear-to-ear, truly

intrinsic diffuse and specular albedo maps that fully factor

out the effects of illumination, camera and geometry. Using

this pipeline, we capture a dataset of 50 scans and com-

bine them with the only existing publicly available albedo

dataset (3DRFE) of 23 scans. This allows us to build the

first morphable face albedo model. We believe this is the

first statistical analysis of the variability of facial specular

albedo maps. This model can be used as a plug-in replacement

for the texture model of the Basel Face Model and we

make our new albedo model publicly available. We ensure

careful spectral calibration such that our model is built in a

linear sRGB space, suitable for inverse rendering of images

taken by typical cameras. We demonstrate our model in a state-of-the-art analysis-by-synthesis 3DMM fitting pipeline. We are the first to integrate specular map estimation, and we outperform the Basel Face Model in albedo reconstruction.

1. Introduction

3D Morphable Models (3DMMs) were proposed over 20

years ago [4] as a dense statistical model of 3D face geom-

etry and texture. They can be used as a generative model of

2D face appearance by combining shape and texture param-

eters with illumination and camera parameters that are pro-

vided as input to a graphics renderer. Using such a model

in an analysis-by-synthesis framework allows a principled

disentangling of the contributing factors of face appearance

in an image. More recently, 3DMMs and differentiable ren-

derers have been used as model-based decoders to train con-

volutional neural networks (CNNs) to regress 3DMM pa-

rameters directly from a single image [29].

The ability of these methods to disentangle intrinsic (ge-

ometry and reflectance) from extrinsic (illumination and

camera) parameters relies upon the 3DMM capturing only

intrinsic parameters, with geometry and reflectance mod-

elled independently. 3DMMs are usually built from cap-

tured data [4, 22, 5, 7]. This necessitates a face capture

setup in which not only 3D geometry but also intrinsic face

reflectance properties, e.g. diffuse albedo, can be measured.

A recent large scale survey of 3DMMs [10] identified a lack

of intrinsic face appearance datasets as a critical limiting

factor in advancing the state-of-the-art. Existing 3DMMs

are built using ill-defined “textures” that bake in shading,

shadowing, specularities, light source colour, camera spec-

tral sensitivity and colour transformations. Capturing truly

intrinsic face appearance parameters is a well studied prob-

lem in graphics but this work has been done largely inde-

pendently of the computer vision and 3DMM communities.

In this paper we present a novel capture setup and pro-

cessing pipeline for measuring ear-to-ear diffuse and spec-

ular albedo maps. We use a lightstage to capture multiple

photometric views of a face. We compute geometry using

uncalibrated multiview stereo, warp a template to the raw

scanned meshes and then stitch seamless per-vertex diffuse

and specular albedo maps. We capture our own dataset of

50 faces, combine this with the 3DRFE dataset [27] and

build a statistical albedo model that can be used as a drop-

in replacement for existing texture models. We make this

model publicly available. To demonstrate the benefits of

our model, we use it with a state-of-the-art fitting algorithm

and show improvements over existing texture models.

1.1. Related work

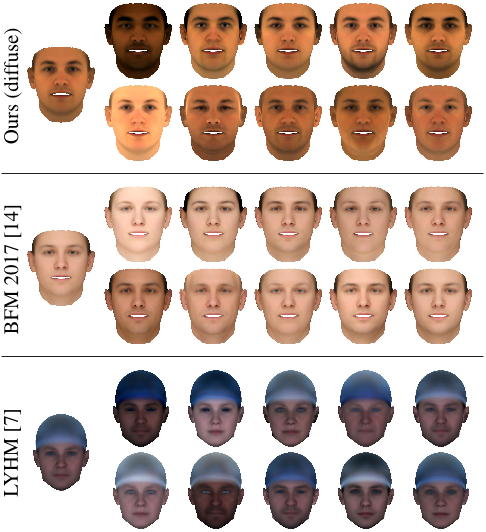

3D Morphable Face Models The original 3DMM of

Blanz and Vetter [4] was built using 200 scans captured in a

Cyberware laser scanner which also provides a colour tex-

ture map. Ten years later the first publicly available 3DMM,

the Basel Face Model (BFM) [22], was released. Again,

this was built from 200 scans, this time captured using a

structured light system from ABW-3D. Here, texture is cap-

tured by three cameras synchronised with three flashes with

diffusers, providing relatively consistent illumination. The

later BFM 2017 [14] used largely the same data from the

same scanning setup. More recently, attempts have been

made to scale up training data to better capture variabil-

ity across the population. Both the large scale face model

(LSFM) [5] (10k subjects) and Liverpool-York Head Model

(LYHM) [7] (1.2k subjects) use shape and textures captured

by a 3DMD multiview structured light scanner under rel-

atively uncontrolled illumination conditions. Ploumpis et

al. [24] show how to combine the LSFM and LYHM but

do so only for shape, not for texture. All of these previous

models use texture maps that are corrupted by shading ef-

fects related to geometry and the illumination environment,

mix specular and diffuse reflectance and are specific to the

camera with which they were captured. Gecer et al. [12] use

a Generative Adversarial Network (GAN) to learn a non-

linear texture model from high resolution scanned textures.

Although this enables them to capture high frequency de-

tails usually lost by linear models, it does not resolve the

issues with the source textures.

Recently, there have been attempts to learn 3DMMs di-

rectly from in-the-wild data simultaneously with learning to

fit the model to images [30, 28]. The advantage of such ap-

proaches is that they can exploit the vast resource of avail-

able 2D face images. However, the separation of illumina-

tion and albedo is ambiguous while non-Lambertian effects

are usually neglected and so these methods do not currently

provide intrinsic appearance models of a quality compara-

ble with those built from captured textures.

Face Capture Existing methods for face capture fall

broadly into two categories: photometric and geometric.

Geometric methods rely on finding correspondences be-

tween features in multiview images enabling the triangu-

lation of 3D position. These methods are relatively robust,

can operate in uncontrolled illumination conditions, provide

instantaneous capture and can provide high quality shape

estimates [3]. They are sufficiently mature that commer-

cial systems are widely available, for example using struc-

tured light stereo, multiview stereo or laser scanning. How-

ever, the texture maps captured by these systems are nothing

other than an image of the face under a particular set of en-

vironmental conditions and hence are useless for relighting.

Worse, since appearance is view-dependent (the position of

specularities changes with viewing direction), no one single

appearance can explain the set of multiview images.

On the other hand, photometric analysis allows estima-

tion of additional reflectance properties such as diffuse and

specular albedo [21], surface roughness [15] and index of

refraction [16] through analysis of the intensity and polarisation state of reflected light. This separation of appearance into geometry and reflectance is essential for the construction of 3DMMs that truly disentangle the different factors of appearance. The required setups are usually much

more restrictive, complex and not yet widely commercially

available. Hence, the availability of datasets has been ex-

tremely limited, particularly of the scale required for learn-

ing 3DMMs. There is a single publicly available dataset

of scans, the 3D Relightable Facial Expression (3DRFE)

database [27] captured using the setup of Ma et al. [21].

Ma et al. [21] were the first to propose the use of po-

larised spherical gradient illumination in a lightstage. This

serves two purposes. On the one hand, spherical gradient il-

lumination provides a means to perform photometric stereo

that avoids problems caused by binary shadowing in point

source photometric stereo. On the other hand, the use of po-

larising filters on the lights and camera enables separation

of diffuse and specular reflectance which, for the constant

illumination case, allows measurement of intrinsic albedo.

This was extended to realtime performance capture by Wil-

son et al. [31] who showed how a certain sequence of il-

lumination conditions allowed for temporal upsampling of

the photometric shape estimates. The main drawback of the

lightstage setup is that the required illumination polariser

orientation is view dependent and so diffuse/specular sep-

aration is only possible for a single viewpoint which does

not permit capturing full ear-to-ear face models. Ghosh et

al. [17] made an empirical observation that using two illu-

mination fields with locally orthogonal patterns of polari-

sation allows approximate specular/diffuse separation from

any viewpoint on the equator. Although practically useful,

in this configuration specular and diffuse reflectance are not

fully separated. More generally, lightstage albedo bakes in

ambient occlusion (which depends on geometry) and RGB

values are dependent on the light source spectra and camera

spectral sensitivities.

3D Morphable Model Fitting The estimation of 3DMM

parameters (shape, expression, colour, illumination and

camera) is an ongoing inverse rendering challenge. Most

approaches focus on shape estimation only and omit the

reconstruction of colour/albedo and illumination, e.g. [20].

The few methods taking the colour into account suffer from

the ambiguity between albedo and illumination demonstrated in Egger et al. [9]. This ambiguity is especially hard to overcome for two reasons: (1) none of the publicly available face models represents real diffuse or specular albedo, and (2) most models have a strong bias towards Caucasian faces, which results in a strongly biased prior. The reflectance models

used for inverse rendering are usually dramatically simpli-

fied and the specular term is either omitted or constant.

Genova et al. [13] point out the limitation of no statistics

on specularity and use a heuristic for their specular term.

Romdhani et al. [25] use the position of specularities as

shape cues but again with homogeneous specular maps. The

work of Yamaguchi et al. [32] demonstrates the value of separate estimation of specular and diffuse albedo; however,

they do not explore the statistics or build a generative model

and their approach is not available to the community. Cur-

rent limitations are mainly caused by the lack of a publicly

available diffuse and specular albedo model.

2. Data capture

A lightstage exploits the phenomenon that specular re-

flection from a dielectric material preserves the plane of po-

larisation of linearly polarised incident light whereas sub-

surface diffuse reflection randomises it. This allows separa-

tion of specular and diffuse reflectance by capturing a pair

of images under polarised illumination. A polarising filter

on each lightsource is oriented such that a specular reflec-

tion towards the viewer has the same plane of polarisation.

The first image, $I_{\mathrm{para}}$, has a polarising filter in front of the camera oriented parallel to the plane of polarisation of the specularly reflected light, allowing both specular and diffuse transmission. The second, $I_{\mathrm{perp}}$, has the polarising filter oriented perpendicularly, blocking the specular but still permitting transmission of the diffuse reflectance. The difference, $I_{\mathrm{para}} - I_{\mathrm{perp}}$, gives only the specular reflection.
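The separation described above reduces to a per-pixel subtraction. The sketch below assumes that the parallel and perpendicular images are pixel-aligned and radiometrically linear. The function name `separate_reflectance` and the clamping of negative differences caused by noise are illustrative assumptions, and any scale factor introduced by the polarising filters is not modelled.

```python
import numpy as np

def separate_reflectance(i_para, i_perp):
    """Split a parallel/perpendicular polarised image pair into diffuse and specular parts.

    i_para: image with the camera filter parallel to the specular polarisation
            (specular + diffuse transmitted).
    i_perp: image with the camera filter perpendicular (diffuse only).
    """
    i_para = np.asarray(i_para, dtype=np.float64)
    i_perp = np.asarray(i_perp, dtype=np.float64)
    if i_para.shape != i_perp.shape:
        raise ValueError("image pair must have identical shapes")
    diffuse = i_perp
    # Assumption: negative differences can only arise from noise, so clamp them to zero.
    specular = np.clip(i_para - i_perp, 0.0, None)
    return diffuse, specular

# Example with a tiny 1x2 image: the second pixel has no measurable specular component.
diffuse, specular = separate_reflectance([[0.5, 0.2]], [[0.3, 0.25]])
```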

Setup Our setup comprises a custom built lightstage with

polarised LED illumination, a single photometric camera

(Nikon D200) with optoelectric polarising filter (LC-Tec

FPM-L-AR) and seven additional cameras (Canon 7D) to

provide multiview coverage. We use 41 ultra bright white

LEDs mounted on a geodesic dome of diameter 1.8m. Each

LED has a rotatable linear polarising filter in front of it.

Their orientation is tuned by placing a sphere of low diffuse

albedo and high specular albedo (a black snooker ball) in

the centre of the dome and adjusting the filter orientation

until the specular reflection is completely cancelled in the

photometric camera’s view. Since we only seek to estimate

albedo maps, we require only the constant illumination con-

dition in which all LEDs are set to maximum brightness.

In contrast to previous lightstage-based methods, we

capture multiple virtual viewpoints by capturing the face

in different poses, specifically frontal and left/right pro-

file. This provides full ear-to-ear coverage for the single

polarisation-calibrated photometric viewpoint. The opto-

electric polarising filter enables the parallel/perpendicular

conditions to be captured in rapid succession without re-

quiring mechanical filter rotation. We augment the photo-

metric camera with additional cameras providing multiview,

single-shot images captured in sync with the photometric

images. We position these additional cameras to provide

overlapping coverage of the face. We do not rely on a fixed

geometric calibration, so the exact positioning of these cam-

eras is unimportant and we allow the cameras to autofocus

between captures. In our setup, we use 7 such cameras in

addition to the photometric view giving a total of 8 simul-

taneous views. Since we repeat the capture three times, we

have 24 effective views. For synchronisation, we control

camera shutters and the polarisation state of the photometric camera using an MBED microcontroller. A complete

dataset for a face is shown in Fig. 2.

Participants We captured 50 individuals (13 females) in

our setup. Our participants range in age from 18 to 67 and

cover skin types I-V of the Fitzpatrick scale [11].

3. Data processing

In order to merge these views and to provide a rough

base mesh, we perform a multiview reconstruction. We

then warp the 3DMM template mesh to the scan geometry.

As well as other sources of alignment error, since the three

photometric views are not acquired simultaneously, there is

likely to be non-rigid deformation of the face between these

views. For this reason, in Section 3.3 we propose a robust

algorithm for stitching the photometric views without blur-

ring potentially misaligned features. We provide an imple-

mentation of our sampling, weighting and blending pipeline

as an extension of the MatlabRenderer toolbox [2].

3.1. Multiview stereo

We commence by applying uncalibrated structure-from-

motion followed by dense multiview stereo [1] to all 24

viewpoints (see Fig. 2, blue boxed images). Solving this un-

calibrated multiview reconstruction problem provides both

the base mesh (see Fig. 2, bottom left) to which we fit the

3DMM template and also intrinsic and extrinsic camera pa-

rameters for the three photometric views. These form the

input to our stitching process.

3.2. Template fitting

To build a 3DMM from raw scanning data, we es-

tablish correspondence to a template. We use the Basel

[Figure 2 labels: Frontal Pose; Left Pose; Right Pose; Multiview stereo; Raw scan; Template warping; Fitted template; Colour transformed albedo images; Sampling; Sampled albedo maps (Diffuse, Specular); Poisson blending.]

Figure 2: Overview of our capture and blending pipeline. Images within a blue box are captured simultaneously. Photometric

image pairs within a dashed orange box are captured sequentially with perpendicular/parallel polarisation state respectively.

Face Pipeline [14] which uses smooth deformations based

on Gaussian Processes. We adapted the threshold used to exclude vertices from the optimisation at the different levels (to 32mm, 16mm, 8mm, 4mm, 2mm, 1mm, 0.5mm from coarse to fine) to improve performance where parts of the scans are missing. Besides this minor change, we used the Basel Face Pipeline as is, with between 25 and 45 manually annotated landmarks (eyes: 8, nose: 9, mouth: 6, eyebrows: 4, ears: 18). We used the template of the BFM 2017 for registration, which makes our model compatible with that model.

3.3. Sampling and stitching

We stitch the multiple photometric viewpoints into seam-

less diffuse and specular per-vertex albedo maps using Pois-

son blending. Blending in the gradient domain via solution

of a Poisson equation was first proposed by Pérez et al. [23]

for 2D images. The approach allows us to avoid visible

seams where texture or geometry from different views are

inconsistent.
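To illustrate why blending in the gradient domain avoids seams, the following is a one-dimensional toy sketch rather than the per-vertex mesh formulation used in the paper. Two sources differ by a constant offset; copying values directly leaves a step at the seam, whereas solving for values whose finite differences match the selected source gradients does not. The function name `blend_1d`, the averaging of gradients on seam edges, and the single anchoring constraint are all illustrative assumptions.

```python
import numpy as np

def blend_1d(src_a, src_b, use_a):
    """Stitch two 1D signals by direct copying and by gradient-domain blending.

    use_a: boolean mask, True where the sample is taken from src_a.
    Returns (naive, blended).
    """
    src_a = np.asarray(src_a, dtype=np.float64)
    src_b = np.asarray(src_b, dtype=np.float64)
    use_a = np.asarray(use_a, dtype=bool)
    n = src_a.size

    grad_a = np.diff(src_a)
    grad_b = np.diff(src_b)
    # An edge (i, i+1) takes its gradient from the source that owns both endpoints;
    # on seam edges the two source gradients are averaged (assumption).
    both_a = use_a[:-1] & use_a[1:]
    both_b = ~use_a[:-1] & ~use_a[1:]
    target = np.where(both_a, grad_a, np.where(both_b, grad_b, 0.5 * (grad_a + grad_b)))

    # Finite-difference operator D, so that (D x)[i] = x[i+1] - x[i].
    idx = np.arange(n - 1)
    D = np.zeros((n - 1, n))
    D[idx, idx] = -1.0
    D[idx, idx + 1] = 1.0

    # One extra row pins the first sample, fixing the otherwise free constant offset.
    naive = np.where(use_a, src_a, src_b)
    anchor = np.zeros((1, n))
    anchor[0, 0] = 1.0
    A = np.vstack([D, anchor])
    rhs = np.concatenate([target, [naive[0]]])

    blended, *_ = np.linalg.lstsq(A, rhs, rcond=None)
    return naive, blended

# Example: the second source is offset by 0.3, as if captured with a different exposure.
a = np.linspace(0.0, 1.0, 8)
naive, blended = blend_1d(a, a + 0.3, np.arange(8) < 4)
```

In this example the naive result jumps at the seam, while the blended result follows a single smooth ramp because only gradients, not absolute values, are taken from the second source.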

For each viewpoint, $v \in V = \{v_1, \dots, v_k\}$, we sample RGB intensities onto the $n$ vertices of the mesh, $I^v \in \mathbb{R}^{n \times 3}$. Then, for each view we compute a per-triangle confidence value for each of the $t$ triangles, $w^v \in \mathbb{R}^t$. For each triangle, this is defined as the minimum per-vertex weight for

each vertex in the triangle, where the per-vertex weights are

defined as follows. If the vertex is not visible in that view,

the weight is set to zero. We also set the weight to zero if

the vertex projection is within a threshold distance of the

occluding boundary to avoid sampling background onto the

mesh. Otherwise, we take the dot product between the sur-

face normal and view vectors as the weight, giving prefer-

ence to observations whose projected resolution is higher.
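The weighting rules above can be sketched for a single view as follows. The array layouts, the function names `vertex_weights` and `triangle_confidence`, and the clamping of back-facing vertices to zero are assumptions made for illustration; visibility and the distance of each projected vertex to the occluding boundary are taken as precomputed inputs.

```python
import numpy as np

def vertex_weights(normals, view_dirs, visible, boundary_dist, min_boundary_dist):
    """Per-vertex weights for one view.

    normals, view_dirs: (n, 3) unit vectors (surface normal, direction to camera).
    visible: (n,) boolean visibility of each vertex in this view.
    boundary_dist: (n,) distance of each projected vertex to the occluding boundary.
    min_boundary_dist: threshold below which a vertex is rejected.
    """
    normals = np.asarray(normals, dtype=np.float64)
    view_dirs = np.asarray(view_dirs, dtype=np.float64)
    visible = np.asarray(visible, dtype=bool)
    boundary_dist = np.asarray(boundary_dist, dtype=np.float64)

    # Row-wise dot product between normal and view vector.
    w = np.einsum("ij,ij->i", normals, view_dirs)
    w = np.clip(w, 0.0, None)                    # assumption: back-facing gets zero weight
    w[~visible] = 0.0                            # not visible in this view
    w[boundary_dist < min_boundary_dist] = 0.0   # too close to the occluding boundary
    return w

def triangle_confidence(weights, triangles):
    """Per-triangle confidence: the minimum weight over the triangle's three vertices."""
    triangles = np.asarray(triangles, dtype=np.int64)
    return np.asarray(weights)[triangles].min(axis=1)

# Example: four vertices, two triangles; the last vertex is not visible.
normals = np.tile([0.0, 0.0, 1.0], (4, 1))
view_dirs = np.array([[0, 0, 1], [0, 0, 1], [0, 0.6, 0.8], [0, 0.6, 0.8]], dtype=float)
w = vertex_weights(normals, view_dirs, [True, True, True, False], [10.0] * 4, 2.0)
conf = triangle_confidence(w, [[0, 1, 2], [1, 2, 3]])
```

Taking the minimum means a triangle is only trusted in a view if all three of its vertices are well observed there.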

Next, we define a selection matrix for each view, $S_v \in \{0, 1\}^{m_v \times t}$, that selects a triangle if view $v$ has the highest weight for that triangle:

$$[S_v^T 1_{m_v}]_i = 1 \iff \forall u \in V \setminus \{v\},\ w^u_i < w^v_i. \tag{1}$$

We define an additional selection matrix $S_{v_{k+1}}$ that selects all triangles not selected in any view (i.e. that have no non-zero weight). Hence, every triangle is selected exactly once and $\sum_{i=1}^{k+1} m_{v_i} = t$. We similarly define per-vertex selection matrices $\tilde{S}_v \in \{0, 1\}^{\tilde{m}_v \times n}$ that select the vertices for which view $v$ has the highest per-vertex weights.
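A minimal sketch of how such selection matrices could be assembled from a stack of per-triangle weights is given below. The function name `selection_matrices`, the dense matrix representation, and the tie-breaking rule (the first view with the maximum weight wins) are assumptions for illustration; a practical implementation would more likely use sparse matrices.

```python
import numpy as np

def selection_matrices(weights):
    """Build one 0/1 selection matrix per view, plus one for unobserved triangles.

    weights: (k, t) array, weights[v, i] is the confidence of triangle i in view v.
    Returns a list of k + 1 matrices; entry v has shape (m_v, t) and each row
    contains a single 1 marking a selected triangle.
    """
    weights = np.asarray(weights, dtype=np.float64)
    k, t = weights.shape
    best = weights.argmax(axis=0)            # ties resolved by lowest view index (assumption)
    has_view = weights.max(axis=0) > 0.0     # triangles with at least one non-zero weight
    identity = np.eye(t, dtype=np.int64)

    mats = []
    for v in range(k):
        rows = np.flatnonzero(has_view & (best == v))
        mats.append(identity[rows])
    # Final matrix collects triangles that no view observes with non-zero weight.
    mats.append(identity[np.flatnonzero(~has_view)])
    return mats

# Example: two views, four triangles; the last triangle is seen by neither view.
w = np.array([[0.9, 0.2, 0.0, 0.0],
              [0.1, 0.7, 0.5, 0.0]])
S = selection_matrices(w)
coverage = sum(s.T @ np.ones(s.shape[0], dtype=np.int64) for s in S)
```

Here `coverage` counts how many matrices select each triangle, so it should equal one everywhere when every triangle is selected exactly once.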

We write a screened Poisson equation as a linear system

![Figure 3: (a)-(c): Source geometry and albedo maps from the 3DRFE dataset [27]. (d)-(e): final registered, colour transformed albedo maps on warped template geometry.](/figures/figure-3-a-c-source-geometry-and-albedo-maps-from-the-3drfe-17cw7urs.png)

![Figure 7: Qualitative model adaptation results on the LFW dataset [18]. Our model leads to comparable results whilst explicitly disentangling albedo and estimating diffuse and specular albedo.](/figures/figure-7-qualitative-model-adaptation-results-on-the-lfw-17dtmin0.png)