A Neurolinguistic Model of Grammatical Construction Processing

Peter Ford Dominey¹, Michel Hoen¹, and Toshio Inui²

Abstract

One of the functions of everyday human language is to communicate meaning. Thus, when one hears or reads the sentence, ‘‘John gave a book to Mary,’’ some aspect of an event concerning the transfer of possession of a book from John to Mary is (hopefully) transmitted. One theoretical approach to language referred to as construction grammar emphasizes this link between sentence structure and meaning in the form of grammatical constructions. The objective of the current research is to (1) outline a functional description of grammatical construction processing based on principles of psycholinguistics, (2) develop a model of how these functions can be implemented in human neurophysiology, and then (3) demonstrate the feasibility of the resulting model in processing languages of typologically diverse natures, that is, English, French, and Japanese. In this context, particular interest will be directed toward the processing of novel compositional structure of relative phrases. The simulation results are discussed in the context of recent neurophysiological studies of language processing.

INTRODUCTION

One of the long-term quests of cognitive neuroscience

has been to link functional aspects of language process-

ing to its underlying neurophysiology, that is, to under-

stand how neural mechanisms allow the mapping of the

surface structure of a sentence onto a conceptual repre-

sentation of its meaning. The successful pursuit of this

objective will likely prove to be a multidisciplinary endeav-

or that requires cooperation between theoretical, devel-

opmental, and neurological approaches to the study of

language, as well as contributions from computational

modeling that can eventually validate proposed hypothe-

ses. The current research takes this multidisciplinary

approach within the theoretical framework associated

with grammatical constructions. The essential distinc-

tion within this context is that language is considered to

consist of a structured inventory of mappings between

the surface forms of utterances and meanings, referred

to as grammatical constructions (see Goldberg, 1995).

These mappings vary along a continuum of complexity.

At one end are single words and fixed ‘‘holophrases’’

such as ‘‘Gimme that’’ that are processed as unparsed

‘‘holistic’’ items (see Tomasello, 2003). At the other

extreme are complex abstract argument constructions

that allow the use of sentences like this one. In between

are the workhorses of everyday language, abstract argu-

ment constructions that allow the expression of spatio-

temporal events that are basic to human experience

including active transitive (e.g., John took the car) and

ditransitive (e.g., Mary gave my mom a new recipe)

constructions (Goldberg, 1995).

In this context, the ‘‘usage-based’’ perspective holds

that the infant begins language acquisition by learning

very simple constructions in a progressive development

of processing complexity, with a substantial amount of

early ground that can be covered with relatively mod-

est computational resources (Clark, 2003; Tomasello,

2003). This is in contrast with the ‘‘continuity hypothesis’’ arising from the generative grammar philosophy,

which holds that the full range of syntactic complexity

is available in the form of a universal grammar and is

used at the outset of language learning (see Tomasello,

2000, and comments on the continuity hypothesis de-

bate). In this generative context, the universal grammar

is there from the outset, and the challenge is to under-

stand how it got there. In the usage-based construction

approach, initial processing is simple and becomes

increasingly complex, and the challenge is to explain

the mechanisms that allow full productivity and com-

positionality (composing new constructions from exist-

ing ones). This issue is partially addressed in the current

research.

Theoretical Framework for Grammatical Constructions

If grammatical constructions are mappings from sentence structure to meaning, then the system must be capable of (1) identifying the type of grammatical construction for a given sentence and (2) using the identified construction and its corresponding mapping to extract the meaning of the sentence. Interestingly, this corresponds to Townsend and Bever’s (2001) two steps of syntactic processing, that is, (i) parsing of the sentence into phrasal constituents and words (requiring access to lexical categories and word order analysis) and (ii) subsequent analysis of phrasal structure and long-distance dependencies by means of syntactic rules.

¹CNRS UMR 5015, France; ²Kyoto University, Japan

© 2006 Massachusetts Institute of Technology Journal of Cognitive Neuroscience 18:12, pp. 2088–2107

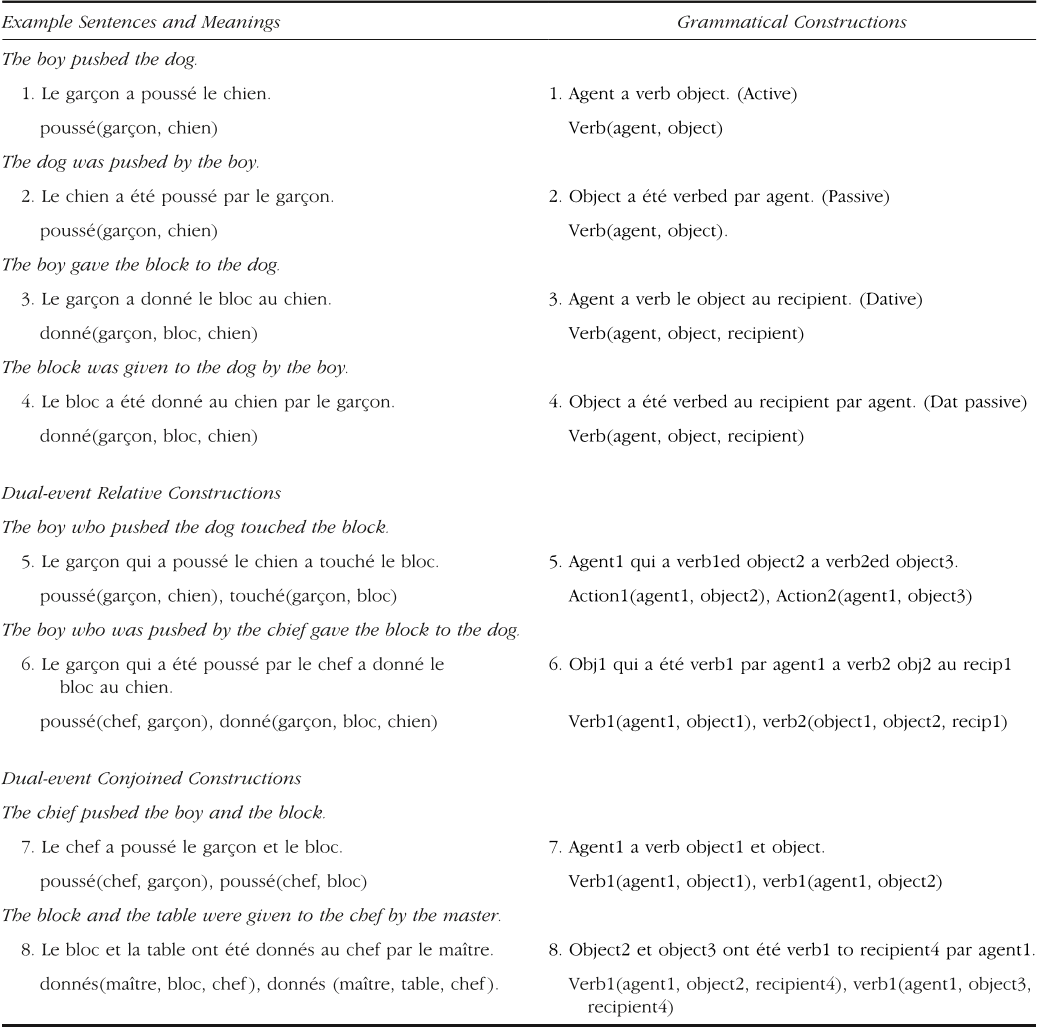

Figure 1A illustrates an example of an active transi-

tive sentence and its mapping onto a representation of

meaning. The generalized representation of the corre-

sponding active transitive construction is depicted in

Figure 1B. The ‘‘slots’’ depicted by the NPs and V can

be instantiated by different nouns and verbs in order to

generate an open set of new sentences. For each sentence

corresponding to this construction type, the mapping of

sentence to meaning is provided by the construction.

Figure 1C illustrates a more complex construction that

contains an embedded relative phrase. In this context, a

central issue in construction grammar will concern how

the potential diversity of constructions is identified.

Part of the response to this lies in the specific ways in

which function and content words are structurally orga-

nized in distinct sentence types. Function words (also

referred to as closed class words because of their limited

number in any given language), including determiners,

prepositions, and auxiliary verbs, play a role in defining

the grammatical structure of a sentence (i.e., in specify-

ing who did what to whom) although they carry little

semantic content. Content words (also referred to as

open class words because of their essentially unlimited

number) play a more central role in contributing pieces

of meaning that are inserted into the grammatical

structure of the sentence. Thus, returning to our exam-

ples in Figure 1, the thematic roles for the content

words are determined by their relative position in the

sentences with respect to the other content words and

with respect to the function words. Although this is the

case in English, Bates, McNew, MacWhinney, Devescovi,

and Smith (1982) and MacWhinney (1982) have made

the case more generally, stating that across human lan-

guages, the grammatical structure of sentences is spec-

ified by a combination of cues including word order,

grammatical function words (and/or grammatical markers attached to the word roots), and prosodic structure.

The general idea here is that these constructions are

templates into which a variety of open class elements

(nouns, verbs, etc.) can be inserted in order to express

novel meanings. Part of the definition of a construction

is the mapping between slots or variables in the tem-

plate and the corresponding semantic roles in the

meaning, as illustrated in Figure 1. In this context, a

substantial part of the language faculty corresponds to a

structured set of such sentence-to-meaning mappings,

and these mappings are stored and retrieved based on

the patterns of structural markers (i.e., word order and

function word patterns) unique to each grammatical

construction type. Several major issues can be raised

with respect to this characterization. The issues that we

address in the current research are as follows: (1) Can

this theoretical characterization be mapped onto human

functional neuroanatomy in a meaningful manner and

(2) can the resulting system be demonstrated to account

for a restricted subset of human language phenomena

in a meaningful manner?
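The idea of a construction as a template with slots bound to semantic roles can be illustrated with a small symbolic sketch. This is not the authors' implementation; the `Construction` class, the `instantiate` function, and the slot names `NP1`, `NP2`, and `V` are hypothetical names chosen for illustration, and the meaning is rendered in the predicate(agent, object) notation used in the text.

```python
from dataclasses import dataclass


@dataclass
class Construction:
    """Illustrative form-to-meaning template (hypothetical structure)."""
    form: tuple   # surface pattern; slot names stand for open class elements
    roles: dict   # slot name -> semantic role in the meaning


def instantiate(construction, words):
    """Fill the slots with open class words; return (sentence, meaning)."""
    # Closed class tokens are kept as-is, slots are replaced by their fillers.
    sentence = " ".join(words.get(token, token) for token in construction.form)
    filled = {role: words[slot] for slot, role in construction.roles.items()}
    meaning = f"{filled['predicate']}({filled['agent']}, {filled['object']})"
    return sentence, meaning


# Two toy constructions: same roles, different slot-to-role bindings.
ACTIVE_TRANSITIVE = Construction(
    form=("NP1", "V", "the", "NP2"),
    roles={"V": "predicate", "NP1": "agent", "NP2": "object"},
)
PASSIVE = Construction(
    form=("the", "NP1", "was", "V", "by", "NP2"),
    roles={"V": "predicate", "NP2": "agent", "NP1": "object"},
)

print(instantiate(ACTIVE_TRANSITIVE, {"NP1": "John", "V": "took", "NP2": "car"}))
print(instantiate(PASSIVE, {"NP1": "ball", "V": "hit", "NP2": "Mary"}))
```

The same filler words can be inserted into either template; only the binding between slots and roles changes, which is the part of the construction that must be stored and retrieved.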

Implementing Grammatical Constructions in a Neurocomputational Model

Interestingly, this characterization of grammatical con-

structions can be reformulated into a type of sequence

learning problem, if we consider a sentence as a se-

quence of words. Determining the construction type for

a given sentence consists in analyzing or recoding the

sentence as a sequence of open class and closed class

elements, and then performing sequence recognition on

this recoded sequence. Dominey (1995) and Dominey,

Arbib, and Joseph (1995) have demonstrated how such

sequence recognition can be performed by a recurrent

prefrontal cortical network (a ‘‘temporal recurrent net-

work’’ [TRN]) that encodes sequential structure (see

also Dominey 1998a, b). Then corticostriatal connec-

tions allow the association of different categories of

sequences represented in the recurrent network with

different behavioral responses.

Figure 1. Grammatical construction overview. (A) Specific example of a sentence-to-meaning mapping. (B) Generalized representation of the construction. (C) Sentence-to-meaning mapping for sentence with relativized phrase. (D) Compositional sentence-to-meaning mapping for sentence with relative phrase in which the relative phrase has been extracted.

Once the construction type has thus been identified, the corresponding mapping of open class elements onto their semantic roles must be retrieved and performed. This mapping corresponds to what we have called ‘‘abstract structure’’ processing (Dominey, Lelekov, Ventre-Dominey, & Jeannerod, 1998). In this context, the reordering in the form–meaning mapping in Figure 1B can be characterized as the abstract structure ABCD-BACD where A–D represent variable slots. In order to accommodate such abstract structures, rather than representing sequences of distinct elements, we modified the recurrent network model to represent sequences of variables corresponding to prefrontal working memory elements (Dominey et al., 1998).
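The abstract structure ABCD-BACD can be read as a reordering rule over variable slots. The following is a minimal symbolic sketch of that reading, not the recurrent network described above; the function name `apply_abstract_structure` and the placeholder elements `w1` to `w4` are assumptions made for illustration.

```python
def apply_abstract_structure(structure, elements):
    """Reorder elements according to a rule such as 'ABCD-BACD'.

    The left half names the variable slots in input order; the right half
    gives the order in which the bound elements are read out.
    """
    source, target = structure.split("-")
    if len(source) != len(elements):
        raise ValueError("number of elements must match number of slots")
    binding = dict(zip(source, elements))  # slot variable -> actual element
    return [binding[variable] for variable in target]


# The rule is defined over slots, so it applies to any four elements.
print(apply_abstract_structure("ABCD-BACD", ["w1", "w2", "w3", "w4"]))
# -> ['w2', 'w1', 'w3', 'w4']
```

Because the rule refers to slots rather than to particular items, it transfers to sequences built from elements never seen before, which is the property that distinguishes abstract structure from the serial order of specific elements.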

Concretely, from a developmental perspective, we

demonstrated that the resulting abstract recurrent net-

work (ARN) could simulate human infant performance

in distinguishing between abstract structures such as

ABB versus AAB (Dominey & Ramus, 2000) as described

by Marcus et al. (1999). In the grammatical construction

analog, these abstract structures correspond to the

mapping of word order in the sentence onto semantic

arguments in the meaning as illustrated in Figure 1. We

thus demonstrated how the dual TRN/ARN system could

be used for sentence comprehension. The sequence of

closed class elements defining the construction was

processed by the TRN (corresponding to a recurrent

corticocortical network). Then, via modifiable cortico-

striatal synapses, the resulting pattern of cortical activity

recovered the corresponding abstract structure for re-

ordering the open class elements into the appropriate

semantic argument order by the ARN (Dominey, Hoen,

Blanc, & Lelekov-Boissard, 2003; Dominey, 2002).
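To make the ABB versus AAB distinction concrete, the sketch below recodes a sequence of items into its repetition pattern. It is a symbolic stand-in for what the ARN is described as capturing, not a neural simulation; the function name `abstract_structure` and the example syllables are illustrative assumptions.

```python
def abstract_structure(sequence):
    """Recode a sequence by its repetition pattern, e.g. ga-ti-ti -> 'ABB'."""
    labels = {}   # item -> variable letter assigned at first occurrence
    pattern = []
    for item in sequence:
        if item not in labels:
            labels[item] = chr(ord("A") + len(labels))
        pattern.append(labels[item])
    return "".join(pattern)


# Different surface items, same abstract structure.
print(abstract_structure(["ga", "ti", "ti"]))  # ABB
print(abstract_structure(["li", "na", "na"]))  # ABB
print(abstract_structure(["ga", "ga", "ti"]))  # AAB
```

Two sequences with no items in common receive the same code when their repetition structure matches, which is what allows discrimination of ABB from AAB on novel material.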

The model essentially predicted a common neuro-

physiological basis for abstract mappings of the form

BHM-HMB and form-to-meaning mappings such as ‘‘The

ball was hit by Mary’’: Hit(Mary, ball). Concretely, we

predicted that brain lesions in the left perisylvian cortex

that produce syntactic comprehension deficits would

produce correlated impairments in abstract sequence

processing. The first test of this prediction was thus to

compare performance in aphasic patients on these two

types of behavior. We observed a highly significant

correlation between performance on syntactic compre-

hension and abstract structure processing in aphasic

patients, as well as in schizophrenic patients (Dominey

et al., 2003; Lelekov et al., 2000). We reasoned that if this

correlation was due to a shared brain mechanism, then

training in one of the tasks should transfer to improve-

ment on the other. Based on this prediction, we subse-

quently demonstrated that reeducation with specific

nonlinguistic abstract structures transferred to improved

comprehension for the analogous sentence types in

a group of aphasic patients (Hoen et al., 2003). In or-

der to begin to characterize the underlying shared neu-

ral mechanisms, Hoen and Dominey (2000) measured

brain activity with event-related potentials for abstract

sequences in which the mapping was specified by a

‘‘function symbol’’ analogous to function words in sen-

tences. Thus, in the sequences ABCxBAC and ABCzABC,

the two function symbols x and z indicate two distinct

structure mappings. We thus observed a left anterior

negativity (LAN) in response to the function symbols,

analogous to the LAN observed in response to gram-

matical function words during sentence processing

(Hoen & Dominey, 2000), again suggesting a shared

neural mechanism. In order to neuroanatomically local-

ize this shared mechanism, we performed a related set

of brain imaging experiments comparing sentence and

sequence processing using functional magnetic reso-

nance imaging (fMRI). We observed that a common cor-

tical network including Brodmann’s area (BA) 44 was

involved in the processing of sentences and abstract

structure in nonlinguistic sequences, whereas BA 45

was exclusively activated in sentence processing, corre-

sponding to insertion of lexical semantic content into

the transformation mechanism (Hoen, Pachot-Clouard,

Segebarth, & Dominey, 2006).

This computational neuroscience approach allowed

the projection of the construction grammar framework

onto the corticostriatal system with two essential prop-

erties: first, construction identification by corticostriatal

sequence recognition, and second, structure mapping

based on the manipulation of sequences of frontal cor-

tical working memory elements (Dominey & Hoen,

2006). Given the initial validation of this model in the

neurophysiological and neuropsychological studies cited

above, we can now proceed with a more detailed study

of how this can contribute to an understanding of the

possible implementation of grammatical constructions,

as illustrated in Figure 2.

As the sentence is processed word by word, a process

of lexical categorization identifies open and closed class

words. This is not unrealistic, as newborns can perform

this categorization (Shi, Werker, & Morgan, 1999), and

several neural network studies have demonstrated lexi-

cal categorization of this type based on prosodic cues

(Blanc, Dodane, & Dominey, 2003; Shi et al., 1999). In

the current implementation, only nouns and verbs are

recognized as open class words, with the modification of

these by adjectives and adverbs left for now as a future

issue. The meanings of the open class words are re-

trieved from the lexicon (not addressed here, but see

Dominey & Boucher, 2005; Roy, 2002; Dominey, 2000;

Siskind, 1996) and these referent meanings are stored in

a working memory called the PredictedReferentsArray.

The next crucial step is the mapping of these referent

meanings onto the appropriate components of the

meaning structure. In Figure 2, this corresponds to

the mapping from the PredictedReferentsArray onto

the meaning coded in the SceneEventArray. As seen in

Figure 2A and B, this mapping varies depending on the

construction type. Thus, the system must be able to

store and retrieve different FormToMeaning mappings

appropriate for different sentence types, corresponding

to distinct grammatical constructions.
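The processing steps just described (lexical categorization, retrieval of referents into the PredictedReferentsArray, and mapping onto the SceneEventArray) can be sketched symbolically as follows. The closed class word list, the toy lexicon, and the encoding of a FormToMeaning mapping as a tuple of indices are assumptions made for this illustration, as is the convention that the SceneEventArray is ordered (predicate, agent, object).

```python
# Assumed toy closed class list and lexicon, for illustration only.
CLOSED_CLASS = {"the", "was", "by", "to", "that"}
WORD_TO_REFERENT = {"ball": "BALL", "hit": "HIT", "mary": "MARY"}

# FormToMeaning as index tuples: position k of the SceneEventArray
# (predicate, agent, object) is filled from PredictedReferentsArray[mapping[k]].
PASSIVE_MAPPING = (1, 2, 0)
ACTIVE_MAPPING = (1, 0, 2)


def comprehend(sentence, form_to_meaning):
    """Map a sentence onto [predicate, agent, object] with a given mapping."""
    words = [w.lower() for w in sentence.split()]
    # Step 1: lexical categorization keeps only the open class words.
    open_class_array = [w for w in words if w not in CLOSED_CLASS]
    # Step 2: referent meanings are retrieved from the lexicon.
    predicted_referents = [WORD_TO_REFERENT[w] for w in open_class_array]
    # Step 3: referents are reordered into their roles in the scene event.
    return [predicted_referents[i] for i in form_to_meaning]


print(comprehend("The ball was hit by Mary", PASSIVE_MAPPING))
print(comprehend("Mary hit the ball", ACTIVE_MAPPING))
```

Both sentences yield the same scene event, but only when each is paired with the mapping appropriate to its construction type, which is why the mapping must be selected per sentence type.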

During the lexical categorization process, the struc-

ture of the sentence is recoded based on the local

structure of open and closed class words, in order to

yield a ConstructionIndex that will be unique to each

construction type, corresponding to the cue ensemble

of Bates et al. (1982) in the general case. The closed


class words are explicitly represented in the Construc-

tionIndex, whereas open class words are represented as

slots that can take open class words as arguments. The

ConstructionIndex is thus a global representation of the

sentence structure. Again, the requirement is that every

different grammatical construction type should yield a

unique ConstructionIndex. This ConstructionIndex can

then be used as an index into an associative memory to

store and retrieve the correct FormToMeaning mapping.
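A minimal sketch of this indexing scheme follows. The source notes that, in the current implementation, the associative memory for the ConstructionInventory is replaced by a procedural lookup table, so a dictionary is used here. The closed class word list, the `_` symbol for an open class slot, and the index-tuple encoding of the FormToMeaning mapping are assumptions made for illustration.

```python
CLOSED_CLASS = {"the", "was", "by", "to", "that"}  # assumed toy list


def construction_index(sentence):
    """Recode a sentence: closed class words kept, open class words -> '_'."""
    words = [w.lower() for w in sentence.split()]
    return " ".join(w if w in CLOSED_CLASS else "_" for w in words)


class ConstructionInventory:
    """Lookup table from ConstructionIndex to FormToMeaning mapping."""

    def __init__(self):
        self._table = {}

    def learn(self, sentence, form_to_meaning):
        self._table[construction_index(sentence)] = tuple(form_to_meaning)

    def retrieve(self, sentence):
        # Returns None when no construction with this index has been learned.
        return self._table.get(construction_index(sentence))


inventory = ConstructionInventory()
inventory.learn("The ball was hit by Mary", (1, 2, 0))
inventory.learn("Mary hit the ball", (1, 0, 2))

print(construction_index("The ball was hit by Mary"))       # the _ was _ by _
print(inventory.retrieve("The cat was chased by John"))     # (1, 2, 0)
```

A sentence with entirely new open class words retrieves the stored mapping as long as its pattern of closed class words and slots matches a learned index.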

We have suggested that this mechanism relies on

recurrent cortical networks and corticostriatal process-

ing (Dominey et al., 2003). In particular, we propose that

the formation of the ConstructionIndex as a neural

pattern of activity will rely on sequence processing in

recurrent cortical networks, and that the retrieval of the

FormToMeaning component will rely on a corticostriatal

associative memory. Finally, the mapping from form to

meaning will take place in the frontal cortical region

including BAs 44, 46, and 6. This corresponds to the

SceneEventArray, consistent with observations that

event meaning is represented in this BA 44 pars oper-

cularis region, when events are being visually observed

(Buccino et al., 2004), and when their descriptions are

listened to (Tettamanti et al., 2005). Hoen et al. (2006) provided strong evidence that this frontal cortical region including BAs 44, 46, and 6 was activated during the processing of both grammatical and nonlinguistic structure-processing rules (see Figure 3), indicating its role in linguistic and nonlinguistic structural mapping.

The proposed role of basal ganglia in rule storage

and retrieval is somewhat related to the procedural

component of Ullman’s (2001, 2004, 2006) grammar pro-

cessing model in which grammatical rules are encoded

in specific (but potentially domain independent) chan-

nels of the corticostriatal system. Longworth, Keenan,

Barker, Marslen-Wilson, and Tyler (2005) indicate a

more restricted, non-language-specific role of the stria-

tum in language in the selection of the appropriate

mapping in the late integration processes of language

comprehension. Neuropsychological evidence for the role of the striatum in such rule extraction has been provided in patients with Huntington’s disease (a form of striatal dysfunction) who were impaired in rule application in three domains: morphology, syntax, and arithmetic (Teichmann et al., 2005). These data are thus consistent with the hypothesis that the striatum is involved in the computational application of rules including our proposed mappings.

Figure 2. Structure-mapping architecture. (A) Passive sentence processing: Step 1, lexical categorization: open and closed class words are directed to OpenClassArray and ConstructionIndex, respectively. Step 2, open class words in OpenClassArray are translated to their referent meanings via the WordToReferent mapping. Insertion of this referent semantic content into the Predicted Referents Array (PRA) is realized in pars triangularis BA 45. Step 3, PRA elements are mapped onto their roles in the SceneEventArray by the FormToMeaning mapping specific to each sentence type. Step 4, this mapping is retrieved from ConstructionInventory (a corticostriatal associative memory) via the ConstructionIndex (a corticocortical recurrent network) that encodes the closed and open class word patterns that characterize each grammatical construction type. The structure mapping process is associated with activation of pars opercularis BA 44. In the current implementation, neural network associative memory for the ConstructionInventory is replaced by a procedural lookup table. (B) Active sentence. Note difference in ConstructionIndex and in FormToMeaning.

In contrast, the integration of lexicosemantic con-

tent into this structure processing machinery, filling of

the PredictedReferentsArray, corresponds to a more

language-specific ventral stream mechanism that culmi-

nates in the pars triangularis (BA 45) of the ventral pre-

motor area, consistent with the declarative component

of Ullman’s (2004) model. In this context, Hoen et al.

(2006) observed that when the processing of sentences

and nonlinguistic sequences was compared, BA 45 was

activated exclusively by the sentence processing.

Goals of the Current Study

We have previously demonstrated that the model in

Figure 2 can learn a variety of constructions that can

then be used in different language interaction contexts

(Dominey & Boucher, 2005a, b; Dominey, 2000). How-

ever, from a functional perspective, a model of gram-

matical construction processing should additionally

address two distinct challenges of (1) cross-linguistic

validity and (2) compositionality, which we will address

in the current study.

Although the examples used above have been pre-

sented in English, the proposed model of grammatical

construction processing should accommodate different

types of languages. Thus, the first objective will be to

demonstrate that the proposed system is capable of

learning grammatical constructions in English, French,

and Japanese. Whereas English and French are relatively

similar in their linguistic structure, Japanese is some-

what different in that it allows more freedom in word

order, with information about thematic roles encoded

in case markers.

Regarding the challenge of compositionality, as pre-

sented above, it appears that for each different type of

sentence, the system must have a distinct construction.

Considering Figure 1C, this indicates that every time a

noun is expanded into a relative phrase, a new construction will be required. This is undesirable for two reasons: First, it requires a large number of similar constructions to be stored, and second, it means that if a sentence occurs with a relative phrase in a new location, the model will fail to understand that sentence without first having a <sentence, meaning> pair from which it can learn the

mapping. Alternatively, the model could process con-

structions within constructions in a compositional man-

ner. Thus, the sentence ‘‘The dog that chased the cat bit

Mary’’ could be decomposed into its constituent phrases

‘‘The dog chased the cat’’ and ‘‘The dog bit Mary.’’ The

goal is to determine whether the model as presented

above can accommodate this kind of compositionality.

During the processing of multiple sentences with this type of embedded relative clause, the pattern ‘‘agent that action object’’ will occur repeatedly, and will map onto the meaning component action(agent, object). The same kind of pattern finding

that allows the association of a ConstructionIndex with

the corresponding FormToMeaning mapping could also

work on such patterns that reoccur within sentences.

That is, rather than looking for patterns at the level of

the entire sentence or ConstructionIndex, the model

could apply exactly the same mechanisms to identify

subpatterns within the sentence. This would allow the

system to generalize over these occurrences such that

when this pattern occurs in a new location in a sentence,

it can be extracted. This will decompose the sentence

into the principal and the relative phrases, with the out-

come that both will more likely correspond to existing

constructions (see Figure 1D). Clearly, this will not work

in all cases of new relative clauses, but more importantly,

it will provide a compositional capability for the system

to accommodate a subclass of the possible new sentence

types. This type of segmentation approach has been

explored by Miikkulainen (1996), discussed below.
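The decomposition described above can be illustrated with a hand-written sketch that extracts the pattern ‘‘agent that action object’’ wherever it occurs. In the proposed model this subpattern would be learned rather than coded by hand; the function `extract_relative`, the determiner list, and the assumptions that the action is a single word and that noun phrases are at most a determiner plus a noun are simplifications for illustration only.

```python
DETERMINERS = {"the", "a"}  # assumed toy list


def extract_relative(sentence):
    """Split 'agent that action object' out of a sentence.

    Returns (principal phrase, relative phrase), or None if the pattern
    is absent. Only this one subclass of relative clause is handled.
    """
    tokens = sentence.split()
    lowered = [t.lower() for t in tokens]
    if "that" not in lowered:
        return None
    i = lowered.index("that")
    if i == 0 or i + 2 >= len(tokens):
        return None
    # The agent is the noun before 'that', with its determiner if present.
    agent_start = i - 2 if i >= 2 and lowered[i - 2] in DETERMINERS else i - 1
    agent = tokens[agent_start:i]
    action = tokens[i + 1]
    # The object is the next noun, with its determiner if present.
    object_end = i + 4 if lowered[i + 2] in DETERMINERS else i + 3
    if object_end > len(tokens):
        return None
    relative = agent + [action] + tokens[i + 2:object_end]
    principal = tokens[:i] + tokens[object_end:]
    return " ".join(principal), " ".join(relative)


print(extract_relative("The dog that chased the cat bit Mary"))
print(extract_relative("Mary bit the dog that chased the cat"))
```

The second call shows the relative phrase in a different location: the same subpattern is extracted, and both resulting phrases fit the simple active transitive form, so no new whole-sentence construction would be needed for them. As noted in the text, this covers only a subclass of possible relative clauses.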

METHODS

In the first set of experiments (Experiment 1A–C) for each

of the three languages English, Japanese, and French,

respectively, we will expose the model to a set of <sen-

tence, meaning> pairs in a training phase. We then

validate that the model has learned these sentences

by presenting the sentences alone and comparing the

Figure 3. Comparison of brain activation in sentence processing

and nonlinguistic sequence mapping tasks. Subjects read visually

presented sentences and nonlinguistic sequences presented one

word/element at a time, and performed grammaticality/correctness

judgments after each, responding by button press. Red areas

indicate regions activated by both tasks, including a prefrontal

network that involves BAs 44, 46, 9, and 6. Green areas indicate

cortical regions activated exclusively in the sentence processing

task, including BAs 21, 22, 47, and 45. From Hoen et al. (2006)

with permission.
