A Survey of Semantic Image and Video Annotation Tools

S. Dasiopoulou, E. Giannakidou, G. Litos, P. Malasioti, and I. Kompatsiaris

Multimedia Knowledge Laboratory, Informatics and Telematics Institute,

Centre for Research and Technology Hellas

{dasiop,igiannak,litos,xenia,ikom}@iti.gr

Abstract. The availability of semantically annotated image and video

assets constitutes a critical prerequisite for the realisation of intelligent

knowledge management services pertaining to realistic user needs. Given

the extent of the challenges involved in the automatic extraction of such

descriptions, manually created metadata play a significant role, further

strengthened by their deployment in training and evaluation tasks re-

lated to the automatic extraction of content descriptions. The different

views taken by the two main approaches towards semantic content de-

scription, namely the Semantic Web and MPEG-7, as well as the traits

particular to multimedia content due to the multiplicity of information

levels involved, have resulted in a variety of image and video annotation

tools, adopting varying description aspects. Aiming to provide a common

framework of reference and furthermore to highlight open issues,

especially with respect to the coverage and the interoperability of the

produced metadata, in this chapter we present an overview of the state

of the art in image and video annotation tools.

1 Introduction

Accessing multimedia content in accordance with the meaning it conveys to a

user constitutes the core challenge in multimedia research, commonly referred to

as the semantic gap [1]. The current state of the art in automatic content analysis

and understanding supports in many cases the successful detection of semantic

concepts, such as persons, buildings, natural scenes vs manmade scenes, etc. at a

satisfactory level of accuracy; however, the attained performance remains highly

variable when considering general domains, or when increasing, even slightly, the

number of supported concepts [2–4]. As a consequence, the manual generation of

content descriptions holds an important role towards the realisation of intelligent

content management services. This significance is further strengthened by the

need for manually constructed descriptions in automatic content analysis both

for evaluation as well as for training purposes, when learning based on pre-

annotated examples is used.

The availability of semantic descriptions though is not adequate per se for

the effective management of multimedia content. Fundamental to information

sharing, exchange and reuse, is the interoperability of the descriptions at both

syntactic and semantic levels, i.e. regarding the valid structuring of the descrip-

tions and the endowed meaning respectively. Besides the general prerequisite for

interoperability, additional requirements arise from the multiple levels at which

multimedia content can be represented, including structural and low-level feature

information. Further description levels arise from more generic aspects

such as authoring & access control, navigation, and user history & preferences.

The strong relation of structural and low-level feature information to the tasks in-

volved in the automatic analysis of visual content, as well as to retrieval services,

such as transcoding, content-based search, etc., brings these two dimensions to

the foreground, along with the subject matter descriptions.

Two initiatives dominate the efforts towards machine-processable semantic content

metadata: the Semantic Web activity¹ of the W3C and ISO’s Multimedia Content

Description Interface² (MPEG-7) [5, 6], delineating corresponding approaches

with respect to multimedia semantic annotation [7, 8]. Through a layered

architecture of successively increased expressivity, the Semantic Web (SW)

advocates formal semantics and reasoning through logically grounded meaning.

The respective rule and ontology languages embody the general mechanisms

for capturing, representing and reasoning with semantics. They do not capture

application specific knowledge. In contrast, MPEG-7 addresses specifically the

description of audiovisual content and comprises not only the representation

language, in the form of the Description Definition Language (DDL), but also

specific, media and domain, definitions; thus from a SW perspective, MPEG-

7 serves the twofold role of a representation language and a domain specific

ontology.

Overcoming the syntactic and semantic interoperability issues between MPEG-

7 and the SW has been the subject of very active research in the current decade,

highly motivated by the complementary aspects characterising the two aforementioned

metadata initiatives: media specific, yet not formal, semantics on one

hand, and general mechanisms for logically grounded semantics on the other

hand. A number of so-called multimedia ontologies [9–13] have emerged in an attempt

to add formal semantics to MPEG-7 descriptions and thereby enable linking with

existing ontologies and the semantic management of existing MPEG-7 metadata

repositories. Furthermore, initiatives such as the W3C Multimedia Annotation on

the Semantic Web Taskforce³, the W3C Multimedia Semantics Incubator Group⁴

and the Common Multimedia Ontology Framework⁵, have been established to

address the technologies, advantages and open issues related to the creation,

storage, manipulation and processing of multimedia semantic metadata.

In this chapter, bearing in mind the significance of manual image and video

annotation in combination with the different possibilities afforded by the SW

and MPEG-7 initiatives, we present a detailed overview of the most well known

manual annotation tools, addressing both functionality aspects, such as coverage

& granularity of annotations, as well as interoperability concerns with respect to

the supported annotation vocabularies and representation languages. Interoperability

though does not concern solely the harmonisation between the SW and

MPEG-7 initiatives; a significant number of tools, especially regarding video

annotation, follow customised approaches, aggravating the challenges. As such, this

survey serves a twofold role; it provides a common framework for reference and

comparison purposes, while highlighting issues pertaining to the communication,

sharing and reuse of the produced metadata.

¹ http://www.w3.org/2001/sw/

² http://www.chiariglione.org/mpeg/

³ http://www.w3.org/2001/sw/BestPractices/MM/

⁴ http://www.w3.org/2005/Incubator/mmsem/

⁵ http://www.acemedia.org/aceMedia/reference/multimedia ontology/index.html

The rest of the chapter is organised as follows. Section 2 describes the criteria

along which the assessment and comparison of the examined annotation tools

is performed. Sections 3 and 4 discuss the individual image and video tools

respectively, while Section 5 concludes the paper, summarising the resulting

observations and open issues.

2 Semantic Image and Video Annotation

Image and video assets constitute extremely rich information sources, ubiqui-

tous in a wide variety of diverse applications and tasks related to information

management, both for personal and professional purposes. Inevitably, the value

of the endowed information depends on the effectiveness and efficiency with which

it can be accessed and managed. This is where semantic annotation comes in, as

it designates the schemes for capturing the information related to the content.

As already indicated, two crucial requirements for content annotation

are the interoperability of the created metadata and the ability to automatically

process them. The former encompasses the capacity to share and reuse annotations,

and consequently determines the level of seamless content utilisation

and the benefits derived from the annotations made available; the latter is vital

to the realisation of intelligent content management services. Towards their

accomplishment, commonly agreed vocabularies and syntax, and respectively

commonly agreed semantics and interpretation mechanisms, are

essential elements.

Within the context of visual content, these general prerequisites entail more

specific conditions arising from the particular traits of image and video assets.

Visual content semantics, as multimedia semantics in general, comes in a multilayered,

intertwined fashion [14, 15]. It encompasses, amongst others, thematic

descriptions addressing the subject matter depicted (scene categorisation, ob-

jects, events, etc.), media descriptions referring to low-level features and related

information such as the algorithms used for their extraction, respective param-

eters, etc., as well as structural descriptions addressing the decomposition of

content into constituent segments and the spatiotemporal configuration of these

segments. As in this chapter semantic annotation is investigated mostly with re-

spect to content retrieval and analysis tasks, aspects addressing concerns related

to authoring, access and privacy, and so forth, are treated only briefly.

Fig. 1. Multi-layer image semantics.

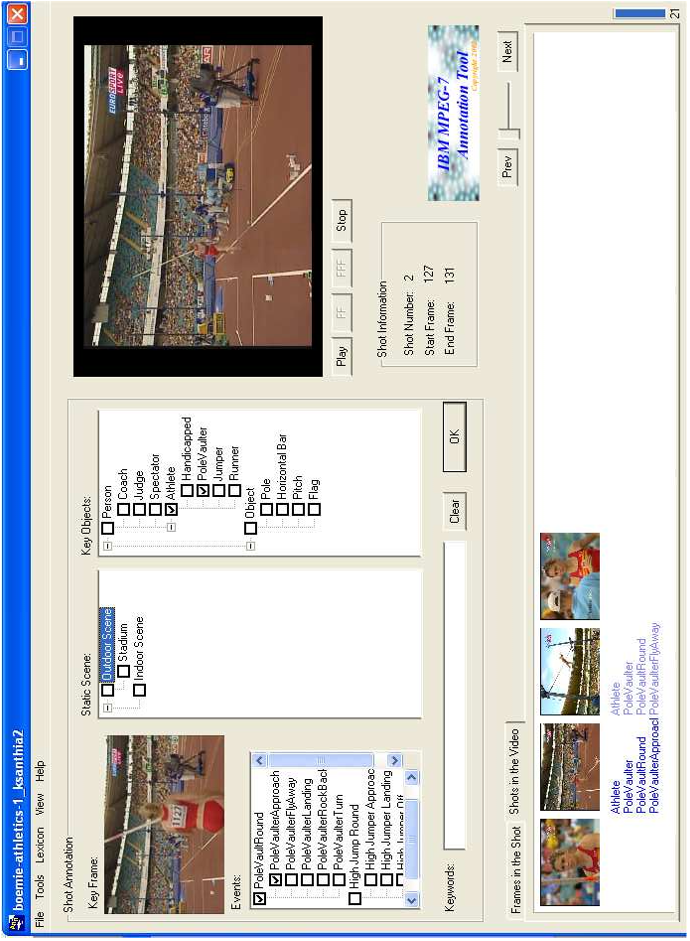

Figure 1 shows such an example, illustrating subject matter descriptions such

as “Sky” and “Pole Vaulter, Athlete”, structural descriptions such as the three

identified regions, the spatial configuration between two of them (i.e. region2

above region3), and the ScalableColour and RegionShape descriptor values extracted

for two regions. The different layers correspond to different annotation

dimensions and serve different purposes, further differentiated by the individual

application context. For example, for a search and retrieval service targeting

a device of limited resources (e.g. PDA, mobile phone), content management

becomes more effective if specific temporal parts of a video can be returned in

response to a query rather than the whole video asset, which would leave the user

with the cumbersome task of browsing through it until reaching the relevant parts

and assessing whether they satisfy her query.
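
The layers illustrated in Figure 1 can be pictured as a single record per image. The following is a minimal Python sketch, not the data model of any surveyed tool: the class names (`Region`, `ImageAnnotation`), the field names, the file name, the assignment of labels to particular regions and the descriptor values are all hypothetical, chosen only to mirror the example in the text.

```python
from dataclasses import dataclass, field


@dataclass
class Region:
    """One structural segment of an image (structural layer)."""
    region_id: str
    # Thematic labels attached to this segment (subject matter layer).
    labels: list[str] = field(default_factory=list)
    # Low-level descriptor values keyed by descriptor name (media layer).
    descriptors: dict[str, list[float]] = field(default_factory=dict)


@dataclass
class ImageAnnotation:
    """All annotation layers attached to a single image."""
    image: str
    regions: dict[str, Region] = field(default_factory=dict)
    # Spatial configuration as (subject, relation, object) triples.
    spatial_relations: list[tuple[str, str, str]] = field(default_factory=list)

    def regions_labelled(self, label: str) -> list[str]:
        """Return the ids of the regions carrying the given thematic label."""
        return [r.region_id for r in self.regions.values() if label in r.labels]


# Hypothetical values: which region carries which label, and the descriptor
# numbers, are placeholders rather than real extraction results.
annotation = ImageAnnotation(image="pole_vault.jpg")
annotation.regions["region1"] = Region(
    "region1", labels=["Sky"],
    descriptors={"ScalableColour": [0.1, 0.7, 0.2]})
annotation.regions["region2"] = Region(
    "region2", labels=["Pole Vaulter", "Athlete"],
    descriptors={"ScalableColour": [0.5, 0.3, 0.2]})
annotation.regions["region3"] = Region("region3")
annotation.spatial_relations.append(("region2", "above", "region3"))

print(annotation.regions_labelled("Athlete"))
```

Keeping the three layers in one record, but in separate fields, reflects the point made above: each layer can be queried on its own (e.g. all regions labelled "Athlete"), while the links between layers remain explicit.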

The aforementioned considerations intertwine, establishing a number of di-

mensions and corresponding criteria along which image and video annotation

can be characterised. As such, interoperability, explicit semantics in terms of

amenability to automated processing, and reuse, apply both to all types of description

dimensions and to their interlinking, and not only to subject matter descriptions,

as is the common case for textual content resources.

In the following, we describe the criteria along which we overview the different

annotation tools in order to assess them with respect to the aforementioned

considerations. Criteria addressing concerns of similar nature have been grouped

together, resulting in three categories.

2.1 Input & Output

This category includes criteria regarding the way the tool interacts in terms of

requested / supported input and the output produced.

– Annotation Vocabulary. Refers to whether the annotation is performed ac-

cording to a predefined set of terms (e.g. lexicon / thesaurus, taxonomy,

ontology) or if it is provided by the user in the form of keywords and free

text. In the case of a controlled vocabulary, we differentiate between the case

where the user has to explicitly provide it (e.g. by uploading a specific ontology)

and the case where it is provided by the tool as a built-in; the formalisms supported

for the representation of the vocabulary constitute a further attribute. We

note that annotation vocabularies may refer not only to subject matter descriptions,

but also to media and structural descriptions. Naturally, the

more formal and well-defined the semantics of the annotation vocabulary,

the more opportunities for achieving interoperable and machine understand-

able annotations.

– Metadata Format. Considers the representation format in which the pro-

duced annotations are expressed. Naturally, the output format is strongly

related to the supported annotation vocabularies. As will be shown in the

sequel though, where the individual tools are described, there is not necessarily

a strict correspondence (e.g. a tool may use an RDFS⁶ or OWL⁷

ontology as the subject matter vocabulary, and yet output annotations in

RDF⁸). The format is as significant as the annotation vocabulary

with respect to the interoperability and sharing of the annotations.

– Content Type. Refers to the supported image/video formats, e.g. jpg, png,

mpeg, etc.
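
To make the distinction between annotation vocabulary and metadata format more concrete, the sketch below checks a term against a controlled vocabulary and then writes the resulting annotation as RDF in N-Triples syntax. It is illustrative only: the namespace `http://example.org/sports#`, the property `depicts`, the vocabulary terms and the image URI are hypothetical, and an actual tool would more likely rely on an RDF library than on string formatting.

```python
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
ONTO = "http://example.org/sports#"          # hypothetical ontology namespace
IMAGE = "http://example.org/images/42.jpg"   # hypothetical image identifier

# Controlled vocabulary: the only terms the annotator is allowed to use.
VOCABULARY = {"Athlete", "PoleVaulter", "Sky"}


def annotate(image_uri: str, region_id: str, term: str) -> list[tuple[str, str, str]]:
    """Return RDF triples stating that a region of the image depicts `term`."""
    if term not in VOCABULARY:
        raise ValueError(f"'{term}' is not part of the controlled vocabulary")
    region_uri = f"{image_uri}#{region_id}"
    instance_uri = f"{region_uri}-{term}"
    return [
        (region_uri, ONTO + "depicts", instance_uri),
        (instance_uri, RDF_TYPE, ONTO + term),
    ]


def to_ntriples(triples: list[tuple[str, str, str]]) -> str:
    """Serialise URI-only triples as N-Triples, one statement per line."""
    return "\n".join(f"<{s}> <{p}> <{o}> ." for s, p, o in triples)


triples = annotate(IMAGE, "region2", "PoleVaulter")
print(to_ntriples(triples))
```

Here the vocabulary check and the serialisation are deliberately separate functions: the same validated triples could be written in another output format without touching the vocabulary, which is the lack of strict correspondence noted above.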

2.2 Annotation Level

This category addresses attributes of the annotations per se. Naturally, the types

of information addressed by the descriptions issue from the intended context of

usage. Subject matter annotations, i.e. thematic descriptions with respect to the

depicted objects and events, are indispensable for any application scenario ad-

dressing content-based retrieval at the level of meaning conveyed. Such retrieval

may address concept-based queries or queries involving relations between con-

cepts, entailing respective annotation specifications. Structural information is

crucial for services where it is important to know the exact content parts associ-

ated with specific thematic descriptions, as for example in the case of semantic

transcoding or enhanced retrieval and presentation, where the parts of interest

can be indicated in an elaborated manner. Analogously, annotations intended for

⁶ http://www.w3.org/TR/rdf-schema/

⁷ http://www.w3.org/TR/owl-features/

⁸ http://www.w3.org/RDF/