The best local monomial approximation $\tilde{g}$ of $g$ near $x_0$ can be easily verified [4].


Detailed discussion of GP can be found in the following books, book chapters, and survey articles: [52, 133, 10, 6, 51, 103, 54, 20].




The main trick to solving a GP efficiently is to convert it to a nonlinear but convex optimization problem, i.e., a problem with convex objective and inequality constraint functions, and linear equality constraints.
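A minimal sketch of that conversion, using a hypothetical coefficient vector `c` and exponent matrix `A`: with $y_i = \log x_i$, each posynomial term $c_k x^{a_k}$ becomes $\exp(a_k^T y + \log c_k)$, so $\log f(e^y)$ is a log-sum-exp of affine functions of $y$, which is convex. (A monomial equality constraint $c\,x^{a} = 1$ likewise becomes the linear constraint $a^T y + \log c = 0$.)

```python
import numpy as np

# Hypothetical posynomial f(x) = sum_k c_k * prod_i x_i^{A[k, i]}, with c_k > 0, x > 0.
c = np.array([1.0, 2.0, 0.5])
A = np.array([[1.0, -0.5],
              [0.0, 2.0],
              [-1.0, 1.0]])

def posynomial(x):
    """Evaluate f(x) directly in the original (positive) variables x."""
    return float(np.sum(c * np.prod(x ** A, axis=1)))

def log_posynomial(y):
    """F(y) = log f(e^y) = log sum_k exp(a_k^T y + log c_k), a log-sum-exp of affine terms."""
    z = A @ y + np.log(c)
    zmax = z.max()  # shift for numerical stability
    return float(zmax + np.log(np.sum(np.exp(z - zmax))))

# The two forms agree after the change of variables y = log x.
x = np.array([0.7, 1.3])
print(log_posynomial(np.log(x)), np.log(posynomial(x)))
```

Convexity of `log_posynomial` can be spot-checked with the midpoint inequality on random pairs of points, as in a quick numerical test.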

One useful extension of monomial fitting is to include a constant offset, i.e., to fit the data $(x^{(i)}, f^{(i)})$ to a model of the form $f(x) = b + c x_1^{a_1} \cdots x_n^{a_n}$, where $b \geq 0$ is another model parameter.
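A minimal sketch of both fits, under stated assumptions. Plain monomial fitting is a linear least-squares problem in log space ($\log f \approx \log c + a^T \log x$). For the offset model $b + c x_1^{a_1} \cdots x_n^{a_n}$, the helper `fit_offset_monomial` (hypothetical, not the authors' method) simply scans a grid of offsets $b \in [0, \min_i f^{(i)})$, fits a monomial to $f - b$ for each, and keeps the offset with the smallest sum of squared relative errors.

```python
import numpy as np

def fit_monomial(X, f):
    """Fit f ~ c * prod_i x_i^{a_i} by linear least squares on log f = log c + a^T log x.

    X: (m, n) array of positive data points; f: (m,) positive values. Returns (c, a).
    """
    m = X.shape[0]
    M = np.hstack([np.ones((m, 1)), np.log(X)])
    theta, *_ = np.linalg.lstsq(M, np.log(f), rcond=None)
    return float(np.exp(theta[0])), theta[1:]

def eval_offset_monomial(X, b, c, a):
    """Evaluate b + c * prod_i x_i^{a_i} at every row of X."""
    return b + c * np.prod(X ** a, axis=1)

def fit_offset_monomial(X, f, num_b=200):
    """Heuristic fit of f ~ b + c * prod x_i^{a_i} with b >= 0 (grid search over b).

    Assumption of this sketch: b is chosen from a grid in [0, min f), so f - b > 0
    and each inner fit stays a log-space linear least-squares problem.
    """
    best = None
    for b in np.linspace(0.0, f.min(), num_b, endpoint=False):
        c, a = fit_monomial(X, f - b)
        rel_err = (eval_offset_monomial(X, b, c, a) - f) / f
        sse = float(np.sum(rel_err ** 2))
        if best is None or sse < best[0]:
            best = (sse, b, c, a)
    return best[1:]  # (b, c, a)
```

Because $b = 0$ is on the grid, the offset fit is never worse (in relative squared error) than the plain monomial fit on the same data.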

The wire segment resistance and capacitance are both posynomial functions of the wire widths $w_i$, which are the design variables.
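To make "posynomial in $w$" concrete, here is a minimal sketch assuming the common segment model $R(w) = \alpha l / w$ and $C(w) = l(\beta w + \gamma)$, with made-up constants $\alpha, \beta, \gamma, l$ (none of these values come from the text). A one-variable posynomial is stored as `{exponent: positive coefficient}`, and the product $R \cdot C$, the kind of term that shows up in RC delay expressions, is again a posynomial.

```python
from collections import defaultdict

def posy_mul(p, q):
    """Multiply two one-variable posynomials {exponent: coeff}: add exponents, multiply coeffs."""
    out = defaultdict(float)
    for ep, cp in p.items():
        for eq, cq in q.items():
            out[ep + eq] += cp * cq
    return dict(out)

def posy_eval(p, w):
    """Evaluate sum_k c_k * w^{e_k} at w > 0."""
    return sum(c * w ** e for e, c in p.items())

# Assumed segment model with hypothetical constants (illustration only).
alpha, beta, gamma, l = 0.1, 2.0, 0.5, 10.0
R = {-1: alpha * l}                # R(w) = alpha*l / w        (a monomial)
C = {1: beta * l, 0: gamma * l}    # C(w) = l*(beta*w + gamma) (a posynomial)
RC = posy_mul(R, C)                # alpha*beta*l^2 + alpha*gamma*l^2 / w
print(RC)
```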

Another common method for finding the trade-off curve (or surface) of the objective and one or more constraints is the weighted sum method.
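A minimal sketch of the weighted sum method on a hypothetical two-objective toy problem, $f_1(x) = x$ and $f_2(x) = 1/x$ with $x > 0$. For each weight $\lambda > 0$, minimizing $f_1 + \lambda f_2$ (still a posynomial) gives one point on the trade-off curve; here the minimization is done by golden-section search over $y = \log x$, where the objective is convex. Analytically the minimizer is $x^\star = \sqrt{\lambda}$, so every point satisfies $f_1 f_2 = 1$.

```python
import math

def golden_min(g, lo, hi, tol=1e-10):
    """Golden-section search for the minimizer of a unimodal function g on [lo, hi]."""
    invphi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c, d = b - invphi * (b - a), a + invphi * (b - a)
    while b - a > tol:
        if g(c) < g(d):
            b, d = d, c                     # minimizer lies in [a, d]
            c = b - invphi * (b - a)
        else:
            a, c = c, d                     # minimizer lies in [c, b]
            d = a + invphi * (b - a)
    return (a + b) / 2.0

def weighted_sum_point(lam):
    """Minimize f1(x) + lam * f2(x) with f1 = x, f2 = 1/x, searching over y = log x."""
    y = golden_min(lambda y: math.exp(y) + lam * math.exp(-y), -20.0, 20.0)
    x = math.exp(y)
    return x, 1.0 / x                       # (f1, f2) at the weighted-sum optimum

lams = [0.25, 1.0, 4.0]
points = [weighted_sum_point(lam) for lam in lams]
print(points)  # approximately (0.5, 2), (1, 1), (2, 0.5)
```

Sweeping $\lambda$ over a range traces out the (convex part of the) trade-off curve.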

The constraint that the truss should be strong enough to carry the load F1 means that the stress caused by the external force F1 must not exceed a given maximum value.

Applications of geometric programming in other fields include:

- Chemical engineering (Clasen 1984; Salomone and Iribarren 1993; Salomone et al.

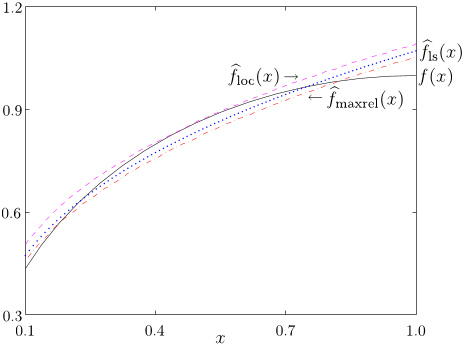

The authors illustrate posynomial fitting using the same data points as those used in the max-monomial fitting example given in Sect. 8.4. The authors used a Gauss-Newton method to find $K$-term posynomial approximations $\hat{h}_K(x)$, for $K = 3, 5, 7$, which (locally, at least) minimize the sum of the squares of the relative errors.
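The authors' implementation is not given here; the block below is a minimal sketch of a Gauss-Newton iteration for a one-variable $K$-term posynomial fit $h(x) = \sum_k c_k x^{a_k}$ that minimizes $\sum_i (h(x^{(i)})/f^{(i)} - 1)^2$. Two choices are assumptions of this sketch: coefficients are parameterized as $c_k = e^{\beta_k}$ to keep them positive, and a backtracking line search is added so each accepted step decreases the objective. The synthetic data and starting point are hypothetical.

```python
import numpy as np

def residuals_and_jacobian(theta, u, f, K):
    """theta = [beta_1..beta_K, a_1..a_K]; u = log x. Returns relative errors r and dr/dtheta."""
    beta, a = theta[:K], theta[K:]
    T = np.exp(beta[None, :] + np.outer(u, a))     # T[i, k] = c_k * x_i^{a_k}
    r = T.sum(axis=1) / f - 1.0                     # relative errors h(x_i)/f_i - 1
    J = np.hstack([T / f[:, None],                  # dr_i / dbeta_k
                   T * u[:, None] / f[:, None]])    # dr_i / da_k
    return r, J

def fit_posynomial_gn(x, f, K, theta0, iters=100):
    """Gauss-Newton with backtracking for min_theta sum_i (h(x_i)/f_i - 1)^2."""
    u = np.log(x)
    theta = np.asarray(theta0, dtype=float)
    r, J = residuals_and_jacobian(theta, u, f, K)
    loss = float(r @ r)
    for _ in range(iters):
        step, *_ = np.linalg.lstsq(J, -r, rcond=None)   # Gauss-Newton direction
        t = 1.0
        while t > 1e-10:
            r_new, J_new = residuals_and_jacobian(theta + t * step, u, f, K)
            loss_new = float(r_new @ r_new)
            if loss_new < loss:
                break
            t *= 0.5
        else:
            break                                      # no decrease found: stop
        theta, r, J, loss = theta + t * step, r_new, J_new, loss_new
    return theta, loss

# Usage on synthetic data from a known 2-term posynomial (hypothetical example).
x = np.linspace(0.5, 3.0, 40)
f = 2.0 * x ** 0.5 + 0.5 / x
theta0 = np.array([0.0, 0.0, 1.0, -0.5])              # c = (1, 1), a = (1, -0.5)
theta, loss = fit_posynomial_gn(x, f, 2, theta0)
print("c =", np.exp(theta[:2]), "a =", theta[2:], "loss =", loss)
```

Like the method described in the text, this only finds a local minimum; the result depends on the starting exponents.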

The optimal trade-off curve (or surface) can be found by solving the perturbed GP (12) for many values of the parameter (or parameters) to be varied.
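A minimal sketch of this sweep on a hypothetical toy instance of a perturbed GP: minimize $1/(xy)$ subject to $x + y \leq u$, for several values of the parameter $u$. The objective decreases in both variables, so the constraint is tight at the optimum; substituting $y = u - x$ leaves a one-dimensional convex problem solved here by golden-section search. By the AM-GM inequality the optimal value is $p^\star(u) = 4/u^2$.

```python
import math

def golden_min(g, lo, hi, tol=1e-10):
    """Golden-section search for the minimizer of a unimodal function g on [lo, hi]."""
    invphi = (math.sqrt(5.0) - 1.0) / 2.0
    a, b = lo, hi
    c, d = b - invphi * (b - a), a + invphi * (b - a)
    while b - a > tol:
        if g(c) < g(d):
            b, d = d, c
            c = b - invphi * (b - a)
        else:
            a, c = c, d
            d = a + invphi * (b - a)
    return (a + b) / 2.0

def perturbed_optimal_value(u):
    """Optimal value of: minimize 1/(x*y) s.t. x + y <= u, x, y > 0 (toy example)."""
    def obj(x):
        return 1.0 / (x * (u - x))       # constraint is tight: y = u - x
    eps = 1e-9 * u
    return obj(golden_min(obj, eps, u - eps))

# Trade-off curve: optimal value as a function of the constraint level u.
curve = [(u, perturbed_optimal_value(u)) for u in (1.0, 2.0, 4.0, 8.0)]
print(curve)
```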

This analysis suggests that the authors can handle composition of a generalized posynomial with any function whose series expansion has no negative coefficients, at least approximately, by truncating the series.
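As a concrete instance, take $\exp(p(x))$ for a hypothetical posynomial $p(x) = x + 1/x$. Since $e^t = \sum_k t^k/k!$ has only positive coefficients, the truncation $\sum_{k=0}^{K} p(x)^k/k!$ is a positive combination of powers of a posynomial, hence itself a posynomial. The sketch below expands it symbolically (one variable, `{exponent: coefficient}` form) and evaluates it.

```python
import math
from collections import defaultdict

def posy_mul(p, q):
    """Multiply two one-variable posynomials {exponent: coeff}."""
    out = defaultdict(float)
    for ep, cp in p.items():
        for eq, cq in q.items():
            out[ep + eq] += cp * cq
    return dict(out)

def posy_eval(p, x):
    """Evaluate sum_k c_k * x^{e_k} at x > 0."""
    return sum(c * x ** e for e, c in p.items())

def truncated_exp_posy(p, K):
    """Return sum_{k=0}^{K} p^k / k! expanded as a posynomial {exponent: coefficient}."""
    result = defaultdict(float)
    power = {0: 1.0}                      # p^0 = 1
    for k in range(K + 1):
        for e, c in power.items():
            result[e] += c / math.factorial(k)
        power = posy_mul(power, p)
    return dict(result)

p = {1: 1.0, -1: 1.0}                     # hypothetical example: p(x) = x + 1/x
approx = truncated_exp_posy(p, 8)
x = 1.5
print(posy_eval(approx, x), math.exp(posy_eval(p, x)))
```

Every coefficient of the expansion is positive, and the truncation underestimates $\exp(p(x))$ by the (positive) tail of the series, which shrinks as $K$ grows.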

This is a nonlinear least-squares problem, which can be solved (usually) using methods such as the Gauss-Newton method (Bertsekas 1999; Luenberger 1984; Nocedal and Wright 1999).

8.2 Local monomial approximation

The authors consider the problem of finding a monomial approximation of a differentiable positive function $f$ near a point $x$ (with $x_i > 0$).
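A minimal sketch of the standard first-order construction: match $f$ and its gradient at $x_0$ in log-log coordinates, which gives $\hat f(x) = c \prod_i x_i^{a_i}$ with $a_i = \frac{x_{0,i}}{f(x_0)} \frac{\partial f}{\partial x_i}(x_0)$ and $c = f(x_0) / \prod_i x_{0,i}^{a_i}$. Estimating the gradient with central differences (step `h`) is an assumption of this sketch; an analytic gradient works just as well.

```python
import numpy as np

def local_monomial(f, x0, h=1e-6):
    """Return (c, a) so that c * prod_i x_i^{a_i} matches f and its gradient at x0 > 0.

    a_i = (x0_i / f(x0)) * df/dx_i(x0); gradient estimated by central differences.
    """
    x0 = np.asarray(x0, dtype=float)
    f0 = f(x0)
    grad = np.empty_like(x0)
    for i in range(x0.size):
        e = np.zeros_like(x0)
        e[i] = h * x0[i]                    # relative step in coordinate i
        grad[i] = (f(x0 + e) - f(x0 - e)) / (2.0 * e[i])
    a = x0 * grad / f0                      # exponents: d log f / d log x_i
    c = f0 / np.prod(x0 ** a)               # coefficient so that f_hat(x0) = f(x0)
    return c, a

# Example: f(x) = x1 + x2^2 + 1 near x0 = (1, 2), where f(x0) = 6 and grad = (1, 4).
c, a = local_monomial(lambda x: x[0] + x[1] ** 2 + 1.0, [1.0, 2.0])
print(c, a)   # a is approximately (1/6, 4/3)
```

If $f$ is itself a monomial, the construction recovers it exactly (up to finite-difference error).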

The authors first describe the method for the case with only one generalized posynomial equality constraint, since it readily generalizes to the case of multiple generalized posynomial equality constraints.

The interesting part here is the converse for generalized posynomials, i.e., the observation that if $F(y) = \log f(e^{y})$ (the function $f$ expressed in log-log coordinates) can be approximated by a convex function, then $f$ can be approximated by a generalized posynomial.