...A survey by Aucouturier and Pachet (2003) describes a number of popular features for music similarity and classification, and research continues (e.g. Bello et al. (2005), Pampalk et al. (2005))....

...The three performance measures used here are Precision (P), the ratio of hits to detected changes; Recall (R), the ratio of hits to transcribed changes; and the F-measure (F), which combines the two (see equation 5) [1]....
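The three measures can be sketched in a few lines. The excerpt does not reproduce equation 5, so the F-measure below assumes the common harmonic-mean form F = 2PR / (P + R); the function name and the zero-division handling are illustrative choices, not taken from the source.

```python
def precision_recall_f(hits, detected, transcribed):
    """Return (P, R, F) from raw counts.

    hits        -- detected changes that match a transcribed change
    detected    -- total number of detected changes
    transcribed -- total number of transcribed (ground-truth) changes
    """
    precision = hits / detected if detected else 0.0
    recall = hits / transcribed if transcribed else 0.0
    total = precision + recall
    # Assumed form of the F-measure: harmonic mean of P and R.
    f_measure = 2 * precision * recall / total if total else 0.0
    return precision, recall, f_measure


# Example: 8 hits out of 10 detections, against 16 transcribed changes.
p, r, f = precision_recall_f(hits=8, detected=10, transcribed=16)
```

With these counts, precision is 0.8 and recall is 0.5; the harmonic mean penalises the imbalance between the two.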

...The algorithms described in [30] are concerned with detecting this change of sign....

...1) Model-Based Change Point Detection Methods: A well-known approach is based on the sequential probability ratio test [30]....

...Given the importance of musical events, it is clear that identifying and characterizing these events is an important aspect of this process....

...However, we will focus only on two processes that are consistently mentioned in the literature, and that appear to be of particular relevance to onset detection schemes, especially when simple reduction methods are implemented: separating the signal into multiple frequency bands, and…...

...By implementing a multiple-band scheme, the approach effectively avoids the constraints imposed by the use of a single reduction method, while having different time resolutions for different frequency bands....

The goal of this paper is to review, categorize, and compare some of the most commonly used techniques for onset detection, and to present possible enhancements. The authors discuss methods based on the use of explicitly predefined signal features (the signal's amplitude envelope, spectral magnitudes and phases, and time-frequency representations) and methods based on probabilistic signal models (model-based change point detection, surprise signals, etc.). Using a choice of test cases, the authors provide some guidelines for choosing the appropriate method for a given application.

For nontrivial sounds, onset detection schemes benefit from using richer representations of the signal (e.g., a time-frequency representation).

Under model M1, the expectation is given by (15). If the authors assume that the signal initially follows model M0, and switches to model M1 at some unknown time, then the short-time average of the log-likelihood ratio will change sign.
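The sign change can be illustrated with a small simulation. This is only a sketch under stated assumptions: the two models are taken to be zero-mean Gaussians that differ in standard deviation, and the names `model0`, `model1`, the window length, and the change position are all hypothetical choices made for the example, not values from the paper.

```python
import math
import random


def gaussian_logpdf(x, mean, std):
    """Log-density of a normal distribution at x."""
    return -0.5 * math.log(2 * math.pi * std ** 2) - (x - mean) ** 2 / (2 * std ** 2)


def log_likelihood_ratio(x, model0, model1):
    """log p1(x) - log p0(x) for one sample; each model is a (mean, std) pair."""
    return gaussian_logpdf(x, *model1) - gaussian_logpdf(x, *model0)


def short_time_average(values, window):
    """Running mean over the last `window` values (fewer at the start)."""
    averages, running = [], 0.0
    for i, v in enumerate(values):
        running += v
        if i >= window:
            running -= values[i - window]
        averages.append(running / min(i + 1, window))
    return averages


random.seed(0)
model0 = (0.0, 1.0)   # assumed "before" model: quiet, unit variance
model1 = (0.0, 3.0)   # assumed "after" model: louder, larger variance
change, window = 500, 100

signal = [random.gauss(*model0) for _ in range(change)]
signal += [random.gauss(*model1) for _ in range(500)]

llr = [log_likelihood_ratio(x, model0, model1) for x in signal]
avg = short_time_average(llr, window)

# First index (after the window has filled) where the average turns positive.
first_positive = next((i for i in range(window, len(avg)) if avg[i] > 0), None)
```

Before the change the averaged ratio stays negative, because the data are better explained by `model0`; shortly after the change it turns positive, and `first_positive` gives a rough estimate of the change point.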

The scheme takes advantage of the correlations across scales of the coefficients: large wavelet coefficients, related to transients in the signal, are not evenly spread within the dyadic plane but rather form “structures”.

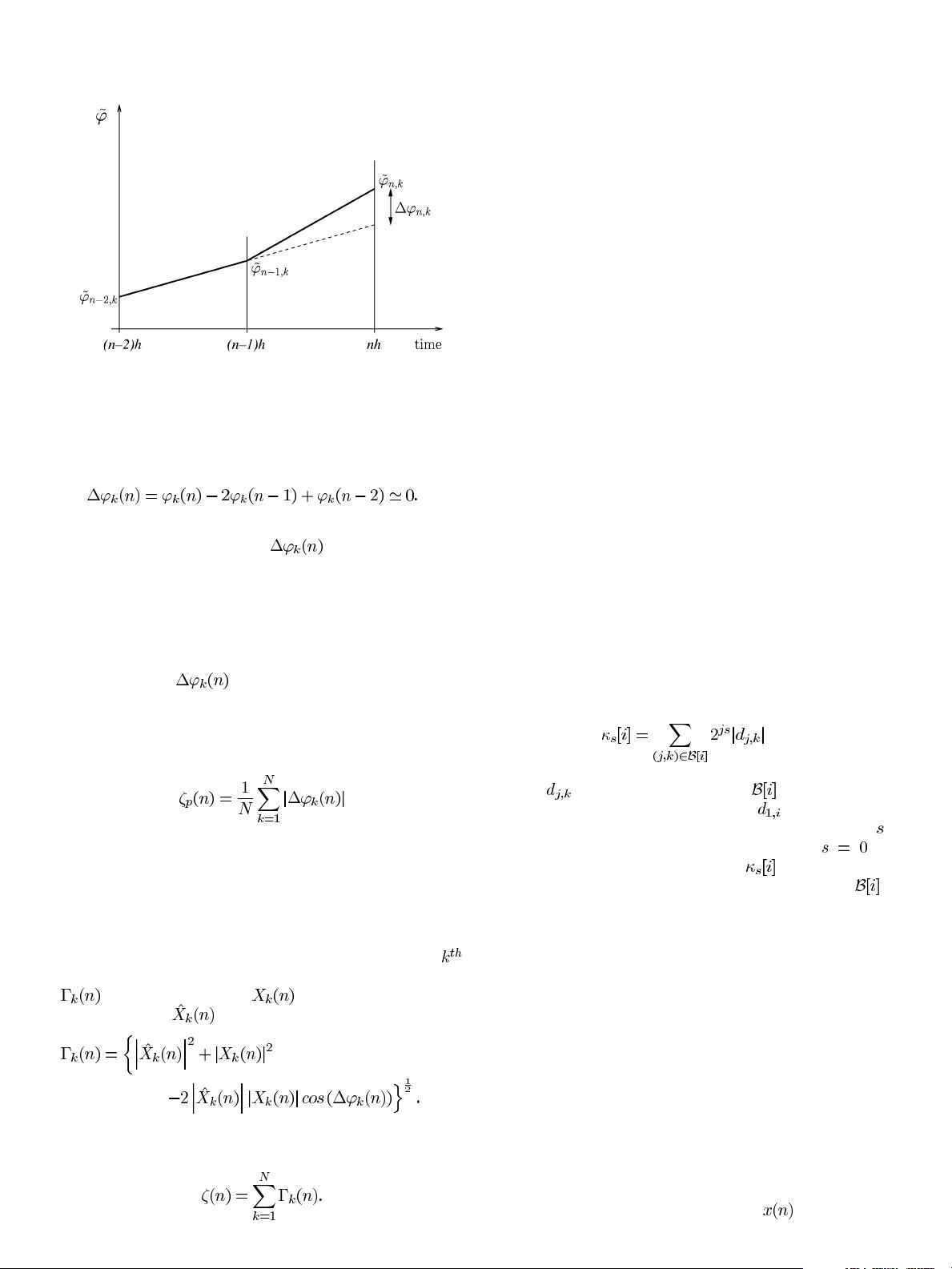

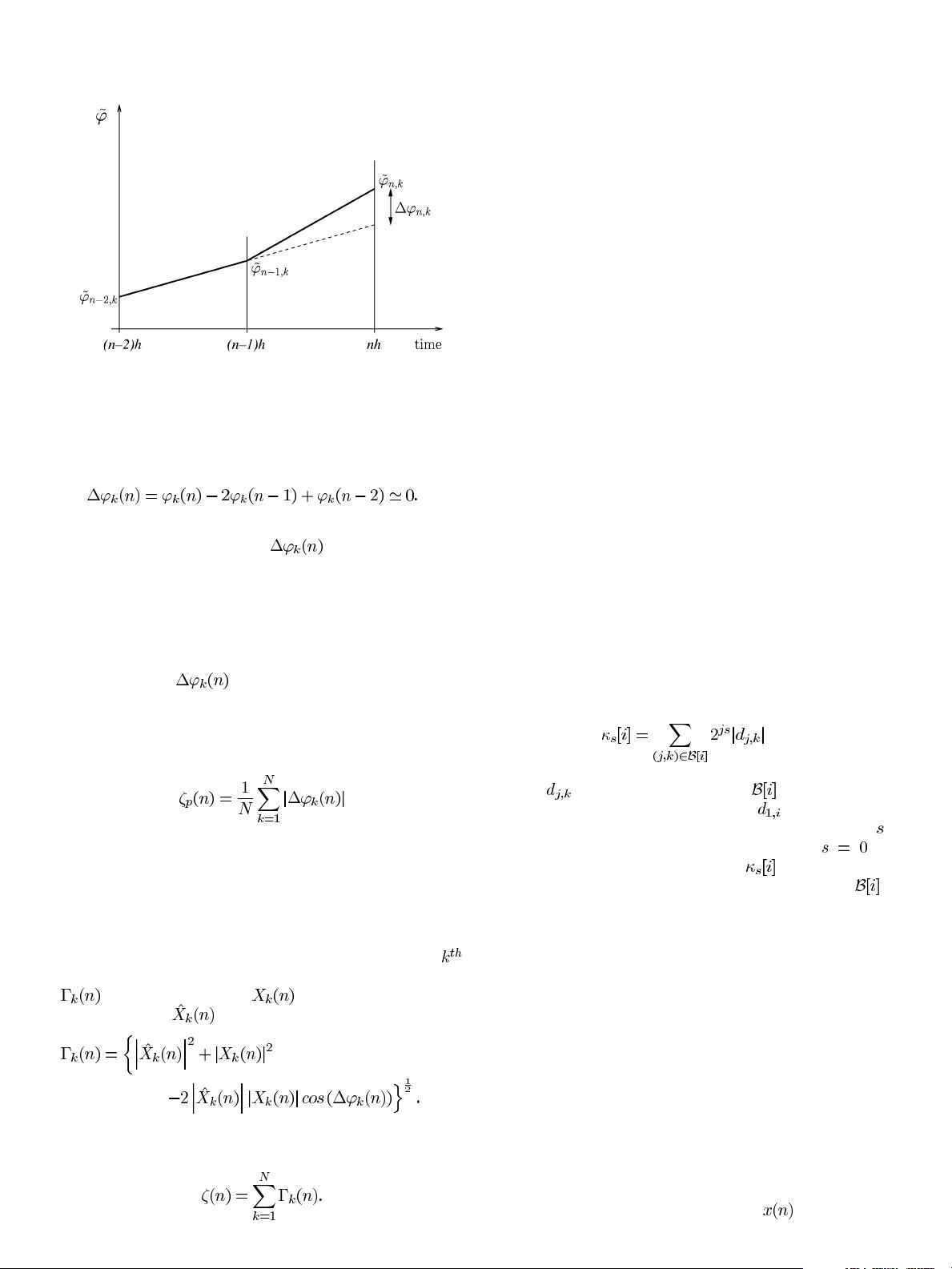

Fig. 2 illustrates the procedure employed in the majority of onset detection algorithms: from the original audio signal, which can be pre-processed to improve the performance of subsequent stages, a detection function is derived at a lower sampling rate, to which a peak-picking algorithm is applied to locate the onsets.
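The flow described here (signal, then a detection function at a lower sampling rate, then peak-picking) can be sketched as follows. The sketch skips the optional pre-processing stage and assumes an energy-rise detection function and a fixed threshold, neither of which is prescribed by the text; the function names, frame sizes, and the synthetic test signal are illustrative only.

```python
import math


def detection_function(signal, frame_size, hop_size):
    """Half-wave rectified rise in frame energy, one value per hop.

    Because one value is produced every `hop_size` samples, the output
    is sampled at a lower rate than the audio signal.
    """
    energies = []
    for start in range(0, len(signal) - frame_size + 1, hop_size):
        frame = signal[start:start + frame_size]
        energies.append(sum(x * x for x in frame) / frame_size)
    return [0.0] + [max(0.0, b - a) for a, b in zip(energies, energies[1:])]


def pick_peaks(values, threshold):
    """Indices of local maxima that exceed a fixed threshold."""
    return [n for n in range(1, len(values) - 1)
            if values[n] > threshold
            and values[n] >= values[n - 1]
            and values[n] > values[n + 1]]


# Synthetic test signal: two 440 Hz bursts starting at 0.25 s and 0.75 s.
sample_rate = 8000


def burst(n, amplitude):
    return [amplitude * math.sin(2 * math.pi * 440 * i / sample_rate) for i in range(n)]


signal = [0.0] * 2000 + burst(2000, 0.8) + [0.0] * 2000 + burst(2000, 0.8)

frame_size, hop_size = 256, 128
df = detection_function(signal, frame_size, hop_size)
onset_frames = pick_peaks(df, threshold=0.05)
onset_times = [f * hop_size / sample_rate for f in onset_frames]
```

Each onset time is reported at the resolution of the hop size (16 ms here), which is the price of computing the detection function at a reduced rate.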

An alternative to the analysis of the temporal envelope of the signal and of Fourier spectral coefficients is the use of time-scale or time-frequency representations (TFR).

A more general approach based on changes in the spectrum is to formulate the detection function as a “distance” between successive short-term Fourier spectra, treating them as points in an N-dimensional space.
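As a rough illustration of this idea, the sketch below computes the Euclidean distance between the magnitude spectra of consecutive frames. The choice of the Euclidean norm, the naive DFT, the absence of a window function, and all names and sizes are assumptions made to keep the example self-contained; the text does not fix a particular distance.

```python
import cmath
import math


def magnitude_spectrum(frame):
    """Magnitudes of the non-negative-frequency DFT bins (naive O(n^2) DFT)."""
    n = len(frame)
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * i / n)
                    for i, x in enumerate(frame)))
            for k in range(n // 2 + 1)]


def spectral_distance(signal, frame_size, hop_size):
    """Euclidean distance between magnitude spectra of successive frames."""
    spectra = [magnitude_spectrum(signal[s:s + frame_size])
               for s in range(0, len(signal) - frame_size + 1, hop_size)]
    return [math.sqrt(sum((b - a) ** 2 for a, b in zip(prev, cur)))
            for prev, cur in zip(spectra, spectra[1:])]


# Synthetic example: a 500 Hz tone that switches to 1500 Hz at sample 512.
sample_rate = 8000
signal = [math.sin(2 * math.pi * (500 if i < 512 else 1500) * i / sample_rate)
          for i in range(1024)]

dist = spectral_distance(signal, frame_size=64, hop_size=32)
peak_index = max(range(len(dist)), key=dist.__getitem__)
```

Both tones sit exactly on DFT bins for this frame size, so the distance is essentially zero while a tone is steady and peaks at the frame pair that straddles the change. On real audio a window function would normally be applied before the transform.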

All peak-picking parameters (e.g., the filter’s cutoff frequency) were held constant, except for the threshold, which was varied to trace out the performance curve.
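A threshold sweep of this kind might look like the sketch below. It assumes that candidate peaks are available as (time, height) pairs and that a detection counts as correct when it falls within a tolerance of an unmatched reference onset; the 50 ms tolerance, the matching rule, and all names and numbers are hypothetical and not taken from the paper.

```python
def count_hits(detected, reference, tolerance):
    """Count detections within `tolerance` of a not-yet-matched reference onset."""
    unmatched = list(reference)
    hits = 0
    for t in detected:
        match = next((r for r in unmatched if abs(r - t) <= tolerance), None)
        if match is not None:
            unmatched.remove(match)
            hits += 1
    return hits


def performance_curve(peaks, reference, thresholds, tolerance=0.05):
    """For each threshold return (threshold, hits, false positives, misses).

    Only the threshold varies; the candidate peaks and tolerance stay fixed.
    """
    curve = []
    for threshold in thresholds:
        detected = [t for t, height in peaks if height > threshold]
        hits = count_hits(detected, reference, tolerance)
        curve.append((threshold, hits, len(detected) - hits, len(reference) - hits))
    return curve


# Made-up candidate peaks (time in seconds, peak height) and reference onsets.
peaks = [(0.10, 0.9), (0.32, 0.2), (0.50, 0.7), (0.81, 0.4), (0.95, 0.1)]
reference = [0.11, 0.52, 0.80]

curve = performance_curve(peaks, reference, thresholds=[0.05, 0.3, 0.6, 0.8])
```

Low thresholds admit spurious peaks (false positives), while high thresholds discard true onsets (misses); plotting one against the other across thresholds gives the performance curve.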