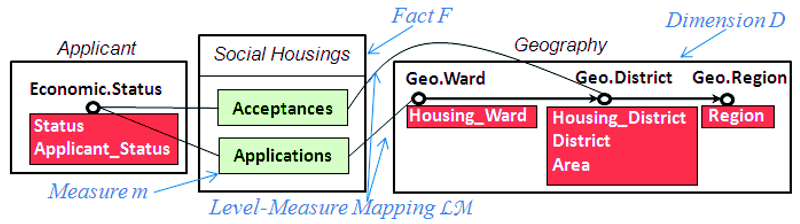

and status (cf. figure 1(b)). LOD1 follows a multidimensional structure expressed in the RDF Data Cube Vocabulary (QB)³. The QB format allows only one granularity per analysis axis, yet the decision-maker needs to aggregate data at multiple granularities. To discover more geographical granularities, the decision-maker looks into another dataset named LOD2. This dataset is managed by the UK Office for National Statistics⁴; it associates several areas (including districts) with their corresponding region (cf. figure 1(c)). Both LOD1 and LOD2 are real-world LOD datasets that can be accessed through SPARQL query endpoints⁵ ⁶.

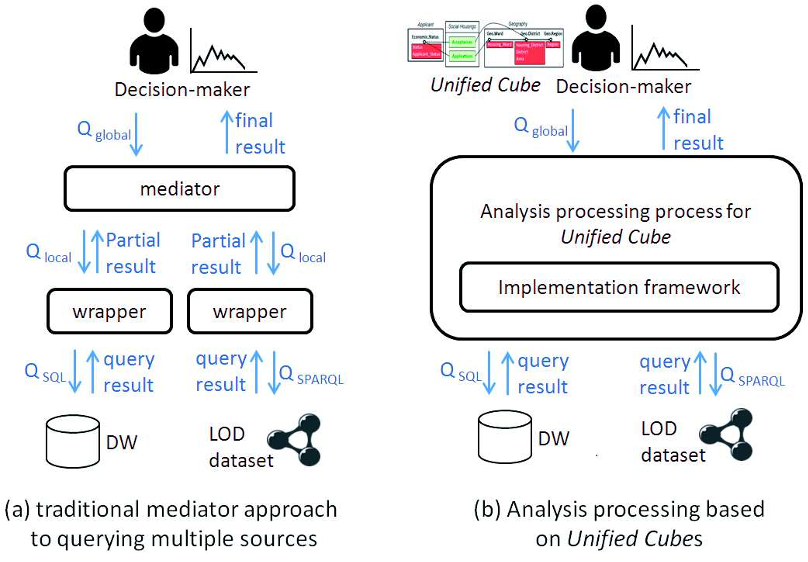

The above-mentioned warehoused data and LOD share similar multidimensional features, since both are organized according to analysis subjects and analysis axes. However, analyzing data scattered across several sources is difficult without a unified data representation. During analyses, decision-makers must search for useful information in several sources, and such analyses are inefficient because different sources may follow different schemas and contain different data instances. To address these issues, the decision-maker needs a business-oriented view unifying data from both the DW and the LOD datasets. She/he makes the following requests regarding the view:

• An analysis subject should include all related numeric indicators from different sources, even

though these indicators cannot be aggregated according to the same analytical granularities. To

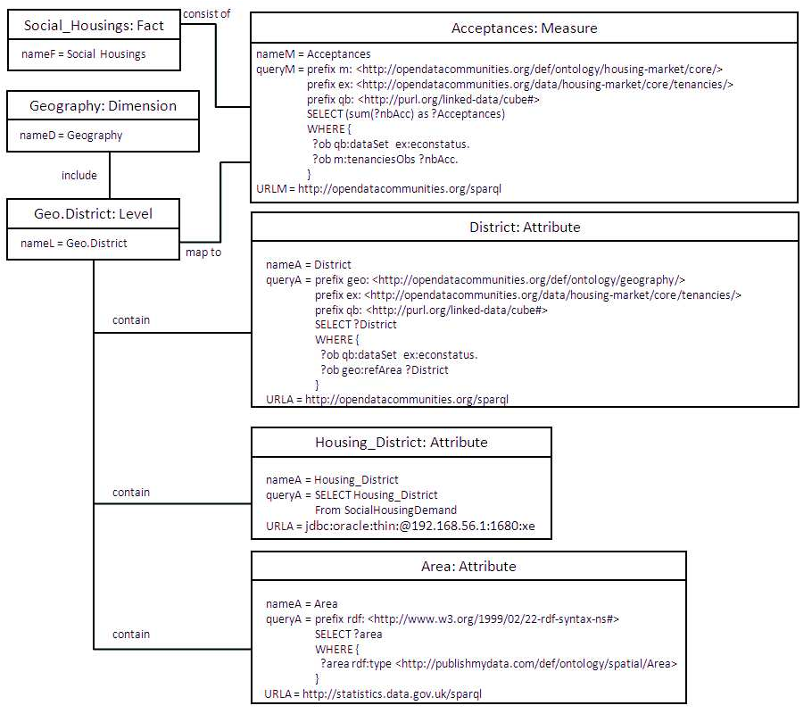

support real-time analyses, numeric indicators (e.g. Applications from the DW, Acceptances

from the LOD1 dataset) and their descriptive attributes (e.g. Housing

Ward, Housing District

and Applicant

Status from the DW, District and Status from the LOD1 dataset) at different

analytical granularities should be queried on-the-fly from sources;

• Analytical granularities related to the same analysis axis should be grouped together. For instance, the Housing Ward and Housing District granularities from the DW, the District granularity from the LOD1 dataset, and the Area and Region granularities from the LOD2 dataset should be merged into one analysis axis;

• Attributes describing the same analytical granularity should be grouped together, and the correlative relationships between instances of these attributes should be managed. For instance, the attribute Housing District from the DW, the attribute District from the LOD1 dataset and the attribute Area from the LOD2 dataset should all be included in one analytical granularity related to districts. The correlative instances “Birmingham” from the DW, “Birmingham E08000025” from the LOD1 dataset and “Birmingham xsd:string” from the LOD2 dataset should be associated together, since they all refer to the same district;

• Summarizable analytical granularities should be indicated for each numeric indicator. For instance, only the measure Applications from the DW can be aggregated according to the ward analytical granularity. The other measure, Acceptances from the LOD1 dataset, is only summarizable from the district analytical granularity upwards on the geographical analysis axis.
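The four requests above can be illustrated with a small in-memory model. The following Python sketch is hypothetical and is not the authors' implementation: the names `Granularity`, `Axis`, `Measure`, `is_summarizable` and `canonical`, the axis name `Geography`, the finest-to-coarsest ordering Ward, District, Region, and the LOD2 attribute name `Region` are all assumptions. It groups the district attributes of the three sources into one granularity, links the correlative “Birmingham” instances, and assumes that a measure summarizable at one granularity is also summarizable at every coarser granularity of the same axis.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Granularity:
    """One analytical granularity of a unified axis (hypothetical model)."""
    name: str
    # Source-specific attributes describing this granularity: source -> attribute name.
    attributes: dict[str, str] = field(default_factory=dict)
    # Correlative instances: canonical label -> {source: label used in that source}.
    instances: dict[str, dict[str, str]] = field(default_factory=dict)


@dataclass
class Axis:
    """An analysis axis whose granularities are listed from finest to coarsest."""
    name: str
    granularities: list[Granularity]

    def rank(self, granularity_name: str) -> int:
        # Position in the finest-to-coarsest ordering; raises ValueError if unknown.
        return [g.name for g in self.granularities].index(granularity_name)


@dataclass
class Measure:
    name: str
    source: str
    # Finest granularity of the axis at which the measure can be aggregated.
    finest: str


def is_summarizable(measure: Measure, axis: Axis, granularity_name: str) -> bool:
    # Assumption: summarizable at `finest` implies summarizable at every coarser level.
    return axis.rank(granularity_name) >= axis.rank(measure.finest)


def canonical(gran: Granularity, source: str, label: str) -> str | None:
    # Map a source-specific instance label to its canonical (correlated) instance.
    for key, per_source in gran.instances.items():
        if per_source.get(source) == label:
            return key
    return None


district = Granularity(
    name="District",
    attributes={"DW": "Housing District", "LOD1": "District", "LOD2": "Area"},
    instances={
        "Birmingham": {
            "DW": "Birmingham",
            "LOD1": "Birmingham E08000025",
            "LOD2": "Birmingham xsd:string",
        }
    },
)
geography = Axis(
    name="Geography",  # hypothetical axis name
    granularities=[
        Granularity("Ward", attributes={"DW": "Housing Ward"}),
        district,
        Granularity("Region", attributes={"LOD2": "Region"}),  # attribute name assumed
    ],
)
applications = Measure("Applications", source="DW", finest="Ward")
acceptances = Measure("Acceptances", source="LOD1", finest="District")

print(is_summarizable(applications, geography, "Ward"))     # True
print(is_summarizable(acceptances, geography, "Ward"))      # False
print(is_summarizable(acceptances, geography, "District"))  # True
```

Under these assumptions, Applications can be aggregated by ward while Acceptances cannot. In the view requested above, the instance mappings would be obtained by querying the sources on the fly rather than hard-coded as in this sketch.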

³ http://www.w3.org/TR/vocab-data-cube

⁴ https://www.ons.gov.uk/

⁵ http://opendatacommunities.org/sparql

⁶ http://statistics.data.gov.uk/sparql