A Verifiable High Level Data Path Synthesis Framework

Görker Alp Malazgirt∗, Ender Culha†, Alper Sen∗, Faik Baskaya†, Arda Yurdakul∗

∗Department of Computer Engineering

†Department of Electrical Engineering

Bogazici University

Bebek 34042, Istanbul, Turkey

{alp.malazgirt, ender.culha, alper.sen, baskaya, yurdakul}@boun.edu.tr

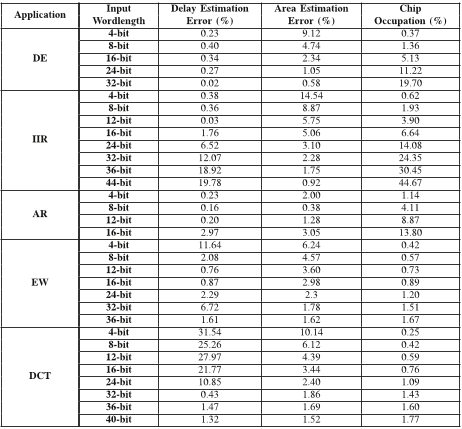

Abstract—This work presents a synthesis framework that

generates a formally verifiable RTL from a high level language.

We develop an estimation model for area, delay and power

metrics of arithmetic components for Xilinx Spartan 3 FPGA

family. Our estimation model works 300 times faster than Xilinx’s

toolchain with an average error of 6.57% for delay and 3.76% for

area estimations. Our framework extracts CDFGs from ANSI-C,

LRH(+) [1] and VHDL. CDFGs are verified using the symbolic

model checker NuSMV [2] with temporal logic properties. This

method guarantees detection of hardware redundancy and word-

length mismatch related bugs by static code checking.

I. INTRODUCTION

Current system designs aim to pack in as much capability as possible in order to meet application-specific demands. This has raised the importance of design automation and led to the emergence of technologies such as reconfigurable computing, which help cope with increasing complexity, both algorithmic and quantitative. As a result, FPGAs have become widely used for prototyping, designing and testing Digital Signal Processing (DSP) applications. However, the majority of DSP algorithm developers are not familiar with HDL design. They prefer to use high level programming languages (HLLs) to design, prototype and test their algorithms. This creates a need for synthesis tools that convert HLL into HDL with as little overhead as possible.

Short time-to-market conditions increase the importance of early estimation of the delay, area and power behavior of applications in hardware. Commercial tools such as those from Xilinx [3] and Altera [4] can estimate these metrics, but only by going through the steps of synthesis, placement and routing, which can take minutes to hours depending on the application.

The role of verification has been increasing in research on efficient reconfigurable architectures in different domains because a good design is not enough to guarantee a working system. For example, in the DSP domain, increasing complexity has been dealt with by switching from low level programming languages (assembly) to high level programming languages (C/C++, Java). High Level Synthesis (HLS) tools map an application description written in an HLL to the Register Transfer Level (RTL).

Fig. 1: Framework process flow

Our framework addresses the aforementioned challenges by generating RTL from HLLs (ANSI-C, LRH(+)) and verifying four properties; the set of properties can be extended. Our estimation models of area, delay

and power behavior of arithmetic components are used while

generating RTL. The estimation model that we present in

this work is for Xilinx Spartan 3 platform. A typical HLS

chain includes steps such as scheduling, resource allocation,

binding and register optimization. This paper covers only the generation of Golden-RTL from HLL and its verification; the toolchain implementation supports the remaining steps of the HLS chain as submodules, which can be integrated into the system as future work. The process flow we

apply is shown in Figure 1. Input to the framework is an

HLL, either ANSI-C or LRH(+) - the language of the RH(+)

[1] framework. LRH(+) enables sequential and concurrent

programming in the same environment and includes both

traditional programming constructs and the constructs special

to reconfigurable computation. LRH(+) introduces bit-length

flexible data types, custom operations and explicit parallelism

on the application source code. In Step 1, we generate Control

Data Flow Graph (CDFG) of the input code by applying static

code analysis.

Static code analysis is one of the methods of verification

in which the analysis of code is performed without actually

executing the code as opposed to dynamic analysis with

execution of code. We apply static code analysis to both source

code and to the generated RTL files. We generate NuSMV

code both from our CDFG and RTL. The properties we apply are similar to the ones in compiler verification techniques [23]. Hence, the uniqueness of our method is to apply static code analysis both to source code and RTL code. In addition, we apply known compiler verification properties to verify our RTL. These properties are explained in Section V-C.

The generation process is explained in Section V-B. Step

2 is our proposed RTL generation process. RTL generation relies on our hardware synthesis estimation model which is

explained in Section III. The RTL generation is explained

thoroughly in Section IV. Generated HDL files are analysed

in Step 3 and we generate a new CDFG. In Step 4, we apply the same conversion methods as in Step 1 to generate

a second NuSMV state machine code. Step 5 is the execution,

comparison and logging stage. NuSMV codes are executed and

outputs are compared with supplied temporal logic properties.

This is explained in Section V-D.

II. RELATED WORK

There are several estimation tools for FPGA based imple-

mentations. A method of area estimation model for Look-

Up-Table (LUT) based FPGAs is proposed in [5]. This paper

presents accurate area estimation results but does not present

any delay estimation model. The method in [6] develops

delay and area model at the Data flow graph level (DFG).

Unfortunately, the number of operations that are supported is limited and the estimation error is high for some applications. In [7], area, time and power models are given for Xilinx IP cores, integrated into the FANTOM design automation tool.

In [8], an estimation technique is proposed dealing with a

MATLAB specification.

There exist commercial [9], [10], [11] and academic environments [12], [13] that allow rapid hardware development with limitations. Evidently, the compilation process of such languages is not completely different from regular software

compilation processes. The main difference has been generally

on the back-end of the compilers.

Systems that can be realized as finite state machines are

formally verified by model checking [14]. Formal verification is important because proof-based methods can show full functional correctness. However, it is known to be very complex and generally requires expert knowledge.

Code review is the human comprehension of the given finite

state machine or the software. Although it can address design

flaws at the higher level, lower level details can be missed

very easily. Model checking of high level programs has existed for some time [15], [16]. There also exist off-the-shelf and research-level hardware model checkers at the netlist level [17], [18] and at the CDFG level [19] with standard logic assertions.

Approaches to verifying RTL generated from a high level description differ mainly in methodology. The authors of [20] divide the complex checking of an algorithmic description into two smaller problems: intra-cycle equivalence and the valid register sharing property. The structural RTL definition and the behavioral description might not be completely equal, but by defining conflicting register sharing cases, temporal model checking properties can be written. Similarly, intra-cycle equivalence checks that, at any given time, the behavioral and structural register mappings match for all the assignment operations being performed. Our method inherently checks this because the extraction of variables from HLL allows us to bind each variable to an operator, and our properties check whether any bugs are caused by wrong usage of variables. Therefore, our aim is to verify that the design uses as few memory units as possible. This is achieved by removing redundant variables which are stored either in registers or in memory. The work in [21]

suggests a verification methodology which converts data-path

and control-path specification to a proof script. The concepts of critical states and critical paths are introduced. A critical path is a path that starts and ends at a critical state (critical states can be input or output variables, conditionals, etc.). The proof checking equates the given behavioral description to the RTL. Our method can capture the same behavior because it inherently traverses all the paths from input to output. Therefore, our solution needs no extra pre-processing, yielding relatively quicker verification.

III. ESTIMATION MODEL OF ARITHMETIC COMPONENTS

Shrinking time to market and short product lifetimes increase the necessity of early performance estimation of DSP algorithms during the development and prototyping cycle. An estimation model for our RH(+) HLS and RTL generation tool

is proposed in this section. Firstly, datapath components are

selected according to their delay and area behaviors. Then,

the behavior of these components is measured with the Xilinx XST tool by synthesizing them on the FPGA while varying the parameters of these components. We use Xilinx XST synthesis reports in our analyses. We apply linear regression to these measurements to extract models for the delay, area and power

metrics. The output of the RH(+) HLS tool is the data flow

graph that represents the algorithm defined in high level

language. These DFGs are used for performance estimation

and for RTL generation purposes. The estimation methodology

is handled in two steps; node estimation and graph estimation.

A. Selection of Arithmetic Components

The estimation is modeled for four basic types of operators;

adder, subtractor, multiplier and divider. The subtypes of the

adders/subtractors are inspected for design space exploration.

These subtypes are Carry Lookahead Adder (CLA), Carry Skip

Adder (CSKA), Carry Select Adder (CSLA), and Ripple Carry

Adder (RCA). We observe that, unlike in ASICs, RCAs are the fastest and smallest adders in FPGAs because FPGAs have dedicated logic for implementing RCAs. CLA, CSKA and CSLA are inspected in terms of area and delay behaviors. CLA and CSKA have no advantage over RCA on FPGAs in either area or delay. However, CSLA has a slight delay advantage over RCA for bit sizes wider than 128, but not in area. So, the RCA and CSLA are

placed on the adder library for RTL generation and design

space exploration purposes. The subtractor has no difference in

architecture and performance so subtypes of subtractors are the

same as adders. The LogiCore IP Multiplier 11.1 and Divider

3.0 from Xilinx IP Core library [3] are used as multiplier

and divider components. All the aforementioned estimation,

generation and verification processes are measured for the

multiplier and divider cores from Xilinx library with hardware

blocks enabled (instead of LUT implementations).

B. Parametric Area and Latency Estimation for Nodes

The arithmetic components are synthesized in Xilinx ISE

13.2 with different port sizes. The delay and area behavior

results are gathered from the synthesis reports. Curve fitting

is applied to these results by using linear regression analysis

so as to embed these models into RH(+) framework and

RTL generation software. Polynomial functions and piecewise

polynomial functions are used for curve fitting. For Spartan 3

FPGA, we observe that RCA model is very regular in behavior.

Hence, the first-order polynomial in equation (1) fits well.

$$ y = p_0 x + p_1 \tag{1} $$

In equation (1), x corresponds to the input bit size, y corresponds to delay in nanoseconds or area in slices, and $p_0$ and $p_1$ are the model parameters. However, the models of the CSLA, multiplier and divider are not so regular. For that reason, piecewise polynomial functions are applied to these components. The following piecewise polynomial function is an example for the multiplier.

For $0 < x < 32$:

$$ f = p_0 + p_1 x + p_2 y + p_3 x^2 + p_4 x y + p_5 y^2 + p_6 x^3 + p_7 x^2 y + p_8 x y^2 + p_9 y^3 \tag{2} $$

For $32 \le x < 64$:

$$ f = p_{10} + p_{11} x + p_{12} y + p_{13} x^2 + p_{14} x y + p_{15} y^2 + p_{16} x^3 + p_{17} x^2 y + p_{18} x y^2 + p_{19} y^3 \tag{3} $$

In equations (2) and (3), x and y are the bit sizes of the inputs, f is the delay in nanoseconds, and $p_0$ to $p_{19}$ are the model parameters.
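As an illustration of how the parameters of equation (1) can be obtained by linear regression, the following is a minimal sketch of an ordinary least-squares fit. The function name `fit_line` is hypothetical, and the sample points are synthetic: they are generated from the RCA delay coefficients of Listing 1 rather than taken from synthesis reports, so the fit simply recovers those coefficients.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = p0 * x + p1; returns (p0, p1)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    p0 = sxy / sxx            # slope: cost added per extra input bit
    p1 = y_bar - p0 * x_bar   # intercept: fixed cost
    return p0, p1


# Synthetic samples (NOT measurements): points lying exactly on the
# RCA delay model of Listing 1, used only to exercise the fit.
bit_sizes = [8, 16, 32, 64, 128]
delays_ns = [0.0559 * x + 1.5316 for x in bit_sizes]

p0, p1 = fit_line(bit_sizes, delays_ns)
```

With real synthesis data the points would not lie exactly on a line, and the residuals would indicate whether a piecewise model such as equations (2) and (3) is needed.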

Listing 1: HBD File of RCA Adder

    @synth
    * variable = (x)
    @hbd
    * Property = Delay
    * functionType = poly
    * (1:0.0559)+(0:1.5316)
    @endhbd
    @hbd
    * Property = Area
    * functionType = poly
    * (1:0.4996)+(0:0.055)
    @endhbd
    @hbd
    * Property = Power
    * functionType = poly
    * (4:0.000000003288)+(3:-0.0000014918)+(2:0.00020272)
    @endhbd
    @endsynth

Every node in DFG represents an arithmetic operator which

has delay and area cost. The created model for our arithmetic

operator is embedded in RTL generation software with hard-

ware behavior description files. Hardware behavior description

(hbd) file is a file with a format that defines the area, delay

and power models of arithmetic units. Listing 1 shows the hbd file of the RCA adder as an example. Hbd files start with @synth and end with @endsynth. The fields between every @hbd and

@endhbd describe a model function. Property field describes

whether this is delay, area or power model. FunctionType

field describes the characteristic of the function which can be

polynomial, piece-wise linear or piece-wise polynomial. The

line after FunctionType describes the function in a specific

format. The delay and area functions in Listing 1 have the form of equation (1). The value between "(" and ":" is the power of the variable, and the value between ":" and ")" is the coefficient.

For example, for the delay function in Listing 1, 0.0559 corresponds to $p_0$ and 1.5316 corresponds to $p_1$ of equation (1). Every arithmetic operator has an hbd file. During

performance estimation, the software reads and parses the

hbd file and extracts equations. For every node in the DFG

the hbd file is read and estimation values are calculated. Hbd files are useful for embedding the estimation model in the RTL generation software. Moreover, they make the design automation tool extensible. The hbd file has a user-friendly format

and the user can easily introduce a module to the HLS tool

by specifying its area, delay and power consumption with an

hbd file.
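The parsing and evaluation of an hbd file described above can be sketched as follows. This is an illustrative reading of the format shown in Listing 1, not the framework's actual parser: the names `parse_hbd` and `evaluate` are hypothetical, only the `poly` function type is handled, and whitespace inside a line is assumed to be insignificant.

```python
import re

# One "(power:coefficient)" term of a poly function line.
TERM = re.compile(r"\((\d+):([-+]?\d*\.?\d+)\)")


def parse_hbd(text):
    """Return {property name: [(power, coefficient), ...]} for each @hbd block."""
    models, prop, in_block = {}, None, False
    for raw in text.splitlines():
        # Drop the leading '*' marker and all spaces; normalise the minus sign.
        line = raw.strip().lstrip("*∗").replace(" ", "").replace("−", "-")
        if line == "@hbd":
            in_block, prop = True, None
        elif line == "@endhbd":
            in_block = False
        elif in_block and line.startswith("Property="):
            prop = line.split("=", 1)[1]
        elif in_block and line.startswith("("):
            models[prop] = [(int(p), float(c)) for p, c in TERM.findall(line)]
    return models


def evaluate(terms, x):
    """Evaluate sum(coefficient * x**power) for input bit size x."""
    return sum(c * x ** p for p, c in terms)


RCA_HBD = """@synth
* variable = (x)
@hbd
* Property = Delay
* functionType = poly
* (1:0.0559)+(0:1.5316)
@endhbd
@hbd
* Property = Area
* functionType = poly
* (1:0.4996)+(0:0.055)
@endhbd
@endsynth"""

models = parse_hbd(RCA_HBD)
delay_32 = evaluate(models["Delay"], 32)  # delay estimate (ns) for a 32-bit RCA
area_32 = evaluate(models["Area"], 32)    # area estimate (slices) for a 32-bit RCA
```

Keeping each term as a (power, coefficient) pair mirrors the file format directly, so higher-order models such as the power function in Listing 1 need no extra code.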

C. Area and Latency Estimation of the CDFG

With node estimation, latency and area of every vertex

in the CDFG is estimated. However, for the estimation of

the overall performance, a complete estimation of the CDFG

is necessary. We compare our estimation model with [6] and [7]. The model in [6] makes estimations by extracting general information, such as how many components are used and the average input length of the operators. It simply calculates how much of the FPGA's resources are used and estimates delay based on the resource usage. In our methodology, however, we calculate the delay and area costs for every component in the datapath, obtain the area estimate by summing these values, and obtain the delay estimate by finding the critical path with graph processing. Our model is more accurate especially when the application's critical path is not proportional to graph size, because Enzler's [6] model does not use the critical path in its calculations. The model in [7] is closer to our approach, but it estimates only area, not delay.
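The graph estimation step described above (area as a sum over nodes, delay as the critical path) can be sketched as follows. The function name `estimate_graph`, the graph encoding and the node costs are all hypothetical; in the framework the per-node costs would come from the hbd models. The sketch assumes a purely combinational, acyclic datapath and requires Python 3.9 or later for `graphlib`.

```python
from graphlib import TopologicalSorter


def estimate_graph(nodes, edges):
    """Estimate (total area, critical-path delay) of an acyclic datapath.

    nodes: {name: (delay_ns, area_slices)}
    edges: iterable of (source, destination) pairs
    """
    preds = {name: set() for name in nodes}
    for src, dst in edges:
        preds[dst].add(src)

    # Area estimate: sum of the node areas.
    total_area = sum(area for _, area in nodes.values())

    # Delay estimate: longest path, visiting nodes in topological order so
    # that every predecessor's finish time is known before it is needed.
    finish = {}
    for name in TopologicalSorter(preds).static_order():
        start = max((finish[p] for p in preds[name]), default=0.0)
        finish[name] = start + nodes[name][0]
    return total_area, max(finish.values(), default=0.0)


# Hypothetical costs for (a*b + c*d) + e; the values are placeholders.
nodes = {
    "mul1": (5.0, 20.0),
    "mul2": (4.0, 18.0),
    "add1": (2.0, 8.0),
    "add2": (2.0, 8.0),
}
edges = [("mul1", "add1"), ("mul2", "add1"), ("add1", "add2")]

area, delay = estimate_graph(nodes, edges)
```

In this example the critical path is mul1, add1, add2, so the slower multiplier determines the delay while both multipliers contribute to the area.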

IV. RTL GENERATION

The Dataflow graphs that are generated by the RH(+) tool

are synthesized to VHDL as Golden-RTL. Golden RTL is the

datapath circuit without any multiplexer and resource sharing.

Golden RTLs are used for verification of design units. In

the graph, every vertex represents an arithmetic operation and

edges represent the signals between the operations. The Golden RTL generation program consists of two main functions.

A. HDL Generation

We generate necessary adder, subtractor, multiplier and

divider VHDL files as components and connect them at the

top level. During generation, firstly, every vertex in the graph

is generated as a component. Every arithmetic operator in our

library has a template file and parameter file. Template file is

a template VHDL file of the operator which has fixed fields

and editable fields. Parameter file defines the editable fields in

template file and corresponding values of these fields. Using template and parameter files decreases the software run-time because the fixed fields are not generated over and over again; moreover, it makes the library extensible.

The user can integrate an operator to the RH(+) framework

by putting template and parameter files in the library. The

parameter files and template files are used for generating the

arithmetic components by the RTL generator software. After

every necessary component is generated, these components are

connected at the top level. Signed or unsigned extension can

be done during the port mapping of the signals and operators.

The RTL is generated with one of four options that can be selected by the user.

• Neither the inputs nor the outputs are registered

• Only inputs are registered

• Only outputs are registered

• Both the inputs and outputs are registered
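The template and parameter file mechanism described in this section can be illustrated with a small sketch. The template text, the field names `name` and `msb`, and the function `generate_component` are assumptions made for illustration; the framework's actual template and parameter file formats are not reproduced here, and a Python dictionary stands in for the parameter file.

```python
from string import Template

# Hypothetical adder template: the "$" fields are the editable fields,
# everything else is fixed text that is never regenerated.
ADDER_TEMPLATE = Template("""\
library ieee;
use ieee.std_logic_1164.all;
use ieee.numeric_std.all;

entity $name is
  port (a, b : in  unsigned($msb downto 0);
        s    : out unsigned($msb downto 0));
end entity;

architecture rtl of $name is
begin
  s <= a + b;
end architecture;
""")


def generate_component(template, params):
    """Fill every editable field; raises KeyError if a field has no value."""
    return template.substitute(params)


# Stand-in for a parameter file: values for the editable fields.
vhdl = generate_component(ADDER_TEMPLATE, {"name": "add_16", "msb": 15})
```

Because `substitute` fails on a missing field, an incomplete parameter file is detected at generation time instead of producing invalid VHDL.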

V. FORMAL VERIFICATION OF GOLDEN RTL

CDFG represents all the paths that might be traversed

through a program during its execution. Each node in the

CDFG represents operations or control structures. Each edge

represents the data dependency between operations. CDFGs

are concurrent structures. Since the CDFG lies between the RTL level and the high-level representation, it is where most of the optimizations, reductions and decisions about the system are made. Hence, it is important to capture as many details as possible from higher to lower levels. By using Computation Tree Logic (CTL) and Linear Temporal Logic (LTL) properties

[14], bugs are detected right before converting the CDFG to

RTL.

A. CDFG Structure

Vertex and Edge data structures are defined to represent the

CDFG. Vertex data structure holds the necessary information

of operations and variables. Variables represent storage

elements. They can either be registers, internal or external

memory based on the architecture. The important properties of the Vertex are given below:

• Name: Name of the Vertex

• Index: ID of the Vertex

• IsOperator: Flag for identifying if the vertex is an operator or a variable

• OperatorType: Types can be Adder, Subtractor, Divider and Multiplier

• PrecedenceLevel: Precedence level of the operators/variables in CDFG. It helps to identify dependencies between components.

• Processed: Flag that helps to identify if any operator/variable is processed

• Wordlength: Wordlength of the operation/variable

Edge structure holds the dependencies between vertices. Apart

from pointers to and from Vertices, only a single property is

necessary:

• Name: Name of the Edge
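The Vertex and Edge structures listed above could be expressed as follows. This is a sketch only: the field names follow the property lists in this section, but the types, defaults and the `OperatorType` enumeration are assumptions, and the framework's own implementation language is not stated here.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class OperatorType(Enum):
    ADDER = "Adder"
    SUBTRACTOR = "Subtractor"
    DIVIDER = "Divider"
    MULTIPLIER = "Multiplier"


@dataclass
class Vertex:
    name: str                                      # Name of the vertex
    index: int                                     # ID of the vertex
    is_operator: bool                              # True: operator, False: variable
    wordlength: int                                # Wordlength in bits
    operator_type: Optional[OperatorType] = None   # Only set for operators
    precedence_level: int = 0                      # Filled in during scheduling
    processed: bool = False                        # Set once the vertex is handled


@dataclass
class Edge:
    name: str        # Name of the edge
    source: Vertex   # Vertex the edge comes from
    target: Vertex   # Vertex the edge points to


# Tiny CDFG for "a + b" with 8-bit inputs and a 9-bit adder output.
a = Vertex("a", 0, False, 8)
b = Vertex("b", 1, False, 8)
add0 = Vertex("add0", 2, True, 9, OperatorType.ADDER)
edges = [Edge("a_to_add0", a, add0), Edge("b_to_add0", b, add0)]
```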

B. Generation of NuSMV Representation

NuSMV [2] is a symbolic model checker developed as the

reimplementation and extension of SMV [22]. NuSMV takes as input a text consisting of a program describing a model and some specifications (temporal logic formulas). It outputs "true" if the specification holds, or otherwise prints a trace showing a counterexample.

The conversion of CDFG to NuSMV is done automatically

by the RH(+) framework. The conversion steps are given

below:

• Identify precedence levels of the CDFG in order to

differentiate the input variables, output variables and the

operators.

• Generate boolean and integer variables for marking and

storing usage, declaration and wordlength information.

• Generate a state in NuSMV for each node in CDFG.

• Declare transitions for each state according to the dependencies in CDFG

• Generate properties to check for each variable or operation

The process starts with identifying precedence levels. Iden-

tifying precedence levels is important because it produces an

initial scheduling for the given CDFG. Each operation needs

inputs and those inputs can either be variables or outputs of

other operations. In addition, it makes clear which nodes are the input nodes and the output nodes of the CDFG. Two new nodes, Sink and Source, are inserted in the CDFG. After identifying the precedence levels, the process creates boolean and integer variables. Those variables and their purposes are summarized below:

• defined: Operands used by operations are stored as variables. Before a variable is used, it must be defined. This boolean flag identifies in which state the variable is defined in CDFG.

• used: Both variables and operations are defined but not necessarily used. This flag determines in which state the variables and operations are used.

• assigned: After variables are defined, they must be initialized (assigned) in order to be used because their intrinsic values can be random. Hence, this flag is true whenever the variables are initialized or assigned and false otherwise.

• wordlength: Wordlength is used to correctly calculate the wordlengths of the operators, their inputs and output sizes as well as variable wordlengths. Each operation has a different contribution to wordlengths. Overestimating wordlengths of operations increases area and power consumption. Underestimating wordlengths creates faulty results and wrong connections between components. So, wordlengths are essential for each operation and variable.
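To make the contribution of each operation to the wordlength concrete, the following sketch applies commonly used worst-case bit-growth rules. These rules are an assumption for illustration (unsigned operands, no truncation or saturation) and are not necessarily the rules used by the framework; the function name `result_wordlength` is hypothetical.

```python
def result_wordlength(op, left_bits, right_bits):
    """Worst-case result width of a two-input arithmetic operation.

    Assumed rules (not taken from the framework itself):
      add, sub : one extra bit for the carry or sign
      mul      : sum of the operand widths
      div      : the quotient is no wider than the dividend
    """
    if op in ("add", "sub"):
        return max(left_bits, right_bits) + 1
    if op == "mul":
        return left_bits + right_bits
    if op == "div":
        return left_bits
    raise ValueError(f"unknown operation: {op}")


# (a * b) + c with 8-bit a and b and a 16-bit c
product_bits = result_wordlength("mul", 8, 8)          # 16
sum_bits = result_wordlength("add", product_bits, 16)  # 17
```

Propagating these widths from the inputs to the outputs gives the expected output wordlength that a later check can compare against.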

After we create the flags, the first state to create is Source. All the states emerging from the Source state are the states that have precedence level equal to one. Based on the precedence levels and transitions in CDFG, we make next-state assignments. Similar to the Source state assignment, the final state is the Sink state. States that have the highest precedence levels make transitions to the Sink state.
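The precedence level calculation and the Source/Sink state construction can be sketched as below. The encoding is an assumption made for illustration: a single enumerated NuSMV variable named `state`, one value per CDFG node, and a non-deterministic choice among successors. The function names are hypothetical, the flag variables (defined, used, assigned, wordlength) are omitted, and any node without successors is sent to the sink, which includes all nodes of the highest precedence level.

```python
def precedence_levels(preds):
    """preds: {node: [predecessors]}. Nodes without predecessors get level 1."""
    levels = {}

    def level(node):
        if node not in levels:
            levels[node] = 1 + max((level(p) for p in preds[node]), default=0)
        return levels[node]

    for node in preds:
        level(node)
    return levels


def to_nusmv(preds):
    """Emit a NuSMV module with one state per CDFG node plus source and sink."""
    levels = precedence_levels(preds)
    succs = {node: [] for node in preds}
    for node, ps in preds.items():
        for p in ps:
            succs[p].append(node)

    def targets(names):
        # A single successor is written bare, several as a NuSMV set.
        return names[0] if len(names) == 1 else "{" + ", ".join(names) + "}"

    first = [n for n in preds if levels[n] == 1]
    lines = [
        "MODULE main",
        "VAR",
        "  state : {source, " + ", ".join(preds) + ", sink};",
        "ASSIGN",
        "  init(state) := source;",
        "  next(state) := case",
        f"    state = source : {targets(first)};",
    ]
    for node in preds:
        # Assumption: nodes with no successors move to the sink state.
        lines.append(f"    state = {node} : {targets(succs[node] or ['sink'])};")
    lines += ["    TRUE : state;", "  esac;"]
    return "\n".join(lines)


# CDFG for (a + b) * b
cdfg = {"a": [], "b": [], "add0": ["a", "b"], "mul0": ["add0", "b"]}
levels = precedence_levels(cdfg)
model = to_nusmv(cdfg)
```

Node names must avoid NuSMV reserved words (for example the single capital letters used by temporal operators), which a real generator would need to enforce.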

The procedure explained above from CDFG to NuSMV follows the routines below:

• GenerateSMV: This routine is separated into subroutines:

– GenerateVars: This routine makes all the declarations of the variable and operation properties

– GenerateAssignment: This routine makes all the initial assignments of the variables and the operators

– GenerateState: This routine creates the states depending on the node information taken from the CDFG

– GenerateVarState: This routine creates all the state transitions of the variables

– GenerateCompState: This routine creates all the state transitions of the operations

• UpdateGraphSMV: LRH(+) code is parsed, necessary data properties are filled and precedence levels are calculated. For precedence level calculation, the algorithm traverses the CDFG and checks the successor predecessor relationship.

C. Temporal Logic Properties

The temporal properties are written either in CTL or in LTL.

For this project, there are four properties that are checked. We

combine atomic formulas with a set of connectives to form

well formed formulas. We summarize the semantics of the

connectives below:

• AG: For all the computational paths, the formula must

hold globally

• AF : For all the computational paths, there will be some

future state where the formula holds

• EF : There exists a computational path, where there will

be some future state the formula holds

• EX: In some next state, the formula holds

• E[s U t]: There exists a computational path such that

s is true until t is true

Based upon the semantics above, the properties are explained

below:

• Unused Variables Property: This property checks that every variable that has been declared can eventually be used. The CTL formula is straightforward:

AG(defined → EF used)

• Assigned value is never used: When a value is assigned to a variable, it should be used eventually. A value may not be used on all paths, and it is not useful to check for this general case.

AG(assigned → EX(E[!assigned U used]))

• Deadcode Elimination: Dead code is code that can never be reached in a program. By adding source and sink nodes, we check that from sink every path eventually leads to the source.

AG(sink → AF(source))

• Wordlength Check: Given a CDFG and input variable wordlengths, depending on the operations on the CDFG, output variables have expected wordlengths (similar to worst case). When the CDFG is converted to the NuSMV representation, these expected wordlengths are assigned as initial values. Then, depending on the state transitions in NuSMV, the wordlengths alter. The final wordlength is checked against the initial (estimated) wordlength; if they do not match, a counterexample path is printed. As an example, AF(out_wordlength = val) checks the output variable's wordlength. Wrong connections can alter the wordlength.
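As a sketch of how the four properties above could be emitted for a NuSMV model, the function below writes one CTL specification per property. The variable naming scheme (`<name>_defined`, `<name>_used`, `<name>_assigned`, `<name>_wordlength`) and the single `state` variable with `source` and `sink` values are assumptions for illustration, not the framework's actual naming; `CTLSPEC`, `->`, `!` and `E [ ... U ... ]` are standard NuSMV syntax.

```python
def ctl_properties(variables, output, expected_bits):
    """Return NuSMV CTLSPEC lines for the four properties of this section."""
    specs = []
    for v in variables:
        # Unused variable: a defined variable can eventually be used.
        specs.append(f"CTLSPEC AG ({v}_defined -> EF {v}_used)")
        # Assigned value is never used: after an assignment, some path
        # reaches a use before the variable is assigned again.
        specs.append(
            f"CTLSPEC AG ({v}_assigned -> EX (E [!{v}_assigned U {v}_used]))"
        )
    # Dead code check between the sink and source states, as in the text.
    specs.append("CTLSPEC AG (state = sink -> AF (state = source))")
    # Wordlength check on the output variable.
    specs.append(f"CTLSPEC AF ({output}_wordlength = {expected_bits})")
    return specs


specs = ctl_properties(["a", "b"], "out", 17)
```

Appending these lines to the generated model lets NuSMV report "true" or a counterexample trace for each property separately.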

These properties are not unique to hardware design. For instance, similar properties are used for software verification in [23]. Nevertheless, applying them as in our work helps identify important faults in hardware. Uninitialized variables occupy extra storage, either in an expensive register file or in external memory, which increases overall latency. Therefore, by removing unnecessary variables, power consumption, area and latency are improved. Dead code elimination finds connection faults and redundant hardware. Unnecessary hardware can also degrade performance and area. Therefore, all the properties have a direct effect on the area, power consumption and latency of the generated RTL.

D. Backward Process: Generation of CDFG from RTL

The generation of CDFG from RTL and generation of

NuSMV from the new CDFG enables the designer to verify the