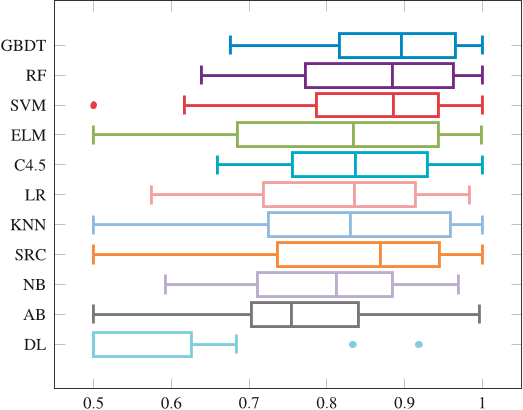

Abstract: Up-to-date report on the accuracy and efficiency of state-of-the-art classifiers.We compare the accuracy of 11 classification algorithms pairwise and groupwise.We examine separately the training, parameter-tuning, and testing time.GBDT and Random Forests yield highest accuracy, outperforming SVM.GBDT is the fastest in testing, Naive Bayes the fastest in training. Current benchmark reports of classification algorithms generally concern common classifiers and their variants but do not include many algorithms that have been introduced in recent years. Moreover, important properties such as the dependency on number of classes and features and CPU running time are typically not examined. In this paper, we carry out a comparative empirical study on both established classifiers and more recently proposed ones on 71 data sets originating from different domains, publicly available at UCI and KEEL repositories. The list of 11 algorithms studied includes Extreme Learning Machine (ELM), Sparse Representation based Classification (SRC), and Deep Learning (DL), which have not been thoroughly investigated in existing comparative studies. It is found that Stochastic Gradient Boosting Trees (GBDT) matches or exceeds the prediction performance of Support Vector Machines (SVM) and Random Forests (RF), while being the fastest algorithm in terms of prediction efficiency. ELM also yields good accuracy results, ranking in the top-5, alongside GBDT, RF, SVM, and C4.5 but this performance varies widely across all data sets. Unsurprisingly, top accuracy performers have average or slow training time efficiency. DL is the worst performer in terms of accuracy but second fastest in prediction efficiency. SRC shows good accuracy performance but it is the slowest classifier in both training and testing.