626 citations

...When these tags are part of our physical environment, devices with a tag reader can retrieve digital information from them [18, 10], activate associated actions, or attach information to them [17]....

[...]

578 citations

572 citations

...The Olivetti Active Badge [5] was used in several systems, for example to aid a telephone receptionist by dynamically updating the telephone extension a user was closest to. Augmentable Reality [6] allows users to dynamically attach digital information such as voice notes or photographs to the physical environment....

[...]

542 citations

471 citations

...This concept can be implemented on separate desktop displays (Smith & Mariani, 1997), handheld displays (Rekimoto et al., 1998), HMDs (Butz et al., 1999) or time-interlacing displays such as the two-user workbench (Agrawala et al., 1997)....

[...]

1,825 citations

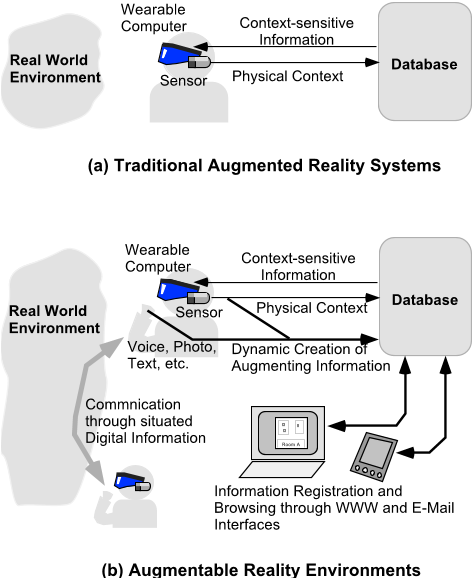

...Augmented Reality (AR) systems are designed to provide an enhanced view of the real world through see-through head-mounted displays[16] or hand-held devices[11]....

[...]

1,032 citations

...Various kinds of context sensing technologies, such as position sensors[5, 4], or ID readers[11], are used to determine digital information according to the user’s current physical context....

[...]

...For example, KARMA[4], which is a well-known AR system, displays information about the laser printer based on the current physical position of a head-worn display....

[...]

916 citations

753 citations

...‘‘Pick and Drop’’[10] provides a method for carrying digital data within a physical space using a stylus....

[...]

573 citations

Since humans rely on artificial markers such as traffic signals or indication panels, the authors believe that having appropriate artificial supports in the physical environment is the key to successful AR and wearable systems.

Since this applet can be started from Java-enabled web browsers (such as Netscape or Internet Explorer), the user can access the attached data from virtually anywhere.

This paper presents an environment that supports information registration in real-world contexts through wearable and traditional computers.

Various kinds of context sensing technologies, such as position sensors[5, 4], or ID readers[11], are used to determine digital information according to the user’s current physical context.

Their interface design for attaching data is partially inspired by ‘‘Fix and Float’’[13], an interface technique for carrying data within a virtual 3D environment.

Based on their initial experience with the prototype system, the authors feel that the key design issue in augment-able reality is how the system can gracefully notify users of situated information.

The head-worn part of the wearable system (Figure 4, above) consists of a monocular see-through head-up display (based on Sony Glasstron), a CCD camera, and an infrared sensor (based on a remote commander chip for consumer electronics).

In this paper, the authors have described ‘‘augment-able reality’’ where people can dynamically create digital data and attach it to the physical context.
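
The idea of attaching data to a physical context and retrieving it later can be illustrated with a minimal sketch. It is not the authors' implementation: the class names, the context ID format (`"room:meeting-a"`), and the attachment kinds are all hypothetical, and the sketch assumes that some sensing layer has already turned a sensor reading into a context ID string.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class Attachment:
    """A piece of digital data (e.g. a voice note or photograph) left by a user."""
    author: str
    kind: str      # hypothetical labels such as "voice", "photo", "text"
    payload: str   # stand-in for the data itself or a reference to it


@dataclass
class ContextStore:
    """Hypothetical store mapping a sensed context ID to its attachments."""
    _items: Dict[str, List[Attachment]] = field(
        default_factory=lambda: defaultdict(list)
    )

    def attach(self, context_id: str, item: Attachment) -> None:
        # Register data under the context where the user currently is.
        self._items[context_id].append(item)

    def lookup(self, context_id: str) -> List[Attachment]:
        # Return a copy; .get() avoids creating entries for unknown contexts.
        return list(self._items.get(context_id, []))


# Usage: two users leave data in the same (assumed) context, a third reads it.
store = ContextStore()
store.attach("room:meeting-a", Attachment("alice", "voice", "note-001.wav"))
store.attach("room:meeting-a", Attachment("bob", "photo", "whiteboard.jpg"))
for item in store.lookup("room:meeting-a"):
    print(item.author, item.kind, item.payload)
```

Keying everything on a context ID keeps the store independent of how the context was sensed, so the same lookup could serve a wearable client and a conventional desktop client.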

Their current approach is to overlay information on a see-through head-up display; the authors expect this approach to be less obtrusive when the display is embedded in eyeglasses [14].