
![Fig. 18: The primary and backup tasks synchronize at each epoch in [Bressoud and Schneider 1996]](/figures/fig-18-the-primary-and-backup-tasks-synchronize-at-each-2n281h73.png)

![Fig. 19: Scheduling backup tasks in a multiprocessor system in [Ghosh et al. 1994]](/figures/fig-19-scheduling-backup-tasks-in-a-multiprocessor-system-in-25eib9r8.png)

![Fig. 15: Redundant multithreading at the OS level in [Döbel et al. 2012]](/figures/fig-15-redundant-multithreading-at-the-os-level-in-dobel-et-25bqh7ky.png)

![Fig. 21: The insertion of a checkpoint in the CDFG turns the number of required hardware registers to four instead of two at control step 3 (adapted from [Blough et al. 1997])](/figures/fig-21-the-insertion-of-a-checkpoint-in-the-cdfg-turns-the-qiockm39.png)


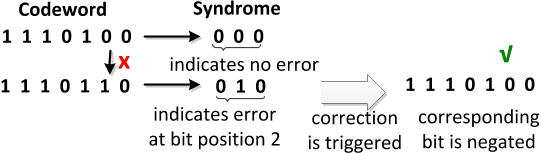

...Literature examples on the aforementioned concepts include algorithmic noise tolerance (ANT) (Hegde and Shanbhag 2001) on modules with reduced functionality, and Hamming (1950) and Dutt et al. (2014) on ECC. [ACM Computing Surveys, Vol. 50, No. 4, Article 50]...

[...]

...Figure 6 shows an example of a single bit correction with the Hamming code (Hamming 1950)....
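As a rough illustration of single-bit correction with a Hamming code, the sketch below uses the standard (7,4) layout. It is not taken from Figure 6 of the paper; the function names and the bit ordering (parity bits at positions 1, 2 and 4) are assumptions made for this example.

```python
def hamming74_encode(data):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword.

    Assumed layout (1-indexed positions): p1 p2 d1 p3 d2 d3 d4.
    """
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_correct(word):
    """Return (corrected_word, syndrome) for a 7-bit word.

    A non-zero syndrome is the 1-indexed position of the flipped bit,
    under the assumption that at most one bit is in error.
    """
    w = list(word)
    s1 = w[0] ^ w[2] ^ w[4] ^ w[6]
    s2 = w[1] ^ w[2] ^ w[5] ^ w[6]
    s3 = w[3] ^ w[4] ^ w[5] ^ w[6]
    syndrome = s1 + 2 * s2 + 4 * s3
    if syndrome:
        w[syndrome - 1] ^= 1  # flip the faulty bit back
    return w, syndrome


def hamming74_decode(word):
    """Extract the 4 data bits from a (corrected) codeword."""
    return [word[2], word[4], word[5], word[6]]
```

Flipping any one bit of an encoded word and passing it through `hamming74_correct` recovers the original codeword; two or more flipped bits are outside what this code can correct.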

[...]


...Reliability-related errors that occur due to hardware (HW)-design errors, insufficiently specified systems or malicious attacks (Avizienis et al. 2004), or erroneous SW interaction (i.e., manifestation of SW bugs due to SW of reduced quality (Lochmann and Goeb 2011)) are beyond the current scope....

[...]



The most prominent is that mapping and SW provide a lot of flexibility, owing to the possibility of re-mapping a given task sequence onto the “fixed” HW. Networked applications have further expanded the functionality that can be delivered. The system behavior can be adapted at run time whenever significant environmental changes take place, or according to varying error rates. This is especially so, as errors can be masked as they propagate through the different hardware and software layers (including the application itself).

Cons include latency (depending on the checkpointing granularity), performance overhead (depending also on whether checkpointing is overlapped with normal execution), and the limitation to transient errors.

Cons include the need for system-specific solutions, the low error protection (through isolation), and the potential performance degradation.

Cons include the potentially high storage and power overhead and the potentially very high latency and performance overhead (depending also on whether checkpointing is overlapped with normal execution).

Further technology trends, such as 3D integration, the incorporation of heterogeneous technologies on a single platform, and dark silicon, pose new challenges and opportunities for fault tolerance techniques.

Other examples of emerging error-tolerant application domains are Recognition, Mining and Synthesis (RMS) [Dubey 2005] as well as artificial neural networks (ANNs) [Temam 2012].

Pros include the limited area, power, and performance overhead, as the new implementation will typically satisfy the system requirements while minimizing additional cost.

The term task in this paper is used as an umbrella term, which can denote [...]

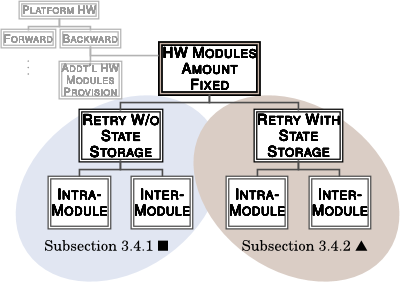

These four classes are discussed in the following subsections, as shown in Figure 13. The main criteria for further categorization into classes include whether modifications are required in existing functionalities, existing task implementations, the resource allocation, the interaction with neighbouring tasks, the execution mode (of additional tasks), or the cooperation among HW modules.

Rather than saving checkpoints at fixed intervals, checkpoints can be stored in a customized way so that the amount of stored data is minimized.
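One way to read this is that a checkpoint is cheapest where the fewest values are still needed later. The sketch below illustrates that idea for straight-line code only; it is not the CDFG-based method of [Blough et al. 1997]. The representation of operations as `(defined_var, used_vars)` pairs, the single-assignment assumption, and both function names are hypothetical.

```python
def live_after_each_step(ops):
    """Compute the values that must be saved if a checkpoint follows each step.

    ops: list of (defined_var, used_vars) in execution order. Each variable
    is assumed to be defined at most once; primary inputs that no step
    defines are ignored.
    Returns a list whose entry i is the set of variables defined at or
    before step i and still used by some later step.
    """
    live = []
    for i in range(len(ops)):
        defined = {d for d, _ in ops[: i + 1]}
        used_later = {u for _, uses in ops[i + 1:] for u in uses}
        live.append(defined & used_later)
    return live


def cheapest_checkpoint_step(ops):
    """Pick the step after which a checkpoint stores the fewest values.

    The last step is excluded because nothing remains to be protected
    after it. Requires at least two operations. Ties go to the earliest step.
    """
    live = live_after_each_step(ops)
    return min(range(len(ops) - 1), key=lambda i: len(live[i]))


# Usage: five operations; a checkpoint after step 0 or step 2 stores one value.
example_ops = [
    ("a", ["x", "y"]),
    ("b", ["x"]),
    ("c", ["a", "b"]),
    ("d", ["c"]),
    ("e", ["d", "c"]),
]
best_step = cheapest_checkpoint_step(example_ops)
```

A fixed-interval scheme would checkpoint regardless of how many values are live at that point; the selection above trades that regularity for less stored data.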

Compared to global schemes, local schemes reduce the amount of data to be stored during checkpointing but typically require a more complicated recovery algorithm.

Instead of adding modules with the same functionality, modules with different functionality can be added; the added modules play an active role in the recovery as in the previous category.

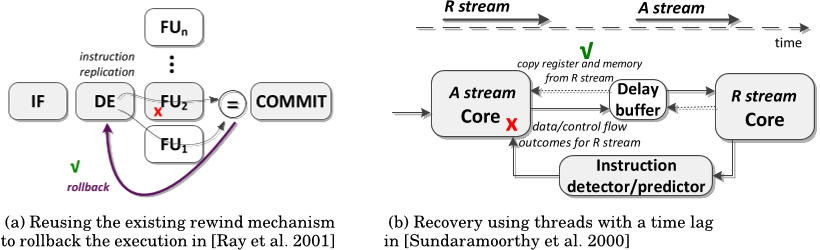

Error recovery is further split into forward error recovery (FER), which includes redundancy such as triple modular redundancy, and backward error recovery (BER), which includes rolling back to a previously saved correct state of the system.
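The forward side of this split can be sketched with a majority voter over three replicas: a single faulty result is masked without any rollback. The function names are hypothetical, and the replicas are modeled as plain Python callables returning hashable values, which is an assumption of this example rather than anything prescribed by the paper.

```python
from collections import Counter


def tmr_vote(replica_outputs):
    """Return the majority value among three replica outputs.

    Raises RuntimeError when all three outputs disagree, since a single
    voter cannot mask more than one faulty replica.
    """
    if len(replica_outputs) != 3:
        raise ValueError("triple modular redundancy expects three outputs")
    value, count = Counter(replica_outputs).most_common(1)[0]
    if count < 2:
        raise RuntimeError("no majority among replicas")
    return value


def run_tmr(replicas, argument):
    """Run three replicas of a computation on the same input and vote."""
    return tmr_vote([replica(argument) for replica in replicas])


# Usage: one replica returns a corrupted result; the voter masks it.
def square(x):
    return x * x


def faulty_square(x):
    return x * x + 1  # simulated erroneous replica


masked = run_tmr([square, faulty_square, square], 6)
```

The cost is the threefold execution; in exchange, execution continues forward and no saved state is needed.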

Beyond the types of systems discussed earlier, intra-module schemes may address applications that are subject to numerous non-deterministic events: uncertain functions (like human input functions), interrupts, system calls, and I/O operations due to communication with external devices.

System-specific strategies have been developed which deal with events coming from the external environment, especially events due to communication with external devices. Online multiprocessor checkpointing can be broadly characterized as local or global.

The other group of backward techniques includes the techniques that retry the execution by storing the state of the system at intermediate points.
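A minimal sketch of such a retry scheme follows, assuming the computation is a sequence of steps, the state is a copyable Python object, and an error detector is available. The names `run_with_checkpoints`, `detect_error` and `max_retries` are hypothetical and not taken from the paper.

```python
import copy


def run_with_checkpoints(steps, initial_state, detect_error, max_retries=3):
    """Execute steps in order, saving the state before each one.

    If detect_error flags the result of a step, the step is re-executed
    from the saved state, up to max_retries additional times. This only
    helps against transient errors; a permanent fault exhausts the retries.
    """
    state = copy.deepcopy(initial_state)
    for step in steps:
        checkpoint = copy.deepcopy(state)  # state at this intermediate point
        for _attempt in range(max_retries + 1):
            candidate = step(copy.deepcopy(checkpoint))
            if not detect_error(candidate):
                state = candidate
                break
        else:
            raise RuntimeError("step still failing after all retries")
    return state
```

Checkpointing before every step keeps the re-executed work small but stores state often; saving less frequently shifts the cost from storage to recovery latency.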