Q2. What have the authors stated for future work in "Coastal flooding event definition based on damages: case study of Biarritz Grande Plage on the French Basque coast"?

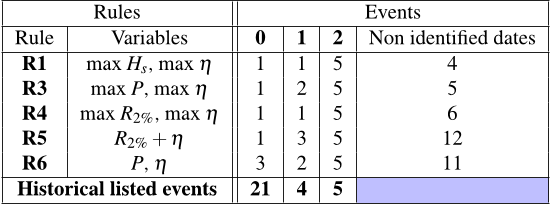

Then, the skill of the rules was retrospectively tested over the total time span, showing the existence of efficient rules that could potentially be used for damage prediction for future events. Nevertheless, the results of the paper suggest that significant work remains to ensure that the potential impact of each individual storm is assessed accurately, using an appropriate metric that respects the point of view defended in this paper.

Q3. What does the test based on Kendall's τ coefficient show?

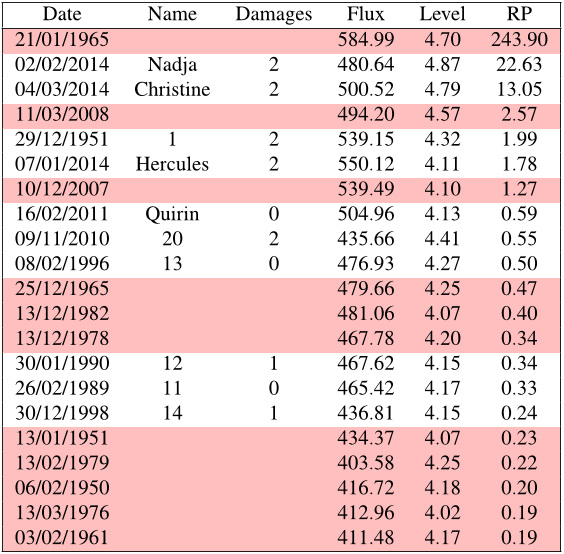

The test based on Kendall’s τ coefficient does not reject independence between the two variables composing the event dataset, allowing the use of equation (8) to estimate the joint probability.
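As a rough illustration of how such an independence test works, the sketch below computes Kendall's τ and a two-sided p-value from the large-sample normal approximation. It assumes there are no ties in the data; the function names are hypothetical, and the paper may have used a different implementation (for example, an exact test or a statistical library routine).

```python
import math
from itertools import combinations


def kendall_tau(x, y):
    """Kendall's tau (tau-a) for paired samples, assuming no ties."""
    n = len(x)
    s = 0
    for i, j in combinations(range(n), 2):
        prod = (x[i] - x[j]) * (y[i] - y[j])
        if prod > 0:
            s += 1  # concordant pair
        elif prod < 0:
            s -= 1  # discordant pair
    return 2 * s / (n * (n - 1))


def kendall_independence_test(x, y):
    """Two-sided test of H0 (independence) using the normal approximation.

    Under H0 and without ties, Var(tau) = 2(2n + 5) / (9n(n - 1)).
    Returns (tau, z, p_value).
    """
    n = len(x)
    tau = kendall_tau(x, y)
    z = tau / math.sqrt(2 * (2 * n + 5) / (9 * n * (n - 1)))
    # Two-sided p-value: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return tau, z, p_value
```

Applied to the two variables of the event dataset, a p-value above the chosen level (e.g. 0.05) means independence is not rejected, which is the condition under which the joint exceedance probability factorizes as in equation (8).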

Q4. How many storms were observed at the Grande Plage?

The number of storms for which only Biarritz was mentioned is 30, and the number of flood events at the Grande Plage is 13, which represents one third of the storms observed over the period 1950-2014.

Q5. What is the main reason for the increasing population on the coast?

While the problem is increasingly acute due to the growing coastal population and associated infrastructure [1], climate change also increases pressure on the coast through sea level rise, which allows the ocean to reach usually protected areas [2].

Q6. What is the main limitation of the paper?

Another limitation of this study is the unknown variability of the beach profile over the studied period and its effect on the damages induced by coastal flooding.

Q7. What is the rule for a storm?

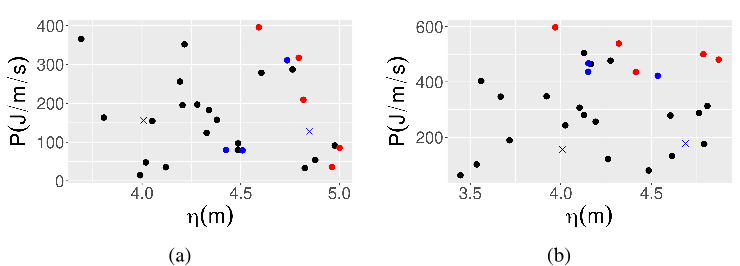

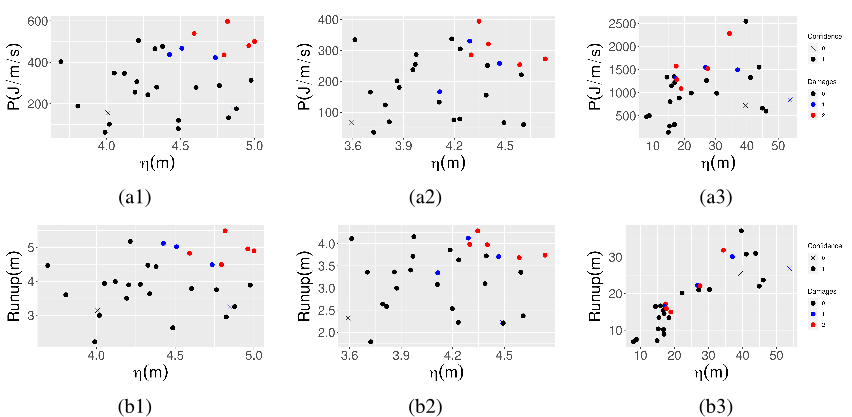

The best rule was the one based on wave energy flux (or equivalently the significant wave height) and water level maxima over the event.
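A minimal sketch of what a rule of this kind could look like, under stated assumptions: the energy flux uses the deep-water approximation P = ρg²H_s²T_e/(64π), with energy period T_e and a seawater density of 1025 kg/m³, and the rule is a hypothetical two-threshold form (both event maxima must exceed their thresholds). The paper's exact flux formula, period definition and rule structure may differ, and any threshold values passed in are placeholders, not results from the paper.

```python
import math

RHO_SEAWATER = 1025.0  # kg/m^3, typical seawater density (assumed)
G = 9.81               # m/s^2, gravitational acceleration


def deep_water_energy_flux(hs, te):
    """Deep-water wave energy flux per metre of crest length (W/m).

    hs: significant wave height (m); te: energy period (s).
    P = rho * g^2 * hs^2 * te / (64 * pi)
    """
    return RHO_SEAWATER * G**2 * hs**2 * te / (64 * math.pi)


def rule_predicts_damage(hs_series, te_series, wl_series, p_threshold, wl_threshold):
    """Hypothetical two-threshold rule on event maxima.

    Flags an event as potentially damaging when the maximum energy flux
    and the maximum water level over the event both exceed their thresholds.
    """
    p_max = max(deep_water_energy_flux(h, t) for h, t in zip(hs_series, te_series))
    wl_max = max(wl_series)
    return p_max > p_threshold and wl_max > wl_threshold
```

Because the flux grows with H_s², thresholding the flux maximum is closely related to thresholding the significant wave height maximum, which is consistent with the two being described as near-equivalent.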

Q8. What is the main reason why RP should be used in applied studies?

Since stakeholders are mostly concerned with impacts on the coast and population, the return period (RP) should reflect this aspect in applied studies.

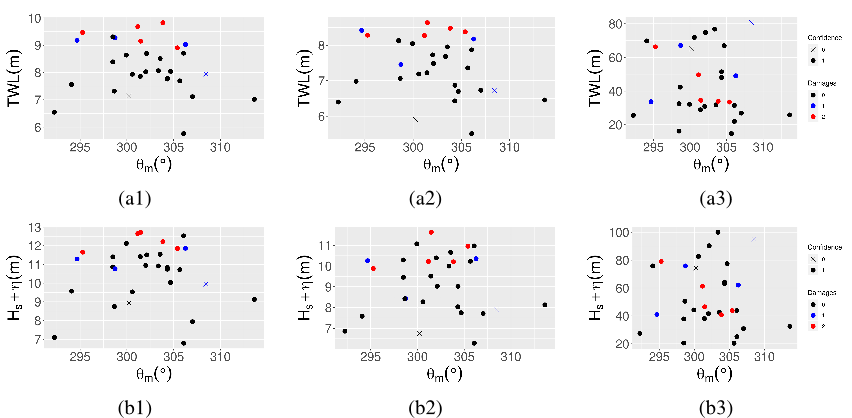

Q9. What does the analysis of quantile/quantile graphs show about the modelled sea states?

The analysis of quantile/quantile graphs shows that the model underestimates extreme sea states, which is detrimental to this type of study, since it focuses precisely on these events.
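To illustrate what a quantile/quantile comparison checks, the sketch below pairs empirical quantiles of observed and modelled sea-state values (for example, significant wave height) at the same probabilities. The linear-interpolation quantile convention and the function names are assumptions for illustration, and the toy values in the usage note are not data from the paper.

```python
import math


def empirical_quantile(sorted_values, p):
    """Empirical quantile by linear interpolation between order statistics
    (the same convention as NumPy's default 'linear' method)."""
    n = len(sorted_values)
    h = (n - 1) * p
    lo = math.floor(h)
    hi = min(lo + 1, n - 1)
    return sorted_values[lo] + (h - lo) * (sorted_values[hi] - sorted_values[lo])


def qq_pairs(observed, modelled, probs):
    """Return (observed quantile, modelled quantile) pairs for each probability."""
    obs = sorted(observed)
    mod = sorted(modelled)
    return [(empirical_quantile(obs, p), empirical_quantile(mod, p)) for p in probs]
```

For example, `qq_pairs([5, 1, 3, 2, 4], [4, 1, 3.5, 2, 3], [0.5, 1.0])` returns `[(3.0, 3.0), (5.0, 4.0)]`: the two series agree at the median, but the modelled value falls below the observed one in the upper tail, which is the pattern of underestimation for extreme sea states.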

Q10. What is the return period of the event $\{X > x, Y > y\}$?

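The return period of the event $\{X > x, Y > y\}$ is then naturally computed as

$$
\mathrm{RP}(x,y) = \frac{\mu}{\widehat{\Pr}(X > x,\, Y > y)} = \frac{\mu}{\widehat{\Pr}(X > x)\,\widehat{\Pr}(Y > y)} = \frac{\mu}{\{1-\hat{G}_x(x)\}\{1-\hat{G}_y(y)\}}, \tag{8}
$$

where

$$
\mu = \frac{2015-1949+1}{\sum_{t\in T} \mathbf{1}\{x(t) > u_x,\, y(t) > u_y\}}.
$$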
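Equation (8) can be sketched numerically as follows. The survival probabilities 1 − Ĝ_x(x) and 1 − Ĝ_y(y) are passed in directly; the empirical survival helper is a hypothetical stand-in, since the paper's marginal estimators Ĝ_x and Ĝ_y may be fitted parametric distributions. The 67-year span corresponds to 2015 − 1949 + 1, and all function names are illustrative.

```python
def mean_interarrival(n_years, x_series, y_series, u_x, u_y):
    """mu: number of years divided by the number of events whose two
    variables both exceed their thresholds u_x and u_y."""
    n_events = sum(1 for x, y in zip(x_series, y_series) if x > u_x and y > u_y)
    if n_events == 0:
        raise ValueError("no events exceed both thresholds")
    return n_years / n_events


def empirical_survival(sample, value):
    """Hypothetical stand-in for 1 - G_hat(value): fraction of the sample
    strictly above value."""
    return sum(1 for s in sample if s > value) / len(sample)


def joint_return_period(mu, surv_x, surv_y):
    """Equation (8) under independence: RP = mu / ({1 - G_x(x)} {1 - G_y(y)})."""
    return mu / (surv_x * surv_y)
```

For instance, with 67 years and 4 qualifying events, μ = 16.75 years; if each marginal exceedance probability is 0.5, the joint return period is 16.75 / 0.25 = 67 years. The product in the denominator is only valid because the Kendall test did not reject independence.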