
![Figure 8. Comparison with [11]. Whole images are available on our project website.](/figures/figure-8-comparison-with-11-whole-images-are-available-in-2l3xrcgj.png)

![Figure 10. Image restoration from haze images. Close-ups shown in (d) are cut from (a-c). The left two are input NIR and haze images. The right three patches are results of [16], [9], and our method.](/figures/figure-10-image-restoration-from-haze-images-close-ups-shown-iwo7n2l1.png)


...For a target image, the guidance image can either be the target image itself [10,6], high-resolution RGB images [6,2,3], images from different sensing modalities [11,12,5], or filtering outputs from previous iterations [9]....

[...]

...Image filtering with a guidance signal, known as joint or guided filtering, has been successfully applied to a variety of computer vision and computer graphics tasks, such as depth map enhancement [1,2,3], joint upsampling [4,1], cross-modality noise reduction [5,6,7], and structure-texture separation [8,9]....

[...]

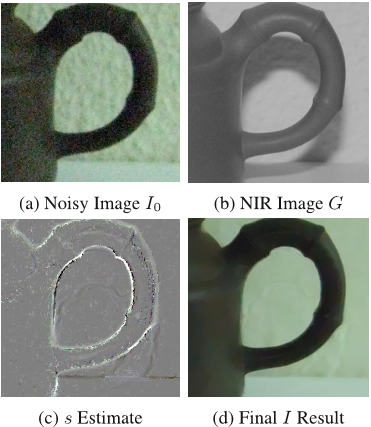

...Figure panels: (c) Restoration [5], (d) Ours, (g) Restoration [5], (h) Ours...

[...]

...The filtering results by our method are comparable to those of the state-of-the-art technique [5]....

[...]

...Guided by a flash image, the filtering result of our method is comparable to that of [5], as shown in Figure 8(e)-(h)....

[...]


...from the reference image to the target image for color/depth image super-resolution [15], [16], image restoration [36], etc....

[...]


...Our regularization term is defined with anisotropic gradient tensors [13, 4]....

[...]


...Simple joint image filtering [18, 8] could blur weak edges due to the inherent smoothing property....

[...]


Because of the popularity of other imaging devices, more computational photography and computer vision solutions have been developed based on images captured under different configurations.

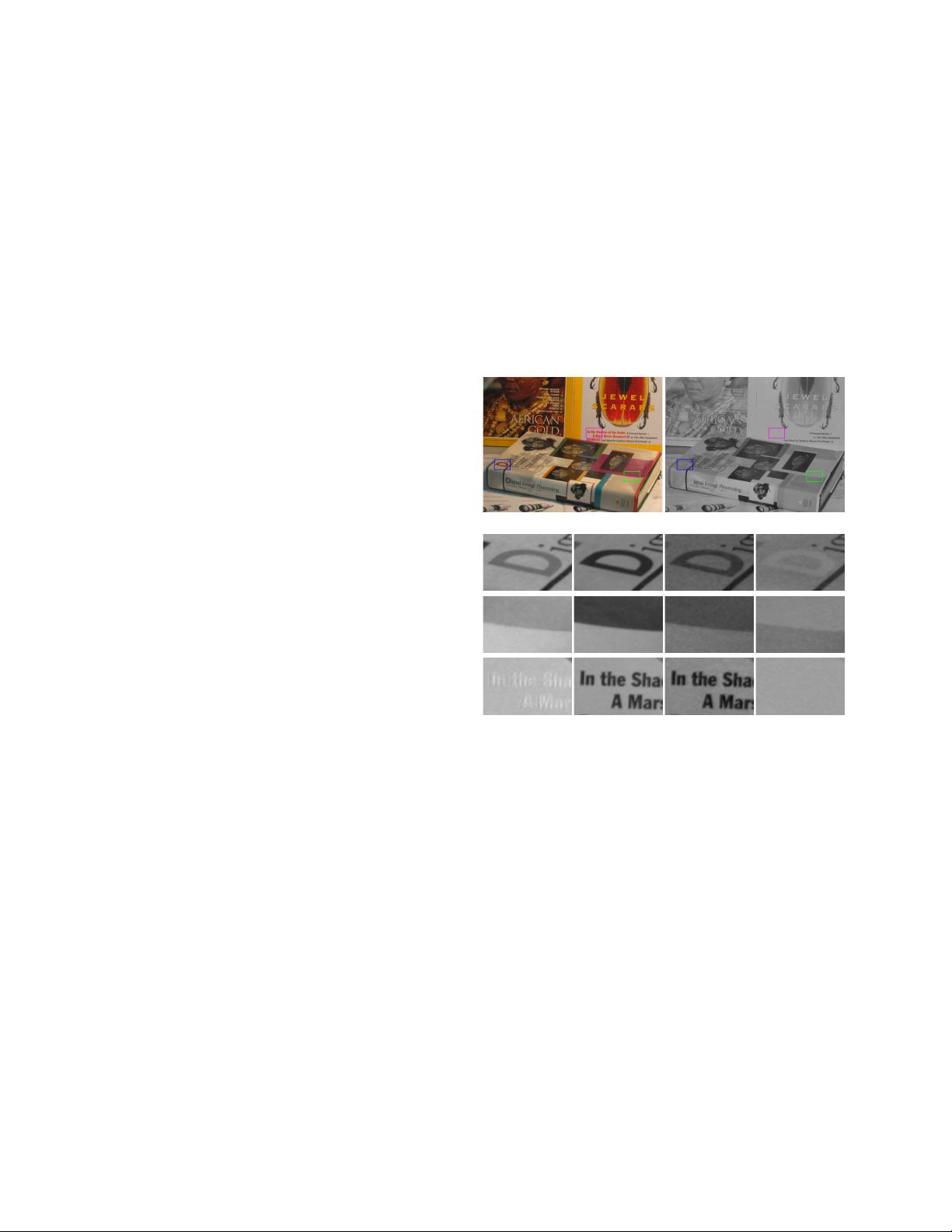

To minimize the non-convex energy E(s, I) defined in Eq. (14), the authors employ iteratively reweighted least squares (IRLS), which converts the original problem into a few corresponding linear systems without loss of generality.
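As a rough illustration of how IRLS handles a penalty of the form |x|^α, the sketch below minimizes Σ_k |s − r_k|^α over a single scalar s. Each iteration solves a weighted least-squares problem whose weights, max(|s − r_k|, ε)^(α−2), come from the previous estimate. This is a toy scalar problem, not the authors' joint solver for E(s, I); the function name `irls_robust_location`, the ε floor, the iteration count, and the sample values are assumptions chosen for illustration.

```python
import numpy as np

def irls_robust_location(samples, alpha=0.5, eps=1e-6, iters=50):
    """Minimize sum_k |s - samples[k]|**alpha over a scalar s (0 < alpha < 1) with IRLS."""
    s = samples.mean()  # least-squares (alpha = 2) starting point
    for _ in range(iters):
        residual = s - samples
        # IRLS weight for rho(x) = |x|^alpha is proportional to |x|^(alpha - 2);
        # flooring |x| at eps keeps the weights finite once a residual reaches zero.
        weights = np.maximum(np.abs(residual), eps) ** (alpha - 2.0)
        # Closed-form minimizer of the weighted least-squares surrogate.
        s = np.sum(weights * samples) / np.sum(weights)
    return s

samples = np.array([1.0, 1.0, 1.0, 1.1, 0.9, 10.0])  # one gross outlier
s_hat = irls_robust_location(samples)
print(samples.mean(), s_hat)
```

The plain mean is pulled up to 2.5 by the outlier 10.0, while the reweighting settles on the cluster near 1.0. Each step only needs a linear (here scalar) solve, which is the property that lets IRLS reduce a non-convex objective to a sequence of linear systems.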

In contrast, the authors propose an iterative method that constrains the s map according to its characteristics and thereby removes intense noise from the input I0.

The restoration result shown in (d) contains much less highlight and shadow, which cannot be achieved by gradient transfer or joint filtering.

The estimated s map shown in (c) has large values along object boundaries and close-to-zero values in highlight and shadow regions.

Since both input images are color images captured under visible light, the authors use each channel of the flash image to guide restoration of the corresponding channel of the noisy non-flash image.
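The per-channel scheme amounts to running a single-channel guided restorer once per color channel. The sketch below illustrates only that loop structure. As a stand-in for the authors' scale-map restoration it uses the guided filter of He et al., which is a different technique; `restore_per_channel`, the radius `r`, the regularizer `eps`, and the synthetic images are all hypothetical.

```python
import numpy as np

def box_mean(x, r):
    """Mean over a (2r+1) x (2r+1) window, with edge-replicated borders."""
    k = 2 * r + 1
    c = np.pad(x, r, mode="edge").cumsum(axis=0).cumsum(axis=1)
    c = np.pad(c, ((1, 0), (1, 0)))  # c[a, b] = sum of padded x over rows < a, cols < b
    return (c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]) / (k * k)

def guided_filter(guide, target, r=2, eps=1e-3):
    """He et al.'s guided filter for a single-channel guide and target (stand-in only)."""
    mean_g = box_mean(guide, r)
    mean_t = box_mean(target, r)
    var_g = box_mean(guide * guide, r) - mean_g ** 2
    cov_gt = box_mean(guide * target, r) - mean_g * mean_t
    a = cov_gt / (var_g + eps)  # local linear coefficient per window
    b = mean_t - a * mean_g
    return box_mean(a, r) * guide + box_mean(b, r)

def restore_per_channel(noflash, flash, r=2, eps=1e-3):
    """Channel c of the flash image guides channel c of the noisy non-flash image."""
    channels = [guided_filter(flash[..., c], noflash[..., c], r, eps)
                for c in range(noflash.shape[-1])]
    return np.stack(channels, axis=-1)

rng = np.random.default_rng(1)
flash = rng.uniform(size=(32, 32, 3))                               # synthetic flash image
noflash = 0.4 * flash + 0.05 * rng.normal(size=flash.shape)         # darker, noisy non-flash
out = restore_per_channel(noflash, flash)
print(out.shape)
```

Swapping `guided_filter` for any other single-channel guided restorer leaves the per-channel loop unchanged.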

The key to their method is an auxiliary map s, the same size as G, that adapts the structure of G to that of I∗, the ground-truth noise-free image.
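Reading the data term of Eq. (4) with p_{i,x} ≈ 1/∇_x G_i suggests that s acts as a per-pixel factor with s∇G ≈ ∇I∗. The 1D toy below assumes that reading and uses a simple per-pixel least-squares estimate of s, not the authors' regularized solver. It shows s ≈ 2 where an edge of G is amplified, s ≈ −1 where the gradient direction is reversed, and s = 0 where G has an edge that I∗ lacks. At index 7, I∗ has an edge while G is flat, so no scale reproduces it.

```python
import numpy as np

G = np.array([0, 0, 1, 1, 1, 3, 3, 4, 4, 4], dtype=float)       # guidance signal
I_star = np.array([0, 0, 2, 2, 2, 0, 0, 0, 1, 1], dtype=float)  # noise-free target
gG = np.diff(G)       # guidance gradients
gI = np.diff(I_star)  # target gradients
eps = 1e-6            # hypothetical regularizer to avoid division by zero

# Per-pixel least-squares scale: argmin_s (gI - s * gG)^2 + eps * s^2 (illustrative only).
s = gI * gG / (gG ** 2 + eps)
residual = gI - s * gG

print(np.round(s, 3))                            # approximately [0, 2, 0, 0, -1, 0, 0, 0, 0]
print(np.flatnonzero(np.abs(residual) > 1e-3))   # [7]: edge in I_star with flat guidance
```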

A limitation of the current method arises where the guidance carries no structure, that is, at pixels with zero ∇G but non-zero ∇I∗: because s only rescales guidance gradients, no value of s can produce a non-zero gradient there.

This enables a configuration in which a less noisy NIR image, captured with a dark flash [11], guides the restoration of the corresponding noisy color image.

Further, to avoid the extreme case where $\nabla_x G_i$ or $\nabla_y G_i$ is close to zero, and to gain the ability to reject outliers, the authors define their data term as

$$E_1(s, I) = \sum_i \Big( \rho\big(|s_i - p_{i,x} \nabla_x I_i|\big) + \rho\big(|s_i - p_{i,y} \nabla_y I_i|\big) \Big), \tag{4}$$

where $\rho$ is a robust function defined as

$$\rho(x) = |x|^{\alpha}, \quad 0 < \alpha < 1. \tag{5}$$

It is used to remove estimation outliers.
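A direct NumPy evaluation of Eqs. (4) and (5) might look as follows. The text only says that p_{i,x} and p_{i,y} are designed to cope with near-zero guidance gradients, so the `p_coeff` definition here (a signed reciprocal with |∇G| floored at `eps`) is an assumption. The forward differences with a zero gradient on the last row and column, and the default `alpha=0.8`, are also assumptions.

```python
import numpy as np

def forward_grad(img):
    """Forward differences along x (columns) and y (rows); zero on the last column/row."""
    gx = np.diff(img, axis=1, append=img[:, -1:])
    gy = np.diff(img, axis=0, append=img[-1:, :])
    return gx, gy

def p_coeff(grad_g, eps=1e-2):
    """Hypothetical p: signed reciprocal of the guidance gradient, |grad| floored at eps."""
    sign = np.where(grad_g >= 0, 1.0, -1.0)
    return sign / np.maximum(np.abs(grad_g), eps)

def rho(x, alpha=0.8):
    """Robust penalty of Eq. (5): |x|^alpha with 0 < alpha < 1."""
    return np.abs(x) ** alpha

def data_term(s, I, G, alpha=0.8, eps=1e-2):
    """E1(s, I) of Eq. (4), summed over all pixels i; s is one map shared by both terms."""
    Ix, Iy = forward_grad(I)
    Gx, Gy = forward_grad(G)
    return float(np.sum(rho(s - p_coeff(Gx, eps) * Ix, alpha)
                        + rho(s - p_coeff(Gy, eps) * Iy, alpha)))

# Toy check: G is a ramp (gx = 1, gy = 2 except on the far boundary), I = G, s = 1.
H, W = 4, 5
yy, xx = np.mgrid[0:H, 0:W]
G = (xx + 2 * yy).astype(float)
E1 = data_term(np.ones((H, W)), G.copy(), G)
print(E1)  # 9.0: only the last column (H pixels) and last row (W pixels) contribute
```

Where the guidance gradient is well defined, p∇I equals 1 and the penalty vanishes; the only cost comes from the boundary pixels whose assumed zero gradient cannot match s = 1.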

Among previous methods, Krishnan et al. [11] used the gradients of a dark-flash image, which captures ultraviolet (UV) and NIR light, to guide noise removal in the color image.

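The final linear system in matrix form is

$$\left( C_x^{T} P_x^{2} A_x^{t+1,t} C_x + C_y^{T} P_y^{2} A_y^{t+1,t} C_y + \lambda B^{t+1,t} \right) I = \left( C_x^{T} P_x A_x^{t+1,t} + C_y^{T} P_y A_y^{t+1,t} \right) s + \lambda B^{t+1,t} I_0. \tag{23}$$

This linear system is also solved with preconditioned conjugate gradient (PCG), and its solution is denoted $I^{(t+1)}$.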
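One such solve can be sketched in 1D, where only the C_x terms of Eq. (23) remain. The sketch assumes dense NumPy matrices for readability (an image-sized system would be sparse), a forward-difference C_x whose last row is zero so that s has the same length as the signal, a hypothetical signed-reciprocal P_x with |∇G| floored at ε, random placeholder IRLS weights for A_x^{t+1,t} and B^{t+1,t}, and a Jacobi (diagonal) preconditioner. The source does not state which preconditioner the authors use.

```python
import numpy as np

def forward_difference(n):
    """n x n matrix C with (C @ v)[i] = v[i+1] - v[i] and a zero last row."""
    C = np.zeros((n, n))
    idx = np.arange(n - 1)
    C[idx, idx] = -1.0
    C[idx, idx + 1] = 1.0
    return C

def pcg(M, b, tol=1e-10, max_iter=1000):
    """Jacobi-preconditioned conjugate gradient for a symmetric positive-definite M."""
    x = np.zeros_like(b)
    inv_diag = 1.0 / np.diag(M)
    r = b - M @ x
    z = inv_diag * r
    p = z.copy()
    rz = r @ z
    b_norm = np.linalg.norm(b)
    for _ in range(max_iter):
        Mp = M @ p
        step = rz / (p @ Mp)
        x += step * p
        r -= step * Mp
        if np.linalg.norm(r) <= tol * b_norm:
            break
        z = inv_diag * r
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x

rng = np.random.default_rng(0)
n, lam, eps = 50, 0.5, 0.1
G = np.cumsum(rng.normal(size=n))                                 # guidance signal
I0 = np.cumsum(rng.normal(size=n)) + 0.3 * rng.normal(size=n)     # noisy input
s = rng.normal(size=n)                                            # current scale map s^{t+1}
Cx = forward_difference(n)
gx = Cx @ G
Px = np.diag(np.where(gx >= 0, 1.0, -1.0) / np.maximum(np.abs(gx), eps))  # hypothetical P_x
Ax = np.diag(rng.uniform(0.5, 2.0, n))   # placeholder IRLS weights A_x^{t+1,t}
B = np.diag(rng.uniform(0.5, 2.0, n))    # placeholder IRLS weights B^{t+1,t}

# Left- and right-hand sides of the 1D version of Eq. (23).
M = Cx.T @ Px @ Px @ Ax @ Cx + lam * B
rhs = Cx.T @ Px @ Ax @ s + lam * B @ I0
I_next = pcg(M, rhs)
```

Because P_x and A_x are diagonal they commute, so C_x^T P_x A_x P_x C_x equals the C_x^T P_x^2 A_x C_x term of Eq. (23); the positive weights in λB keep M positive definite, which CG requires.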