
![Figure 12: Diagram demonstrating execution model for molecular dynamics with replica exchange. Short simulation segments are run in an ensemble with asynchronous data exchanges [24].](/figures/figure-12-diagram-demonstrating-execution-model-for-3heg48tw.png)

GeMTC is currently optimized for executing within environments containing a single GPU per node, such as Blue Waters; future work aims to address heterogeneous accelerator environments. Future work also includes performance evaluation of diverse application kernels; analysis of the ability of such kernels to effectively utilize concurrent warps; enabling of virtual warps [25], which can both subdivide and span physical warps; support for other accelerators such as the Xeon Phi; and continued performance refinement.

Many important application classes and programming techniques are driving the requirements for such extreme-scale systems, including branch and bound, stochastic programming, materials by design, and uncertainty quantification.

The dataflow programming model of the Swift parallel scripting language can elegantly express, through implicit parallelism, the massive concurrency demanded by these applications while retaining the productivity benefits of a high-level language.
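As a rough analogy only, dataflow-style implicit parallelism can be imitated with futures. The sketch below is Python, not Swift, and the names `simulate`, `analyze`, and `run_dataflow` are hypothetical: independent calls are submitted without any explicit ordering, and the consumer blocks only on the values it actually needs.

```python
from concurrent.futures import ThreadPoolExecutor

def simulate(seed):
    # Hypothetical stand-in for one independent application task.
    return seed * seed

def analyze(values):
    # Consumer that depends on every simulate() result.
    return sum(values)

def run_dataflow(n_tasks, workers=4):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each submit() returns a future immediately; the tasks do not
        # depend on one another, so they may run concurrently.
        futures = [pool.submit(simulate, i) for i in range(n_tasks)]
        # Waiting on the results is the only synchronization point.
        return analyze(f.result() for f in futures)
```

In a dataflow language the futures are implicit: the runtime derives the same dependency structure from ordinary variable use, so the programmer never writes `submit` or `result`.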

To optimize the GeMTC framework for fine-grained tasks, the authors have implemented a task-bundling system to reduce the amount of communication between the host and GPU.
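The idea behind bundling can be sketched as follows. This is a minimal host-side illustration in Python, not the GeMTC implementation; `make_bundles` and `count_transfers` are hypothetical names, and one bundle is assumed to cost one host-to-device transfer.

```python
def make_bundles(tasks, bundle_size):
    """Group tasks into consecutive bundles of at most bundle_size items."""
    if bundle_size < 1:
        raise ValueError("bundle_size must be at least 1")
    return [tasks[i:i + bundle_size] for i in range(0, len(tasks), bundle_size)]

def count_transfers(tasks, bundle_size):
    """Number of host-to-device transfers, assuming one transfer per bundle."""
    return len(make_bundles(tasks, bundle_size))
```

Under that assumption, 10 fine-grained tasks sent with a bundle size of 4 need 3 transfers instead of 10, so the fixed per-transfer latency is paid less often.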


The GeMTC implementation on the Xeon Phi will benefit greatly from avoiding memory and thread oversubscription, as highlighted in this work.

Tasks typically run to completion: they follow the simple input-process-output model of procedures, rather than retaining state as in web services or MPI processes.

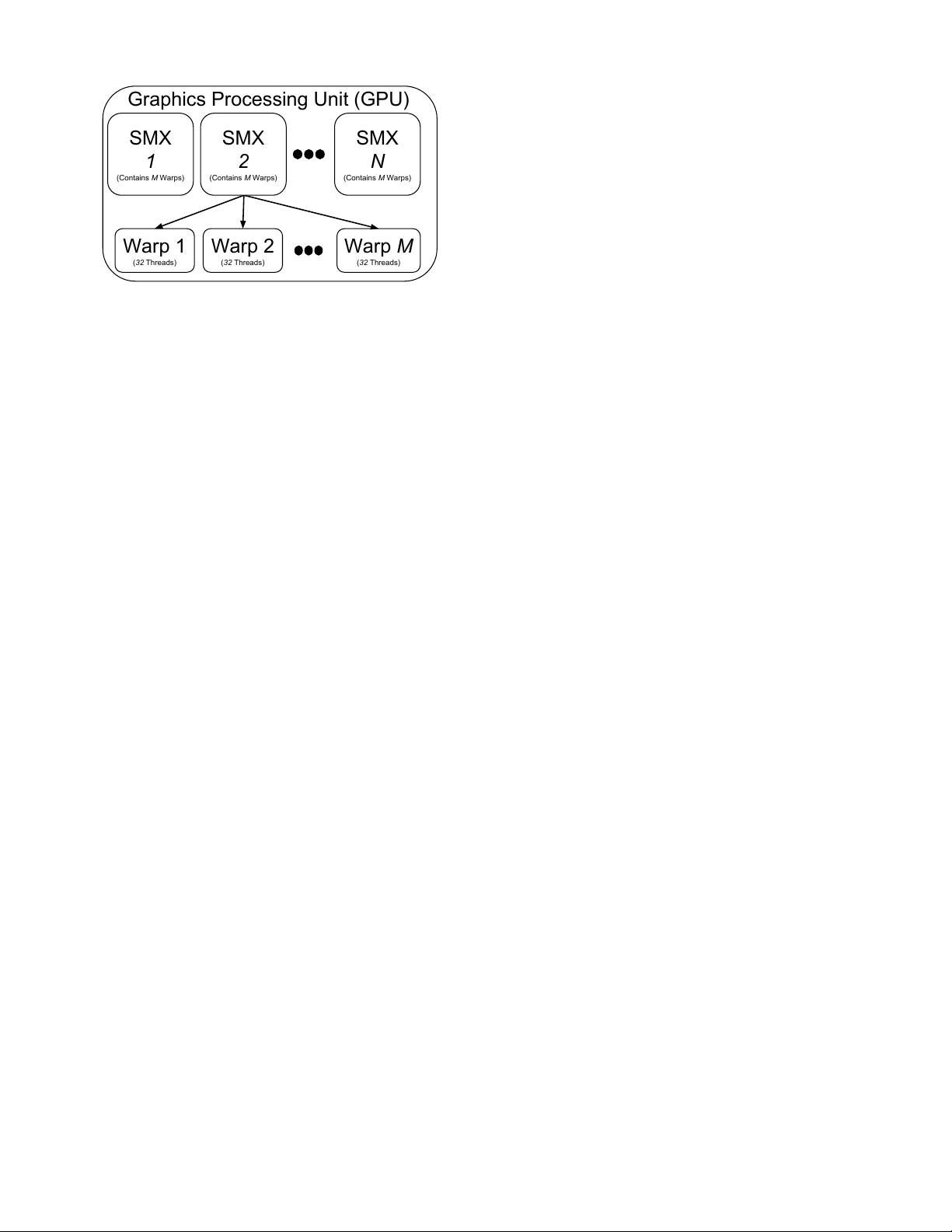

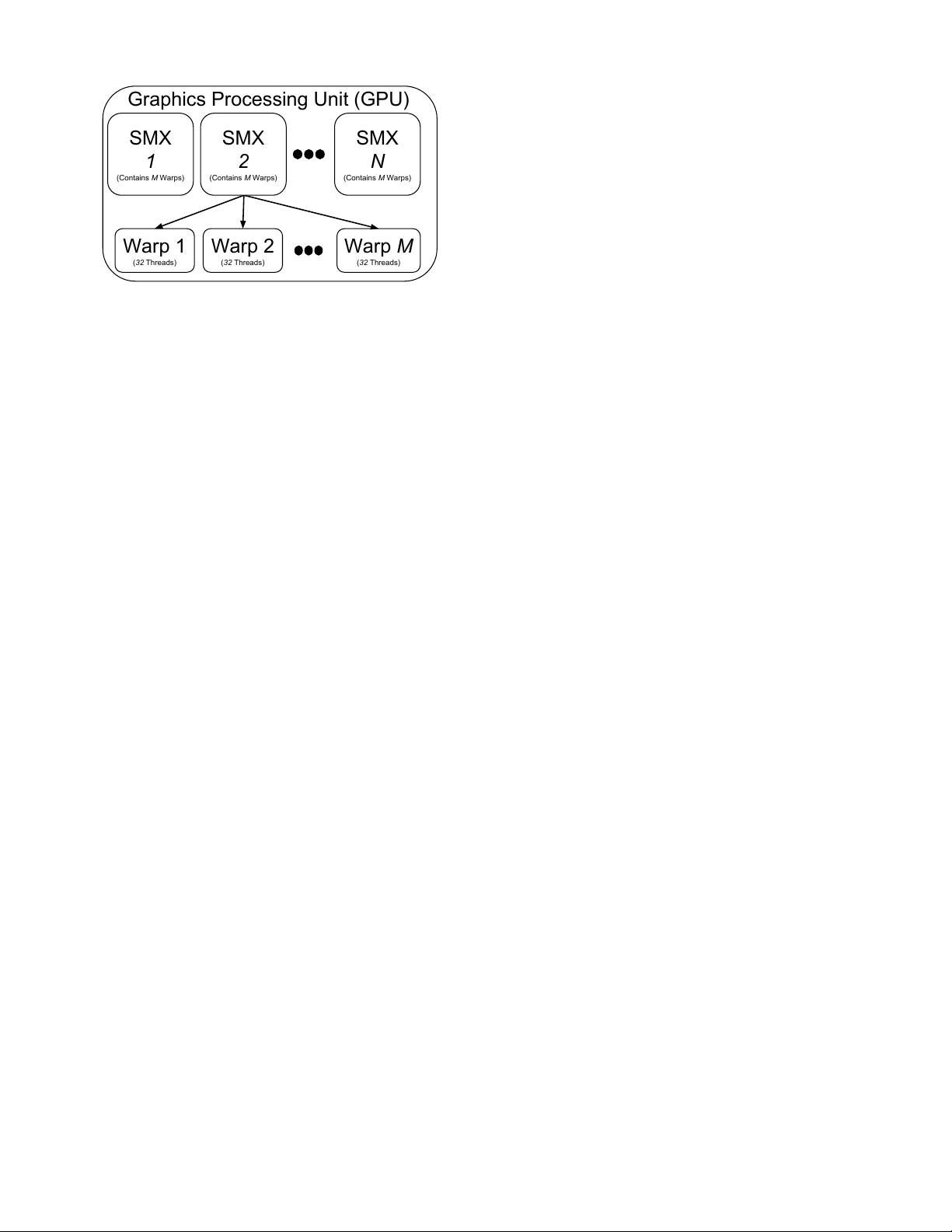

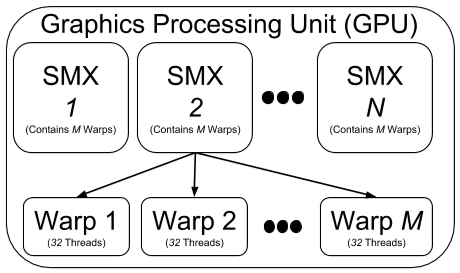

Scaling an application down to the level of concurrency available within a single warp can provide the highest level of thread utilization for some applications.

MTC workloads that send only single tasks, or small numbers of large tasks, to accelerator devices observe near-serialized performance, and leave a significant portion of device processor capability unused.

Instead of an application launching hundreds or thousands of threads, which could quickly become more challenging to manage, GeMTC AppKernels are optimized at the warp level, meaning the programmer and AppKernel logic are responsible for managing only 32 threads in a given application.
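To illustrate what managing only 32 threads means in practice, the sketch below models in plain Python how a single warp might divide a loop over `n_items` elements. It is an assumption-laden illustration rather than GeMTC code: each of the 32 lanes takes every 32nd element, mirroring the common CUDA idiom of a loop that starts at the lane index and strides by the warp size. The function names are hypothetical.

```python
WARP_SIZE = 32  # threads per warp on NVIDIA GPUs

def lane_indices(lane_id, n_items, warp_size=WARP_SIZE):
    """Indices handled by one lane when the warp strides over n_items."""
    return list(range(lane_id, n_items, warp_size))

def warp_partition(n_items, warp_size=WARP_SIZE):
    """Work assignment for every lane in the warp."""
    return [lane_indices(lane, n_items, warp_size) for lane in range(warp_size)]
```

Every element is visited exactly once, and no lane handles more than one element beyond any other, which keeps the per-thread bookkeeping inside an AppKernel small.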

The Pegasus project runs at the hypervisor level and promotes GPU sharing across virtual machines, while including a custom DomA scheduler for GPU task scheduling.

Each task enqueued requires at least two device allocations: the first for the task itself and the second for parameters and results.

The main bottleneck for obtaining high task throughput through GeMTC is the latency associated with writing a task to GPU DRAM.

If the compiler is able to generate device code and parallel instructions, the developer may opt to write sequential code and benefit from accelerator speedup.

The precompiled MD AppKernel already knows how to pack and unpack the function parameters from memory. Once the function completes, the result is packed into memory and placed on the result queue.
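Packing parameters into a flat buffer and unpacking them on the other side can be sketched with Python's `struct` module. The layout below (two 32-bit integers and one 64-bit float) and the parameter names are purely hypothetical; the actual MD AppKernel layout is not given in the text.

```python
import struct

# Hypothetical layout: two 32-bit ints followed by one 64-bit float,
# little-endian with no padding. Not the real MD AppKernel layout.
PARAM_FORMAT = "<iid"

def pack_params(n_particles, n_dims, dt):
    """Serialize the task parameters into one contiguous byte buffer."""
    return struct.pack(PARAM_FORMAT, n_particles, n_dims, dt)

def unpack_params(buffer):
    """Recover (n_particles, n_dims, dt) from a packed buffer."""
    return struct.unpack(PARAM_FORMAT, buffer)
```

Because both sides agree on the layout ahead of time, the buffer carries no type information, which is what allows a precompiled kernel to read its arguments directly from memory.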

With traditional CUDA programming models, the current best practice is to allocate all memory needed by an application at launch time and then manually manage and reuse this memory as needed.
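The allocate-once, reuse-many-times pattern can be sketched as a fixed-size block pool. This is a host-side Python illustration under simplifying assumptions (equal-sized blocks, a `bytearray` standing in for one device allocation made at launch); `BlockPool` is a hypothetical name, not part of CUDA or GeMTC.

```python
class BlockPool:
    """Fixed-size block pool carved out of one up-front allocation."""

    def __init__(self, block_size, n_blocks):
        self.block_size = block_size
        # One large buffer stands in for a single allocation made at launch.
        self.buffer = bytearray(block_size * n_blocks)
        self.free_offsets = [i * block_size for i in range(n_blocks)]

    def acquire(self):
        """Hand out the offset of a free block without allocating anything new."""
        if not self.free_offsets:
            raise MemoryError("pool exhausted")
        return self.free_offsets.pop()

    def release(self, offset):
        """Return a block to the pool so a later task can reuse it."""
        self.free_offsets.append(offset)

    def view(self, offset):
        """Writable view of the block that starts at offset."""
        return memoryview(self.buffer)[offset:offset + self.block_size]
```

After construction, `acquire` and `release` only move offsets on and off a free list, so no further calls to the underlying allocator are needed during the run.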

While the walltime of MDLite successfully decreases as more threads are added, the speedup obtained is significantly less than ideal after 8 threads are active within a single warp.
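For reference, the standard definitions of speedup and parallel efficiency (stated here as background, not quoted from the paper) make "less than ideal" precise. Writing $T(n)$ for the walltime with $n$ active threads:

$$
S(n) = \frac{T(1)}{T(n)}, \qquad E(n) = \frac{S(n)}{n}
$$

Ideal scaling corresponds to $S(n) = n$ and $E(n) = 1$. The observation above amounts to $E(n)$ dropping well below 1 once $n > 8$, even though $T(n)$ itself keeps decreasing.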