265 citations

...[235] were the first to introduce the concept of cache freshness in opportunistic mobile networks....

[...]

213 citations

141 citations

...RADON, Give2Get, SRed and Li and Cao’s scheme are easy to operate, while MobiID and IROMAN are of high reliability thanks to the exploring of social community and group strength....

[...]

...Li and Cao [79] presented a similar scheme to mitigate routing misbehavior through detecting packet dropping....

[...]

...Recently, Cao et al. [100], for the first time, proposed a scheme to efficiently maintain the cache freshness by organizing the caching nodes as a tree structure during data access....

[...]

51 citations

45 citations

...Furthermore, since the cached data may be refreshed periodically and is subject to expiration, a novel scheme was proposed in [24] to efficiently maintain freshness of the cached data....

[...]

113 citations

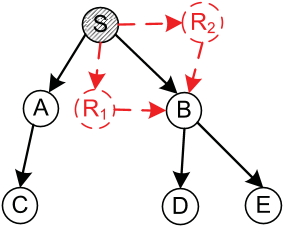

...𝑆 only replicates the data update to 𝑅𝑘 if 𝑈𝑅𝑘 ≥ 𝑈𝑅𝑗 for ∀𝑗 ∈ [0, 𝑘), and 𝑆 itself is considered as 𝑅0....
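The replication condition quoted above can be read as a running-maximum test over the relays in the order 𝑆 meets them. The following is a minimal sketch under that reading, not the authors' implementation: the function name `select_relays`, the list-based input, and the example utility values are all hypothetical, and how the utilities 𝑈 are actually computed is outside the excerpt.

```python
from typing import List


def select_relays(source_utility: float, relay_utilities: List[float]) -> List[int]:
    """Return the 1-based indices k of relays R_k that receive the data update.

    Assumption: relay_utilities[k - 1] holds U_{R_k}, listed in the order the
    source S encounters the relays, and source_utility plays the role of
    U_{R_0}, since S itself is treated as R_0.
    """
    best_so_far = source_utility  # max of U_{R_j} over j in [0, k)
    chosen = []
    for k, utility in enumerate(relay_utilities, start=1):
        # Replicate only if R_k is at least as good as every earlier candidate.
        if utility >= best_so_far:
            chosen.append(k)
            best_so_far = utility
    return chosen


if __name__ == "__main__":
    # Hypothetical utilities, for illustration only.
    print(select_relays(0.3, [0.2, 0.5, 0.5, 0.4, 0.9]))
```

Tracking a single running maximum is enough here because a relay that fails the test has, by definition, a utility below the current maximum and so cannot change it.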

[...]

101 citations

...For example, news from CNN or the New York Times may be refreshed frequently, and smaller Δ (e.g., 1 hour) should be applied accordingly....

[...]

...Currently, research efforts have been focusing on determining the appropriate caching locations [27], [19], [17] or the optimal caching policies for minimizing the data access delay [28], [22]....

[...]

100 citations

...For example, news from CNN or the New York Times may be refreshed frequently, and smaller Δ (e.g., 1 hour) should be applied accordingly....

[...]

...Currently, research efforts have been focusing on determining the appropriate caching locations [27], [19], [17] or the optimal caching policies for minimizing the data access delay [28], [22]....

[...]

88 citations

...For example, news from CNN or the New York Times may be refreshed frequently, and smaller Δ (e.g., 1 hour) should be applied accordingly....

[...]

...Currently, research efforts have been focusing on determining the appropriate caching locations [27], [19], [17] or the optimal caching policies for minimizing the data access delay [28], [22]....

[...]

85 citations

...However, there are cases where an application might have specific requirements on Δ and 𝑝 to achieve sufficient levels of data freshness....

[...]

Due to the intermittent network connectivity in opportunistic mobile networks, data is forwarded in a “carry-and-forward” manner.

Due to possible version inconsistency among different data copies cached in the DAT, opportunistic refreshing may have some side-effects on cache freshness.

Their results show that up to a boundary on the order of several minutes, the decay of the CCDF is well approximated as exponential.
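If the exponential approximation is taken at face value, the chance that two nodes meet within a window Δ has a simple closed form. The sketch below illustrates this under stated assumptions: inter-contact times for a single node pair are exponential with a hypothetical rate parameter `rate`, and 𝑝 is read as the required probability that a contact (and hence a refresh) occurs within Δ. Neither the function names nor this reading of 𝑝 come from the source.

```python
import math


def contact_probability(rate: float, delta: float) -> float:
    """P(contact within delta) = 1 - exp(-rate * delta) for exponential inter-contact times."""
    if rate < 0 or delta < 0:
        raise ValueError("rate and delta must be non-negative")
    # expm1 keeps precision when rate * delta is very small.
    return -math.expm1(-rate * delta)


def required_rate(p: float, delta: float) -> float:
    """Smallest contact rate for which a contact occurs within delta with probability p."""
    if not 0 <= p < 1 or delta <= 0:
        raise ValueError("need 0 <= p < 1 and delta > 0")
    # Solve 1 - exp(-rate * delta) = p for rate.
    return -math.log1p(-p) / delta


if __name__ == "__main__":
    # Hypothetical requirement: refresh within 1 hour with probability 0.9.
    rate = required_rate(0.9, 1.0)
    print(rate, contact_probability(rate, 1.0))
```

Under this model, a smaller Δ or a larger 𝑝 both raise the contact rate needed, which is consistent with the observation that freshness becomes constrained by the network's contact capability when Δ is small.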

Since different values of 𝑝 do not affect the calculation of utilities of data updates, such an increase in refreshing overhead is relatively smaller than that caused by decreasing Δ.

Section IV-C shows that the refreshing patterns of web RSS data are temporally skewed, such that the majority of data updates are generated during specific time periods of a day.

The performance of their proposed scheme in maintaining cache freshness is evaluated by extensive trace-driven simulations on realistic mobile traces.

From Figure 12, the authors observe that when the value of Δ is small, cache freshness is mainly constrained by the network's contact capability, and the actual refreshing delay is much higher than the required Δ. This inability to satisfy the cache freshness requirements leads to more replications of data updates, as described in Section V-B, and makes caching nodes more likely to perform opportunistic refreshing.