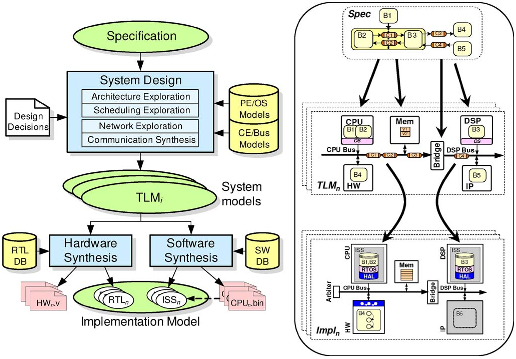

...Furthermore, compared to lower levels where refinement is often reduced to producing a simple netlist, generating an implementation of system-level computation and communication decisions is a nontrivial task that requires significant coding effort....

[...]

...Furthermore, based on such observations, synergies between different approaches can be explored, and corresponding interfaces between different tools can be defined and established in the future....

[...]

Nevertheless, no single approach currently provides a complete solution, and further research in many areas is required. In the future, the authors plan to investigate such interoperability issues using combinations of different tools presented in this paper. On the other hand, based on the common concepts and principles identified in this classification, it should be possible to define interfaces such that different point tools can be combined into an overall ESL design environment. Last, but not least, the authors would like to thank the reviewers for their helpful comments and suggestions in making this paper a much stronger contribution.

Constant methods called guards (e.g., check) can be used to test values of internal variables and data in the input channels.

Finding a good application-to-architecture mapping is carried out during a two-phase automatic architecture exploration step consisting of static and dynamic (i.e., simulative) exploration methods using a TAPM MoP.

Due to the highly automated design flow of Daedalus, all DSE and prototyping work was performed in only a short amount of time, five days in total.

Examples of simulation-based MoPs for different classes of timing granularity are cycle-accurate performance models (CAPMs), instruction-set-accurate performance models (ISAPMs), or task-accurate performance models (TAPMs) [12].

Lower level flows can be supplied either in the form of corresponding synthesis tools or by providing predesigned intellectual property (IP) components to be plugged into the system architecture.

The FSM controlling the communication behavior of the SysteMoC actor checks for available input data (e.g., #i1 ≥ 1) and available space on the output channels (e.g., #o1 ≥ 1) to store results.
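The firing condition described above can be sketched in a few lines of Python. This is a minimal illustration, not SysteMoC code (SysteMoC itself is a C++/SystemC library): the class name `Actor`, the capacity parameter, the doubling action, and the body of the guard `check` are all hypothetical. The sketch only shows the pattern of testing #i1 ≥ 1, #o1 ≥ 1, and a side-effect-free guard before a transition is taken.

```python
from collections import deque


class Actor:
    """Hypothetical actor with one input port i1 and one output port o1."""

    def __init__(self, i1, o1, o1_capacity):
        self.i1 = i1                    # input channel (FIFO of tokens)
        self.o1 = o1                    # output channel (FIFO of tokens)
        self.o1_capacity = o1_capacity  # assumed bound on the output FIFO

    def check(self):
        # Guard: reads the head of the input channel without consuming it.
        # The predicate itself (non-negative token) is an arbitrary example.
        return self.i1[0] >= 0

    def can_fire(self):
        has_input = len(self.i1) >= 1                      # #i1 >= 1
        has_space = self.o1_capacity - len(self.o1) >= 1   # #o1 >= 1
        # The guard is evaluated only once a token is known to be present.
        return has_input and has_space and self.check()

    def fire(self):
        """Take the transition if enabled; return whether it fired."""
        if not self.can_fire():
            return False
        token = self.i1.popleft()       # consume one token
        self.o1.append(token * 2)       # action: produce one token
        return True


i1, o1 = deque([3, -1]), deque()
actor = Actor(i1, o1, o1_capacity=1)
first = actor.fire()    # enabled: input present, space free, guard holds
second = actor.fire()   # blocked: the output channel is full
o1_after_first = list(o1)
o1.clear()              # a downstream consumer drains the channel
third = actor.fire()    # blocked: the guard rejects the token -1
```

Keeping the enabling test separate from the action mirrors the split between the communication FSM and the actor's functionality that the text describes.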

An important observation that can be made from Fig. 1 is that, at the RT level, hardware and software worlds unite again, both feeding into (traditional) logic design processes down to the final manufacturing output.

Due to the complexity of many streaming applications, they often cannot be modeled as static dataflow graphs [30], [31], where consumption and production rates are known at compile time.
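To make concrete what known rates buy at compile time, the sketch below solves the standard balance equations of a static (synchronous) dataflow graph to obtain the number of firings per actor in one periodic schedule. The technique is textbook SDF analysis rather than anything specific to the tools surveyed here, and the function name, edge encoding, and example graph are assumptions chosen for illustration. For dynamic actors, whose rates depend on data, no such vector can be computed ahead of time.

```python
from fractions import Fraction
from functools import reduce
from math import gcd


def repetition_vector(actors, edges):
    """Solve the balance equations of a connected static dataflow graph.

    edges: list of (src, tokens_produced, dst, tokens_consumed).
    Returns the smallest positive integer firing counts, or raises
    ValueError if the rates are inconsistent.
    """
    rate = {actors[0]: Fraction(1)}  # fix one actor, propagate to the rest
    changed = True
    while changed:
        changed = False
        for src, produced, dst, consumed in edges:
            if src in rate and dst not in rate:
                rate[dst] = rate[src] * produced / consumed
                changed = True
            elif dst in rate and src not in rate:
                rate[src] = rate[dst] * consumed / produced
                changed = True
            elif src in rate and dst in rate:
                # Balance: tokens produced must equal tokens consumed.
                if rate[src] * produced != rate[dst] * consumed:
                    raise ValueError("inconsistent rates: no periodic schedule")
    # Scale the rational solution to the smallest integer one.
    scale = reduce(lambda a, b: a * b // gcd(a, b),
                   (r.denominator for r in rate.values()), 1)
    return {a: int(r * scale) for a, r in rate.items()}


# Example graph: A produces 2 tokens per firing, B consumes 3 per firing;
# B produces 1 token per firing, C consumes 2 per firing.
schedule = repetition_vector(["A", "B", "C"],
                             [("A", 2, "B", 3), ("B", 1, "C", 2)])
```

In this example, three firings of A, two of B, and one of C return every channel to its initial fill level.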

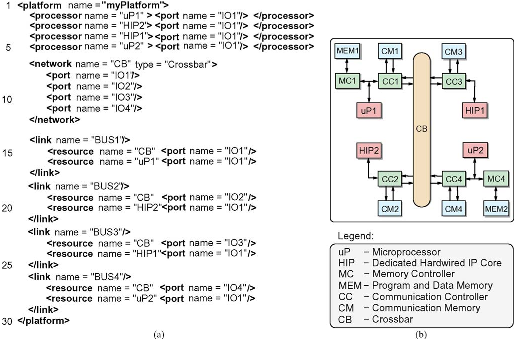

The complete cellphone specification consists of about 16 000 lines of SpecC code and is refined down to 30 000 lines in the final TLM.

These components include a variety of programmable processors, dedicated hardwired IP cores, memories, and interconnects, thereby allowing the implementation of a wide range of heterogeneous MPSoC platforms.

KPNgen [23] allows for automatically converting a sequential (SANLP) behavioral specification written in C into a concurrent KPN [22] specification.
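The idea behind such a conversion can be illustrated by hand on a toy loop. The sketch below is not KPNgen output and does not use its analysis: the function names and the two-statement loop body are hypothetical. It only shows a sequential loop split into two concurrent processes that communicate through a FIFO with blocking reads, which is the defining property of a KPN.

```python
import queue
import threading


def sequential(n):
    """Sequential reference: two dependent statements in one loop."""
    out = []
    for i in range(n):
        x = i * i          # statement S1
        out.append(x + 1)  # statement S2 depends on S1
    return out


def as_process_network(n):
    """Same computation with S1 and S2 as separate processes."""
    fifo = queue.Queue()   # unbounded FIFO channel between the processes
    out = []

    def producer():
        for i in range(n):
            fifo.put(i * i)             # S1 writes its result to the channel

    def consumer():
        for _ in range(n):
            out.append(fifo.get() + 1)  # S2 blocks until a token arrives

    threads = [threading.Thread(target=producer),
               threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return out


reference = sequential(5)
networked = as_process_network(5)
```

Because the consumer only proceeds when a token is available, the result does not depend on how the two threads are interleaved, so `networked` matches `reference`.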

Once this selection has been made, the last step of the proposed ESL design flow is the rapid prototyping of the corresponding FPGA-based implementation in terms of model refinement.