Abstract— Accurate localization is an important part of

successful autonomous driving. Recent studies suggest that when

using map-based localization methods, the representation and

layout of real-world phenomena within the prebuilt map is a

source of error. To date, the investigations have been limited to

3D point clouds and normal distribution (ND) maps. This paper

explores the potential of using OpenStreetMap (OSM) as a proxy

to estimate vehicle localization error. Specifically, the

experiment uses random forest regression to estimate mean 3D

localization error from map matching using LiDAR scans and

ND maps. Six map evaluation factors were defined for 2D

geographic information in a vector format. Initial results for a

1.2 km path in Shinjuku, Tokyo, show that vehicle localization

error can be estimated with 56.3% model prediction accuracy

with two existing OSM data layers only. When OSM data

quality issues (inconsistency and completeness) were addressed,

the model prediction accuracy was improved to 73.1%.

I. INTRODUCTION

Accurate localization is a crucial requirement of successful

autonomous driving, allowing self-driving vehicles to navigate

safely. Major sources of positioning information are Global

Navigation Satellite Systems (GNSS) such as GPS,

GLONASS, Galileo or BeiDou. The positional accuracy using

just GNSS, however, can exhibit errors of tens of meters

within dense urban environments due to buildings blocking

and reflecting the satellite signal [1]. To address this issue,

self-driving vehicles use vision and range-based sensors to

determine where they are located. One such method utilizes

light detection and ranging (LiDAR) sensors for map

matching, whereby the input scan is registered against a

prebuilt map to obtain a position. Despite the advancement in

LiDAR sensor technology, map-based localization can still

suffer from error, arising from sources such as the input scan

or the matching algorithm.

To improve map-based localization accuracy, research so

far has focused on refining the map matching algorithms [2] as

well as producing increasingly accurate High Definition maps

[3]. In contrast, there is a lack of research on the impact of the

map itself, and the representation of the environment, as a

source of localization error. For example, within a tunnel or

urban canyon environment, even with a locally and globally

accurate map, the lack of longitudinal features in the map can

cause localization error in the moving direction. To the authors’ knowledge, only one set of studies has evaluated the relationship between the representation within the map and localization accuracy [4], [5].

To date, the evaluation of a map’s capability for vehicle

localization has been limited to the 3D point cloud format.


There are several challenges with this approach. Firstly,

localization error can only be estimated for an area if a prebuilt

point cloud map is available. If not, data must be collected,

which can be costly and time-consuming. These 3D point

cloud maps also require substantial effort to maintain and keep

up to date. Secondly, the large size of the point cloud data can

make it challenging to manage. For example, a 300×300 m

area may consist of over 250 million points. Coupled with the

unstructured nature of the data, this leads to a large amount of

pre-processing, processing, and subsequent management.

Considering these challenges, there is a need to explore

whether other mapping formats and sources of data, such as

geographic information, can offer an alternative approach for

estimating vehicle localization error.

This paper aims to investigate whether it is possible to

estimate vehicle localization error using OpenStreetMap

(OSM) as a proxy. OpenStreetMap is a freely editable 2D map

of the world. The main objective is to leverage OSM’s public

availability, wide coverage, and comparatively ‘light’ data to

enable a more efficient alternative for estimating localization

error. This work builds upon Javanmardi et al.’s [4] format-

agnostic map evaluation criteria, by defining six new factors

specific for 2D geographic information (as used by

OpenStreetMap). The resulting work can enable the estimation

of localization error without requiring the collection of data to

create a prebuilt map. It can help identify and highlight areas

with high localization error – either as the result of the map

itself, or the real-world environment. Further, the estimated

error model can be used in conjunction with other sensor

information to improve the accuracy of localization [6].

The remainder of this paper is structured as follows: in

Section II, a short overview of vehicle localization and its

sources of error is presented, followed by an overview of

geographic information and OpenStreetMap. Section III

describes the datasets used in this study, as well as the

formulation of the six map evaluation factors. The results of

the experiment are presented in Section IV. Following a

discussion on the capability of OSM, and the advantages and

challenges of the approach, the final Section presents the

conclusions and future work.

II. BACKGROUND

A. Vehicle localization

Localization is the estimation of where a vehicle is situated

in the world. While Global Navigation Satellite Systems can

provide a position with meter-level accuracy, this is

insufficient for the application of autonomous driving. One meter can be the difference between driving safely on the road in the correct lane and driving along the pavement. Centimeter-level positional accuracy is therefore required for successful autonomous driving. To achieve this, autonomous vehicles are equipped with a myriad of sensors, including LiDAR, radar, and onboard cameras.

Evaluating the Capability of OpenStreetMap for Estimating Vehicle Localization Error

Kelvin Wong, Ehsan Javanmardi, Mahdi Javanmardi, Yanlei Gu and Shunsuke Kamijo

Kelvin Wong, Ehsan Javanmardi, Mahdi Javanmardi, Yanlei Gu and Shunsuke Kamijo are with The Institute of Industrial Science (IIS), The University of Tokyo, Tokyo, 153-8505 Japan (Tel: +81-(0)3-5452-6273; e-mail: {kelvinwong, ehsan, mahdi, guyanlei, kamijo}@kmj.iis.u-tokyo.ac.jp).

One approach for localization is LiDAR map matching.

For this, a map is prebuilt using a high-end mobile mapping

system. Subsequently, the LiDAR scan from an autonomous

vehicle can then be used to match against the prebuilt map to

obtain a position. Despite the importance of accurate vehicle

self-localization, there is yet to be an international standard for

required accuracy. As guidance, the Japanese government’s

Cross-ministerial Strategic Innovation Promotion (SIP)

Program recommends an accuracy of less than 25 cm. This

value is based on satellite image resolution and the physical

width of a car tire.

B. Sources of localization error for LiDAR map matching

Sources of localization error for map matching using

LiDAR can be divided broadly into four categories: 1) input scan; 2) matching algorithm; 3) dynamic phenomena; and 4) prebuilt map.

Firstly, errors from the input scan may be dictated by the choice of laser scanner. For example, the lower-end Velodyne VLP-16 has 16 laser transmitting and receiving sensors with a range of up to 100 m. In contrast, the latest model (VLS-128) has 128 sensors and up to 300 m range, allowing the capture of more detail in a wider area, thus enabling more accurate map matching. In addition, distortion can be introduced during the capture phase when in motion: a vehicle traveling at 20 m/s with a scanner rotating at 10 Hz moves 2 m during each 360-degree rotation, resulting in a 2 m distortion.

Errors can also be introduced in the post-processing where the

input scan is required to be downsampled for the matching

algorithm. While this reduces noise within the data, it can also

remove features and details useful for map matching.
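The motion-distortion figure quoted above follows from dividing the vehicle speed by the scan frequency. The short sketch below reproduces that arithmetic; the function name is hypothetical and the model deliberately ignores vehicle rotation and any motion compensation.

```python
def sweep_displacement(speed_mps: float, scan_rate_hz: float) -> float:
    """Distance travelled by the vehicle during one full 360-degree sweep."""
    if scan_rate_hz <= 0:
        raise ValueError("scan_rate_hz must be positive")
    return speed_mps / scan_rate_hz


# The example from the text: 20 m/s with a 10 Hz scanner.
print(sweep_displacement(20.0, 10.0))  # 2.0
```

Under the same simplification, lowering the speed or raising the scan frequency reduces the displacement proportionally.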

Secondly, the choice of the matching algorithm can be a

source of error. As matching is an optimization problem, some algorithms are designed to be more robust to local optima, while others are more immune to initialization error [7]. In general, the Normal Distributions Transform (NDT) is more resilient to sensor noise and sampling resolution than other algorithms such as Iterative Closest Point (ICP), but suffers from slower convergence [8].

Thirdly, dynamic phenomena in the environment can

contribute error. During the mapping phase, if a large vehicle

such as a lorry or a bus blocks the laser scanner, then a large

portion of the map will be missing. During the localization

phase, some phenomena or features may have moved or

shifted. For example, parked cars found in the prebuilt map

may have since moved or buildings may have been built or

demolished. Furthermore, seasonal changes such as the

presence and absence of leaves on trees between the mapping

and localization phases can introduce error.

Lastly, the prebuilt map itself is a source of error. As

mentioned earlier, having a highly accurate map does not

always result in a low localization error. In some environments, e.g., tunnels, urban canyons, and open spaces, map matching is not a suitable localization method. In these cases, it is the physical attributes of the phenomena and their representation on the map that are the source of localization error. This is discussed further in the next section.

C. Quantifying the sources of map-derived errors

To quantify the sources of map-derived errors, Javanmardi

et al. [4] defined four criteria to evaluate a map’s capability for

vehicle localization: 1) feature sufficiency; 2) layout; 3) local similarity; and 4) representation quality.

Feature sufficiency describes the number of mapped

phenomena in the vicinity which can be used for localization.

For example, within urban areas, buildings and other built

structures provide lots of features to match against, resulting

in more accurate localization. An insufficient number of

features in the vicinity (such as found in open rural areas) may

result in lower localization accuracy.

The layout is also an important consideration, as it is not

simply the number of features in the vicinity, but also how they

are arranged. Even if there are lots of high-quality features

nearby, if they are all concentrated in one direction, the quality

of the matching degrades.

To further compound the issue, in certain scenarios, even

with sufficient and well-distributed features, accurate

localization remains difficult due to local similarity. In these

cases, there may be geometrically similar features on either side of the vehicle, making it difficult during the optimization process to determine the position in the traveling direction.

Lastly, representation quality describes how well a map

feature represents its real-world counterpart. In theory, the

closer the map is to reality, the more accurate the prediction. It

is important to note, however, that this criterion is slightly

different from the level of abstraction or generalization of the

map. For example, a flat wall can be highly abstracted as a

single line but still have high representation quality.

D. OpenStreetMap

First introduced in 2004, OpenStreetMap is an open source

collaborative project providing user-generated street maps of

the world. As of March 2019, the project has over 5.2 million contributors who create and edit map data using GPS traces, local knowledge, and aerial imagery. In the past,

commercial and governmental mapping organizations have

also donated data towards the project.

Features added to OSM are modeled and stored as tagged

geometric primitives (points, lines, and polygons). For

example, a road may be represented as a line vector geometry

with tags such as ‘highway = primary; name:en = Ome Kaido;

oneway = yes’. Despite OSM operating on a free-tagging

system (and thus allowing a theoretically unlimited number of

attributes and tag combinations), there is a set of commonly

used tags for primary features which operate as an ‘informal

standard’ [9].

As with any user-generated content, OSM attracts

concerns about data quality and credibility. Extensive research

has been conducted on assessing the quality of OSM datasets,

including evaluating the completeness of the data. For

example, Barrington-Leigh and Millard-Ball [10] estimate the

global OSM street layer to be ∼83% complete, with more than

40% of countries having fully mapped networks. When

comparing the number of OSM tags in Japan to official

government counts (Table I), ‘completeness’ varies with

different features [11]. While fire departments, police stations

and post offices are well represented, temples and shrines are

underrepresented. Despite this variance in completeness, OSM

still represents a viable and useful source of geospatial data. In

fact, in certain areas, OSM is more complete and more

accurate (for both location and semantic information) than

corresponding proprietary datasets [12], [13]. As mapping data

are abstract representations of the world, it can also be argued

that a map can never truly be complete. Instead, mapping data

should be collected as fit-for-purpose, and specific for the

required application. OSM is successfully used in a wide

variety of practical and scientific applications from different

domains, demonstrating the usefulness of crowdsourced

mapping data.

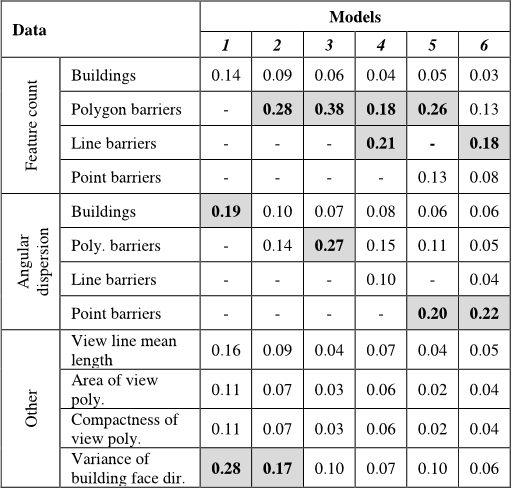

TABLE I. COVERAGE OF OSM TAGS IN JAPAN, 2017 [11]

| Tag | Actual count | Count in OSM | Coverage |
|---|---:|---:|---:|
| School | 36,024 | 45,568 | 126.5% (a) |
| Fire department | 5,604 | 5,028 | 89.7% |
| Police station | 15,034 | 13,152 | 87.5% |
| Post office | 24,052 | 20,795 | 86.5% |
| Traffic lights | 191,770 | 108,498 | 56.6% |
| Convenience store | 55,176 | 30,710 | 55.7% |
| Bank | 13,595 | 7,077 | 52.1% |
| Gas station | 32,333 | 8,944 | 27.7% |
| Pharmacy | 58,326 | 7,842 | 13.4% |
| Shrine | 88,281 | 10,292 | 11.7% |
| Temple | 85,045 | 9,610 | 11.3% |
| Postal post | 181,523 | 7,522 | 4.1% |
| Vending machine | 3,648,600 | 10,311 | 0.3% |

a. Note that the coverage of schools is higher than 100%. This is the result of schools comprising multiple buildings and therefore having more than one polygon or point represented within OSM.

E. Related work

Several studies in the literature address the estimation of vehicle localization error. Akai et al. [6] estimate the error

of 3D NDT scan matching in a pre-experiment, which was

then subsequently used in the localization phase for pose

correction. Javanmardi et al. [4] proposed ten map evaluation

factors to quantify the capability of normal distribution (ND)

maps for vehicle self-localization, allowing error to be

modelled with an RMSE and R² of 0.52 m and 0.788

respectively. The subsequent error model was then used to

dynamically determine the NDT map resolution, resulting in a

reduction of map size by 32.4% while keeping mean error

within 0.141 m. For both studies [4], [6], the estimation of

localization error used 3D point cloud and ND maps. As far as

the authors are aware, no previous study has investigated the

use of OpenStreetMap as a proxy for the estimation of

autonomous vehicle localization error.

III. METHOD

A. Study area

The study area is Shinjuku, Tokyo, Japan. The architecture

is relatively heterogeneous, with buildings ranging from low-

rise shops to multi-story structures. Similarly, roads vary from

single narrow lanes to wide multi-lane carriageways. The

experiment path is 1.2 km and is shown in Figure 1.

Figure 1. Experiment path in Shinjuku, Tokyo, Japan.

B. Mapping data

Mapping data from OSM was extracted from the

geofabrik.de server. For the study area, 49 data layers

(referencing 23 tags) were available. Note that each tag can be

represented by a maximum of three layers, one for each

geometry primitive (point, line, polygon). Table II shows a

summary of all the tags found in the study area and their

feature counts.

TABLE II. OSM TAGS AND FEATURE COUNT IN THE STUDY AREA

| OSM tag | Feature count | OSM tag | Feature count |
|---|---:|---|---:|
| aeroway | 12 | natural | 595 |
| amenity | 2,109 | office | 35 |
| barrier | 675 | place | 4 |
| boundary | 16 | power | 20 |
| building | 10,723 | public_transport | 114 |
| craft | 2 | railway | 485 |
| emergency | 8 | route | 100 |
| highway | 4,021 | shop | 580 |
| historic | 7 | tourism | 168 |
| landuse | 202 | unknown | 598 |
| leisure | 87 | waterway | 2 |
| man_made | 12 | | |

Note that OSM tags can represent geometric or semantic

features. For example, features labeled as ‘building’ may

represent the physical walls of the structure, whereas a

‘boundary’ feature may represent an administrative region,

e.g., ward boundary. For this experiment, the aim is to model

what the LiDAR scanner ‘sees’ during the localization phase.

As such, only the geometric features from OSM were useful.

To model the surrounding environment, four OSM data

layers were used: 1) building_polygon; 2) barrier_polygon; 3) barrier_line; and 4) barrier_point. The buildings layer was selected because buildings represent the most stable feature within the

urban landscape. Buildings, in general, are least affected by

seasonality or time of day (unlike other features such as trees).

For vehicle localization, however, the use of buildings alone

for map matching may be insufficient. For example, on wide

multi-lane roads or within the rural environment, buildings

may not be present or visible to the LiDAR scanner. In these scenarios, roadside features (such as central reservations, guard rails, and pole-like features) become increasingly important. Within OSM, these are classified under the ‘barrier’ tag. Areal features, such as hedges, are classed under

‘barrier_polygon’. Linear features, such as guard rails and

traffic barriers, are classed as ‘barrier_line’. Lastly, pole-like

features (e.g., bollards) are classed as ‘barrier_point’.

The completeness of OSM varies within the study area. For

buildings, the data is 74.3% complete when compared to GSI

Japan building footprints, using area as a crude measure. For

barriers, an authoritative equivalent dataset was not available

for reference. In a visual comparison with aerial imagery, over

half of the hedges and central reservations (polygon barriers)

were missing along the experiment path. There were no line or

point barriers mapped within 20 m of the experiment path

(although they were present in the wider study area).

To mitigate any issues of inconsistency and

incompleteness (as highlighted in Section II.D), an additional

set of ‘completed’ OSM layers was created. Supplementary data (aerial imagery and base map) from ATEC and the Geospatial Information Authority of Japan were used to support the manual digitization. These ‘completed’ layers allowed the capability of OSM to be assessed in a scenario where users had correctly and fully mapped all required features, giving a more robust evaluation that is not hindered by data quality issues. In total, 63

polygon-barriers, 31 line-barriers, and 204 point-barriers were

manually added along the experiment path. The ‘completed’

OSM layers are presented in Figure 2. Table III presents a

summary of all the mapping data used in the study.

TABLE III. SUMMARY OF MAPPING DATA USED

| Layer name | Type | Description | Count |
|---|---|---|---:|
| Original OpenStreetMap layers | | | |
| Building | Polygon | Individual buildings or groups of connected buildings, e.g., apartments, office, cathedral | 10,723 |
| Polygon barriers | Polygon | Areal barriers and obstacles, e.g., hedges, central reservation | 410 |
| Completed OpenStreetMap layers | | | |
| Polygon barriers (completed) | Polygon | Areal barriers and obstacles, manually completed using aerial imagery and external reference maps | 473 |
| Line barriers (completed) | Line | Linear barriers and obstacles, e.g., guard rails. Manually digitized using aerial imagery and external reference maps | 229 |
| Point barriers (completed) | Point | Point barriers and obstacles, e.g., pole-like objects, lamp posts, traffic lights. Manually digitized using aerial imagery and external reference maps | 271 |

Figure 2. Map showing the ‘completed’ OSM layers and experiment path

C. Localization error data

For localization error, data from a previous experiment were used: specifically, the mean 3D localization error from map matching using a LiDAR input scan and a prebuilt normal distribution (ND) map with a 2.0 m grid size. The process of

obtaining the mean 3D localization error is described below.

Firstly, a point cloud map of the area was captured using a

Velodyne VLP-16 mounted on the roof of a vehicle at the

height of 2.45 m. The vehicle speed was limited to 2 m/s, with

a scanner frequency of 20 Hz to mitigate motion distortion.

From this point cloud map, an ND map was generated with a

2.0 m grid size. Next, during the localization phase, a second

scan was obtained with the laser scan range limited to 20 m.

This second scan was then registered to the ND map (NDT

map matching) to obtain a location. Thirdly, to evaluate the

map factors, sample points along the experiment path at 1.0 m

intervals were selected. For each sample point, mean 3D

localization error was calculated by averaging the localization

error from 441 initial guesses at different positions evenly

distributed around the sample point, at 0.2 m intervals within

a range of 2 m. Further details on the experimental set-up and

the creation of the data can be found in [4].
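The figure of 441 initial guesses is consistent with a square 21 × 21 grid of offsets spanning ±2 m at 0.2 m spacing. The sketch below assumes that layout (the text only says the guesses are evenly distributed), and the function names are hypothetical; the per-guess localization error itself would come from the NDT matching described in [4].

```python
def initial_guess_offsets(half_range_m=2.0, step_m=0.2):
    """Offsets (dx, dy) of a square grid centred on the sample point.

    Assumption: the guesses form a regular grid in x and y.
    """
    steps = round(half_range_m / step_m)  # 10 steps on each side of the centre
    axis = [i * step_m for i in range(-steps, steps + 1)]
    return [(dx, dy) for dx in axis for dy in axis]


def mean_error(errors):
    """Mean 3D localization error over all initial guesses of one sample point."""
    return sum(errors) / len(errors)


offsets = initial_guess_offsets()
print(len(offsets))  # 441
```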

D. Formulating map evaluation factors

Javanmardi et al. [4] proposed ten map evaluation factors

to quantify the capability of ND map for vehicle self-

localization. Unlike the four map evaluation criteria, the map

factors were designed specifically to evaluate 3D ND maps


and cannot be easily transferred to another mapping format

without modification. Therefore, six new factors for 2D vector

mapping format were defined, based on Javanmardi et al.’s [4]

map evaluation criteria. Specifically, the focus was on feature

sufficiency of the map and layout of the map. Local similarity

and representation quality were not considered in this study,

due to the high level of abstraction of OSM and the lack of a

higher quality dataset available for comparison.

To model the behavior of the laser scanner and localization

process, two auxiliary layers were produced: view lines and

view polygons. Firstly, for each sample point, 360 view lines,

each 20 m in length, radiating outwards from the center at 1° intervals, were generated (Figure 3a). These lines represent the

laser scanner’s 20 m range. Within the urban environment, the

walls of buildings represent a ‘solid’ and opaque barrier for the

laser scanner. As OSM is 2D, it is assumed that buildings are

infinitely tall, and view lines were clipped where they intersect

with the building layer (Figure 3b). This assumption was based

on the 30° vertical field of view (+/- 15° up and down) of the

Velodyne VLP-16. Mounted at 2.45 m, and with a laser range

of 20 m, the effective vertical view is from 0 to 7.8 m. This

is equivalent to an average two-story building. Considering the

buildings in the experiment area were, at a minimum, three

stories high, this assumption was acceptable. Secondly, from

these clipped view lines, a minimum bounding convex hull

was generated as an areal representation of what the laser

scanner ‘sees’ and is referred to as a view polygon (Figure 3c).

Figure 3. View lines and view polygons.
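The view-line construction and the vertical-coverage estimate can be sketched in a few lines. This is a minimal illustration, not the authors' GIS workflow: building walls are given as hypothetical 2D line segments, angles are measured counter-clockwise from the x-axis rather than as compass azimuths, and all function names are assumptions.

```python
import math


def vertical_coverage(mount_height_m=2.45, half_fov_deg=15.0, range_m=20.0):
    """Lowest and highest heights seen at the given horizontal range."""
    reach = range_m * math.tan(math.radians(half_fov_deg))
    return max(0.0, mount_height_m - reach), mount_height_m + reach


def ray_segment_distance(origin, angle, segment):
    """Distance along a ray to a wall segment, or None if they do not meet."""
    ox, oy = origin
    dx, dy = math.cos(angle), math.sin(angle)
    (x1, y1), (x2, y2) = segment
    ex, ey = x2 - x1, y2 - y1
    denom = dx * ey - dy * ex
    if abs(denom) < 1e-12:
        return None  # ray is parallel to the wall
    t = ((x1 - ox) * ey - (y1 - oy) * ex) / denom  # distance along the ray
    u = ((x1 - ox) * dy - (y1 - oy) * dx) / denom  # position along the wall
    return t if t >= 0 and 0 <= u <= 1 else None


def clipped_view_lines(origin, wall_segments, max_range=20.0, n_lines=360):
    """Return (angle, length, hit) for each view line around the sample point."""
    lines = []
    for k in range(n_lines):
        angle = math.radians(k * 360.0 / n_lines)
        length = max_range
        for segment in wall_segments:
            d = ray_segment_distance(origin, angle, segment)
            if d is not None and d < length:
                length = d  # walls are treated as opaque and infinitely tall
        lines.append((angle, length, length < max_range))
    return lines


# Hypothetical example: one long building wall 10 m east of the sample point.
wall = [((10.0, -50.0), (10.0, 50.0))]
lines = clipped_view_lines((0.0, 0.0), wall)
low, high = vertical_coverage()
print(round(high, 1))  # 7.8
```

Counting the entries whose `hit` flag is true gives the number of view lines that end on a building wall.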

E. Factors for feature sufficiency criterion

1) Feature count

The first factor is the feature count. It is an adaptation of

Javanmardi et al.’s [4] factor, providing a simple count of all

nearby features. However, unlike 3D ND map formats, there

are three geometry primitives for 2D vector mapping: points,

lines, and polygons. Further, the 3D nature of the scanner must

be abstracted in 2D.

To account for the differences between the geometric

representations, feature count was calculated based on the

intersection points between the layer and the view lines or

view polygons.

For buildings, feature count was the total number of view

lines which intersected with the buildings layer. In this

scenario, the intersection points were also the endpoints of the

view lines as they were previously clipped (as described in

Section III.D). At these end points of the view lines, it is

assumed that the sensor cannot ‘see’ any further past the

opaque building walls.

For point barriers, this was a simple count of features

which intersected with (and were therefore within) the view

polygon.

For line barriers, all intersection points between all view

lines and line barriers were counted.

Polygon barriers, unlike buildings, were not considered to

be infinitely tall and opaque as the LiDAR sensor can often

‘see’ over them. Modelling this was not as straightforward, as the intersection between a view line and a polygon barrier is a line. To remedy this, points were generated along the intersection with view lines at 10 cm intervals, i.e., the portion of each view line which overlaps with any polygon barrier was converted into a series of points. The value of 10 cm was chosen as a balance between resolution and computation time, after trialing other values (1 cm, 5 cm, and 25 cm). These generated points were then counted for the

feature count. One point to note is that this method results in

the generation of multiple points per view line, unlike other

layers which may only have single intersection points per

feature. This, however, does not affect the factors, as each

layer is considered separately during the calculation.
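The polygon-barrier count can be illustrated for a single view line as follows. This is a sketch under stated assumptions: the barrier is a simple polygon given as a hypothetical list of vertices, the sample point lies outside it (so crossings pair up as entry and exit), and points are placed from the entry crossing onwards at the chosen spacing. How the original workflow handled interval endpoints is not stated in the text.

```python
import math


def ray_hits(origin, angle, polygon):
    """Sorted distances at which a ray crosses the edges of a polygon."""
    ox, oy = origin
    dx, dy = math.cos(angle), math.sin(angle)
    hits = []
    for (x1, y1), (x2, y2) in zip(polygon, polygon[1:] + polygon[:1]):
        ex, ey = x2 - x1, y2 - y1
        denom = dx * ey - dy * ex
        if abs(denom) < 1e-12:
            continue  # edge is parallel to the ray
        t = ((x1 - ox) * ey - (y1 - oy) * ex) / denom
        u = ((x1 - ox) * dy - (y1 - oy) * dx) / denom
        if t >= 0 and 0 <= u <= 1:
            hits.append(t)
    return sorted(hits)


def polygon_barrier_count(origin, angle, polygon, line_length=20.0, step=0.1):
    """Number of points generated where one view line overlaps a barrier."""
    hits = ray_hits(origin, angle, polygon)
    count = 0
    for enter, leave in zip(hits[0::2], hits[1::2]):
        leave = min(leave, line_length)  # respect the clipped view-line length
        if leave >= enter:
            count += int((leave - enter) / step + 1e-9) + 1
    return count


# Hypothetical hedge: a 1 m deep strip starting 5 m east of the sample point.
hedge = [(5.0, -1.0), (6.0, -1.0), (6.0, 1.0), (5.0, 1.0)]
print(polygon_barrier_count((0.0, 0.0), 0.0, hedge))  # 11
```

Summing this count over all view lines of a sample point gives the polygon-barrier feature count under these assumptions.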

F. Factors for the layout of the map

1) Angular dispersion of features

Angular dispersion is a measure of the arrangement of

features around the sample point. Akin to Javanmardi et al.’s

[4] Feature DOP measure (which in turn was inspired by

GDOP in global positioning systems), this factor describes the

uniformity of dispersion and is calculated as follows:

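$$R = \frac{1}{n}\sqrt{\left(\sum_{i=1}^{n} \sin \alpha_i\right)^{2} + \left(\sum_{i=1}^{n} \cos \alpha_i\right)^{2}}$$

where α_i is the azimuth of the i-th feature, in radians, and n is the number of features. The factor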

returns a value between 0 and 1, with a value of 0 indicating a

uniform dispersion and a value of 1 meaning a complete

concentration in one direction. For this factor, the dispersion

of the intersection points between the view lines or view

polygons and the respective layer was calculated.
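One way to compute a dispersion measure with the stated behavior (0 for uniformly spread features, 1 for features concentrated in one direction) is the mean resultant length of the azimuths. The sketch below assumes that interpretation; the function name is hypothetical.

```python
import math


def angular_dispersion(azimuths_rad):
    """Mean resultant length of a set of azimuths given in radians."""
    n = len(azimuths_rad)
    if n == 0:
        raise ValueError("at least one azimuth is required")
    s = sum(math.sin(a) for a in azimuths_rad)
    c = sum(math.cos(a) for a in azimuths_rad)
    return math.hypot(s, c) / n


# Features spread evenly around the sample point give a value close to 0;
# features that all lie in the same direction give a value close to 1.
uniform = [math.radians(k) for k in range(360)]
one_sided = [0.3] * 50
print(angular_dispersion(uniform) < 1e-9)
print(abs(angular_dispersion(one_sided) - 1.0) < 1e-12)
```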

2) View line mean length

For each sample point, the mean length of all view lines that intersected with the building layer was calculated. Non-intersecting view lines were not considered as they would skew the metric.

3) Area of view polygon

This is the area of the view polygon. It represents the

theoretical area that the laser scanner can ‘see’. A small area

suggests that there are lots of building features in the vicinity

to localize against.
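The last two layout factors can be sketched from a list of clipped view lines. The representation of a view line as an (angle, length, hit) tuple and all function names are assumptions made for illustration; the convex hull uses Andrew's monotone chain and the area uses the shoelace formula, standing in for whatever GIS tools were used in the study.

```python
import math


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices counter-clockwise."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    def half_hull(seq):
        hull = []
        for p in seq:
            while len(hull) >= 2 and cross(hull[-2], hull[-1], p) <= 0:
                hull.pop()
            hull.append(p)
        return hull

    lower = half_hull(pts)
    upper = half_hull(reversed(pts))
    return lower[:-1] + upper[:-1]


def polygon_area(vertices):
    """Shoelace formula for a simple polygon."""
    total = 0.0
    for (x1, y1), (x2, y2) in zip(vertices, vertices[1:] + vertices[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def view_polygon_area(origin, view_lines):
    """Area of the convex hull of the view-line endpoints."""
    ox, oy = origin
    endpoints = [(ox + length * math.cos(a), oy + length * math.sin(a))
                 for a, length, _ in view_lines]
    return polygon_area(convex_hull(endpoints))


def mean_intersecting_length(view_lines):
    """Mean length of view lines that hit a building; None if none do."""
    lengths = [length for _, length, hit in view_lines if hit]
    return sum(lengths) / len(lengths) if lengths else None


# With no buildings in range, the view polygon approaches a 20 m circle.
open_lines = [(math.radians(k), 20.0, False) for k in range(360)]
print(round(view_polygon_area((0.0, 0.0), open_lines), 1))
```

In an open area the result approaches the area of a circle of radius 20 m, which is the upper bound of this factor for a 20 m scan range.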

Figure 3 key: sample point; OSM buildings; generated view lines (20 m); clipped view lines; view polygon. Panels: (a) generated view lines; (b) clipped view lines; (c) view polygon.
