14 citations

...These insights would also, in a broader scope, be useful for developing a unified framework under which clusters deployed with other types of application accelerators, such as the PowerXCell 8i [9] and Field Programmable Gate Arrays (FPGAs) [10], can be compared....

[...]

14 citations

...Keywords: FPGA, traversal cache, pointers, speedup. I. INTRODUCTION. Much previous work has shown that field-programmable gate arrays (FPGAs) can achieve order-of-magnitude speedups compared to microprocessors for many important embedded and scientific computing applications [3][4][9]....

[...]

11 citations

10 citations

9 citations

...FPGAs are widely employed as co-processors in personal computers, in the manner of GPUs, or as accelerators in special-purpose systems such as high-capacity network systems (Djordjevic et al., 2009) or high-performance computing (Craven & Athanas, 2007)....

[...]

1,666 citations

...A wide body of research over two decades has repeatedly demonstrated significant performance improvements for certain classes of applications through hardware acceleration in an FPGA [1]....

[...]

401 citations

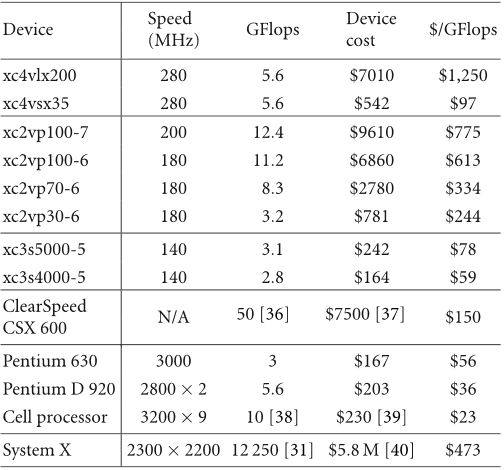

...Cell processor 3200 × 9 10 [38] $230 [39] $23 System X 2300 × 2200 12 250 [31] $5....

[...]

390 citations

341 citations

...Additional data was obtained by extrapolating the results of Underwood’s historical analysis [25] to include the Virtex 4 family....

[...]

...Extrapolated Cost-Performance Comparison. While the larger FPGA devices that are prevalent in computational accelerators do not provide a cost benefit for the double-precision floating-point calculations required by the HPC community, historical trends [25] suggest that FPGA performance is improving at a rate faster than that of processors....

[...]

224 citations

...Due to the prevalence of floating-point arithmetic in HPC applications, research in academia and industry has focused on floating-point hardware designs [14, 15], libraries [16, 17], and development tools [18] to effectively perform floating-point math on FPGAs....

[...]

...[14], representing the fastest double-precision floating-point MAC design, was extrapolated to the largest parts in several Xilinx device families....

[...]

...Dou et al. published one of the highest performance benchmarks of 15.6 GFLOPS by placing 39 floating-point processing elements on a theoretical Xilinx XC2VP125 FPGA [14]....

[...]

The strong suit of FPGAs, however, is low-precision fixed-point or integer arithmetic. No current device families contain dedicated floating-point operators, though dedicated integer multipliers are prevalent.

One of the most efficient multiplication algorithms for large integers utilizes the FFT, treating the number being squared as a long sequence of smaller numbers.
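The idea of treating a large integer as a sequence of smaller numbers and squaring it with an FFT can be sketched as follows. This is a minimal floating-point illustration, not the implementation discussed in the source: the function names `fft` and `fft_square`, the 8-bit digit size, and the recursive radix-2 transform are all assumptions chosen for readability.

```python
import cmath


def fft(a, invert=False):
    """Recursive radix-2 Cooley-Tukey FFT; len(a) must be a power of two."""
    n = len(a)
    if n == 1:
        return list(a)
    even = fft(a[0::2], invert)
    odd = fft(a[1::2], invert)
    sign = 1 if invert else -1
    out = [0j] * n
    for k in range(n // 2):
        w = cmath.exp(sign * 2j * cmath.pi * k / n)  # twiddle factor
        out[k] = even[k] + w * odd[k]
        out[k + n // 2] = even[k] - w * odd[k]
    return out


def fft_square(x, digit_bits=8):
    """Square a non-negative integer by convolving its digits with an FFT.

    digit_bits (hypothetical parameter) is kept small so that rounding
    errors in double precision stay well below 0.5 for moderate sizes.
    """
    mask = (1 << digit_bits) - 1
    digits = []
    while x:
        digits.append(x & mask)  # least-significant digit first
        x >>= digit_bits
    if not digits:
        return 0
    size = 1
    while size < 2 * len(digits):  # room for the full product
        size *= 2
    spectrum = fft(digits + [0] * (size - len(digits)))
    # Pointwise squaring in the frequency domain is a self-convolution
    # of the digit sequence in the time domain.
    coeffs = fft([v * v for v in spectrum], invert=True)
    result = 0
    for i, v in enumerate(coeffs):
        # The unnormalized inverse transform needs a 1/size scale factor.
        # Python's big integers absorb the carries during the addition.
        result += round(v.real / size) << (i * digit_bits)
    return result
```

For very large operands the rounding error of a floating-point transform grows, so the digit size has to shrink or an exact integer transform has to be used instead.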

When comparing HPC architectures, many factors must be weighed, including memory and I/O bandwidth, communication latencies, and peak and sustained performance.

Many HPC applications and benchmarks require double-precision floating-point arithmetic to support a large dynamic range and ensure numerical stability.
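A small illustration of why precision matters: the sketch below rounds a value to IEEE 754 single precision and back using only the standard library. The helper name `to_single` and the chosen test value are assumptions made for this example.

```python
import struct


def to_single(x):
    """Round a Python float (an IEEE 754 double) to single precision and back."""
    return struct.unpack("f", struct.pack("f", x))[0]


# 2**24 + 1 needs 25 significant bits. A double (53-bit significand)
# stores it exactly; a single (24-bit significand) cannot.
value = 2.0 ** 24 + 1

exact_in_double = (value == 16777217.0)
exact_in_single = (to_single(value) == value)
```

Here `exact_in_double` is true and `exact_in_single` is false, so a single-precision accumulator that reaches 2^24 can no longer register an increment of 1. This kind of silent loss is one reason long-running numerical codes favor double precision.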

To permit their design to be more cost-competitive, even against efficient software implementations, smaller, more cost-effective FPGAs could be used.

The distributed computing project GIMPS was created to identify large Mersenne primes, and a reward of US$100,000 has been offered to the first person to identify a prime number with more than 10 million digits.
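The passage does not name the primality test involved. GIMPS is known to rely on the Lucas-Lehmer test, whose inner loop is a repeated squaring modulo a Mersenne number, which is why fast squaring of huge integers dominates the workload. A minimal sketch, using Python's built-in big integers rather than an FFT and a hypothetical function name `lucas_lehmer`:

```python
def lucas_lehmer(p):
    """Return True if the Mersenne number 2**p - 1 is prime.

    Assumes p is an odd prime; the test is not meaningful otherwise.
    """
    m = (1 << p) - 1
    s = 4
    for _ in range(p - 2):
        # Each iteration is one big squaring followed by a reduction.
        s = (s * s - 2) % m
    return s == 0


# Exponents among the small odd primes that yield Mersenne primes.
mersenne_exponents = [p for p in (3, 5, 7, 11, 13, 17, 19) if lucas_lehmer(p)]
```

For a candidate with more than 10 million digits the exponent is in the tens of millions, so the loop performs tens of millions of squarings of numbers that size.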

Floating-point arithmetic is so prevalent that LINPACK, the benchmark application used to rank supercomputers, relies heavily on double-precision floating-point math.

Xilinx’s double-precision floating-point core requires 16 of these 18-bit multipliers for each floating-point multiplier [35], while the Dou et al. design needs only nine.
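One accounting that is consistent with these two counts is shown below. It is an assumption for illustration, not a description taken from the source or from either design: a double-precision significand is 53 bits wide, and a schoolbook multiplier built from small blocks needs one block per pair of operand chunks. The function name `multiplier_tiles` and the chunk widths are hypothetical.

```python
import math


def multiplier_tiles(operand_bits, chunk_bits):
    """Count the partial-product multipliers in a schoolbook tiling.

    Each operand is split into ceil(operand_bits / chunk_bits) chunks,
    and every chunk of one operand meets every chunk of the other.
    """
    chunks = math.ceil(operand_bits / chunk_bits)
    return chunks * chunks


SIGNIFICAND_BITS = 53  # IEEE 754 double precision, including the hidden bit

# Assumption: a signed 18x18 block offers 17 unsigned bits per input.
tiles_with_17_bit_chunks = multiplier_tiles(SIGNIFICAND_BITS, 17)

# Assumption: a design that exploits the full 18 bits per input.
tiles_with_18_bit_chunks = multiplier_tiles(SIGNIFICAND_BITS, 18)
```

Under these assumptions the two tilings need 16 and 9 blocks respectively, matching the figures quoted above. Whether the actual designs reach their counts this way is not stated in the text.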

A slightly reworked implementation, designed as an FFT accelerator with all serial functions implemented on an attached processor, could achieve a speedup of 2.6 compared to a processor alone.

The availability of high-performance clusters incorporating FPGAs has prompted efforts to explore acceleration of HPC applications.

The key contributions of this paper are the addition of an economic analysis to a discussion of FPGA supercomputing projects and the presentation of an effective benchmark for comparing FPGAs and processors on an equal footing.

Performing a traditional port of the algorithm from software to hardware involves the creation of a floating-point FFT on the FPGA.

In spite of the unique all-integer algorithmic approach, the stand-alone FPGA implementation only achieved a speedup of 1.76 compared to a 3.4 GHz Pentium 4 processor.

While there is always a danger in drawing conclusions from a small data set, both the Dou et al. and Underwood design results point to a crossover sometime around 2009 to 2012, when the largest FPGA devices, like those typically found in commercial FPGA-augmented HPC clusters, will be cost-effective compared to processors for double-precision floating-point calculations.
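The arithmetic behind such a crossover estimate can be sketched under a simple assumption: both technologies improve exponentially, each with its own doubling period. The model, the function name `crossover_years`, and the numbers in the usage line are hypothetical and are not the data used in the source.

```python
import math


def crossover_years(lead_ratio, fast_doubling_years, slow_doubling_years):
    """Years until the faster-improving technology catches up.

    lead_ratio: current cost-performance of the leader divided by that
        of the follower (greater than 1).
    fast_doubling_years: doubling period of the follower, which improves
        more quickly.
    slow_doubling_years: doubling period of the current leader.

    Solves lead_ratio * 2**(t / slow) == 2**(t / fast) for t.
    """
    if fast_doubling_years >= slow_doubling_years:
        raise ValueError("the follower must improve faster to catch up")
    rate_gap = 1.0 / fast_doubling_years - 1.0 / slow_doubling_years
    return math.log2(lead_ratio) / rate_gap


# Hypothetical inputs: a 4x lead, doubling every 1 year versus every 2 years.
example_years = crossover_years(4.0, 1.0, 2.0)
```

With these placeholder inputs the follower catches up after 4 years. Because the result depends on the logarithm of the lead and on the difference of two growth rates, small changes in the fitted trends shift the estimate noticeably, which is consistent with the source quoting a range of years rather than a single date.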