[Figure axis label: Number of requests used to calculate exponent]

The authors have been able to obtain samples in excess of 500,000 file requests for five very different caches.

In order to compare data from different caches reliably it is necessary to ensure that differences are real and not due to insufficiently large samples.

With appropriate care it is possible to fit an inverse power law curve to cache popularity curves, with an exponent of between -0.9 and -0.5, and with a high degree of confidence.
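As a rough illustration of what fitting an inverse power law to a popularity curve can look like, the sketch below counts requests per file, ranks files by popularity, and fits a straight line in log-log space. The function name `fit_power_law` is hypothetical, ties receive consecutive ranks, and ordinary least squares is an assumption made here for brevity; the authors' own ranking and fitting procedure may differ.

```python
from collections import Counter

import numpy as np


def fit_power_law(requests):
    """Fit popularity = K * rank**exponent by least squares in log-log space.

    `requests` is any iterable of file identifiers, one entry per request.
    Returns (exponent, K). This is an illustrative sketch, not the
    procedure used in the paper.
    """
    # Popularity of each file, most requested first.
    counts = np.array(sorted(Counter(requests).values(), reverse=True), dtype=float)
    # Ordinal ranks 1, 2, 3, ... (ties simply get consecutive ranks here).
    ranks = np.arange(1, len(counts) + 1, dtype=float)
    # A straight-line fit of log(count) against log(rank):
    # the slope is the exponent, the intercept is log(K).
    slope, intercept = np.polyfit(np.log(ranks), np.log(counts), 1)
    return float(slope), float(np.exp(intercept))


if __name__ == "__main__":
    # Synthetic request stream whose popularity follows 1000 * rank**-0.7.
    synthetic = []
    for rank in range(1, 51):
        synthetic += [f"file{rank}"] * int(round(1000 * rank ** -0.7))
    exponent, k = fit_power_law(synthetic)
    print(f"exponent={exponent:.3f}, K={k:.1f}")
```

On real cache logs the low-popularity tail (files requested only once or twice) tends to dominate a plain least-squares fit, which is one reason the care in analysis mentioned above matters.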

From consideration of the work of Zipf on word use in different cultures, it seems likely that cultural differences will often be expressed through differences in the K factor in the power curve rather than the exponent.
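To make the distinction between the K factor and the exponent concrete, the power curve can be written as below. The symbols $f(r)$ for the number of requests to the file of rank $r$ and $\alpha$ for the exponent are notation assumed here for illustration, not taken from the paper.

```latex
f(r) = K \, r^{\alpha}
\quad\Longleftrightarrow\quad
\log f(r) = \log K + \alpha \log r
```

On a log-log plot, $\alpha$ is the slope of the line and $\log K$ is its intercept. Under the suggestion above, two caches serving different cultures would be expected to give lines that are shifted vertically (different $K$) but roughly parallel (similar $\alpha$).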

While filtering is one possible factor affecting the exponent of the locality curve, other factors may also influence it.

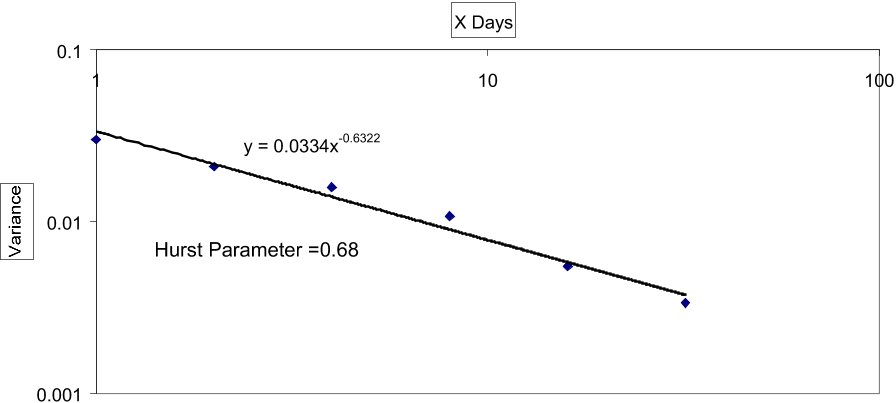

The analysis of cache popularity curves requires a careful definition of what is to be analysed and, since the data displays significant long-range dependence, very large sample sizes.
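One simple way to see why sample size matters is to refit the exponent on progressively longer prefixes of a request stream and watch whether the estimate settles. The sketch below does this with a plain log-log least-squares fit; the function names and the choice of prefix sizes are hypothetical, and the fit is an assumption made here rather than the authors' method.

```python
from collections import Counter

import numpy as np


def fit_exponent(requests):
    """Slope of log(count) against log(rank) for one sample of requests."""
    counts = np.array(sorted(Counter(requests).values(), reverse=True), dtype=float)
    ranks = np.arange(1, len(counts) + 1, dtype=float)
    slope, _ = np.polyfit(np.log(ranks), np.log(counts), 1)
    return float(slope)


def exponent_by_sample_size(requests, sizes):
    """Return [(n, exponent)] using only the first n requests for each n."""
    requests = list(requests)
    results = []
    for n in sizes:
        if n > len(requests):
            break  # not enough data for this prefix size
        results.append((n, fit_exponent(requests[:n])))
    return results


if __name__ == "__main__":
    # Synthetic stream: 200 files drawn with probability proportional to rank**-0.7.
    rng = np.random.default_rng(0)
    p = np.arange(1, 201, dtype=float) ** -0.7
    p /= p.sum()
    stream = rng.choice(200, size=20000, p=p).tolist()
    for n, exponent in exponent_by_sample_size(stream, [1000, 5000, 20000]):
        print(n, round(exponent, 3))
```

If the estimate is still drifting at the largest available prefix, differences between two caches cannot yet be attributed to the caches themselves.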

The authors demonstrate for the first time in this paper that, with appropriate care in the analysis, the power law curves can be shown to be culture independent even though they are not strictly Zipf curves.

Over these six months the fitted exponent ranged from -0.23 to -1.34 with a mean of -0.5958 and a variance of 0.03 (figure 4), using the 'averaged' ranking method mentioned above.

The exponent does not appear to depend on cache size, on time, or on the culture of the cache users, but only on the topological position of the cache in the network.

Cache logs can be extremely comprehensive, detailing time of request, bytes transferred, file name and other useful metrics [e.g. ftp://ircache.nlanr.net/Traces/].
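As a minimal sketch of turning such a log into per-file request counts, the code below assumes whitespace-separated lines with the requested URL in the seventh field, as in Squid's native access.log layout. The field position, the function name `count_requests`, and the sample lines are assumptions for illustration; the layout of any particular trace should be checked before use.

```python
from collections import Counter


def count_requests(lines, url_field=6):
    """Count requests per URL from whitespace-separated log lines.

    `url_field` is the zero-based index of the URL column; 6 matches
    Squid's native access.log layout and is an assumption here.
    """
    counts = Counter()
    for line in lines:
        fields = line.split()
        if len(fields) > url_field:  # skip blank or truncated lines
            counts[fields[url_field]] += 1
    return counts


if __name__ == "__main__":
    # Made-up sample lines in the assumed layout:
    # time elapsed client action/code bytes method URL ident hierarchy type
    sample = [
        "847087168.123 120 10.0.0.1 TCP_MISS/200 4512 GET http://example.com/a.html - DIRECT/example.com text/html",
        "847087169.456 15 10.0.0.2 TCP_HIT/200 4512 GET http://example.com/a.html - NONE/- text/html",
        "847087170.789 300 10.0.0.1 TCP_MISS/200 900 GET http://example.com/b.gif - DIRECT/example.com image/gif",
    ]
    for url, n in count_requests(sample).most_common():
        print(n, url)
```

The resulting counts, sorted in descending order, give the popularity curve to which a power law can then be fitted.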