...Pan et al. (2019) introduced the CNN model to predict daily precipitation and tested it at 14 sites across the contiguous United States and proved that, if provided with sufficient data, the precipitation estimates from the CNN method are better than the reanalysis precipitation products and...

...Details of the models and feature extraction are given in the supporting information (Breiman, 2001; Louppe, 2014; Wolfram, 2018; Pearson, 1901; Shlens, 2014)....

...Through down-sampling, the higher layer convolutions work on extracted local features, which enables learning higher-level abstractions on the expanded receptive field (LeCun et al., 2015)....
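The receptive-field growth described in this excerpt can be checked with the standard recurrence for stacked layers: each layer with kernel size k adds (k - 1) × (cumulative stride) input cells, and each stride-s layer multiplies the cumulative stride by s. The sketch below is a minimal illustration along one spatial axis; the layer stacks are hypothetical examples, not the architecture of any paper cited here.

```python
def receptive_field(layers):
    """Receptive-field size, in input grid cells along one axis, of the last layer.

    layers: list of (kernel_size, stride) pairs, in the order they are applied.
    """
    size, jump = 1, 1  # current receptive field and cumulative stride
    for kernel, stride in layers:
        size += (kernel - 1) * jump  # a kernel spans (kernel - 1) extra steps of size `jump`
        jump *= stride               # down-sampling widens the step for later layers
    return size


# Hypothetical stacks: 4-wide convolutions (stride 1), with and without one 2-wide, stride-2 pooling
no_pool = [(4, 1), (4, 1), (4, 1), (4, 1)]
with_pool = [(4, 1), (4, 1), (2, 2), (4, 1), (4, 1)]

print(receptive_field(no_pool))    # 13
print(receptive_field(with_pool))  # 20
```

Inserting one pooling layer makes every later 4-wide convolution step over twice as many input cells, so the pooled stack sees a 20-cell window versus 13 cells without pooling, with the same number of convolutions.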

...features through building multiple levels of representation of the data, which are achieved by composing simple but nonlinear modules (named as neurons) that each transform the representation at one level into a representation at a higher, slightly more abstract level (LeCun et al., 2015)....

...A comprehensive review is beyond the scope of this work and can be found in LeCun et al. (2015), Schmidhuber (2015), and Goodfellow et al. (2016)....

...These layers learn representations of the data with multiple levels of abstraction (LeCun et al., 2015)....

...In a canonical ML modeling process, the raw form data, which quantify certain attributes of the study object, should be transformed into a suitable feature vector before being effectively processed for the learning objective (Goodfellow et al., 2016; LeCun et al., 2015)....

In follow-up studies, the authors plan to examine more comprehensively the impact of the different information-processing components in the network. The authors also intend to explore novel network architectures and advanced regularization approaches to support more accurate, higher-resolution precipitation estimation.

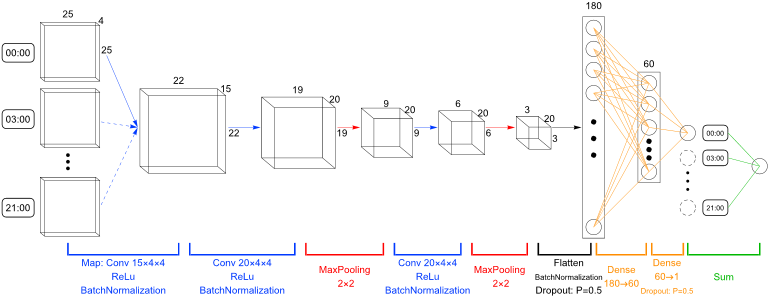

To disentangle the effects of the cyclone's geometric shape and position, the authors adopt the convolution mechanism in the network model.
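The motivation can be illustrated with weight sharing: because one kernel is slid over every grid position, a circulation pattern produces the same response wherever it appears, and the response map simply shifts with it. The sketch below uses a hypothetical 2 × 2 anomaly on an 8 × 8 grid and a kernel matched to that anomaly; it illustrates the general property of convolution and is not the authors' network.

```python
def conv2d_valid(field, kernel):
    """Valid 2D cross-correlation, the operation deep-learning 'convolution' layers compute."""
    fh, fw = len(field), len(field[0])
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(field[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
             for j in range(fw - kw + 1)]
            for i in range(fh - kh + 1)]


def place(pattern, top, left, size=8):
    """Embed a small pattern in a size x size field of zeros at (top, left)."""
    field = [[0.0] * size for _ in range(size)]
    for a, row in enumerate(pattern):
        for b, value in enumerate(row):
            field[top + a][left + b] = value
    return field


def peak(response):
    """(value, (row, col)) of the strongest response, i.e. a global max pooling."""
    return max(((v, (i, j)) for i, row in enumerate(response) for j, v in enumerate(row)),
               key=lambda t: t[0])


# Hypothetical 2 x 2 low-pressure anomaly and a kernel matched to it (illustration only)
blob = [[-1.0, -2.0], [-2.0, -1.0]]
kernel = blob

resp1 = conv2d_valid(place(blob, 1, 1), kernel)
resp2 = conv2d_valid(place(blob, 4, 3), kernel)
print(peak(resp1))  # (10.0, (1, 1))
print(peak(resp2))  # (10.0, (4, 3))
```

The peak moves with the pattern while its value stays the same, so a subsequent max-pooling step summarizes the pattern largely independently of where it sits.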

The best performance in the comparison experiments is achieved by the linear regression model that takes the leading 16 principal components (PCs) of the circulation field as input (r = 0.81, RMSE = 6.98).
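The two scores quoted here are the Pearson correlation coefficient r and the root-mean-square error (RMSE). A minimal sketch using the standard definitions follows; the sample values are hypothetical, and the units of the paper's RMSE are not stated in this excerpt.

```python
import math


def pearson_r(obs, est):
    """Pearson correlation coefficient between observations and estimates."""
    n = len(obs)
    mo, me = sum(obs) / n, sum(est) / n
    cov = sum((o - mo) * (e - me) for o, e in zip(obs, est))
    so = math.sqrt(sum((o - mo) ** 2 for o in obs))
    se = math.sqrt(sum((e - me) ** 2 for e in est))
    return cov / (so * se)


def rmse(obs, est):
    """Root-mean-square error, in the units of the inputs."""
    return math.sqrt(sum((o - e) ** 2 for o, e in zip(obs, est)) / len(obs))


# Hypothetical daily precipitation observations and model estimates
obs = [0.0, 2.0, 5.0, 1.0, 8.0]
est = [0.5, 1.5, 4.0, 2.0, 7.0]
print(round(pearson_r(obs, est), 3))
print(round(rmse(obs, est), 3))  # 0.837
```

The two metrics are complementary: r rewards getting the day-to-day pattern right regardless of bias or scale, while RMSE penalizes absolute errors, with large misses weighted most heavily.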

The authors include dropout (Srivastava et al., 2014) and batch normalization (Ioffe & Szegedy, 2015) modules to enhance the model's performance.
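For readers unfamiliar with the two modules, the sketch below shows their training-mode arithmetic on plain Python lists. Dropout (Srivastava et al., 2014) is written here in the common "inverted" form, which zeroes units at random and rescales the survivors during training so the expected activation is unchanged; batch normalization (Ioffe & Szegedy, 2015) standardizes a feature across the mini-batch before applying a scale and shift. The function names, dropout rate, and activations are illustrative assumptions, not the authors' settings.

```python
import math
import random


def dropout(x, p, training, rng):
    """Inverted dropout: zero each unit with probability p and rescale the survivors."""
    if not training or p == 0.0:
        return list(x)  # dropout is a no-op at inference time
    keep = 1.0 - p
    return [v / keep if rng.random() < keep else 0.0 for v in x]


def batch_norm(batch, gamma=1.0, beta=0.0, eps=1e-5):
    """Training-mode batch normalization of one feature across a mini-batch."""
    n = len(batch)
    mean = sum(batch) / n
    var = sum((v - mean) ** 2 for v in batch) / n  # biased (population) variance
    return [gamma * (v - mean) / math.sqrt(var + eps) + beta for v in batch]


# Hypothetical activations of one unit over a mini-batch of four samples
acts = [0.2, -1.3, 0.7, 2.1]
print(batch_norm(acts))
print(dropout(acts, p=0.5, training=True, rng=random.Random(0)))
```

Framework layers such as PyTorch's `nn.BatchNorm2d` also track running statistics for inference and normalize each channel over the batch and spatial positions, and `nn.Dropout` is switched off in evaluation mode.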

The kernels used to extract salient features from the resolved dynamical field are optimized by backpropagating the precipitation estimation error through the convolutional layers.
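To make the backpropagation step concrete, here is a toy sketch under strong simplifying assumptions: a single 1D convolution with a linear output, a mean-squared-error loss, and plain gradient descent, rather than the authors' multi-layer 2D CNN. The signal `x`, the generating kernel `w_true`, the learning rate, and the step count are all hypothetical. The gradient with respect to each kernel weight sums the output errors times the inputs that weight touched, which is the same chain-rule computation a framework performs for every convolutional layer.

```python
def conv1d(x, w):
    """Valid 1D cross-correlation of signal x with kernel w."""
    k = len(w)
    return [sum(x[i + a] * w[a] for a in range(k)) for i in range(len(x) - k + 1)]


def train_kernel(x, y, k, lr=0.02, steps=500):
    """Fit kernel weights by gradient descent on the mean squared error."""
    w = [0.0] * k
    n = len(y)
    for _ in range(steps):
        err = [p - t for p, t in zip(conv1d(x, w), y)]  # forward pass and residuals
        # Backward pass: dL/dw[a] = (2/n) * sum_i err[i] * x[i + a]
        grad = [2.0 / n * sum(err[i] * x[i + a] for i in range(n)) for a in range(k)]
        w = [wa - lr * ga for wa, ga in zip(w, grad)]
    return w


# Hypothetical 1D "field" and the kernel that generated the targets
x = [0.0, 1.0, 3.0, 2.0, -1.0, 0.5, 2.5, -2.0, 1.5, 0.0]
w_true = [0.5, -1.0, 0.25]
y = conv1d(x, w_true)
w_hat = train_kernel(x, y, k=3)
print([round(v, 4) for v in w_hat])  # recovers w_true
```

Because the targets here were generated by `w_true`, gradient descent recovers it; in the real model the targets are observed precipitation, and the learned kernels become whatever spatial features reduce the error.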

The computationally demanding components of numerical simulations can be replaced by data-driven model counterparts to accelerate the simulation without significant loss of accuracy.

The authors carry out simulations using input composed of the leading 2, 8, 16, 64, and 256 PCs of the circulation field data, as well as simulations using the raw circulation field data.
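These comparison runs feed the leading k principal components of the circulation field to the models. As a dependency-free sketch of that reduction, assuming each sample is one day's flattened field and using power iteration with deflation in place of whatever PCA routine the authors used, the code below extracts the top-k directions and projects the centered samples onto them. The tiny `box_data` array is hypothetical; on real gridded data a library routine such as scikit-learn's `PCA` or a NumPy SVD would be the practical choice.

```python
import math
import random


def center(X):
    """Subtract the column means; X is a list of samples (rows) of equal length."""
    n, d = len(X), len(X[0])
    mu = [sum(row[j] for row in X) / n for j in range(d)]
    return [[row[j] - mu[j] for j in range(d)] for row in X]


def covariance(Xc):
    """Sample covariance matrix of already-centered data."""
    n, d = len(Xc), len(Xc[0])
    return [[sum(r[i] * r[j] for r in Xc) / (n - 1) for j in range(d)] for i in range(d)]


def leading_pcs(X, k, iters=500, seed=0):
    """Top-k principal directions and variances via power iteration with deflation."""
    C = covariance(center(X))
    d = len(C)
    rng = random.Random(seed)
    pcs, variances = [], []
    for _ in range(k):
        v = [rng.gauss(0.0, 1.0) for _ in range(d)]
        s = math.sqrt(sum(c * c for c in v))
        v = [c / s for c in v]  # random unit starting vector
        for _ in range(iters):
            w = [sum(C[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(c * c for c in w))
            if norm == 0.0:  # no variance left in this subspace
                break
            v = [c / norm for c in w]
        lam = sum(v[i] * sum(C[i][j] * v[j] for j in range(d)) for i in range(d))
        pcs.append(v)
        variances.append(lam)
        # Deflation: remove the found component so the next pass finds the next one
        C = [[C[i][j] - lam * v[i] * v[j] for j in range(d)] for i in range(d)]
    return pcs, variances


def project(X, pcs):
    """Scores of each (centered) sample on the given principal directions."""
    return [[sum(a * b for a, b in zip(row, pc)) for pc in pcs] for row in center(X)]


# Hypothetical data: in the real setting each row would be one day's flattened field
box_data = [[3.0, 1.0, 0.0], [-3.0, 1.0, 0.0], [3.0, -1.0, 0.0], [-3.0, -1.0, 0.0]]
pcs, variances = leading_pcs(box_data, k=2)
features = project(box_data, pcs)  # 4 samples x 2 PC scores, the reduced model input
print(variances)
```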

To assess the model's robustness to parameter initialization, the authors train several instances of the model with different parameter initializations.

To test the applicability of the model under different climate conditions, the authors selected 14 sample grids that roughly cover the characteristic climate divisions of the contiguous United States.

Each convolutional layer uses a kernel tensor of size 20 × c × 4 × 4, that is, 20 output channels, each computed with a 4 × 4 spatial kernel spanning all c channels of the previous layer.
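Read in the PyTorch convention, where a `Conv2d` weight has shape (out_channels, in_channels, kernel_height, kernel_width), the 20 × c × 4 × 4 kernel implies the parameter counts below. The helper names are hypothetical, and the bias term, stride 1, zero padding, and the 2 input channels (GPH and PW stacked) are assumptions for illustration, since the text here does not state them.

```python
def conv_layer_params(c_in, c_out=20, kh=4, kw=4, bias=True):
    """Trainable weights in a conv layer whose kernel tensor is c_out x c_in x kh x kw."""
    return c_out * c_in * kh * kw + (c_out if bias else 0)


def conv_output_size(n, k=4, stride=1, padding=0):
    """Spatial output length along one axis for a convolution over n input cells."""
    return (n + 2 * padding - k) // stride + 1


# Hypothetical stack: 2 input channels (assumed GPH and PW), then 20-channel layers
c = 2
for layer in range(3):
    print(f"layer {layer + 1}: c = {c}, params = {conv_layer_params(c)}")
    c = 20  # every layer emits 20 channels, which become c for the next layer
```

Only the first layer depends on the number of predictor fields; every later layer sees c = 20, so its cost is fixed at 20 × 20 × 16 weights plus any bias.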

The predictors used to build the network models are the geopotential height (GPH) and precipitable water (PW) fields from the National Centers for Environmental Prediction (NCEP) North American Regional Reanalysis (NARR) data set (Mesinger et al., 2006).

By varying the receptive field of the convolutional layers, the authors verify that the CNN model outperforms conventional fully connected artificial neural network (ANN) statistical downscaling (SD) models in estimating precipitation by explicitly encoding local spatial circulation structures.

In the central part of the continent, the CNN model performs slightly worse, which can be attributed to overfitting when only limited precipitation samples are available for training.

The deeper CNN models are constructed by adding two or four extra convolutional layers before the first pooling layer of the default network architecture in Figure 4.
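The deepening step can be pictured as list surgery on a layer specification. The `DEFAULT` list below is a hypothetical stand-in, since the actual default architecture is only shown in Figure 4; the sketch simply inserts two or four extra 20-channel 4 × 4 convolutions (an assumption about their configuration) immediately before the first pooling layer.

```python
def deepen(layers, extra, template):
    """Return a new spec with `extra` copies of `template` before the first pooling layer."""
    i = next(idx for idx, layer in enumerate(layers) if layer["type"] == "pool")
    return layers[:i] + [dict(template) for _ in range(extra)] + layers[i:]


# Hypothetical stand-in for the default architecture (the real one is in Figure 4)
DEFAULT = [
    {"type": "conv", "out_channels": 20, "kernel": 4},
    {"type": "conv", "out_channels": 20, "kernel": 4},
    {"type": "pool", "kernel": 2},
    {"type": "conv", "out_channels": 20, "kernel": 4},
    {"type": "pool", "kernel": 2},
    {"type": "dense", "units": 1},
]
EXTRA_CONV = {"type": "conv", "out_channels": 20, "kernel": 4}

for extra in (0, 2, 4):
    net = deepen(DEFAULT, extra, EXTRA_CONV)
    n_conv = sum(layer["type"] == "conv" for layer in net)
    print(f"extra={extra}: {len(net)} layers, {n_conv} convolutional")
```

Placing the extra layers before the first pooling step means they operate at full input resolution, so they add depth without changing the down-sampling schedule of the rest of the network.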

This is achieved by utilizing the inner structure of the data to reduce the model structural redundancy and foster effective information extraction.