![Table 2. Feature selection approaches considered in the experiments [29, 27]. The table reports their Type, class (Cl.), complexity (Compl.), and execution times in seconds (Exec.Time). As for the complexity, T is the number of samples, n is the number of initial features, i is the number of iterations in the case of iterative algorithms, and C is the number of classes.](/figures/table-2-feature-selection-approaches-considered-in-the-2in0z9sm.png)

...A number of feature scoring algorithms are available, based on different criteria such as Mutual Information (MI) (Cover and Thomas, 2012), the Fisher score (Gu et al., 2012), and ReliefF (Liu and Motoda, 2007); see Roffo et al. (2017) for a comparison of these methods on different datasets...
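
The Fisher score named above ranks each feature by how far apart the class means are, relative to the spread inside each class. Below is a minimal pure-Python sketch of the classic per-feature form. It is not the generalized variant of Gu et al. (2012). `fisher_scores` and the toy data are illustrative assumptions, and a larger score means a more discriminative feature.

```python
from collections import defaultdict


def fisher_scores(X, y):
    """Classic Fisher score of each feature (column) of X for labels y.

    X is a list of samples (lists of floats); y holds one class label per sample.
    """
    n_samples, n_features = len(X), len(X[0])
    # Group the sample rows by class label.
    by_class = defaultdict(list)
    for row, label in zip(X, y):
        by_class[label].append(row)

    scores = []
    for j in range(n_features):
        overall_mean = sum(row[j] for row in X) / n_samples
        between, within = 0.0, 0.0
        for rows in by_class.values():
            n_k = len(rows)
            mean_k = sum(row[j] for row in rows) / n_k
            var_k = sum((row[j] - mean_k) ** 2 for row in rows) / n_k
            between += n_k * (mean_k - overall_mean) ** 2  # spread of class means
            within += n_k * var_k                          # spread inside classes
        if within > 0:
            scores.append(between / within)
        else:
            # No within-class spread: perfectly separating if the means differ, else useless.
            scores.append(float("inf") if between > 0 else 0.0)
    return scores


X = [[1.0, 5.0], [2.0, 1.0], [8.0, 4.0], [9.0, 2.0]]
y = [0, 0, 1, 1]
print(fisher_scores(X, y))  # → [49.0, 0.0]
```

Feature 0 separates the two classes cleanly, while feature 1 has identical class means, so its score is zero.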

[...]

...SVI combination to build a better (in terms of simplicity, accuracy, etc.) classification model by using advanced dimension reduction approaches (Roffo et al., 2017); (ii) design more levels of yellow rust infection, or combine data from different disease developmental stages, so that regression analysis can be performed quantitatively between SVIs and disease severity (Liu et al....

[...]

...Some commonly used feature selection methods are the Fisher method [149], infinite latent feature selection (ILFS) [150], feature selection via eigenvector centrality (EC-FS) [151], dependence guided unsupervised feature selection (DGUFS) [152], feature selection with adaptive structure learning (FSASL) [153], unsupervised feature selection with ordinal locality (UFSOL) [154], the least absolute shrinkage and selection operator (LASSO) [155], and feature selection via concave minimization (FSV) [156]...
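
Of the methods listed, LASSO is the simplest to illustrate. Its L1 penalty drives some coefficients exactly to zero, and the features with nonzero coefficients are kept. What follows is a minimal cyclic coordinate-descent sketch. It assumes centered features and target (so no intercept) and the objective (1/2n)||y - Xw||^2 + lam*||w||_1. `lasso_coordinate_descent`, `lasso_select`, `lam` and `n_iter` are hypothetical names, and a fixed iteration count stands in for a proper convergence check.

```python
def soft_threshold(value, lam):
    """Shrink value toward zero by lam; values within [-lam, lam] become exactly 0."""
    if value > lam:
        return value - lam
    if value < -lam:
        return value + lam
    return 0.0


def lasso_coordinate_descent(X, y, lam, n_iter=100):
    """Minimise (1/2n)||y - Xw||^2 + lam*||w||_1 by cyclic coordinate descent.

    Assumes the columns of X and the target y are already centered.
    """
    n, p = len(X), len(X[0])
    w = [0.0] * p
    residual = list(y)  # y - Xw, with w = 0 initially
    for _ in range(n_iter):
        for j in range(p):
            col = [row[j] for row in X]
            z = sum(c * c for c in col) / n
            if z == 0:
                continue  # an all-zero column carries no information
            # Correlation of feature j with the residual that excludes feature j itself.
            rho = sum(c * (r + c * w[j]) for c, r in zip(col, residual)) / n
            new_wj = soft_threshold(rho, lam) / z
            # Keep the residual in sync with the updated coefficient.
            delta = new_wj - w[j]
            residual = [r - c * delta for r, c in zip(residual, col)]
            w[j] = new_wj
    return w


def lasso_select(X, y, lam):
    """Indices of the features that keep a nonzero LASSO coefficient."""
    w = lasso_coordinate_descent(X, y, lam)
    return [j for j, wj in enumerate(w) if wj != 0.0]


# Feature 0 drives the target; feature 1 is orthogonal to it.
X = [[-1.0, -1.0], [-1.0, 1.0], [1.0, -1.0], [1.0, 1.0]]
y = [-3.0, -3.0, 3.0, 3.0]
print(lasso_coordinate_descent(X, y, lam=0.5))  # → [2.5, 0.0]
print(lasso_select(X, y, lam=0.5))              # → [0]
```

The penalty shrinks the useful coefficient from 3 to 2.5 and zeroes out the irrelevant one. Larger `lam` values discard more features.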

[...]

77 citations

76 citations

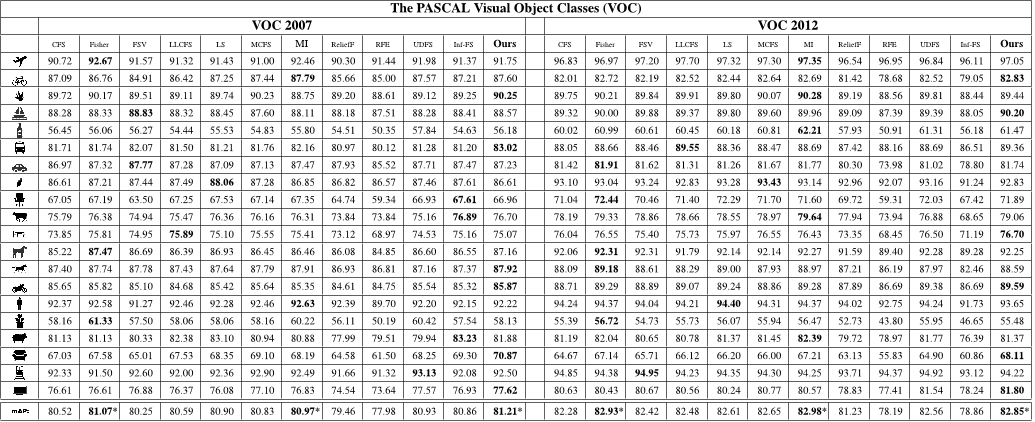

...In this section, we compare our framework with several feature selection methods, considering both recent approaches [22], [40], [41], [43], [45], [55], [58] and some established algorithms [11], [27], [28], [32], [33], [46], [50], [54]...

[...]

...In ILFS, the features are grouped into tokens by probabilistic latent semantic analysis (PLSA), which in practice learns the weights of the Inf-FS adjacency graph so as to provide better class separability...

[...]

...The proposed framework generalizes the previously published Infinite Feature Selection (Inf-FS) [22], [23], which was presented as an unsupervised filtering approach with an algebraic motivation...
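
The path-counting idea behind Inf-FS, as we understand it, can be sketched as a matrix power series. Let A be a nonnegative feature-to-feature weight matrix. Summing r^l A^l over all path lengths l >= 1 gives (I - rA)^{-1} - I whenever r times the spectral radius of A is below 1, and each feature's score is the corresponding row sum. The sketch below makes three simplifications:

- It assumes A is already given. How the papers build A from feature statistics is not reproduced.
- It truncates the series instead of inverting a matrix.
- It picks r from the row-sum bound on the spectral radius.

`inf_fs_scores`, `n_terms` and `r_fraction` are hypothetical names.

```python
def inf_fs_scores(A, n_terms=200, r_fraction=0.9):
    """Score each feature by the weighted paths of every length that start from it.

    A is a square list-of-lists of nonnegative feature-to-feature weights
    (assumed given). Returns s = sum_{l=1..n_terms} (rA)^l 1, a truncation of
    ((I - rA)^{-1} - I) 1.
    """
    n = len(A)
    max_row_sum = max(sum(row) for row in A)
    if max_row_sum == 0:
        return [0.0] * n  # no edges, so no paths to score
    # For a nonnegative matrix the spectral radius is at most the largest row
    # sum, so r * rho(A) <= r_fraction < 1 and the series converges.
    r = r_fraction / max_row_sum
    v = [1.0] * n  # (rA)^0 applied to the all-ones vector
    scores = [0.0] * n
    for _ in range(n_terms):
        # Extend every path by one edge: v <- (rA) v.
        v = [r * sum(A[i][k] * v[k] for k in range(n)) for i in range(n)]
        scores = [s + x for s, x in zip(scores, v)]
    return scores


A = [[0.0, 1.0, 1.0],
     [1.0, 0.0, 0.1],
     [1.0, 0.1, 0.0]]
scores = inf_fs_scores(A)
ranking = sorted(range(len(A)), key=lambda i: scores[i], reverse=True)
print(ranking[0])  # → 0 (the most strongly connected feature)
```

Summing the series term by term keeps the sketch dependency-free. With a linear-algebra library, the closed form (I - rA)^{-1} - I could be computed directly instead.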

[...]

...Recently, other graph-based approaches have been proposed, such as feature selection via eigenvector centrality (ECFS) [40], [41], [42] and infinite latent feature selection (ILFS) [22], an extension of the unsupervised Inf-FSU...
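
Eigenvector centrality, as used by ECFS, treats each feature as a node of the feature graph and scores it by the matching entry of the adjacency matrix's principal eigenvector. A feature therefore scores highly when it is strongly connected to other high-scoring features. Below is a minimal power-iteration sketch. It assumes a nonnegative symmetric adjacency matrix A is already given; how ECFS builds A is not reproduced here. `eigenvector_centrality`, `max_iter` and `tol` are hypothetical names.

```python
import math


def eigenvector_centrality(A, max_iter=1000, tol=1e-12):
    """Unit-norm principal eigenvector of a nonnegative symmetric matrix A."""
    n = len(A)
    x = [1.0 / math.sqrt(n)] * n
    for _ in range(max_iter):
        # Multiply by (A + I): same eigenvectors as A, but the shift stops plain
        # power iteration from oscillating on bipartite graphs.
        y = [x[i] + sum(A[i][k] * x[k] for k in range(n)) for i in range(n)]
        norm = math.sqrt(sum(v * v for v in y))  # > 0 because y >= x > 0
        y = [v / norm for v in y]
        if max(abs(a - b) for a, b in zip(x, y)) < tol:
            return y
        x = y
    return x


# Star graph: feature 0 is linked to every other feature.
A = [[0, 1, 1, 1],
     [1, 0, 0, 0],
     [1, 0, 0, 0],
     [1, 0, 0, 0]]
c = eigenvector_centrality(A)
ranking = sorted(range(len(A)), key=lambda i: c[i], reverse=True)
print(ranking[0])  # → 0
```

Features are then ranked by their centrality, and the top-ranked ones are kept.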

[...]

...NHTP [59], ECFS [42], Fisher [33], FSV [11], ILFS [22], LASSOU [56], LASSOH [55], MI [34], ReliefF [30], RFE [47], Inf-FSS...

[...]

...We selected 10 publicly available benchmarks of cancer classification and prediction on DNA microarray data (Colon [32], Lymphoma [14], Leukemia [14], Lung [15], Prostate [1]), handwritten character recognition (GINA [2]), text classification from the NIPS feature selection challenge (DEXTER [18]), and a movie reviews corpus for sentiment analysis (POLARITY [26])....

[...]

This study also points to many future directions.