13 citations

...ture configurations that could occur in production (all potential configurations) [25], [26]....

[...]

9 citations

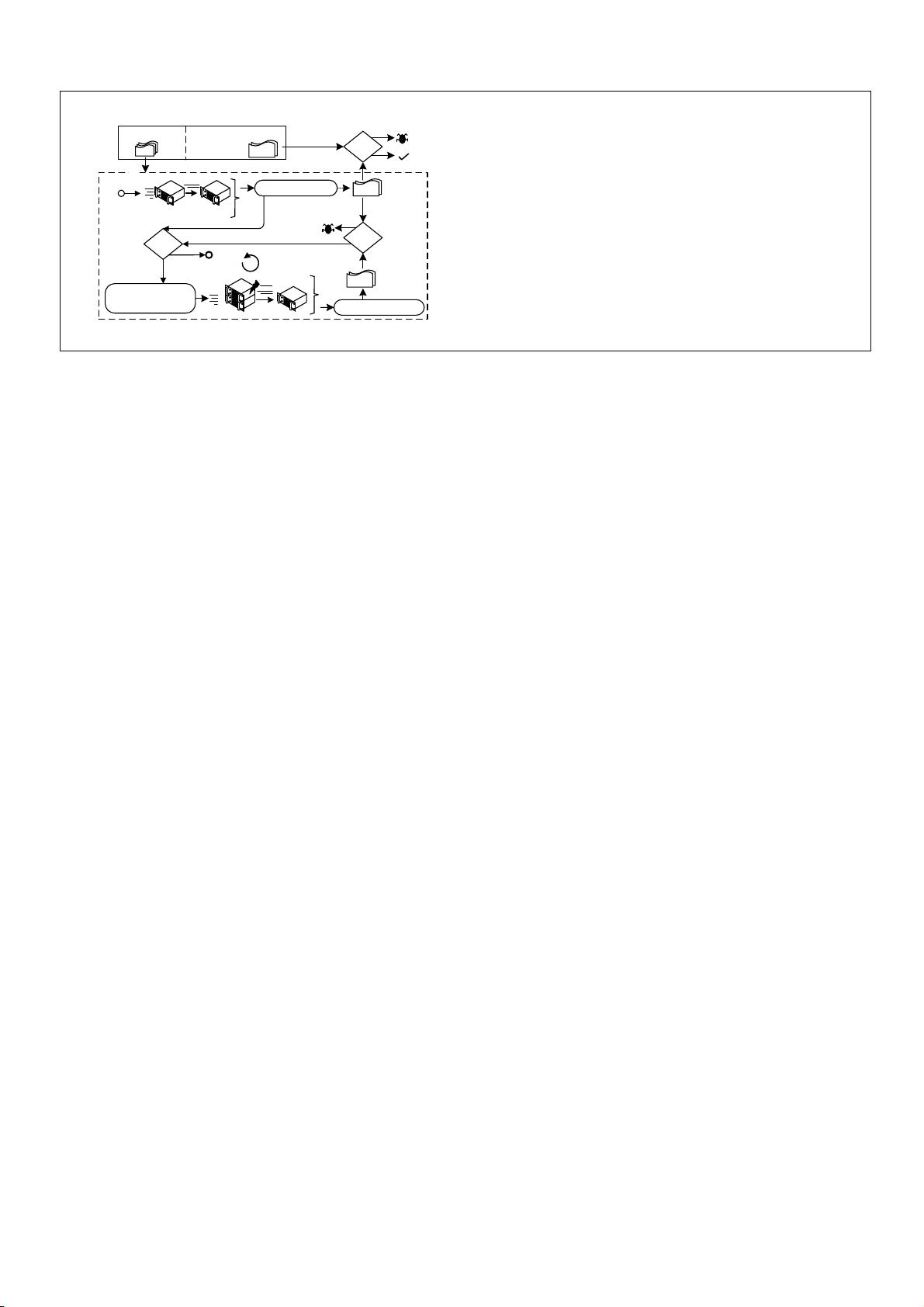

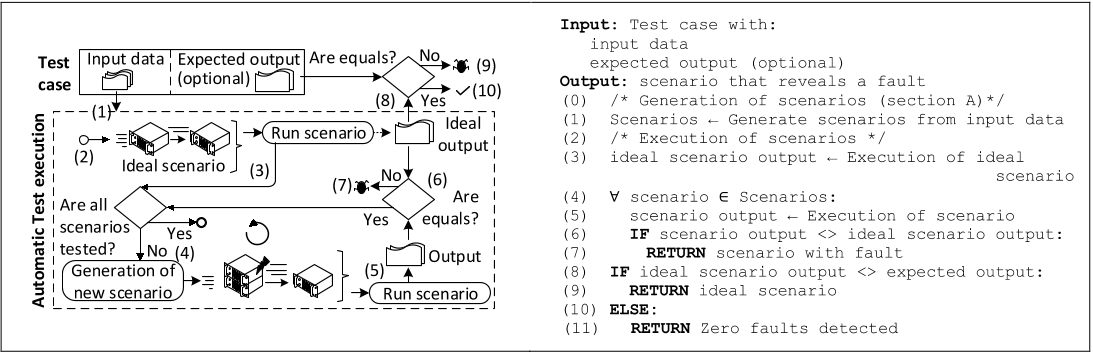

...Finally, the testing is performed using a specific MapReduce testing technique [22], [34] that only needs the test input data and the program to detect functional faults (6)....

[...]

...[34] designed an automatic testing technique based on combinatorics and simulation....

[...]

...For example, data mining and machine learning methods are used to reduce the size of the original dataset or generate synthetic datasets [3, 4] for the testing purpose....

[...]

...Previous work reported in [1, 2, 3, 4, 5] focuses on generating tests that help to identify functional faults, i....

[...]

...also proposed a technique to generate different infrastructure configurations for a given MapReduce program that can be used to reveal functional faults [4]....

[...]

...Some approaches have been proposed to reduce the effort required for testing and debugging big data applications at the system level [1, 2, 3, 4, 5]....

[...]

20,309 citations

17,663 citations

834 citations

...Despite the testing challenges of Big Data applications [15, 16] and the progress in testing techniques [17], little effort is focused on testing MapReduce programs [18], one of the principal paradigms of Big Data [19]....

[...]

518 citations

...These faults are often masked during test execution because the tests usually run over a single infrastructure configuration without considering the different situations that could occur in production, such as different parallelism levels or infrastructure failures [6]....

[...]

442 citations

For unit testing, JUnit [32] can be used together with mocking tools, or the MRUnit library [10], which is adapted to the MapReduce paradigm, can be used directly.

In this paper, a test engine is implemented as an MRUnit extension that automatically generates and executes the different infrastructure configurations that could occur in production.

Other approaches aim to obtain the test input data of MapReduce programs, such as [12], which employs data flow testing, and [29], which is based on a bacteriological algorithm.

Given test input data, the goal is to generate the different infrastructure configurations, also called scenarios in this context.

The test input data can be obtained with a generic testing technique or with one specifically designed for MapReduce, such as MRFlow [12].

One common type of fault occurs when the data must reach the Reducer in a specific order, but parallel execution causes them to arrive out of order.
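The order-dependence fault described above can be illustrated with a minimal Python sketch. It is not taken from the paper: the reducer names, the `sensor-a` key, and the sample readings are hypothetical, and the functions only imitate the shape of a Reducer rather than using a real MapReduce API.

```python
def reduce_last_reading(key, values):
    """Faulty reducer (hypothetical): assumes values arrive sorted by timestamp
    and simply keeps whichever reading arrives last."""
    last = None
    for _timestamp, reading in values:
        last = reading
    return key, last


def reduce_latest_reading(key, values):
    """Order-independent reducer: selects the reading with the greatest timestamp."""
    return key, max(values, key=lambda pair: pair[0])[1]


# The same (timestamp, reading) pairs in two different arrival orders.
in_order = [(1, 10.0), (2, 12.5), (3, 11.0)]
shuffled = [(3, 11.0), (1, 10.0), (2, 12.5)]

faulty_in_order = reduce_last_reading("sensor-a", in_order)
faulty_shuffled = reduce_last_reading("sensor-a", shuffled)
fixed_in_order = reduce_latest_reading("sensor-a", in_order)
fixed_shuffled = reduce_latest_reading("sensor-a", shuffled)
```

The faulty reducer returns different results for the two arrival orders, while the order-independent version returns the same result for both. A test that only ever delivers the data in order would not expose the difference.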

In this work, the following parameters are considered, based on previous work [5] that classifies the different types of faults in MapReduce applications. Mapper parameters: (1) number of Mapper tasks, (2) inputs processed by each Mapper, and (3) processing order of the inputs, that is, which data are processed before and after other data in the Mapper.
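As a rough illustration of how the three Mapper parameters span a space of configurations, the following Python sketch enumerates every ordering of a small input and every way of cutting that ordering into non-empty Mapper tasks. The function name `mapper_configurations` and the row labels are assumptions made for this example. The paper's test engine is an MRUnit extension, and its actual generation strategy may differ from this exhaustive enumeration.

```python
from itertools import combinations, permutations


def mapper_configurations(inputs):
    """Yield each configuration as a list of Mapper tasks.

    Every task is the ordered list of inputs that one Mapper processes.
    The enumeration varies the number of Mappers, the inputs assigned to
    each Mapper, and the processing order of the inputs.
    """
    n = len(inputs)
    for ordering in permutations(inputs):
        # Choose k cut points among the n - 1 gaps between consecutive inputs.
        for k in range(n):
            for cuts in combinations(range(1, n), k):
                bounds = (0, *cuts, n)
                yield [list(ordering[a:b]) for a, b in zip(bounds, bounds[1:])]


configs = list(mapper_configurations(["row1", "row2", "row3"]))
```

For three inputs this yields 24 configurations (6 orderings times 4 ways of placing cut points), treating Mapper tasks as distinguishable. The count grows factorially with the input size, so a practical engine would likely need to prune or sample this space.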

In this paper, given test input data, several configurations are generated and then executed to reveal functional faults.

This fault was analysed by Csallner et al. [24] and Chen et al. [25] using testing techniques based on symbolic execution and model checking.

The failure occurs whenever this infrastructure configuration is executed, regardless of computer failures, slow networks, or other conditions.

The second Mapper processes only row 2 and has no information about the mandatory columns or the data quality threshold, so it cannot emit any output.
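The situation can be imitated with a small Python sketch that runs the same input under two configurations and compares the outputs. The row layout (a `config` row carrying the threshold, followed by `data` rows), the field names, and both helper functions are hypothetical. The excerpt does not show the program under test, so this is only one plausible shape for it.

```python
def run_mapper(rows):
    """Hypothetical stateful Mapper: a 'config' row sets the quality threshold
    that is then applied to the data rows processed afterwards."""
    threshold = None
    output = []
    for row in rows:
        if row["type"] == "config":
            threshold = row["threshold"]
        elif threshold is not None:
            status = "ok" if row["quality"] >= threshold else "low"
            output.append((row["id"], status))
        # A data row seen without a preceding config row emits nothing:
        # this is the fault that only some configurations expose.
    return output


def run_configuration(configuration):
    """Run one Mapper per input split and collect all emitted pairs."""
    emitted = []
    for split in configuration:
        emitted.extend(run_mapper(split))
    return sorted(emitted)


row1 = {"type": "config", "threshold": 0.8}
row2 = {"type": "data", "id": "r2", "quality": 0.9}

one_mapper = run_configuration([[row1, row2]])     # both rows in one Mapper
two_mappers = run_configuration([[row1], [row2]])  # row 2 in a second Mapper
fault_revealed = one_mapper != two_mappers
```

With a single Mapper the program emits a result for row 2, but when row 2 is assigned to its own Mapper nothing is emitted. Under these assumptions, the difference between the two outputs is what signals the functional fault.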