
...[15, 26, 45]) simply omit memory model considerations....

[...]

...Past research on mapping dynamic programming, e.g., the Smith-Waterman (SWat) algorithm, onto the GPU used graphics primitives [15, 14] in a task parallel fashion....

[...]

..., Smith-Waterman [25]), and bitonic sort [4] — and evaluate their effectiveness....

[...]

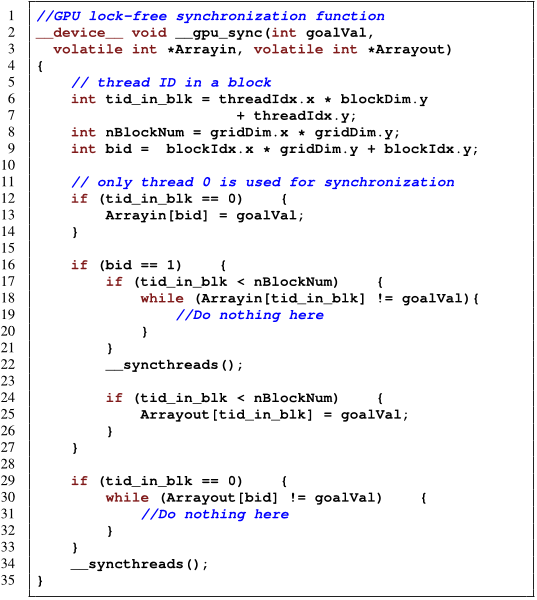

...This is due to three facts: 1) there have been many works [19, 25, 15, 6, 10] aimed at decreasing the computation time....

[...]

...The three algorithms are Fast Fourier Transformation [16], Smith-Waterman [25], and bitonic sort [4]....

[...]

...We then introduce these GPU synchronization strategies into three different algorithms — Fast Fourier Transform (FFT) [16], dynamic programming (e.g., Smith-Waterman [25]), and bitonic sort [4] — and evaluate their effectiveness....

[...]

...More detailed information about bitonic sort is in [4]....

[...]

...Bitonic sort, devised by Ken Batcher [4], is one of the fastest sorting networks [13], which are a special type of sorting algorithm....
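To make the structure of the network concrete, here is a minimal sequential C++ sketch of Batcher's bitonic sort. It assumes the input length is a power of two, and the function name `bitonic_sort` is hypothetical; this is not the CUDA SDK implementation or the authors' code. The inner loop over `i` holds the independent comparators of one step; on a GPU those comparators would be spread across threads, and consecutive `(k, j)` steps would have to be separated by a barrier.

```cpp
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Sorts `a` in ascending order using a bitonic sorting network.
// Assumption for this sketch: a.size() is a power of two.
void bitonic_sort(std::vector<int>& a) {
    const std::size_t n = a.size();
    assert((n & (n - 1)) == 0 && "length must be a power of two");
    // k: size of the bitonic sequences being merged in this stage.
    for (std::size_t k = 2; k <= n; k <<= 1) {
        // j: distance between the two elements a comparator touches.
        for (std::size_t j = k >> 1; j > 0; j >>= 1) {
            // All comparators in one (k, j) step are independent, so a
            // parallel version could assign one per thread; a barrier is
            // needed before the next step reads the updated array.
            for (std::size_t i = 0; i < n; ++i) {
                const std::size_t partner = i ^ j;
                if (partner > i) {
                    // Direction alternates between blocks of size k.
                    const bool ascending = (i & k) == 0;
                    if ((a[i] > a[partner]) == ascending) {
                        std::swap(a[i], a[partner]);
                    }
                }
            }
        }
    }
}
```

For example, sorting `{5, 1, 4, 8, 2, 7, 3, 6}` yields `{1, 2, 3, 4, 5, 6, 7, 8}`. The `(k, j)` loop nest is kept explicit because each iteration of it corresponds to one synchronization point in a parallel implementation.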

[...]

...A detailed description of the FFT algorithm can be found in [16]....
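As a rough illustration of the algorithm's structure, the following is a minimal recursive radix-2 Cooley-Tukey FFT in C++. It is a generic textbook sketch, not the implementation evaluated in the paper; it assumes a power-of-two input length, and the names `fft` and `cd` are hypothetical. Each level of butterflies consumes the outputs of the level below it, which is why a GPU version that spreads one level across several blocks needs a synchronization point between levels.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

using cd = std::complex<double>;

// Forward DFT via recursive radix-2 Cooley-Tukey (no 1/n scaling).
// Assumption for this sketch: x.size() is a power of two.
std::vector<cd> fft(const std::vector<cd>& x) {
    const std::size_t n = x.size();
    assert((n & (n - 1)) == 0 && "length must be a power of two");
    if (n <= 1) return x;
    // Split into even- and odd-indexed halves.
    std::vector<cd> even(n / 2), odd(n / 2);
    for (std::size_t k = 0; k < n / 2; ++k) {
        even[k] = x[2 * k];
        odd[k] = x[2 * k + 1];
    }
    const std::vector<cd> E = fft(even);
    const std::vector<cd> O = fft(odd);
    const double pi = std::acos(-1.0);
    std::vector<cd> X(n);
    for (std::size_t k = 0; k < n / 2; ++k) {
        // Twiddle factor exp(-2*pi*i*k/n) applied to the odd half.
        const cd t = std::polar(1.0, -2.0 * pi * static_cast<double>(k) /
                                         static_cast<double>(n)) * O[k];
        // Butterfly: combine the two half-size transforms.
        X[k] = E[k] + t;
        X[k + n / 2] = E[k] - t;
    }
    return X;
}
```

For instance, the transform of the constant signal `{1, 1, 1, 1}` is `{4, 0, 0, 0}` up to floating-point rounding.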

[...]

...To accelerate FFT [16], Govindaraju et al....

[...]

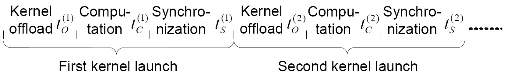

...However, GPUs typically map well only to data or task parallel applications whose execution requires minimal or even no interblock communication [9, 24, 26, 30]....

[...]

...With these programming models, more and more applications have been mapped to GPUs and accelerated [6, 7, 10, 12, 18, 19, 23, 24, 26, 30]....

[...]

As future work, the authors will first investigate why the FFT's synchronization time varies irregularly with the number of blocks in the kernel. Second, the authors will propose a general model to characterize the parallelism properties of algorithms, on the basis of which better performance can be obtained when parallelizing them on multi- and many-core architectures.

For bitonic sort, Greß et al. [7] reduce the algorithmic complexity of GPU-ABisort to O(n log n) by using an adaptive data structure that enables merges to be performed in linear time.
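For comparison, the cost of a standard bitonic sorting network can be worked out from its structure (this count is not stated in the text; it assumes n = 2^p inputs). The network has p merge stages, stage s consists of s compare-exchange steps, and every step applies n/2 independent comparators:

$$
\text{steps}(n) = \sum_{s=1}^{p} s = \frac{p(p+1)}{2},
\qquad
\text{comparators}(n) = \frac{n}{2}\cdot\frac{p(p+1)}{2} = O\!\left(n \log^2 n\right),
\qquad p = \log_2 n .
$$

For n = 8 (p = 3) this gives 6 steps and 24 comparators. In a multi-block implementation, steps whose comparator pairs cross block boundaries would need a global barrier between them, which is one reason the step count matters for inter-block synchronization.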

In addition, the authors allocate all of the shared memory available on an SM to each block, so that the memory constraint prevents any two blocks from being scheduled to the same SM.

In addition, the authors integrate each of their GPU synchronization approaches into a micro-benchmark and into three well-known algorithms: FFT, dynamic programming, and bitonic sort.

Another parallel implementation of bitonic sort is provided in the CUDA SDK [21], but its kernel uses only one block so that it can rely on the available barrier function __syncthreads(). This restricts the maximum number of items that can be sorted to 512, the maximum number of threads in a block.

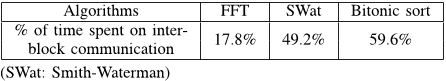

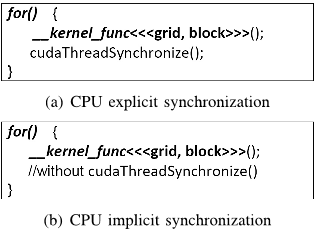

As described in [29], the barrier function cannot guarantee that inter-block communication is correct unless a memory consistency model is assumed.

It is worth noting that in step 2) above, rather than having a single thread check all elements of Arrayin serially as in [29], the authors use N threads to check the elements of Arrayin in parallel.
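The full barrier algorithm is not reproduced in this section, so what follows is only a CPU analogue under stated assumptions, not the authors' GPU code. Each GPU block is modeled as a `std::thread`; the hypothetical arrays `arrive_in_` (standing in for Arrayin) and `arrive_out_` record arrivals and releases, one slot per block; and a designated block 0 collects arrivals and then releases everyone. Sequentially consistent `std::atomic` operations stand in for the memory consistency model that [29] says must be assumed, so data written before the barrier is visible to every block after it.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <thread>
#include <vector>

// Hypothetical CPU analogue of a lock-free inter-block barrier.
// Slot i of each array is written only by (or for) "block" i.
class LockFreeBarrier {
public:
    explicit LockFreeBarrier(std::size_t n) : arrive_in_(n), arrive_out_(n) {
        assert(n > 0);
        for (auto& a : arrive_in_) a.store(0);
        for (auto& a : arrive_out_) a.store(0);
    }

    // Called by block `id`. Assumption: `goal` is 1 on the first round and
    // increases by one every round, so stale values are never mistaken
    // for a new arrival or release.
    void wait(std::size_t id, int goal) {
        // Step 1: announce arrival by writing the goal into our own slot.
        arrive_in_[id].store(goal);
        if (id == 0) {
            // Step 2: the collector waits until every slot holds the goal.
            // (Sequential scan for brevity; the text assigns one thread
            // per slot so that these checks run in parallel.)
            for (auto& a : arrive_in_)
                while (a.load() != goal) std::this_thread::yield();
            // Release every block through its own output slot.
            for (auto& a : arrive_out_) a.store(goal);
        }
        // Step 3: each block spins only on its own output slot.
        while (arrive_out_[id].load() != goal) std::this_thread::yield();
    }

private:
    std::vector<std::atomic<int>> arrive_in_;
    std::vector<std::atomic<int>> arrive_out_;
};
```

A typical use has every block write its share of a result, call `wait(id, ++goal)`, and only then read data produced by other blocks. A second `wait` before the next round's writes keeps fast blocks from overwriting values that slower blocks are still reading.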

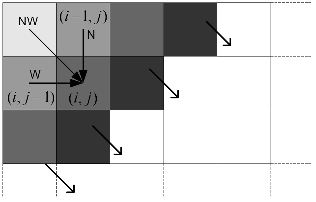

Past research on mapping dynamic programming, e.g., the Smith-Waterman (SWat) algorithm, onto the GPU used graphics primitives [14], [15] in a task-parallel fashion.
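To show where the parallelism in this dynamic program lies, here is a minimal C++ sketch of Smith-Waterman local-alignment scoring with a linear gap penalty. It is not the method of [14], [15] or of the authors. The scoring values (+2 match, -1 mismatch, gap penalty 1) and the function name are hypothetical choices for illustration. Cells are filled anti-diagonal by anti-diagonal: every cell on one anti-diagonal depends only on the two previous ones, so a parallel version could compute a whole anti-diagonal at once and synchronize before moving to the next.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Returns the best local-alignment score of `a` and `b`.
// Hypothetical scoring scheme: +match, +mismatch (negative), -gap per gap.
int smith_waterman_score(const std::string& a, const std::string& b,
                         int match = 2, int mismatch = -1, int gap = 1) {
    assert(gap >= 0 && "gap is a penalty and is subtracted from the score");
    const std::size_t m = a.size(), n = b.size();
    // H[i][j]: best score of a local alignment ending at a[i-1], b[j-1].
    std::vector<std::vector<int>> H(m + 1, std::vector<int>(n + 1, 0));
    int best = 0;
    // Sweep anti-diagonals d = i + j in order (wavefront order).
    for (std::size_t d = 2; d <= m + n; ++d) {
        // Keep 1 <= i <= m and 1 <= j = d - i <= n.
        const std::size_t i_lo = d > n ? d - n : 1;
        const std::size_t i_hi = std::min(m, d - 1);
        // Cells in this loop are mutually independent.
        for (std::size_t i = i_lo; i <= i_hi; ++i) {
            const std::size_t j = d - i;
            const int diag = H[i - 1][j - 1] + (a[i - 1] == b[j - 1] ? match : mismatch);
            H[i][j] = std::max({0, diag, H[i - 1][j] - gap, H[i][j - 1] - gap});
            best = std::max(best, H[i][j]);
        }
    }
    return best;
}
```

With these parameters, `smith_waterman_score("ACGT", "ACGT")` is 8 (four matches), and `smith_waterman_score("ACGT", "AGT")` is 5, from aligning `ACGT` against `A-GT`.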