...One possibility is to use floor plans or a 3D reconstruction system to model the room space, such as presented by Pintore and Gobbetti [22] or by Simon and Berger [27]....

[...]

...SIFT features [21] are invariant to image scale and rotation and are shown to be robust, to some extent, to affine distortion, change in viewpoint and change in illumination....

[...]

...This is algebraically expressed as [11]:...

[...]

...A plane projective transformation is a planar homology if it has a line of fixed points (the axis), together with a fixed point not on the line (the vertex) [11, 14]....
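
The definition above can be checked numerically. The following is a minimal sketch, assuming the standard parameterization of a planar homology, H = I + (mu - 1) v a^T / (v^T a), with vertex v, axis a and characteristic ratio mu. The function names and the example values are illustrative and do not come from the cited works.

```python
def homology(vertex, axis, mu):
    """Build H = I + (mu - 1) * v a^T / (v^T a) as a 3x3 nested list."""
    dot = sum(v * a for v, a in zip(vertex, axis))
    if abs(dot) < 1e-12:
        raise ValueError("the vertex must not lie on the axis")
    k = (mu - 1.0) / dot
    return [[(1.0 if r == c else 0.0) + k * vertex[r] * axis[c]
             for c in range(3)] for r in range(3)]


def apply(H, x):
    """Apply a 3x3 transformation to a homogeneous 2-D point."""
    return [sum(H[r][c] * x[c] for c in range(3)) for r in range(3)]


def same_point(p, q, tol=1e-9):
    """Two homogeneous vectors are the same point if they are parallel."""
    cross = [p[1] * q[2] - p[2] * q[1],
             p[2] * q[0] - p[0] * q[2],
             p[0] * q[1] - p[1] * q[0]]
    return all(abs(c) < tol for c in cross)


# Illustrative values: the axis is the line x = 0, the vertex is (1, 0).
axis = [1.0, 0.0, 0.0]
vertex = [1.0, 0.0, 1.0]
H = homology(vertex, axis, mu=2.0)

on_axis = [0.0, 3.0, 1.0]   # satisfies axis . x = 0
elsewhere = [1.0, 1.0, 1.0]  # neither on the axis nor the vertex
```

Every point on the axis and the vertex itself are mapped to themselves (up to scale), while a generic point such as `elsewhere` moves along the line joining it to the vertex.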

[...]

The authors presented a method for interactive building of multiplanar environments that has been validated on both synthetic and real data. The authors have shown the benefits of using semi-automatic rather than fully automatic algorithms for online building and augmenting of multiplanar scenes. When a lot of keyframes are available, this procedure may be time consuming.

As d1 is an overall scale factor, and as the authors can use ||n1|| = ||n2|| = 1, the authors have to estimate 11 parameters: 2 for π1, 3 for π2, 3 for R and 3 for t.
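
The parameter count can be made concrete with a small sketch. It assumes one possible minimal parameterization, which the source does not specify: each unit normal is encoded by two spherical angles, d1 is fixed to 1 to remove the overall scale, R is encoded as a 3-component rotation vector, and t is a plain 3-vector. The names `unit_normal` and `unpack` are hypothetical.

```python
import math


def unit_normal(theta, phi):
    """Unit vector from two spherical angles (assumed encoding of a normal)."""
    return (math.sin(theta) * math.cos(phi),
            math.sin(theta) * math.sin(phi),
            math.cos(theta))


def unpack(params):
    """Split an 11-vector into two planes, a rotation and a translation."""
    if len(params) != 11:
        raise ValueError("expected exactly 11 parameters")
    th1, ph1, th2, ph2, d2 = params[:5]
    return {
        # 2 parameters: the normal of plane 1; d1 is fixed to 1 (scale).
        "plane1": (unit_normal(th1, ph1), 1.0),
        # 3 parameters: the normal of plane 2 plus its distance d2.
        "plane2": (unit_normal(th2, ph2), d2),
        # 3 parameters: rotation vector (axis-angle, an assumption).
        "rotation_vector": tuple(params[5:8]),
        # 3 parameters: translation.
        "translation": tuple(params[8:11]),
    }


example = unpack([0.3, 1.1, 1.2, -0.4, 2.5, 0.0, 0.0, 0.1, 1.0, 0.0, 0.0])
```

The unit-norm constraints are satisfied by construction, which is why each normal costs only 2 of the 11 parameters.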

The scale-dependent threshold problem may be tackled by segmenting planes in the 2-D video images instead of in the 3-D point cloud.

For instance, in [17], user cooperation is used for initializing the map: when the system is started, the user places the camera above the workspace and presses a key to capture a first key-frame.

Hz in tracking + filtering mode on a Dell Precision 390 PC, 2.93 GHz, while part of the processor was used to capture a video of the screen.

A minimum requirement to be able to perform this task is that some planar surfaces are identified in the map, upon which the virtual objects can be placed with correct orientation.

Planar surfaces also make it easy to handle self-occlusions of the map as well as collisions, occlusions, shadowing and reflections between the map and the added objects.

As the projection of the intersection line in the first image is known, the equations of the two planes can be expressed using 3 instead of 5 parameters.

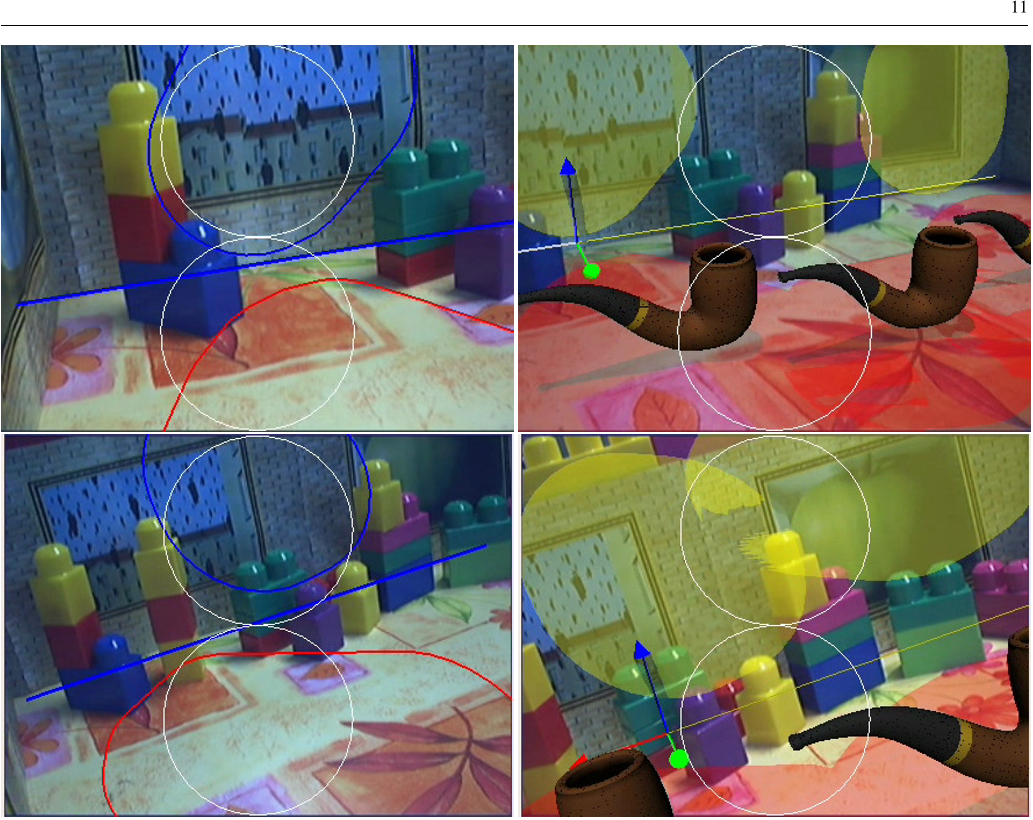

The benefit of using semi-automatic rather than fully automatic algorithms is that the authors can model relevant structures for augmentation.

Their reconstruction procedure only depends on homographies, which can be estimated from completely different sets of points matched between consecutive images.

The key idea is to represent the required posterior density function $p(x_i \mid z_{1:i})$, where $z_{1:i}$ is the set of all available measurements up to time $i$, by a set of random samples $x_i^j$ with associated weights $w_i^j$, and to compute estimates based on these samples and weights:

$$p(x_i \mid z_{1:i}) \approx \sum_{j=1}^{N} w_i^j \, \delta(x_i - x_i^j), \qquad \sum_{j=1}^{N} w_i^j = 1.$$
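
The weighted-sample approximation can be illustrated with a short sketch. It assumes a scalar state and a Gaussian measurement likelihood purely for illustration; the source does not state the state vector or the likelihood used, and all function names here are hypothetical.

```python
import math


def normalize(weights):
    """Rescale the weights so that they sum to 1."""
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must have a positive sum")
    return [w / total for w in weights]


def gaussian_likelihood(z, x, sigma):
    """Unnormalized likelihood of measurement z given state x (assumed model)."""
    return math.exp(-0.5 * ((z - x) / sigma) ** 2)


def update(particles, weights, z, sigma):
    """Reweight each sample by its likelihood, then renormalize."""
    reweighted = [w * gaussian_likelihood(z, x, sigma)
                  for x, w in zip(particles, weights)]
    return normalize(reweighted)


def posterior_mean(particles, weights):
    """Estimate E[x | z] as the weighted sum of the samples."""
    return sum(w * x for x, w in zip(particles, weights))


# Three samples with uniform prior weights, one measurement at z = 1.
particles = [0.0, 1.0, 2.0]
weights = normalize([1.0, 1.0, 1.0])
weights = update(particles, weights, z=1.0, sigma=1.0)
estimate = posterior_mean(particles, weights)
```

Because the samples are symmetric around the measurement, the sample at 1.0 receives the largest weight and the estimate equals 1.0. A full particle filter would add a prediction step and resampling, which are omitted here.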