Load-Store Queue Management: an Energy-Efficient Design

Based on a State-Filtering Mechanism

∗

Fernando Castro, Daniel Chaver, Luis Pinuel, Manuel Prieto, Francisco Tirado

University Complutense of Madrid

fcastror@fis.ucm.es, {dani02, lpinuel, mpmatias, ptirado}@dacya.ucm.es

Michael Huang

University of Rochester

michael.huang@ece.rochester.edu

Abstract

Modern microprocessors incorporate sophisticated techniques to

allow early execution of loads without compromising program cor-

rectness. To do so, the structures that hold the memory instructions

(Load and Store Queues) implement several complex mechanisms

to dynamically resolve the memory-based dependences. Our main

objective in this paper is to design an efficient LQ-SQ structure,

which saves energy without sacrificing much performance. We pro-

pose a new design that divides the Load Queue into two structures, a

conventional associative queue and a simpler FIFO queue that does

not allow associative searching. A dependence predictor predicts

whether a load instruction has a memory dependence on any in-

flight store instruction. If so, the load is sent to the conventional

associative queue. Otherwise, it is sent to the non-associative queue,

which can only detect dependences in an inexact and conservative

way. In addition, the load will not check the store queue at execution

time. Combined, these measures reduce energy consumption.

We explore different predictor designs and runtime policies. Our

experiments indicate that such a design can reduce the energy con-

sumption in the Load-Store Queue by 35-50% with an insignificant

performance penalty of about 1%. When the energy cost of the in-

creased execution time is factored in, the processor still makes net

energy savings of about 3-4%.

1 Introduction

Modern out-of-order processors usually employ an array of sophis-

ticated techniques to allow early execution of loads to improve per-

formance. Almost all designs include techniques such as load by-

passing and load forwarding. More aggressive implementations go

a step further and allow speculative execution of loads when the ef-

fective address of a preceding store is not yet resolved. Such spec-

ulative execution can be premature if an earlier store in program

order writes to (part of) the memory space loaded and executes af-

terwards. Clearly, speculative techniques have to be applied such

that program correctness is not compromised. Thus, the processor

needs to detect, squash, and re-execute premature loads. All subsequent

instructions, or at least the instructions that depend on the load,

need to be re-executed as well.

To ensure safe out-of-order execution of memory instruc-

tions, conventional implementations employ extensive buffering

and cross-checking through what is referred to as the load-store

queue, often implemented as two separate queues, the load queue

(LQ) and the store queue (SQ). A memory instruction of one type

∗

This work has been supported by the Spanish government through the research

contract TIC 2002-750, the Hipeac European Network of Excellence,

and by the National Science Foundation through the grant CNS-0509270.

needs to check the queue of the opposite kind in an associative fash-

ion: a load searches the SQ to forward data from an earlier, in-flight

store and a store searches the LQ to identify loads that have exe-

cuted prematurely. The logic used is complex as it involves asso-

ciative comparison of wide operands (memory addresses), requires

priority encoding, and has to detect and handle non-trivial situations

such as operand size mismatch or misaligned data. These complex

implementations come at the expense of high energy consumption

and it is expected that this consumption will grow in future designs.

This is because the capacity of the queues needs to be scaled up to

accommodate more instructions to effectively tolerate the long, and

perhaps still growing, memory latencies. Furthermore, scaling of

these structures also increases their access latency, which makes it

hard to incorporate them in a high-frequency design. Beyond cer-

tain limits, further increasing the capacity will require multi-cycle

accesses, which can offset the benefit of increased capacity of in-

flight instructions.

To address the design challenges of orchestrating out-of-order

memory instruction execution, we explore a management strategy

that matches the characteristics of load instructions with the cir-

cuitry that handles them. We divide loads into those that tend to

communicate with in-flight stores and those that do not. This divi-

sion is driven by the empirical observation that most load instruc-

tions are strongly biased toward one type or another. We use the

conventional circuitry to handle the former, and a simpler alterna-

tive design for the latter. In this paper, we study both static and

dynamic mechanisms to classify load instructions into the two cat-

egories mentioned above. We also explore hardware support and

runtime policies in this design. We show that our design is effec-

tive, saving about 35-50% of the LSQ energy at a small performance

penalty of a few percent. After factoring in the energy lost due to

the slowdown, the processor as a whole still makes net energy sav-

ings. Thanks to the improved scalability of our design, we can also

increase the capacity of the LSQ much more easily and at a lower

energy overhead. This can mitigate the performance degradation

and the energy waste due to the slowdown.

The rest of the paper is organized as follows: Section 2 describes

our alternative LQ-SQ implementation; Section 3 outlines our ex-

perimental framework; Section 4 presents the quantitative analysis

of the design; Section 5 discusses the related work; and finally, Sec-

tion 6 concludes.

2 Architectural Design

2.1 Highlight of Conventional Design

High-performance microprocessors typically employ very aggres-

sive strategies for out-of-order memory instruction execution.

Loads access memory before prior stores have committed their data


(buffered in the SQ) to the memory subsystem. To maintain pro-

gram semantics, if an earlier in-flight store writes to the same loca-

tion the load is reading from, the data needs to be forwarded from

the SQ (see Figure 1). This involves associative searching of the

SQ to match the address and finding out the closest producer store

through priority encoding. In most implementations, a load can ex-

ecute despite the presence of prior store instructions with an unre-

solved address. Such speculative load execution may be incorrect if

the unresolved stores turn out to access the same location. To detect

and recover from mis-speculation, every store searches the LQ in

an associative manner to find younger loads with the same address

and, when one is found, initiates a squash and re-execution. This is

referred to as a load-store replay in [8].
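The two associative checks described above can be sketched in a few lines of Python. This is only an illustrative model, not the hardware logic: the `StoreEntry` and `LoadEntry` records, the `seq` field used as a program-order tag, and both function names are assumptions introduced here, and the sketch ignores operand size mismatch and misaligned accesses.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class StoreEntry:
    seq: int              # program-order tag (smaller means older); hypothetical field
    addr: Optional[int]   # None while the effective address is unresolved
    data: int = 0


@dataclass
class LoadEntry:
    seq: int
    addr: int
    executed: bool = False


def forward_from_sq(sq: List[StoreEntry], load: LoadEntry) -> Optional[int]:
    """Associative SQ search: return data of the nearest older store to the same address."""
    older_matches = [s for s in sq if s.seq < load.seq and s.addr == load.addr]
    if not older_matches:
        return None  # no forwarding; the load reads from the memory subsystem
    # Priority encoding: among all matches, pick the youngest older store.
    return max(older_matches, key=lambda s: s.seq).data


def premature_loads(lq: List[LoadEntry], store: StoreEntry) -> List[LoadEntry]:
    """Associative LQ search: younger loads to the same address that already executed."""
    return [l for l in lq
            if l.seq > store.seq and l.executed and l.addr == store.addr]
```

In this model, a non-empty result from `premature_loads` corresponds to a load-store replay.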

Figure 1. Conventional load-store queue design. A load

checks the SQ associatively to forward data from the nearest

older store to the same location. A store checks the LQ asso-

ciatively to find younger loads to the same location that have

executed prematurely.

In typical implementations [11, 18], a replay squashes and re-

executes all instructions younger than the offending load. To reduce

the frequency of replays, some processors use a simple PC-indexed

table to predict whether a load will be dependent upon a previous

store [8]. Loads predicted to be dependent will wait until all prior

stores are resolved, whereas other loads execute as soon as their

effective address is available.

2.2 Rationale

While load forwarding, bypassing, and speculative execution im-

prove performance, they also require a large amount of hardware

that increases energy consumption. In this paper we explore an al-

ternative load-store queue design with a different management pol-

icy that allows for a significant energy reduction. This new approach

is based on the following observations:

1. Memory-based dependences are relatively infrequent. Our ex-

periments indicate that only around 12% of dynamic load in-

stances need forwarding. This suggests that the complex dis-

ambiguation hardware in modern microprocessors is underuti-

lized.

2. On average, around 74% of the memory instructions that ap-

pear in a program are loads. Therefore, their contribution to

the dynamic energy spent by the disambiguation hardware is

greater than that of the stores. This suggests that more atten-

tion must be paid to loads.

3. The behavior of a large majority of load instructions is usually

strongly biased. They either frequently communicate with an

in-flight store or almost never do so. This suggests that they

can be identified either through profiling or through a PC-

based predictor at runtime, and treated differently according

to their bias.

2.3 Overall Structure

Based on the above observations, we propose a design where we

identify those loads that rarely communicate with in-flight stores

and handle these loads using a different queue specially optimized

for them. For convenience of discussion, we loosely refer to these

loads as independent loads and to the remainder as dependent loads.

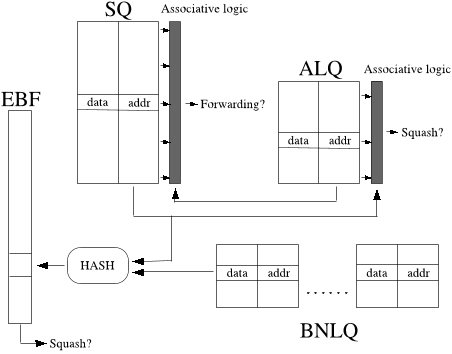

Figure 2. Design of modified LQ-SQ with filtering. The LQ

is split into two queues, an Associative Load Queue (ALQ)

and a Banked Non-associative Load Queue (BNLQ).

The overall structure of our design is shown in Figure 2. The con-

ventional LQ is split into two different structures: the Associative

Load Queue (ALQ) and the Banked Non-associative Load Queue

(BNLQ). The ALQ is similar to a conventional LQ, but smaller. It

provides fields for the address and the load data, as well as con-

trol logic to perform associative searches. The BNLQ is a non-

associative buffer that holds the load address and the load data. Its

logic is much simpler than that of the ALQ, mostly due to the absence

of hardware for performing associative searches. The banking of

the BNLQ is purely for energy savings and not based on address.

Therefore, architecturally, the BNLQ is just a FIFO buffer simi-

lar to the ROB (re-order buffer). Though independent loads rarely

communicate with in-flight stores, we still need to detect any com-

munication and ensure that a load gets the correct data. To do so,

we use a mechanism denoted as Exclusive Bloom Filter (EBF) to

allow quick but conservative detection of potential communication

between an independent load and an in-flight store and perform a

squash when necessary.

2.4 Using BNLQ and EBF to Handle Independent

Loads

According to its dependency prediction (Section 2.5), a load is sent

to the ALQ or the BNLQ. Since an independent load is unlikely to

communicate with an in-flight store, we allocate an entry for it in

the BNLQ that does not provide the associative search capability.

Additionally, the load does not access the SQ for forwarding at the

time of issue. As such, if an in-flight store does write to the same


location as the load, the data returned by the load could be incorrect

and we need to take corrective measures.

To detect this situation, we use a bloom filter-based mechanism

similar to that proposed in [16]. The filter we use, denoted as EBF,

is a table of counters. When a load in the BNLQ is issued, it ac-

cesses the EBF based on its address and increments the correspond-

ing counter. (In our setup, counter overflow is very rare and thus

can be handled by any convenient mechanism as long as forward

progress is guaranteed. For example, the load can be rejected and

retried later. It can proceed when the counter is decremented or

when it becomes the oldest memory instruction in the processor, at

which time it can proceed without accessing the EBF.) When a store

commits, it checks the EBF using its address. If the corresponding

counter is greater than zero, then potentially one or more loads in

the BNLQ have accessed the memory prematurely. In this case, the

system conservatively squashes all instructions after the store. Note

that an EBF hit can be a false hit when the conflicting load and store

actually access different locations. We study a design that mitigates

the impact of such false hits in Section 2.6.
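As a rough illustration of the counter protocol described above, the following Python sketch models the EBF as a table of saturating counters. The class and method names are assumptions made for this example; the table size (4001) and counter width (4 bits) follow the values given elsewhere in the paper, and overflow is handled by rejecting the load, one of the options the text allows.

```python
class ExclusiveBloomFilter:
    """Toy model of the EBF: one small counter per entry, indexed by address."""

    def __init__(self, size: int = 4001, counter_bits: int = 4):
        self.size = size
        self.max_count = (1 << counter_bits) - 1
        self.counters = [0] * size

    def index(self, addr: int) -> int:
        return addr % self.size

    def load_issue(self, addr: int) -> bool:
        """Called when a BNLQ load issues. Returns False if the counter would overflow."""
        i = self.index(addr)
        if self.counters[i] == self.max_count:
            return False  # caller rejects the load and retries it later
        self.counters[i] += 1
        return True

    def load_commit(self, addr: int) -> None:
        """Called when a BNLQ load commits: undo its increment."""
        i = self.index(addr)
        if self.counters[i] > 0:
            self.counters[i] -= 1

    def store_commit_hits(self, addr: int) -> bool:
        """Called when a store commits. True means a (possibly false) conflict."""
        return self.counters[self.index(addr)] > 0
```

A hit reported by `store_commit_hits` is conservative: two different addresses that map to the same entry are indistinguishable in this structure.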

To correctly maintain the counter in the EBF, when the load in

the BNLQ commits, its corresponding EBF counter is decremented.

Additionally, when wrong-path instructions or replayed instructions

are flushed from the system, their modifications to the EBF should

be undone, otherwise the “residue” will quickly “clog” the filter.

This can be achieved by walking through the section of the BNLQ

corresponding to the flushed instructions and decrementing the EBF

counter for any load that has issued. This is the solution we assume

in this paper. We have also experimented with “self-cleaning” EBFs

that do not actively clean out the residue. Instead, these filters tol-

erate the accumulation of residue and rely on periodic cleaning of

the whole filter to remove any residue. For example, we can use

alternating EBFs by dividing the dynamic instructions into fixed-

sized blocks and use a different EBF for a different block. When all

the instructions in one block have been committed, all entries in the

corresponding EBF can be reset to zero, cleaning all residue. However,

in our limited exploration, we found that tolerating the residue

increases the false hit rate of the EBF and causes unnecessary flushes. The

increase can be quite significant in certain applications.
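The active cleanup assumed in this paper (walking the flushed section of the BNLQ) might look like the sketch below. It is a simplified software model: the BNLQ is a program-ordered list of dictionaries with hypothetical `seq`, `addr`, and `issued` fields, and the EBF is a plain list of counters indexed by `addr % len(counters)`.

```python
def undo_flushed_loads(counters, bnlq, first_flushed_seq):
    """Remove flushed loads from the BNLQ and undo their EBF increments.

    counters: list of EBF counters, indexed by addr % len(counters)
    bnlq: list of dicts in program order with keys "seq", "addr", "issued"
    first_flushed_seq: program-order tag of the oldest flushed instruction
    Returns the list of surviving (non-flushed) BNLQ entries.
    """
    survivors = []
    for load in bnlq:
        if load["seq"] >= first_flushed_seq:
            # Only loads that actually issued have touched the EBF.
            if load["issued"]:
                counters[load["addr"] % len(counters)] -= 1
        else:
            survivors.append(load)
    return survivors
```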

Load instructions allocated into the ALQ are still handled con-

ventionally. They check the store queue upon execution and the

stores check the ALQ too. Thus, when an independent load is dis-

patched and the BNLQ is full, it is accommodated in the ALQ.

Although this “upgrade” increases the energy expenditure for that

load instruction, it avoids stalling dispatch which not only slows

down the program but also increases energy consumption. On the

other hand, a load predicted to go to the ALQ is not allocated into

the BNLQ when the ALQ is full. The reasoning is that there is a

high likelihood that doing so would trigger a squash which is very

costly both performance-wise and energy-wise. When the ALQ is

full during the dispatch of a dependent load, we simply stall the

dispatch.
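The asymmetric allocation policy above can be summarized as a small decision function. This is a sketch only; the function name and return labels are made up for illustration, and the behavior when both queues are full for an independent load (stalling) is an assumption, since the text does not spell out that case.

```python
def dispatch_load(predicted_dependent: bool, alq_free: int, bnlq_free: int) -> str:
    """Decide where a load goes at dispatch: "ALQ", "BNLQ", or "STALL"."""
    if predicted_dependent:
        # Dependent loads are never downgraded to the BNLQ: a squash is too costly.
        return "ALQ" if alq_free > 0 else "STALL"
    if bnlq_free > 0:
        return "BNLQ"
    # Independent load with a full BNLQ: "upgrade" it to the ALQ if possible.
    # Stalling when both queues are full is an assumption of this sketch.
    return "ALQ" if alq_free > 0 else "STALL"
```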

Finally, the hashing function we use is simple:

address % EBF size. We use a prime number (4001) as the size

of the EBF. Through experiments, we confirmed the

intuition that the false hit rate is lower on average than with a

similar size that is a power of two (4096).
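One way to see the intuition is to hash a strided address stream with both table sizes. The example below is a constructed worst case (a stride of exactly 4096 bytes), not one of the paper's experiments, and the helper name `distinct_entries` is an assumption.

```python
def distinct_entries(addresses, ebf_size):
    """Number of different EBF entries touched by a set of addresses."""
    return len({addr % ebf_size for addr in addresses})


# Sixteen accesses with a 4096-byte stride (for example, walking a column
# of a matrix whose rows are 4 KB apart).
strided = [0x10000 + k * 4096 for k in range(16)]

power_of_two_entries = distinct_entries(strided, 4096)
prime_entries = distinct_entries(strided, 4001)
```

With the power-of-two size all sixteen addresses fall into a single entry, whereas with the prime size they spread over sixteen different entries, so an unrelated store is far less likely to collide with all of them at once.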

2.5 Dependency Prediction of Loads

In our design of the LQ-SQ mechanism, when a load instruction

is decoded, either a dynamic or a profile-based predictor suggests

which queue the load should be accommodated in. We

study two different predictors.

2.5.1 Profiling-Based Predictor

In a profiling-based system, every static load is tagged as depen-

dent or independent based on profiling information. This way, load

dependence prediction is tied to the static instructions. This is a rea-

sonable approach because a large majority of loads have a strongly

biased behavior: they either frequently or almost never communi-

cate with an in-flight store. Table 1 shows the breakdown. On av-

erage, 92% of the static load instructions are strongly biased. The

dynamic instances of these instructions constitute a slightly lower

88% of all dynamic load instructions.

| Static instructions | INT Avg | FP Avg | TOT Avg |
|---|---|---|---|
| Biased Independent Loads | 75.5% | 87.9% | 82.4% |
| Biased Dependent Loads | 11.8% | 8.2% | 9.8% |
| Unbiased Loads | 12.7% | 3.9% | 7.8% |

| Dynamic instances | INT Avg | FP Avg | TOT Avg |
|---|---|---|---|
| Biased Independent Loads | 63.8% | 85.4% | 75.8% |
| Biased Dependent Loads | 16.1% | 8.9% | 12.1% |
| Unbiased Loads | 20.1% | 5.7% | 12.1% |

Table 1. Breakdown of static load instructions into strongly-biased dependent loads, strongly-biased independent loads, and unbiased loads (top), and the same breakdown when each load instruction is weighted by its total number of dynamic instances (bottom). Statistics are shown as the average of the integer applications, that of the floating-point applications, and the overall average. A load is considered a biased independent load when more than 99% of its dynamic instances do not depend on an in-flight store. It is considered a biased dependent load when more than 50% of its dynamic instances are dependent on an in-flight store.
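The classification rule stated in the caption of Table 1 can be written as a short function. This is an illustrative sketch; the function name and the use of per-load counts `t` (total dynamic instances) and `d` (instances that depend on an in-flight store) are assumptions. Integer comparisons are used to avoid floating-point rounding at the 99% boundary.

```python
def bias_class(t: int, d: int) -> str:
    """Classify a static load from its total (t) and dependent (d) instance counts."""
    if t <= 0 or d < 0 or d > t:
        raise ValueError("expected 0 <= d <= t and t > 0")
    if (t - d) * 100 > 99 * t:   # more than 99% of instances are independent
        return "biased independent"
    if 2 * d > t:                # more than 50% of instances are dependent
        return "biased dependent"
    return "unbiased"
```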

In the static profiling-based prediction, we mark as dependent any load instruction whose dynamic instances have a probability higher than a threshold T_h of conflicting with an in-flight store in a profiling run. More precisely, if t denotes the total number of instances of a certain static load, and d denotes the number of instances where this load hashes to the same EBF entry as an in-flight store, this load is predicted as dependent if d/t > T_h. This notion of dependency is broader than true data dependence, as a load instance is considered to be dependent even when there is a false dependence: a load and a store to different locations map to the same EBF entry.
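The tagging rule d/t > T_h amounts to a one-line test per static load. In the sketch below, the profile format (a dictionary from load PC to a `(t, d)` pair) and the function name are assumptions made for illustration.

```python
def classify_loads(profile, threshold):
    """Tag each static load as "dependent" or "independent".

    profile: dict mapping load PC -> (t, d), where t is the number of dynamic
             instances and d the number that hit the same EBF entry as an
             in-flight store during the profiling run
    threshold: the threshold T_h, a fraction between 0 and 1
    """
    tags = {}
    for pc, (t, d) in profile.items():
        # Loads never executed in the profiling run default to independent
        # (an assumption of this sketch).
        is_dependent = t > 0 and d / t > threshold
        tags[pc] = "dependent" if is_dependent else "independent"
    return tags
```

Lowering the threshold moves more loads to the ALQ, which reduces squashes at the cost of higher ALQ pressure and energy.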

Note that in a realistic implementation, the profiling is likely to

require simulation support as we need to obtain the microarchitec-

tural information of how many instances of a load communicate

with an in-flight store. To explore the optimal threshold T_h for each

application, in this paper, we simply perform brute-force search in

a region and find the one that best balances energy savings with

performance degradation. This exploration is discussed further in

Section 4. Once an optimal threshold is selected for an applica-

tion, static loads are marked in the program binary as dependent or

independent based on the threshold. This, of course, implies ISA

(instruction set architecture) support.

2.5.2 Dynamic Predictor

An alternative to a profile-based system is to generate dependence

prediction during execution. In our approach, the prediction infor-


mation is stored in a PC-indexed table similar to the one used in

the Alpha 21264 [8] to delay certain loads and thereby reduce replay frequency.

This information determines which queue a load is allocated to. Ini-

tially, all loads are considered independent. This initial prediction

is changed if a dependence is detected. As to what updates can

be made to an entry already in “dependent” state, we consider two

different policies. One possible choice, which we explored in [6],

is to hold this prediction for the rest of the execution. This deci-

sion, based on the stable behavior observed in Table 1, is simple and

works reasonably well. A second policy includes periodic refresh-

ing of the table, restoring all predictions to “independent” [7]. The

idea behind this policy is that even loads that rarely communicate

with an in-flight store will be predicted as dependent after the first

instance of an EBF match, forcing all subsequent instances of the

loads to the ALQ. Using periodic refreshing, this effect is limited

to within a time interval. We use this policy as it improves perfor-

mance at a very small hardware cost [6]. Unlike the profiling-based

approach, the dynamic alternative needs neither changes to the ISA

nor profiling. However, it requires extra storage and a prediction

training phase after each refresh of the table.
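A minimal model of the dynamic predictor with periodic refreshing is sketched below. The table size, the refresh interval, the indexing by `pc >> 2`, and all names are assumptions chosen for illustration; the paper does not fix these values in this section.

```python
class DependencePredictor:
    """PC-indexed table of one-bit predictions, periodically reset to independent."""

    def __init__(self, entries: int = 1024, refresh_interval: int = 1_000_000):
        self.dependent = [False] * entries   # initially all loads are independent
        self.refresh_interval = refresh_interval
        self.cycles_since_refresh = 0

    def _index(self, pc: int) -> int:
        # Drop the low bits of the PC (assumes 4-byte instructions).
        return (pc >> 2) % len(self.dependent)

    def predict_dependent(self, pc: int) -> bool:
        return self.dependent[self._index(pc)]

    def mark_dependent(self, pc: int) -> None:
        """Called when a dependence is detected for this load."""
        self.dependent[self._index(pc)] = True

    def tick(self, cycles: int = 1) -> None:
        """Advance time; restore every prediction to independent at each interval."""
        self.cycles_since_refresh += cycles
        if self.cycles_since_refresh >= self.refresh_interval:
            self.dependent = [False] * len(self.dependent)
            self.cycles_since_refresh = 0
```

Dropping the call to `tick` gives the first policy, in which a "dependent" prediction is held for the rest of the execution.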

When a store finds an EBF hit with its address, there is a potential

dependence between the store and a load in the BNLQ. Because the

BNLQ lacks associative search capability, however, we cannot

find the offending load the way we would in a conventional LQ.

Therefore, as mentioned earlier, we simply squash all instructions

after the store. However, we need to identify the load(s) that conflict

with the store so that we can train the predictor to direct them to the

ALQ in the future. For this purpose, we introduce a special DPU

mode (dependence predictor update mode). In this mode, after the

squash, load instructions are inspected at commit time in order to

find out those that triggered the squash. We save the index of the

squash-triggering EBF entry and the counter value at the time of

squash in two dedicated registers. When an independent load com-

mits during the DPU mode, its EBF entry index is compared to the

saved index. Upon a match, its dependency prediction is changed

to “dependent” in the PC-indexed predictor. The DPU mode termi-

nates when the number of matching loads reaches the saved count.

Note that in the re-execution after the squash, the addresses of loads

in the BNLQ are not guaranteed to repeat those prior to the squash

– the processor may follow a different predicted path, for example.

Thus, the DPU mode should also terminate by time-out.
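The DPU mode can be modeled as a small state machine holding the two saved registers. In this sketch the time-out is counted in committed independent loads, which is an assumption (the text only says that a time-out is needed), and the predictor is represented by a plain set of PCs marked as dependent.

```python
class DpuMode:
    """Dependence predictor update mode, entered after an EBF-triggered squash."""

    def __init__(self, timeout_commits: int = 256):
        self.timeout_commits = timeout_commits
        self.active = False
        self.saved_index = None   # index of the squash-triggering EBF entry
        self.remaining = 0        # counter value saved at the time of the squash
        self.budget = 0           # commits left before the mode times out

    def start(self, ebf_index: int, counter_value: int) -> None:
        self.active = True
        self.saved_index = ebf_index
        self.remaining = counter_value
        self.budget = self.timeout_commits

    def on_independent_load_commit(self, pc: int, ebf_index: int, dependent_pcs: set) -> None:
        """Inspect a committing BNLQ load; train the predictor on an index match."""
        if not self.active:
            return
        if ebf_index == self.saved_index:
            dependent_pcs.add(pc)
            self.remaining -= 1
        self.budget -= 1
        # Terminate once all matching loads are found, or on time-out.
        if self.remaining <= 0 or self.budget <= 0:
            self.active = False
```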

2.6 Handling of EBF False Hits

The price for the simple and fast membership test using the bloom

filter is the existence of false positives: an EBF match does not

necessarily suggest a true data dependence. In our base design de-

scribed above, a false dependence is treated just like a true depen-

dence. In addition to an unnecessary squash, the cost of a false de-

pendence also includes increased pressure on the ALQ, which needs

to accommodate all loads considered dependent, truly or falsely. To

avoid the cost associated with false hits, we consider architecture

support to handle them differently.

When an EBF hit happens at the commit time of a store, in-

stead of immediately squashing all subsequent instructions, we walk

through the BNLQ (from the oldest load backward) to determine

whether the hit is because of true dependences. This is done by

reading out the address of each load and comparing it with that of the

store. If an address match happens, we squash the offending load

and all subsequent instructions. (When using the dynamic predictor,

we also update the predictor at this time. Hence, there is no

need for the special DPU mode.) If we finish the search without

finding an address match, nothing needs to be done and we have avoided

an unnecessary squash.
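The sequential check performed on an EBF hit can be sketched as a simple scan. The tuple layout `(seq, addr, issued)` and the function name are assumptions for this example, and the comparison is on whole addresses only, ignoring partial overlaps between accesses of different sizes.

```python
def find_true_conflict(bnlq, store_addr):
    """Scan the BNLQ from the oldest load and return the tag of the first true conflict.

    bnlq: list of (seq, addr, issued) tuples in program order (oldest first)
    Returns the seq of the offending load, or None if the EBF hit was a false hit.
    """
    for seq, addr, issued in bnlq:
        if issued and addr == store_addr:
            return seq  # squash this load and everything younger
    return None  # false hit: no squash is needed
```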

This sequential searching of the BNLQ takes a non-trivial amount

of time. Assuming we can only read out one load address from

one bank of the BNLQ, the bandwidth of this searching is only

a few loads per cycle. Thus, the process can take tens of cycles.

However, this search happens in the background and during this pe-

riod, normal processing continues. The only constraints are that any

unchecked load in the BNLQ cannot be allowed to commit and that

before a search finishes, we cannot start another one. In practice,

these are unlikely to cause any slowdown: with a reasonable band-

width, the checking of the BNLQ can easily outpace the retirement

of instructions. If no address match is found, the latency of the

search should have little if any impact on the performance. Even if

a match is found, the delay in commencing the squash is not as costly

as it appears: every cycle we push back the squash, we are saving

a few independent loads (and other instructions in between) from

being squashed unnecessarily. The occupancy of the BNLQ is not

a concern either as an EBF hit (which triggers a BNLQ search) is

very rare.
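A back-of-the-envelope estimate of the search latency follows directly from the read bandwidth. The sketch assumes one address read per bank per cycle; the bank count used in the example (4) is a hypothetical value, not a parameter given in the paper.

```python
import math


def search_cycles(occupancy: int, banks: int) -> int:
    """Cycles to read out every load address, at one read per bank per cycle."""
    if banks <= 0:
        raise ValueError("banks must be positive")
    return math.ceil(occupancy / banks)


# Example: a full 48-entry BNLQ with 4 banks (hypothetical bank count).
full_bnlq_cycles = search_cycles(48, 4)
```

Under these assumptions a full 48-entry BNLQ is scanned in 12 cycles, consistent with the "tens of cycles" estimate above.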

3 Experimental Framework

We evaluate our proposed load-store queue design on a simulated,

generic out-of-order processor. The main parameters used in the

simulations as well as the applications used from SPEC CPU2000

suite are summarized in Table 2. As the evaluation tool, we use a

heavily modified version of SimpleScalar [4] that incorporates our

LQ-SQ model and a Wattch framework [3] that models the energy

consumption throughout the processor. Profiling is performed using

the train inputs from the SPEC CPU2000 distribution, whereas the

production runs are performed using the ref inputs and single SimPoint

regions [17] of one hundred million instructions.

| Component | Configuration |
|---|---|
| Processor | 8-issue out-of-order processor |
| Register file | 256 INT physical registers, 256 FP physical registers |
| Functional units | 4 INT ALUs, 2 INT Mult-Dividers, 3 FP Adders, 1 FP Mult-Divider |
| Branch predictor | Combined; Bimodal Predictor: 8K entries; 2-level: 8K entries, 13 bits history size; Meta-Table: 8K entries; BTB: 4K entries; RAS: 32 entries |
| Queues | INT-Queue: 128 entries, FP-Queue: 128 entries |
| L1 data cache | 32KB, 4 way, LRU, latency = 3 cycles |
| L2 data cache | 2MB, 8 way, LRU, latency = 12 cycles |
| L1 instruction cache | 64KB, 2 way, LRU, latency = 2 cycles |
| Memory access | 100 cycles |
| Baseline LQ-SQ | LQ: 80 entries; SQ: 48 entries |
| Proposed LQ-SQ | BNLQ-ALQ: 32-48, 40-40, 48-32, 56-24; SQ: 48 entries; EBF: 4K entries (4 bits per entry) |
| SPEC CPU2000 integer applications | bzip2, crafty, eon, gap, gzip, parser, twolf, vpr |
| SPEC CPU2000 FP applications | applu, apsi, art, facerec, fma3d, galgel, mesa, mgrid, sixtrack, wupwise |

Table 2. Simulation parameters.

4 Evaluation

4.1 Main Results

We first present a broad-brush comparison of our LQ-SQ design

versus the conventional design. For easier analysis, we keep the

overall capacity of the LQ the same in all configurations. Note that

this favors the conventional design to a large degree, as an important benefit of our design is the scalability of the BNLQ, which allows the processor to buffer more in-flight instructions to better exploit long-range ILP. Due to the tremendous amount of data, in many figures, we only show suite-wide averages. Recall that in our LQ-SQ mechanism, a baseline design treats an EBF false hit just the same as a true dependence, whereas a more advanced design sequentially searches the BNLQ upon an EBF hit and ignores a false hit. For notational convenience, we refer to these two options as A and B respectively.

Figure 3. Performance and energy impact of proposed LQ-SQ design using a profiling-based predictor (a) or using a dynamic predictor (b). In all configurations, the combined capacity of the BNLQ and the ALQ is 80, the same as that of the LQ in the baseline conventional system. In option A, a false EBF hit is handled just like a true dependence, whereas in option B, a false EBF hit is detected through sequential searching of BNLQ and ignored.

Figure 4. Performance and energy impact of the proposed LQ-SQ design on individual applications. The configuration shown uses a dynamic predictor and does not squash due to a false EBF hit (the “B” option). The size of the BNLQ and ALQ is 48 and 32, respectively.

We use the conventional design as the baseline of the compar-

ison and show the slowdown, energy savings in the LQ-SQ, and

processor-wide energy savings of our designs. In Figure 3-(a), we

show the results of using a profiling-based dependency predictor

and in Figure 3-(b), we show those of using a dynamic dependency

predictor. In all cases, we explore different distributions of the total

LQ capacity into the ALQ and the BNLQ.

From this figure, we can make a few observations. First, our

design does achieve the goal of reducing energy consumption of

the dynamic memory disambiguation mechanism without inducing

much performance penalty. We see that the average performance

degradation is about 1% in most configurations and that the energy

savings range between 35% and 52% of total LQ-SQ energy con-

sumption. Since energy is dissipated diversely throughout the pro-

cessor, the total energy savings for the entire processor (factoring

in the energy waste due to the increased execution time) are much

less significant. Nevertheless, the design makes net energy savings

in the processor.

Second, as we reduce the ALQ size, the energy consumption

in the LQ-SQ continues to decrease. However, the slowdown also

increases due to the more frequent stalls when the ALQ is full.

This increased performance degradation incurs global energy waste

which can negate the energy saved in the LQ-SQ. Indeed, although

infrequent, memory-based dependences still exist and require effi-

cient handling. When the resource is not sufficiently provided, the

machine becomes unbalanced and thus inefficient. We have performed

some experiments using a degenerate configuration without

an ALQ. In this case, the average slowdown is about 19% and

the energy consumption of the whole processor increases by about

9% (over the baseline conventional system) even though the LQ-SQ

energy is reduced.

Third, on balance, the profile-based predictor is slightly better.

This is largely because, with meticulous tuning, the predictor is

able to mark loads that occasionally communicate with an in-flight

store as independent. These loads do not increase the pressure on

the ALQ. In contrast, with the dynamic predictor, these loads are

marked as dependent reactively after the squash, not avoiding the

penalty, and increasing the pressure on the ALQ until a refresh.

Nevertheless, the difference in the results is insignificant and prob-

ably does not justify the higher implementation cost of the profiling

and the ISA support.

Fourth, special handling of EBF false hits (option B) is effective.

As fewer loads are moved to the ALQ, the energy savings in the

LQ-SQ increase by a few percent. More importantly, the reduction

in ALQ pressure reduces the frequency of stalls due to a full ALQ

and thus the performance penalty. This is especially apparent when

the size of the ALQ is small. For example, in the configuration

with the dynamic predictor and a 24-entry ALQ, the performance

penalty of using option B is about 1.5%, half as much as the 3%
