...PAID policy [7] and MARS [8] consider both spatial and temporal behavior; MARS even accounts for updates to data....

[...]

...For example, in applications where client queries exhibit high locality, Pr(di ∈ query) may take the form of a Zipf distribution [9]....
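A Zipf-shaped query distribution gives the object at popularity rank r a probability proportional to 1/r^θ. The sketch below is a minimal illustration that assumes objects are already ordered by popularity. The function name `zipf_probabilities` and the skew parameter `theta` are hypothetical and are not taken from the cited work.

```python
def zipf_probabilities(n: int, theta: float = 1.0) -> list[float]:
    """Return Pr(rank r is queried) for ranks 1..n under a Zipf law with skew theta.

    Hypothetical helper: assumes objects are ranked from most to least popular.
    """
    weights = [1.0 / (r ** theta) for r in range(1, n + 1)]  # unnormalised 1/r^theta
    total = sum(weights)
    return [w / total for w in weights]  # normalise so the probabilities sum to 1

probs = zipf_probabilities(4)
print([round(p, 3) for p in probs])
```

A larger `theta` concentrates more of the queries on the top-ranked objects, which corresponds to higher access locality.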

[...]

...Cache replacement policies such as LRU, LFU and LRU-K [7] only take into account the temporal characteristics of data access, while policies such as FAR [2] only deal with the location-dependent aspect of cache replacement and neglect its temporal properties....
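To make "only temporal characteristics" concrete, here is a minimal LRU sketch built on Python's `collections.OrderedDict`. Its eviction decision uses access recency alone and never looks at where a client or a data object is located. The class name `LRUCache` is illustrative and does not come from any of the cited policies.

```python
from collections import OrderedDict

class LRUCache:
    """Evicts the least recently used key; only access recency is considered."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._items = OrderedDict()  # oldest access first, newest access last

    def get(self, key):
        if key not in self._items:
            return None  # cache miss
        self._items.move_to_end(key)  # mark as most recently used
        return self._items[key]

    def put(self, key, value) -> None:
        if key in self._items:
            self._items.move_to_end(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            self._items.popitem(last=False)  # drop the least recently used entry

cache = LRUCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")     # "a" becomes the most recently used entry
cache.put("c", 3)  # evicts "b", the least recently used entry
```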

[...]

...1) Temporal Locality Based Cache Replacement Policies: There are many existing temporal-based cache replacement strategies, including LRU, LFU and LRU-K [7]....

[...]

...PAID (Probability Area Inverse Distance) [6] is an extended version of PA that additionally takes the client's distance to the data into account....

[...]

...Results from [6] show that both PA and PAID perform better than other existing schemes such as LRU and FAR....

[...]

...[5], [6]) use cost functions to incorporate different factors including access frequency, update rate, size of objects....

[...]

...PA (Probability Area) [6] is a cost-based replacement policy, where each cached object is associated with a cost calculated with a predefined cost function....
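The sketch below illustrates cost-based replacement in the style of PA and PAID. The cost functions themselves are not given in this text, so the forms used here are assumptions. PA is taken as access probability × valid-scope area ÷ object size, and PAID as that same cost further divided by the client's distance to the object. The sketch also assumes that the lowest-cost object is evicted. All names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class CachedObject:
    name: str
    access_prob: float  # estimated access probability (assumed input)
    scope_area: float   # area of the object's valid scope
    size: float         # object size
    distance: float     # client's distance to the valid scope (used by PAID only)

def pa_cost(obj: CachedObject) -> float:
    # Assumed PA form: access probability * valid-scope area / size
    return obj.access_prob * obj.scope_area / obj.size

def paid_cost(obj: CachedObject) -> float:
    # Assumed PAID form: the PA cost further divided by distance;
    # the small floor on distance is our own guard against division by zero
    return pa_cost(obj) / max(obj.distance, 1e-9)

def choose_victim(objects, cost_fn):
    # Evict the cached object whose cost is lowest (assumed eviction rule)
    return min(objects, key=cost_fn)

cache = [
    CachedObject("near", 0.2, 100.0, 1.0, 1.0),
    CachedObject("far", 0.2, 100.0, 1.0, 50.0),
    CachedObject("rare", 0.05, 100.0, 1.0, 1.0),
]
print(choose_victim(cache, pa_cost).name)    # rare
print(choose_victim(cache, paid_cost).name)  # far
```

The two policies pick different victims here. Under the assumed PA cost, the rarely accessed object is evicted. Under the assumed PAID cost, the distant object is evicted instead, even though it is accessed as often as the nearby one.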

[...]

...A more advanced semantic-based location model was proposed in [8], where attributes of data objects are either location-related or non-location-related....

[...]

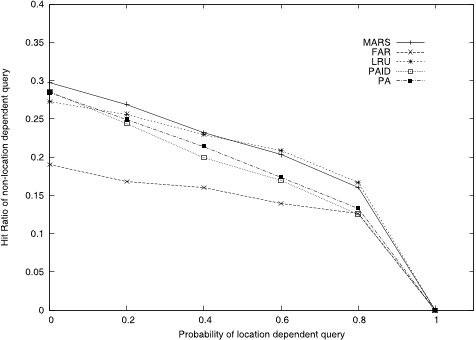

When the percentage of location-dependent queries is low (20%), MARS, FAR and PAID achieve a hit ratio of 30% on location-dependent queries, compared to 20% for LRU and PA.

It is important for cache replacement policies to adapt dynamically to changes in access locality so that a high cache hit ratio is maintained.

In this paper, the authors present a mobility-aware cache replacement policy that efficiently supports mobile clients using location-dependent information services.

By anticipating clients’ future location when making cache replacement decisions, MARS is able to maintain good performance even for clients travelling at high speed.

Test results show that MARS provides efficient cache replacement for mobile clients and achieves a 20% improvement in cache hit ratio over existing replacement policies.

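The temporal score, $\mathrm{score}_{\mathrm{temp}}(i)$, is used in the MARS replacement cost function to capture the temporal locality of data access. The authors define the temporal score of a data object $d_i$ as:

$$\mathrm{score}_{\mathrm{temp}}(i) = \frac{t_{\mathrm{current}} - t_{u,i}}{t_{\mathrm{current}} - t_{q,i}} \times \frac{\lambda_i}{\mu_i} \qquad (4)$$

where $\lambda_i = \lambda$.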
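Equation 4 translates directly into a small function. The symbols are not defined in this excerpt, so the sketch assumes the following readings: $t_{u,i}$ is the time of the last update to $d_i$, $t_{q,i}$ is the time of its last query, $\lambda_i$ is its query rate and $\mu_i$ is its update rate. The function and parameter names are hypothetical.

```python
def temporal_score(t_current: float, t_update: float, t_query: float,
                   query_rate: float, update_rate: float) -> float:
    """Eq. (4): (t_current - t_u,i) / (t_current - t_q,i) * (lambda_i / mu_i).

    Assumed meanings (not stated in this excerpt): t_update = t_u,i, the last
    update time; t_query = t_q,i, the last query time; query_rate = lambda_i;
    update_rate = mu_i.
    """
    if t_current <= t_query or update_rate <= 0:
        raise ValueError("requires t_current > t_query and update_rate > 0")
    return (t_current - t_update) / (t_current - t_query) * (query_rate / update_rate)

score = temporal_score(100.0, 40.0, 90.0, 2.0, 0.5)
print(score)  # 24.0
```

Under these readings, an object that was queried recently, has not been updated for a long time, and is queried often relative to how often it changes receives a high temporal score.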

The probability of the client querying an object whose valid scope has its center reference point at $L_i$ is:

$$\Pr(i) = \frac{1}{|L_m - L_i|} \times \frac{1}{\sum_{j \in N} \frac{1}{|L_m - L_j|}} \qquad (6)$$

Based on the definition in Equation 6, queries are distributed among data objects in inverse proportion to their distance from the client's current location.
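Equation 6 can be sketched as follows. The code normalises inverse distances to obtain query probabilities and then draws one query from them. It assumes that $|L_m - L_j|$ is the Euclidean distance and that no reference point coincides with the client location, since a zero distance would divide by zero. The names `query_probabilities` and `sample_query` are hypothetical.

```python
import math
import random

def query_probabilities(client, refs):
    """Eq. (6): Pr(i) proportional to 1/|L_m - L_i|, normalised over all j in N.

    client is L_m and refs are the reference points L_j; distances are Euclidean.
    """
    inverse = [1.0 / math.dist(client, ref) for ref in refs]
    total = sum(inverse)
    return [w / total for w in inverse]

def sample_query(client, refs, rng):
    """Draw the index of the next queried object using the Eq. (6) probabilities."""
    probs = query_probabilities(client, refs)
    return rng.choices(range(len(refs)), weights=probs, k=1)[0]

refs = [(1.0, 0.0), (2.0, 0.0), (4.0, 0.0)]
probs = query_probabilities((0.0, 0.0), refs)
print([round(p, 4) for p in probs])  # [0.5714, 0.2857, 0.1429], i.e. 4/7, 2/7, 1/7
idx = sample_query((0.0, 0.0), refs, random.Random(1))
```

In this example, doubling the distance halves an object's relative chance of being queried.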

When the probability of location dependent queries is high, client caches are filled with information relevant to the clients’ current location, resulting in more queries being satisfied by the cache.

To model the utilisation of location-dependent services, percentLDQ% of the queries issued by clients are location-dependent and the remaining (100 − percentLDQ)% are non-location-dependent.
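The query mix can be simulated by labelling each query independently. A query is location-dependent with probability percentLDQ/100 and non-location-dependent otherwise. This is a minimal sketch. The function name, the `"LDQ"`/`"NLDQ"` labels and the fixed seed are illustrative assumptions and are not taken from the authors' simulator.

```python
import random

def generate_query_types(n: int, percent_ldq: float, seed: int = 0) -> list[str]:
    """Label each of n queries "LDQ" with probability percent_ldq/100, else "NLDQ".

    Hypothetical helper; a seeded generator keeps runs reproducible.
    """
    rng = random.Random(seed)
    return ["LDQ" if rng.random() < percent_ldq / 100 else "NLDQ" for _ in range(n)]

types = generate_query_types(10_000, 20)
print(types.count("LDQ") / len(types))  # roughly 0.2
```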

At a high location-dependent query probability, LRU performs slightly better than MARS because MARS uses more cache space to store objects obtained from location-dependent queries, which reduces the number of objects cached from non-location-dependent queries.

The cost of replacing an object $d_i$ in client $m$'s cache is calculated with the following equation:

$$\mathrm{cost}(i) = \mathrm{score}_{\mathrm{temp}}(i) \times \mathrm{score}_{\mathrm{spat}}(i) \times c_i \qquad (1)$$

where $\mathrm{score}_{\mathrm{temp}}(i)$ is the temporal score of the object, $\mathrm{score}_{\mathrm{spat}}(i)$ is its spatial score, and $c_i$ is the cost of retrieving the object from the remote server.
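Equation 1 multiplies three factors, so an object is cheap to replace if any one of them is small. The sketch below computes that cost and picks an eviction victim. It assumes that the object with the lowest cost is the one replaced, which is not stated explicitly here. The spatial score is taken as a given input because this excerpt does not define it, and the function names are hypothetical.

```python
def replacement_cost(score_temp: float, score_spat: float, retrieval_cost: float) -> float:
    """Eq. (1): cost(i) = score_temp(i) * score_spat(i) * c_i."""
    return score_temp * score_spat * retrieval_cost

def pick_victim(entries: dict[str, tuple[float, float, float]]) -> str:
    """Return the cached object with the lowest replacement cost.

    Assumption: the lowest-cost object is evicted.
    entries maps an object id to (score_temp, score_spat, c_i).
    """
    return min(entries, key=lambda name: replacement_cost(*entries[name]))

entries = {
    "a": (2.0, 0.5, 1.0),  # cost 1.0
    "b": (1.0, 0.1, 3.0),  # cost about 0.3
    "c": (0.5, 0.9, 4.0),  # cost 1.8
}
print(pick_victim(entries))  # b
```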

The graph in Figure 5 shows that the mobility-aware cache replacement policies perform significantly better than the temporal-based policy (LRU) on location-dependent queries.

For example, given a client located at $(x_1, y_1)$ and a data object located at $(x_2, y_2)$, the distance between the client and the object is $\sqrt{|x_1 - x_2|^2 + |y_1 - y_2|^2}$.
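As a worked instance of this distance formula, a client at (1, 2) and an object at (4, 6) are $\sqrt{3^2 + 4^2} = 5$ units apart. The helper name below is illustrative. Python's standard `math.hypot` computes the same value.

```python
import math

def euclidean_distance(p, q):
    """Distance between client p = (x1, y1) and object q = (x2, y2)."""
    (x1, y1), (x2, y2) = p, q
    return math.sqrt((x1 - x2) ** 2 + (y1 - y2) ** 2)

print(euclidean_distance((1, 2), (4, 6)))  # 5.0
```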