LOGIC JOURNAL of the IGPL

Volume 22 • Issue 1 • February 2014



www.oup.co.uk/igpl


EDITORS-IN-CHIEF

A. Amir

D. M. Gabbay

G. Gottlob

R. de Queiroz

J. Siekmann

EXECUTIVE EDITORS

M. J. Gabbay

O. Rodrigues

J. Spurr

INTEREST GROUP IN PURE AND APPLIED LOGICS

ISSN PRINT 1367-0751

ISSN ONLINE 1368-9894


Logical Information Theory:

New Logical Foundations for Information Theory

[Forthcoming in: Logic Journal of the IGPL]

David Ellerman

Philosophy Department,

University of California at Riverside

June 7, 2017

Abstract

There is a new theory of information based on logic. The definition of Shannon entropy as well as the notions of joint, conditional, and mutual entropy as defined by Shannon can all be derived by a uniform transformation from the corresponding formulas of logical information theory. Information is first defined in terms of sets of distinctions without using any probability measure. When a probability measure is introduced, the logical entropies are simply the values of the (product) probability measure on the sets of distinctions. The compound notions of joint, conditional, and mutual entropies are obtained as the values of the measure, respectively, on the union, difference, and intersection of the sets of distinctions. These compound notions of logical entropy satisfy the usual Venn diagram relationships (e.g., inclusion-exclusion formulas) since they are values of a measure (in the sense of measure theory). The uniform transformation into the formulas for Shannon entropy is linear so it explains the long-noted fact that the Shannon formulas satisfy the Venn diagram relations–as an analogy or mnemonic–since Shannon entropy is not a measure (in the sense of measure theory) on a given set.

What is the logic that gives rise to logical information theory? Partitions are dual (in a category-theoretic sense) to subsets, and the logic of partitions was recently developed in a dual/parallel relationship to the Boolean logic of subsets (the latter being usually mis-specified as the special case of “propositional logic”). Boole developed logical probability theory as the normalized counting measure on subsets. Similarly the normalized counting measure on partitions is logical entropy–when the partitions are represented as the set of distinctions that is the complement to the equivalence relation for the partition.

In this manner, logical information theory provides the set-theoretic and measure-theoretic foundations for information theory. The Shannon theory is then derived by the transformation that replaces the counting of distinctions with the counting of the number of binary partitions (bits) it takes, on average, to make the same distinctions by uniquely encoding the distinct elements–which is why the Shannon theory perfectly dovetails into coding and communications theory.

Key words: partition logic, logical entropy, Shannon entropy

Contents

1 Introduction
2 Logical information as the measure of distinctions
3 Duality of subsets and partitions
4 Classical subset logic and partition logic
5 Classical logical probability and logical entropy
6 Entropy as a measure of information
7 The dit-bit transform
8 Information algebras and joint distributions
9 Conditional entropies
    9.1 Logical conditional entropy
    9.2 Shannon conditional entropy
10 Mutual information
    10.1 Logical mutual information
    10.2 Shannon mutual information
11 Independent Joint Distributions
12 Cross-entropies and divergences
13 Summary of formulas and dit-bit transforms
14 Entropies for multivariate joint distributions
15 Logical entropy and some related notions
16 The statistical interpretation of Shannon entropy
17 Concluding remarks

1 Introduction

This paper develops the logical theory of information-as-distinctions. It can be seen as the application

of the logic of partitions [15] to information theory. Partitions are dual (in a category-theoretic sense)

to subsets. George Boole developed the notion of logical probability [7] as the normalized counting

measure on subsets in his logic of subsets. This paper develops the normalized counting measure on

partitions as the analogous quantitative treatment in the logic of partitions. The resulting measure is

a new logical derivation of an old formula measuring diversity and distinctions, e.g., Corrado Gini’s

index of mutability or diversity [19], that goes back to the early 20th century. In view of the idea

of information as being based on distinctions (see next section), I refer to this logical measure of

distinctions as "logical entropy".

This raises the question of the relationship of logical entropy to Claude Shannon’s entropy

([40]; [41]). The entropies are closely related since they are both ultimately based on the concept

of information-as-distinctions–but they represent two different ways to quantify distinctions. Logical

entropy directly counts the distinctions (as defined in partition logic) whereas Shannon entropy, in

effect, counts the minimum number of binary partitions (or yes/no questions) it takes, on average,

to uniquely determine or designate the distinct entities. Since that gives (in standard examples) a

binary code for the distinct entities, the Shannon theory is perfectly adapted for applications to the

theory of coding and communications.


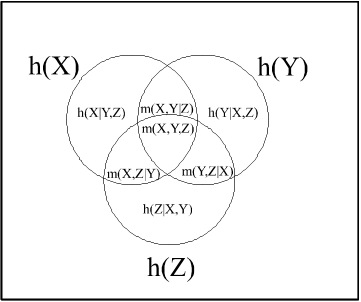

The logical theory and the Shannon theory are also related in their compound notions of joint

entropy, conditional entropy, and mutual information. Logical entropy is a measure in the math-

ematical sense, so as with any measure, the compound formulas satisfy the usual Venn-diagram

relationships. The compound notions of Shannon entropy were defined so that they also satisfy

similar Venn diagram relationships. However, as various information theorists, principally Lorne

Campbell, have noted [9], Shannon entropy is not a measure (outside of the standard example of $2^n$

equiprobable distinct entities where it is the count $n$ of the number of yes/no questions necessary to

uniquely determine or encode the distinct entities)–so one can conclude only that the "analogies

provide a convenient mnemonic" [9, p. 112] in terms of the usual Venn diagrams for measures. Campbell

wondered if there might be a "deeper foundation" [9, p. 112] to clarify how the Shannon formulas

can be defined to satisfy the measure-like relations in spite of not being a measure. That question is

addressed in this paper by showing that there is a transformation of formulas that transforms each of

the logical entropy compound formulas into the corresponding Shannon entropy compound formula,

and the transform preserves the Venn diagram relationships that automatically hold for measures.

This "dit-bit transform" is heuristically motivated by showing how average counts of distinctions

("dits") can be converted into average counts of binary partitions ("bits").
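As a hedged preview of that transform, the two entropies of a probability distribution $p = (p_1, \ldots, p_m)$ can be written side by side. The notation $h$, $H$, and $p_i$ is an assumption of this sketch, and the formulas themselves are developed in the later sections. Logical entropy averages the dit-count $1 - p_i$, Shannon entropy averages the bit-count $\log_2(1/p_i)$, and the transform replaces the one by the other term by term:

$$
h(p) = \sum_{i} p_i \,(1 - p_i) = 1 - \sum_{i} p_i^2
\quad\longmapsto\quad
H(p) = \sum_{i} p_i \log_2 \frac{1}{p_i}.
$$

In the standard example of $2^n$ equiprobable entities, $p_i = 2^{-n}$ for every $i$, so $h(p) = 1 - 2^{-n}$ while $H(p) = n$.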

Moreover, Campbell remarked that it would be "particularly interesting" and "quite significant"

if there was an entropy measure of sets so that joint entropy corresponded to the measure of the

union of sets, conditional entropy to the difference of sets, and mutual information to the intersection

of sets [9, p. 113]. Logical entropy precisely satisfies those requirements.
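The set-theoretic reading of Campbell's requirements can be checked on a small example. The following is a minimal sketch, not the paper's own code: the joint distribution `p`, the helper `product_measure`, and the names `dit_x` and `dit_y` are all hypothetical, chosen only for illustration. A distinction of X is taken to be an ordered pair of points of X × Y that differ in the x-coordinate, and likewise for Y.

```python
from fractions import Fraction
from itertools import product


def product_measure(pairs, p):
    """Product probability measure of a set of ordered pairs of points."""
    return sum(p[z] * p[w] for z, w in pairs)


# Hypothetical joint distribution p(x, y) on X x Y (values sum to 1).
p = {
    ("a", 0): Fraction(1, 2),
    ("a", 1): Fraction(1, 4),
    ("b", 0): Fraction(0),
    ("b", 1): Fraction(1, 4),
}

points = list(p)
all_pairs = list(product(points, repeat=2))

# Sets of distinctions: ordered pairs that differ in x, respectively in y.
dit_x = {(z, w) for z, w in all_pairs if z[0] != w[0]}
dit_y = {(z, w) for z, w in all_pairs if z[1] != w[1]}

h_x = product_measure(dit_x, p)                  # logical entropy of X
h_y = product_measure(dit_y, p)                  # logical entropy of Y
h_joint = product_measure(dit_x | dit_y, p)      # joint: union
h_x_given_y = product_measure(dit_x - dit_y, p)  # conditional: difference
m_xy = product_measure(dit_x & dit_y, p)         # mutual: intersection

# Inclusion-exclusion holds because these are values of one measure.
assert h_joint == h_x + h_y - m_xy
assert h_x_given_y == h_joint - h_y
```

Exact rational arithmetic (`Fraction`) is used so that the Venn diagram identities can be asserted with equality rather than up to rounding.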

2 Logical information as the measure of distinctions

There is now a widespread view that information is fundamentally about differences, distinguishability,

and distinctions. As Charles H. Bennett, one of the founders of quantum information theory,

put it:

So information really is a very useful abstraction. It is the notion of distinguishability

abstracted away from what we are distinguishing, or from the carrier of information. [5,

p. 155]

This view even has an interesting history. In James Gleick’s book, The Information: A History,

A Theory, A Flood, he noted the focus on differences in the seventeenth century polymath, John

Wilkins, who was a founder of the Royal Society. In 1641, the year before Isaac Newton was born,

Wilkins published one of the earliest books on cryptography, Mercury or the Secret and Swift

Messenger, which not only pointed out the fundamental role of differences but noted that any (finite)

set of different things could be encoded by words in a binary code.

For in the general we must note, That whatever is capable of a competent Difference,

perceptible to any Sense, may be a sufficient Means whereby to express the Cogitations.

It is more convenient, indeed, that these Differences should be of as great Variety as the

Letters of the Alphabet; but it is sufficient if they be but twofold, because Two alone

may, with somewhat more Labour and Time, be well enough contrived to express all the

rest. [47, Chap. XVII, p. 69]

Wilkins explains that a five letter binary code would be sufficient to code the letters of the alphabet since $2^5 = 32$.

Thus any two Letters or Numbers, suppose A:B. being transposed through five Places,

will yield Thirty Two Differences, and so consequently will superabundantly serve for

the Four and twenty Letters... .[47, Chap. XVII, p. 69]
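Wilkins's counting argument can be illustrated in a few lines. This is only a sketch: the function name `wilkins_code` and the numerical ordering of the code words are assumptions made here, not a reconstruction of Wilkins's own table.

```python
def wilkins_code(index, places=5, symbols="AB"):
    """Encode an index as a word of `places` letters over a two-letter alphabet.

    Hypothetical helper: the assignment of words to indices is plain binary
    counting, not Wilkins's historical table.
    """
    if len(symbols) != 2:
        raise ValueError("exactly two symbols are required")
    if not 0 <= index < 2 ** places:
        raise ValueError("index does not fit in the given number of places")
    bits = format(index, f"0{places}b")  # zero-padded binary numeral
    return "".join(symbols[int(b)] for b in bits)


# Five places give 2**5 = 32 distinct words, more than enough for 24 letters.
codes = [wilkins_code(i) for i in range(24)]
```

Each of the 24 indices receives a distinct five-letter word, and eight of the 32 available words are left unused, which is the sense in which two symbols "superabundantly serve".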


As Gleick noted:

Any difference meant a binary choice. Any binary choice began the expressing of

cogitations. Here, in this arcane and anonymous treatise of 1641, the essential idea of

information theory poked to the surface of human thought, saw its shadow, and disappeared

again for [three] hundred years. [20, p. 161]

Thus counting distinctions [12] would seem the right way to measure information,¹ and that is the measure which emerges naturally out of partition logic–just as finite logical probability emerges naturally as the measure of counting elements in Boole’s subset logic.

Although usually named after the special case of ‘propositional’ logic, the general case is Boole’s logic of subsets of a universe $U$ (the special case of $U = 1$ allows the propositional interpretation since the only subsets are $1$ and $\emptyset$ standing for truth and falsity). Category theory shows there is a duality between sub-sets and quotient-sets (= partitions = equivalence relations), and that allowed the recent development of the dual logic of partitions ([13], [15]). As indicated in the title of his book, An Investigation of the Laws of Thought on which are founded the Mathematical Theories of Logic and Probabilities [7], Boole also developed the normalized counting measure on subsets of a finite universe $U$ which was finite logical probability theory. When the same mathematical notion of the normalized counting measure is applied to the partitions on a finite universe set $U$ (when the partition is represented as the complement of the corresponding equivalence relation on $U \times U$) then the result is the formula for logical entropy.

In addition to the philosophy of information literature [4], there is a whole sub-industry in

mathematics concerned with different notions of ‘entropy’ or ‘information’ ([2]; see [45] for a recent

‘extensive’ analysis) that is long on formulas and ‘intuitive axioms’ but short on interpretations.

Out of that plethora of definitions, logical entropy is the measure (in the technical sense of measure

theory) of information that arises out of partition logic just as logical probability theory arises out

of subset logic.

The logical notion of information-as-distinctions supports the view that the notion of information

is independent of the notion of probability and should be based on finite combinatorics. As Andrei

Kolmogorov put it:

Information theory must precede probability theory, and not be based on it. By the very

essence of this discipline, the foundations of information theory have a finite

combinatorial character. [27, p. 39]

Logical information theory precisely fulfills Kolmogorov’s criterion.² It starts simply with a set of distinctions defined by a partition on a finite set $U$, where a distinction is an ordered pair of elements of $U$ in distinct blocks of the partition. Thus the “finite combinatorial” object is the set of distinctions ("ditset") or information set ("infoset") associated with the partition, i.e., the complement in $U \times U$ of the equivalence relation associated with the partition. To get a quantitative measure of information, any probability distribution on $U$ defines a product probability measure on $U \times U$, and the logical entropy is simply that probability measure of the information set.
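The construction just described can be sketched directly. The code below is a minimal illustration under stated assumptions: the function names `ditset` and `logical_entropy`, the four-element universe, and the two distributions are hypothetical choices made here, not taken from the paper.

```python
from fractions import Fraction
from itertools import product


def ditset(partition):
    """Ordered pairs (u, v) of elements lying in distinct blocks.

    This is the complement in U x U of the partition's equivalence relation.
    """
    block_of = {u: i for i, block in enumerate(partition) for u in block}
    return {
        (u, v)
        for u, v in product(block_of, repeat=2)
        if block_of[u] != block_of[v]
    }


def logical_entropy(partition, p):
    """Product probability measure p x p of the ditset of the partition."""
    return sum(p[u] * p[v] for u, v in ditset(partition))


# Hypothetical example: U = {a, b, c, d} with blocks {a, b}, {c}, {d}.
partition = [{"a", "b"}, {"c"}, {"d"}]

# Equiprobable points: the measure is the normalized count |dit| / |U x U|.
uniform = {u: Fraction(1, 4) for u in "abcd"}
h_uniform = logical_entropy(partition, uniform)

# Any other distribution on U gives another product measure on U x U.
skewed = {
    "a": Fraction(1, 2),
    "b": Fraction(1, 4),
    "c": Fraction(1, 8),
    "d": Fraction(1, 8),
}
h_skewed = logical_entropy(partition, skewed)
```

The ditset is built before any probabilities are mentioned, which mirrors the order of the definitions in the text: the combinatorial object comes first and the measure is applied to it afterwards. In the equiprobable case the ditset has 10 of the 16 ordered pairs, so the logical entropy is 10/16.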

3 Duality of subsets and partitions

Logical entropy is to the logic of partitions as logical probability is to the Boolean logic of sub-

sets. Hence we will start with a brief review of the relationship between these two dual forms of

¹ This paper is about what Adriaans and van Benthem call "Information B: Probabilistic, information-theoretic, measured quantitatively", not about "Information A: knowledge, logic, what is conveyed in informative answers" where the connection to philosophy and logic is built-in from the beginning. Likewise, the paper is not about Kolmogorov-style "Information C: Algorithmic, code compression, measured quantitatively." [4, p. 11]

² Kolmogorov had something else in mind such as a combinatorial development of Hartley’s $\log(n)$ on a set of $n$ equiprobable elements. [28]
