LOW-RANK DATA MATRIX RECOVERY WITH MISSING VALUES AND FAULTY SENSORS

Roberto L

´

opez-Valcarce

∗

Universidade de Vigo, Spain

Josep Sala-Alvarez

†

Universitat Polit

`

ecnica de Catalunya, Spain

ABSTRACT

In practice, data gathered by wireless sensor networks often

belongs in a low-dimensional subspace, but it can present

missing as well as corrupted values due to sensor malfunc-

tioning and/or malicious attacks. We study the problem

of Maximum Likelihood estimation of the low-rank factors

of the underlying structure in such situation, and develop

an Expectation-Maximization algorithm to this purpose, to-

gether with an effective initialization scheme. The proposed

method outperforms previous schemes based on an initial

faulty sensor identification stage, and is competitive in terms

of complexity and performance with convex optimization-

based matrix completion approaches.

Index Terms— Low-rank approximation, matrix comple-

tion, outliers, faulty sensors, wireless sensor networks.

1. INTRODUCTION

The fact that many high-dimensional data structures can be

accurately represented as lying in a low-dimensional sub-

space has spurred a number of approaches to exploit such

low-rank structure [1]. In particular, in wireless sensor net-

works (WSNs), the measurements taken by K sensors over

N sensing slots can be organized into a K × N data matrix,

which in many practical applications will be close to having

rank r min{K, N}. This is because environmental data

usually exhibits strong correlation across space and time [2].

When applied to WSNs, matrix completion techniques [1, 3],

which exploit this property in order to recover the whole data

matrix from a subset of its samples, may result in significant

savings in sensing and communication [4, 5]. Missing data

may also occur if a transmission from a sensor to the Fusion

Center (FC) is affected by a deep channel fade.

WSNs consist of low-cost, battery-powered devices often

operating in harsh environments, and therefore prone to sen-

sor malfunction [6, 7], making data generated by faulty sen-

sors unreliable. Additionally, sensors are usually unattended,

∗

Supported by Agencia Estatal de Investigaci

´

on (Spain) and the European

Regional Development Fund (ERDF) under project WINTER (TEC2016-

76409-C2-2-R), and by Xunta de Galicia (Agrupaci

´

on Estrat

´

exica Conso-

lidada de Galicia accreditation 2016-2019).

†

Supported by Agencia Estatal de Investigaci

´

on (Spain) and ERDF under

project WINTER (TEC2016-76409-C2-1-R), and the Catalan administration

(AGAUR) under 2017 SGR 578.

which makes them vulnerable to data manipulation by mali-

cious external agents [8,9]. Regardless of its origin, corrupted

samples may significantly degrade data analysis at the FC.

In this paper we investigate algorithms that estimate the

low-rank factors of the underlying data matrix in the presence

of both missing data and unreliable sensors. In particular, we

adopt a non-informative model under which faulty sensors,

whose number and identities are not known, produce i.i.d.

data samples uniformly distributed in some unknown interval.

In this setting, Maximum Likelihood (ML) estimators are at-

tractive because of their good asymptotic performance prop-

erties [15]. The presence of hidden variables (i.e., missing

data, identities of faulty sensors) precludes a closed-form so-

lution for the ML estimators and motivates the application of

the Expectation-Maximization (E-M) algorithm [16], which

iteratively refines the estimates of the low-rank factors as well

as a posteriori probabilities of individual sensor faults.

Matrix completion methods have become increasingly

popular in applications in which a low-rank matrix is to be

recovered from a limited number of observations. Typically,

they seek to minimize the nuclear norm (which is a convex

surrogate of the rank) under some constraint on the fidelity

of the reconstruction to account for missing data and/or mea-

surement noise [17,18]. When the underlying rank is assumed

known, non-convex approaches based on the so-called Burer-

Monteiro factorization [19] are also available [20, 21]. In the

presence of corrupted data, convex minimization techniques

have also been proposed by exploiting the fact that such

anomalous data can be assumed to be sparse [22–24]. Many

of these methods enjoy theoretical performance guarantees

under suitable conditions [1, 3]; nevertheless, they invariably

include regularization terms whose parameters need appro-

priate tuning. This is not the case with the proposed E-M

approach, which iteratively estimates all unknown param-

eters; in addition, simulation results show that E-M may

outperform sparsity-based methods even with optimal tuning.

2. PROBLEM STATEMENT

Consider a network of K devices collecting data from their

environment. Each device, say sensor i, gathers N data points

{y

ij

, j = 1, . . . , N}, to be collected at the FC in the K × N

data matrix Y . It is assumed that the underlying physical

phenomenon gives rise to a matrix LR having low rank r

© 2019 IEEE. Personal use of this material is permitted. Permission from IEEE must be obtained for all other uses, in any current or future

media, including reprinting/republishing this material for advertising or promotional purposes,creating new collective works, for resale or

redistribution to servers or lists, or reuse of any copyrighted component of this work in other works. DOI 10.23919/EUSIPCO.2019.8903117

min{K, N}, with L ∈ R

K×r

and R ∈ R

r×N

. Measure-

ments are noisy, so that under ideal conditions the data matrix

would be given by LR + N , where N is a noise matrix with

i.i.d. zero-mean Gaussian entries with variance σ

2

. However,

a number of sensors may be malfunctioning, in which case

their collected data do not adhere to the above model. Thus,

let a

i

= 0 if sensor i is faulty, and a

i

= 1 otherwise, and

collect these in a =

a

1

· · · a

K

T

. Whether a given

device is faulty or not is unknown at the FC. We assume that

sensor faults take place randomly and independently, with the

same a priori probability q for all devices, i.e., the a

i

’s are

i.i.d. Bernoulli random variables with Pr{a

i

= 0} = q. We

adopt a non-informative model under which the readings from

a defective device, say sensor i, are i.i.d. and uniformly dis-

tributed in some unknown interval [A

i

, B

i

].

Due to channel fading, device battery outages, etc., a sub-

set of the observations is missing at the FC. We denote by

Ω

i

the set of pairs (i, j) for which y

ij

is available, with car-

dinality |Ω

i

| = N

i

, and define Ω = Ω

1

∪ · · · ∪ Ω

K

, with

|Ω| =

P

K

i=1

N

i

, M. Then, arranging the available data in

the vector y ∈ R

M

, the data model can be expressed as

y = P

Ω

(A(LR + N ) + (I − A)W ) , (1)

where P

Ω

(X) extracts the entries of X with indices in Ω,

A , diag{a}, and W is a random matrix whose i-th row

entries are i.i.d., uniformly distributed in [A

i

, B

i

].

Our goal is to estimate the low-rank factors L, R given

y and Ω. If all sensors were working properly, and in the

absence of missing data, the ML estimates should minimize

kY − LRk

2

F

, where Y = unvec(y). By the Eckart-Young

theorem [10], these can be readily obtained from the SVD of

Y as

ˆ

L = U B

−1

and

ˆ

R = BSV

T

, where S is diagonal

with the r largest singular values, the columns of U, V com-

prise the corresponding left and right singular vectors, and

B is r × r invertible but otherwise arbitrary. However, with

(unknown) faulty sensors and missing data, the ML estimates

cannot be obtained in closed form.

3. E-M ALGORITHM DERIVATION

The E-M algorithm provides a computationally efficient

means to iteratively seek a maximum of the likelihood func-

tion in the presence of incomplete observations [16]. In

this context, we regard y as the incomplete dataset, and

z = {T , a} as the complete dataset, with T , A(LR +

N) + (I − A)W . The unknown parameters are collected in

θ = {L, R, σ

2

, q, A

1

, . . . , A

K

, B

1

, . . . , B

K

}.

The general form of the E-M algorithm is as follows.

Given the incomplete dataset y and an initial guess

ˆ

θ

0

, at

iteration k one performs the following:

• E-step: Compute the conditional expectation Q(θ;

ˆ

θ

k

) ,

E

z|y

{ln p(z; θ) | y ;

ˆ

θ

k

}, where p(z; θ) is the pdf of z pa-

rameterized by θ.

• M-step: the estimate is updated by maximizing this con-

ditional expectation, i.e.,

ˆ

θ

k+1

= arg max

θ

Q(θ;

ˆ

θ

k

).

3.1. Expectation step

Let t

i

be the i-th column of T . Due to independence, and

using the Bernoulli pdf p(a

i

; θ) = q

1−a

i

(1 − q)

a

i

, one has

p(z; θ) =

K

Y

i=1

p(t

i

, a

i

; θ) =

K

Y

i=1

p(t

i

| a

i

; θ)p(a

i

; θ)

=

K

Y

i=1

[qp

0

(t

i

; θ)]

1−a

i

[(1 − q)p

1

(t

i

; θ)]

a

i

, (2)

where for convenience we denote p

b

(t

i

; θ) , p(t

i

|a

i

= b; θ),

b ∈ {0, 1}. Now let us rewrite Q(θ;

ˆ

θ

k

) as

Q(θ;

ˆ

θ

k

) = E

T ,a|y

{ln p(z; θ) | y;

ˆ

θ

k

}

= E

a|y

n

E

T |a,y

{ln p(z; θ) | a, y;

ˆ

θ} | y;

ˆ

θ

k

o

. (3)

Using (2), the inner conditional expectation in (3) becomes

E

T |a,y

{ln p(z; θ) | a, y;

ˆ

θ

k

} = K ln q +

K

X

i=1

a

i

!

ln

1 − q

q

+

K

X

i=1

(1 − a

i

)E

t

i

|a

i

=0,y

{ln p

0

(t

i

; θ) | a

i

= 0, y ;

ˆ

θ

k

}

+

K

X

i=1

a

i

E

t

i

|a

i

=1,y

{ln p

1

(t

i

; θ) | a

i

= 1, y ;

ˆ

θ

k

}, (4)

since for any binary r.v. a ∈ {0, 1}, one has aE

t|a

{f(t)} =

a[(1 − a)E

t|a=0

{f(t)} + aE

t|a=1

{f(t)}] = aE

t|a=1

{f(t)};

analogously, (1−a)E

t|a

{f(t)} = (1−a)E

t|a=0

{f(t)}. Now,

the entries t

ij

of t

i

are independent under both a

i

= 0 and

a

i

= 1, so that p

b

(t

i

; θ) =

Q

N

j=1

p

b

(t

ij

; θ), b ∈ {0, 1}. Then,

for b ∈ {0, 1},

g

b

i

(y; θ;

ˆ

θ

k

) , E

t

i

|a

i

=b,y

{ln p

b

(t

i

; θ) | a

i

= b, y ;

ˆ

θ

k

}

=

N

X

j=1

E

t

ij

|a

i

=b,y

{ln p

b

(t

ij

; θ) | a

i

= b, y ;

ˆ

θ

k

} (5)

=

X

j∈Ω

i

ln p

b

(y

ij

; θ) +

X

j /∈Ω

i

f

b

ij

(θ;

ˆ

θ

k

), (6)

where f

b

ij

(θ;

ˆ

θ

k

) , E

t

ij

|a

i

=b

{ln p

b

(t

ij

; θ) | a

i

= b;

ˆ

θ

k

}.

Under our model, p

0

(t

ij

; θ) = U(t

ij

; A

i

, B

i

) is a uniform

pdf over [A

i

, B

i

], whereas p

1

(t

ij

; θ) = N (t

ij

; m

ij

, σ

2

) is

a Gaussian pdf with mean m

ij

, (LR)

ij

and variance σ

2

.

Hence, upon defining ˆm

ij,k

, (

ˆ

L

k

ˆ

R

k

)

ij

, it follows that

f

0

ij

(θ;

ˆ

θ

k

) =

− ln(B

i

− A

i

), A

i

≤

ˆ

A

i,k

, B

i

≥

ˆ

B

i,k

,

−∞, else,

(7)

f

1

ij

(θ;

ˆ

θ

k

) = −

1

2

ln 2πσ

2

−

1

2σ

2

[ˆσ

2

k

+ ( ˆm

ij,k

− m

ij

)

2

]. (8)

Thus, letting ˆa

i,k

, E

a

i

|y

{a

i

|y;

ˆ

θ

k

} = Pr{a

i

= 1|y;

ˆ

θ

k

},

Q(θ;

ˆ

θ

k

) = K ln q +

K

X

i=1

ˆa

i,k

!

ln

1 − q

q

+

K

X

i=1

h

(1 − ˆa

i,k

)g

0

i

(y; θ;

ˆ

θ

k

) + ˆa

i,k

g

1

i

(y; θ;

ˆ

θ

k

)

i

. (9)

Note that ˆa

i,k

can be interpreted as the a posteriori probabil-

ity of sesor i being non-defective, given the data y and the

estimate

ˆ

θ

k

. Using Bayes’ theorem, it is obtained as

ˆa

i,k

=

(1 − ˆq

k

)

Q

j∈Ω

i

p

1

(y

ij

;

ˆ

θ

k

)

ˆq

k

Q

j∈Ω

i

p

0

(y

ij

;

ˆ

θ

k

) + (1 − ˆq

k

)

Q

j∈Ω

i

p

1

(y

ij

;

ˆ

θ

k

)

.

(10)

3.2. Maximization step

In order to maximize Q(θ;

ˆ

θ

k

) in (9) w.r.t. θ, we proceed step

by step. First, the optimum value of q is readily found:

ˆq

k+1

= 1 −

1

K

K

X

i=1

ˆa

i,k

. (11)

Second, note that the values of A

i

, B

i

maximizing Q(θ;

ˆ

θ

k

)

are those maximizing g

0

i

(y; θ;

ˆ

θ

k

). This function evaluates to

−∞ unless A

i

≤

ˆ

A

i,k

, B

i

≥

ˆ

B

i,k

, and A

i

≤ y

ij

, B

i

≥ y

ij

∀j ∈ Ω

i

, in which case it evaluates to −N ln(B

i

− A

i

). Sub-

ject to the above constraints, this is maximized at

ˆ

A

i,k+1

=

min{

ˆ

A

i,k

} ∪{y

ij

, j ∈ Ω

i

},

ˆ

B

i,k+1

= max{

ˆ

B

i,k

} ∪{y

ij

, j ∈

Ω

i

}. Therefore, it is clear that we can simply take, for all k,

ˆ

A

i,k

= min

j∈Ω

i

{y

ij

},

ˆ

B

i,k

= max

j∈Ω

i

{y

ij

}. (12)

Third, letting c

i

, 1 −

N

i

N

, the values of L, R maximizing

Q(θ;

ˆ

θ

k

) in (9) are seen to be those minimizing the term

−2

K

X

i=1

ˆa

i,k

g

1

i

(y; θ;

ˆ

θ

k

) = N

K

X

i=1

ˆa

i,k

ln 2πσ

2

+ c

i

ˆσ

2

k

σ

2

+

1

σ

2

K

X

i=1

ˆa

i,k

X

j∈Ω

i

(y

ij

− m

ij

)

2

+

X

j /∈Ω

i

( ˆm

ij,k

− m

ij

)

2

(13)

Only the last term in the right-hand side of (13) depends on

L, R via {m

ij

}. If we define

ˆ

Y

k

∈ R

K×N

with entries

ˆy

ij,k

= y

ij

, j ∈ Ω

i

, ˆy

ij,k

= ˆm

ij,k

, j /∈ Ω

i

, (14)

and the diagonal matrix

ˆ

A

k

= diag{

ˆa

1,k

· · · ˆa

K,k

},

then such term can be rewritten so as to yield

min

L,R

1

σ

2

ˆ

A

1/2

k

(

ˆ

Y

k

− LR)

2

F

, (15)

which is a particular case of the weighted low-rank approx-

imation (WLRA) problem [12]. Although general WLRA

problems do not generally admit closed-form solutions, the

special structure of (15), in which the weighting is on a row-

by-row basis, constitutes an exception, as shown in [13]. This

can be seen by noticing in (15) that

ˆ

A

1/2

k

L and R are the

factors in the (unweighted) rank-r approximation of

ˆ

A

1/2

k

ˆ

Y

k

.

Therefore, we first compute the SVD of

ˆ

A

1/2

k

ˆ

Y

k

and trun-

cate it to its r principal components, say U

k

S

k

V

T

k

, where

U

k

∈ R

K×r

, V

k

∈ R

N×r

, and S

k

is r × r diagonal with the

largest singular values. Then we set

ˆ

L

k+1

=

ˆ

A

−1/2

k

U

k

,

ˆ

R

k+1

= S

k

V

T

k

. (16)

Finally, the value of σ

2

maximizing Q(θ;

ˆ

θ

k

) is the one min-

imizing (13), and is given by

ˆσ

2

k+1

=

1

N

ˆ

A

1/2

k

(

ˆ

Y

k

−

ˆ

L

k+1

ˆ

R

k+1

)

2

F

+ ˆσ

2

k

P

K

i=1

ˆa

i,k

c

i

P

K

i=1

ˆa

i,k

.

(17)

4. INITIALIZATION

Since the E-M iteration may in general converge to a local

maximum of the likelihood function, its initialization is a crit-

ical issue. Next we describe an initialization scheme which

appears to be quite effective. It is based on the PCA-based

“row-structure fault detection algorithm” from [14], a statis-

tical test to check if a given sensor is faulty. Specifically, let

the zero-padded data matrix

e

Y ∈ R

K×N

be given by

e

Y

ij

= y

ij

, (i, j) ∈ Ω,

e

Y

ij

= 0 (i, j) /∈ Ω, (18)

and let

e

µ

T

=

1

K

1

T

K

e

Y be the average of its rows. The

N × N sample covariance matrix is given by C =

1

K

(

e

Y −

1

K

e

µ

T

)

T

(

e

Y − 1

K

e

µ

T

). Let now Λ

r

∈ R

r×r

be diagonal

with the r largest eigenvalues of C, and let the columns of

U

r

∈ R

N×r

comprise the corresponding r eigenvectors.

According to [14], the test statistic

d

2

i

= kΛ

−1/2

r

U

T

r

(

e

y

i

−

e

µ)k

2

, (19)

where

e

y

T

i

is the i-th row of

e

Y , is approximately χ

2

r

-distributed

if the data from sensor i is not abnormal. Using this, the

authors of [14] declare sensor i as faulty if d

2

i

exceeds a

threshold, which is set to achieve a given probability of false

alarm P

FA

. Since d

2

i

will likely take large values when sensor

i is defective yielding corrupted data with high energy, we

propose to initialize the probabilities ˆa

i,0

proportionally to

these values after normalization. Specifically,

ˆa

i,0

= β ·

d

2

i

max

1≤j≤K

{d

2

j

}

, i = 1, . . . , K, (20)

where 0 < β ≤ 1 is a suitable constant. In practice, β = 0.95

has been found to give good results. Finally, the initial value

ˆ

L

0

ˆ

R

0

is taken as the best rank-r approximation of

e

Y .

0 5 10 15 20 25 30

iterations

0

0.1

0.2

0.3

0.4

0.5

0.6

NRMSE over healthy nodes

Naive

Hard fault detection

Clairvoyant

EM

Outlier Pursuit

Fig. 1. NRMSE vs. iterations (r = 10).

5. NUMERICAL RESULTS

Consider a setting with K = 100, N = 70. Entries of ground

truth matrices L ∈ R

K×r

, R ∈ R

r×N

are independently

drawn from a standard Gaussian distribution. The probabil-

ities of sensor malfunction and observing a given data point

are 0.1 and 0.65, respectively. The signal-to-noise ratio, de-

fined as kLRk

2

F

/(KNσ

2

), is set to 20 dB. The limits of the

uniform pdf for faulty sensor data are generated as A

i

= −B

i

with each B

i

independently drawn from a uniform distribu-

tion over [0, 65σ] to capture a wide range of sensor fault types.

In addition to the proposed E-M estimator, the following

schemes were also implemented for comparison:

• A “naive” scheme oblivious to sensor faults, which seeks a

minimum of ky−P

Ω

(LR)k

2

F

by alternatingly optimizing

over one of the factors while fixing the other [20], initial-

ized at the best rank-r approximation of the zero-padded

matrix

e

Y .

• A “clairvoyant” scheme with knowledge about which sen-

sors are faulty, which applies a similar alternating mini-

mization approach after discarding the corrupted data.

• A scheme based on a preliminary hard fault detection

stage declaring sensor i faulty if d

2

i

in (19) exceeds a

threshold. Data estimated as corrupted are then discarded

before applying the alternating minimization scheme

above. As in [14], the threshold is set for P

FA

= 0.025.

• A scheme based on the “noisy outlier pursuit” method

from [23], solving the following convex problem:

min

M,S

kMk

∗

+ λkS

T

k

1,2

s.to ky − P

Ω

(M + S)k ≤ ,

(21)

where k · k

∗

is the nuclear norm, and kBk

1,2

is the sum

of Euclidean norms of the columns of B. In (21), M is

0 5 10 15 20 25

rank r

0

0.1

0.2

0.3

0.4

0.5

NRMSE over healthy nodes

Naive

Hard fault detection

Clairvoyant

EM

Outlier Pursuit

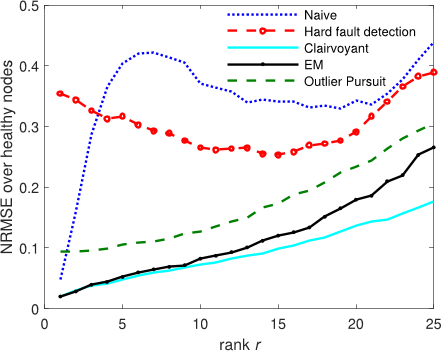

Fig. 2. NRMSE for the different schemes vs. rank r.

an estimate of the low-rank term LR, whereas S is an es-

timate of the corrupted data, with the term kS

T

k

1,2

pro-

moting row-sparsity. The tuning parameters were set to

λ = 0.9 and = 0.01 by trial and error.

For r = 10, Fig. 1 shows the evolution of the normalized

root mean squared error (NRMSE) over clean data

1

, defined

as

NRMSE =

kA(LR −

ˆ

L

ˆ

R)k

F

kALRk

F

(22)

and averaged over 100 Monte Carlo runs (for the outlier pur-

suit scheme,

ˆ

L

ˆ

R in (22) is replaced by M ). Fig. 2 shows the

NRMSE as a function of the ground-truth rank r.

The performance of the clairvoyant estimator constitutes

a benchmark. The naive estimator behaves poorly, as could

be expected: the effect of corrupted data from faulty sensors

cannot be lightly dismissed. In this scenario, the improvement

by applying a data cleansing stage as suggested in [14] (hard

fault detection) is limited. It was observed that whenever the

corrupted data from a faulty sensor has small energy, the fault

detection mechanism based on (19) is not effective, but nev-

ertheless the impact on matrix recovery of such low-energy

abnormal data remains significant, which explains the poor

performance of this scheme. The outlier-pursuit method is

seen to provide reasonable performance, although it requires

fine tuning of the user-selectable parameters; in addition, its

computational load may be a bottleneck as matrix sizes grow.

In this sense, we note that the complexity of the proposed E-M

scheme is dominated by step (15), which requires the compu-

tation of the SVD of a K × N matrix at each iteration. The

E-M estimator gets close to the benchmark, showing the efec-

tiveness of the proposed initialization, with a graceful degra-

dation in performance as the rank r increases.

1

Corrupted data from faulty sensors is excluded from (22) since it is not

possible to recover a whole row of LR from the low-rank property alone.

We repeated the simulations under the same setting, but

generating the corrupted data for faulty sensor i as i.i.d. Gaus-

sian with mean (A

i

+B

i

)/2 and variance

1

12

(B

i

−A

i

)

2

, where

A

i

, B

i

were generated in the same way as above. Results

were roughly similar to those with uniformly distributed out-

liers, indicating that E-M is robust to deviations from the pro-

posed non-informative model for corrupted data.

6. CONCLUSIONS

We have presented an ML approach to the problem of matrix

completion under noisy data and unreliable sensors. Moti-

vated by the presence of unobserved variables, we developed

the E-M iterative algorithm for this problem, together with

an effective initialization strategy. E-M outperforms schemes

based on hard decisions about the sensor labels, at a fraction

of the computational cost of convex optimization approaches,

and without requiring parameter tuning.

7. REFERENCES

[1] Yudong Chen and Yuejie Chi, ”Harnessing structures in

Big Data via guaranteed low-rank matrix estimation,”

IEEE Signal Process. Mag. vol. 35 no. 4, pp. 14-31, Jul.

2018.

[2] M. C. Vuran,

¨

O. B. Akan, I. F. Akyildiz, ”Spatiotempo-

ral correlation: theory and applications for wireless sen-

sor networks”, Computer Networks, vol. 45 no. 3, pp.

245-259, 2004.

[3] M. A. Davenport and J. Romberg, ”An overview of low-

rank matrix recovery from incomplete observations,”

IEEE J. Sel. Topics Signal Process., vol. 10 no. 4, pp.

608-622, Jun. 2016.

[4] Jie Cheng et al., ”STCDG: An efficient data gathering

algorithm based on matrix completion for wireless sen-

sor networks,” IEEE Trans. Wireless Commun., vol. 12

no. 2, pp. 850-861, Feb. 2013.

[5] Jingfei He et al., ”Data recovery in wireless sensor

networks with joint matrix recovery and sparsity con-

straints,” IEEE Commun. Lett., vol. 19 no. 12, pp. 2230-

2233, Dec. 2015.

[6] K. Ni et al., ”Sensor network data fault types,” ACM

Trans. Sensor Netw., vol. 5 no. 3, May 2009.

[7] A. Mahapatro and P. M. Khilar, ”Fault diagnosis in wire-

less sensor networks: a survey,” IEEE Commun. Surv.

Tuts., vol. 15 no. 4, pp. 2000-2026, 2013.

[8] X. Du and H.-H. Chen, ”Security in wireless sensor net-

works,” IEEE Trans. Wireless Commun., vol. 15 no. 4,

pp. 60-66, Aug. 2008.

[9] A. Vempaty, L. Tong and P. Varshney, ”Distributed in-

ference with Byzantine data: state of the art review on

data falsification attacks,” IEEE Signal Process. Mag.,

vol. 30 no. 5, pp. 65-75, Sep. 2013.

[10] R. Horn and C. Johnson, Matrix Analysis. Cambridge,

U.K.: Cambridge Univ. Press, 1985.

[11] G. J. McLachlan and T. Krishnan, The EM algorithm

and extensions. New York, NY, USA: Wiley, 1997.

[12] N. Srebro and T. Jaakkola, ”Weighted low-rank approx-

imations,” in Proc. 20th Int. Conf. Machine Learning,

pp. 720-727, 2003.

[13] P. M. Q. Aguiar and J. M. F. Moura, ”Factorization as

a rank 1 problem,” in Proc. IEEE Conf. Comput. Vision

and Pattern Recognition, vol. 1, pp. 178-184, 1999.

[14] K. Xie et al., “Recover corrupted data in sensor net-

works: a matrix completion solution,” IEEE Trans. Mo-

bile Comput., vol. 16 no. 5, pp. 1434-1448, May 2017.

[15] L. L. Scharf, Statistical signal processing: detection, es-

timation, and time series analysis. Reading, MA, USA:

Addison-Wesley; 1990.

[16] G. J. McLachlan and T. Krishnan, The EM algorithm

and extensions. New York, NY, USA: Wiley; 1997.

[17] E. J. Cand

`

es and T. Tao, ”The power of convex relax-

ation: Near-optimal matrix completion,” IEEE Trans.

Inf. Theory, vol. 56 no. 5, pp. 2053-2080, May 2010.

[18] B. Recht, M. Fazel and P. A. Parrilo, ”Guaranteed

minimum-rank solutions of linear matrix equations via

nuclear norm minimization,” SIAM Rev., vol. 52 no.3,

pp. 471-501, 2010.

[19] S. Burer and R. D. C. Monteiro, ”Local minima and con-

vergence in low-rank semidefinite programming,” Math.

Programming, vol. 103 no. 3, pp. 427-444, Jul. 2005.

[20] M. Hardt, ”Understanding alternating minimization for

matrix completion,” in Proc. IEEE 55th Annu. Symp.

Found. Comput. Sci., pp. 651-660, 2014.

[21] P. Jain and P. Netrapalli, ”Fast exact matrix completion

with finite samples,” in Proc. 28th Conf. Learning The-

ory, pp. 1007-1034, 2015.

[22] E. J. Cand

`

es et al., ”Robust Principal Component Anal-

ysis?,” J. of the ACM, vol. 58 no. 3, art. 11 , May 2011.

[23] H. Xu, C. Caramanis and S. Sanghavi, ”Robust PCA via

outlier pursuit,” IEEE Trans. Inf. Theory, vol. 58 no. 5,

pp. 3047-3064, May 2012.

[24] Yudong Chen et al., ”Low-rank matrix recovery from

errors and erasures,” IEEE Trans. Inf. Theory, vol. 59

no. 7, pp. 4324-4337, Jul. 2013.