Q2. What future work do the authors mention in the paper "LSTM-Modeling of Continuous Emotions in an Audiovisual Affect Recognition Framework"?

The considered scenario reflects realistic conditions in natural interactions and thus highlights the need for further research in affective computing in order to get closer to human performance in judging emotions. The authors' future research on video feature extraction will include multi-camera input to be more robust to head rotations. They plan to combine the facial movements of the 2D camera sequences to predict 3D movement. Another possibility to increase recognition performance is to allow asynchronies between audio and video, e.g., by applying hybrid fusion techniques like asynchronous HMMs [69] or multi-dimensional dynamic time warping [48].

Q3. What are the functionals for the video features?

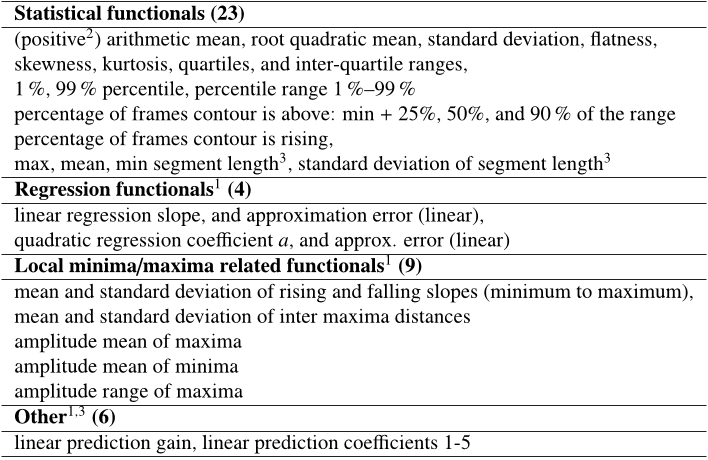

The following functionals are applied to frame-based video features: arithmetic mean (for delta coefficients: arithmetic mean of absolute values), standard deviation, 5% percentile, 95% percentile, and range of 5% and 95% percentile.

Q4. What is the function used to map the sequence of video features to a single vector?

In order to map the sequence of frame-based video features to a single vector describing the word-unit, statistical functionals are applied to the frame-based video features and their first order delta coefficients.
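The mapping from a variable-length sequence to a fixed-length word-level vector can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, and the delta coefficients are assumed to be simple frame-to-frame differences, which the text above does not specify. The five functionals are the ones listed under Q3.

```python
import numpy as np

def delta(features):
    """First-order deltas as frame-to-frame differences (an assumed form)."""
    # Prepend the first frame so the delta track keeps the input length.
    return np.diff(features, axis=0, prepend=features[:1])

def functionals(track, is_delta=False):
    """Map a (frames, dims) array to a (5 * dims,) vector of functionals."""
    p5, p95 = np.percentile(track, [5, 95], axis=0)
    # For delta coefficients the mean is taken over absolute values.
    mean = np.mean(np.abs(track), axis=0) if is_delta else np.mean(track, axis=0)
    return np.concatenate([mean, np.std(track, axis=0), p5, p95, p95 - p5])

def word_vector(features):
    """Describe one word-unit by functionals of the features and their deltas."""
    features = np.asarray(features, dtype=float)
    return np.concatenate([
        functionals(features),
        functionals(delta(features), is_delta=True),
    ])
```

Under these assumptions, a word spanning any number of frames with `dims` features per frame always yields a vector of length `10 * dims`.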

Q5. What is the acoustic feature extraction approach?

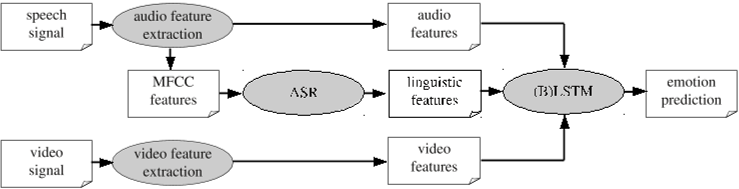

Their acoustic feature extraction approach is based on a large set of low-level descriptors and derivatives of LLD combined with suited statistical functionals to capture speech dynamics within a word.

Q6. How long does it take to compute the low-level features?

The computation of the low-level features takes 50 ms per frame for a C++ implementation on a 2.4 GHz Intel i5 processor with 4 GB RAM.

Q7. What is the official challenge measure for the LSTM?

As the class distribution in the training set is relatively well balanced, the official challenge measure is weighted accuracy, i.e., the recognition rates of the individual classes weighted by the class distribution.
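As a rough sketch of this measure, the snippet below computes the per-class recognition rate (recall) and weights it by the relative class frequency. The function name is hypothetical, and the class distribution is assumed to be taken from the labels being evaluated.

```python
from collections import Counter

def weighted_accuracy(y_true, y_pred):
    """Per-class recall weighted by the class distribution of y_true."""
    counts = Counter(y_true)
    total = len(y_true)
    wa = 0.0
    for label, n in counts.items():
        # Recognition rate of this class: correctly recognized / class size.
        correct = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        wa += (correct / n) * (n / total)
    return wa
```

With this weighting the class sizes cancel, so the result coincides with the overall fraction of correctly classified instances.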

Q8. What is the reason for the introduction of context-sensitivity in emotion classification frameworks?

Human emotions tend to evolve slowly over time which motivates the introduction of some form of context-sensitivity in emotion classification frameworks.

Q9. How is a similar dimensionality of the video feature vector and the audio feature vector ensured?

Fewer functionals than for audio features are used to ensure a similar dimensionality of the video feature vector and the audio feature vector.

Q10. What is the approach towards achieving acceptable recognition performance even in challenging conditions?

One approach towards reaching acceptable recognition performance even in challenging conditions is the modeling of contextual information.

Q11. What is the classification framework for long-range context modeling?

Among various classification frameworks that are able to exploit turn-level context, so-called Long Short-Term Memory (LSTM) networks [18] tend to be best suited for long-range context modeling in emotion recognition.

Q12. How many training epochs were used for the LSTM networks?

According to optimizations on the development set, the number of training epochs was 60 for networks classifying arousal and 30 for all other networks.

Q13. How is the dimensionality of the features computed?

By employing uniform LBPs instead of full LBPs and aggregating the LBP operator responses in histograms taken over regions of the face, the dimensionality of the features is rather low (59 dimensions per image block).
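The figure of 59 dimensions can be checked with a short count. The sketch below assumes the common 8-neighbor LBP operator (the neighborhood size is not stated in the answer above): a pattern is uniform if its circular bit string has at most two 0/1 transitions, and all non-uniform patterns share one extra histogram bin. The function names are hypothetical.

```python
def transitions(code, bits=8):
    """Count 0/1 transitions in the circular bit pattern of an LBP code."""
    # Rotate right by one bit, then XOR to mark positions where neighbors differ.
    rotated = (code >> 1) | ((code & 1) << (bits - 1))
    return bin(code ^ rotated).count("1")

def uniform_lbp_bins(bits=8):
    """Histogram size: one bin per uniform pattern plus one shared bin."""
    uniform = [c for c in range(2 ** bits) if transitions(c, bits) <= 2]
    return len(uniform) + 1

print(uniform_lbp_bins())  # 59
```

A full 8-bit LBP histogram would need 256 bins per block, so the uniform variant reduces the per-block dimensionality by more than a factor of four.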

Q14. What is the WA for arousal?

For arousal, the best WA of 68.5 % is obtained for acoustic features only, which is in line with previous studies showing that audio is the most important modality for assessing arousal [13].

Q15. What is the probability of a facial pixel in the current image?

For each pixel $I(x, y)$ in the current image, the probability of a facial pixel can be approximated by

$$P_f(x, y) = \frac{M(I_H(x, y), I_S(x, y), I_V(x, y))}{N}, \qquad (2)$$

with $N$ being the number of template pixels that have been used to create the histogram.
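A minimal sketch of Eq. (2) is given below. It assumes that M is a three-dimensional histogram over the hue, saturation, and value channels of the template pixels, that all channels are scaled to [0, 1), and that each channel is quantized into 16 bins. The bin count, the scaling, and all function names are illustrative assumptions, not details from the paper.

```python
import numpy as np

def quantize(hsv, bins):
    """Map HSV values in [0, 1) to integer bin indices (assumed scaling)."""
    return np.minimum((np.asarray(hsv) * bins).astype(int), bins - 1)

def build_histogram(template_hsv, bins=16):
    """Build the histogram M from template pixels; also return N."""
    pixels = np.asarray(template_hsv, dtype=float).reshape(-1, 3)
    idx = quantize(pixels, bins)
    hist = np.zeros((bins, bins, bins))
    # Unbuffered add so repeated bin indices are all counted.
    np.add.at(hist, (idx[:, 0], idx[:, 1], idx[:, 2]), 1)
    return hist, len(pixels)

def face_probability(image_hsv, hist, n):
    """Eq. (2): look up each pixel's HSV bin in M and divide by N."""
    idx = quantize(image_hsv, hist.shape[0])
    return hist[idx[..., 0], idx[..., 1], idx[..., 2]] / n
```

The output has the same height and width as the input image, with one probability per pixel.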

Q16. How many sessions are intended for this sub-challenge?

Their test set consists only of the sessions that are intended for this sub-challenge, i.e., only 10 out of the 32 test sessions.

Q17. What is the WA for LSTMs?

The classification of expectation seems to benefit from including visual information, as the best WA (67.6 %) is reached for LSTM networks applying late fusion of audio and video modalities.

Q18. How can the authors improve the recognition performance of the audio/video emotion challenge?

To obtain the best possible recognition performance, future studies should also investigate which feature-classifier combinations lead to the best results, e.g., by combining the proposed LSTM framework with other audio or video features proposed for the 2011 Audio/Visual Emotion Challenge.

![Figure 5: Importance of facial regions for video feature extraction according to the ranking-based information gain attribute evaluation algorithm implemented in the Weka toolkit [58]. Information gain is evaluated for each emotional dimension. The shading of the facial regions indicates the importance of the features corresponding to the respective region.](/figures/figure-5-importance-of-facial-regions-for-video-feature-1xjm8183.png)

![Table 1: Overview of the SEMAINE database as used for the 2011 Audio/Visual Emotion Challenge [6].](/figures/table-1-overview-of-the-semaine-database-as-used-for-the-2iacvgtc.png)

![Table 8: Statistical significance of the average performance difference between the audio-based classification approaches denoted in the column and the approaches in the table header (evaluations on test set of the Audiovisual Sub-Challenge); ‘-’: not significant; ‘o’ significant at 0.1 level; ‘+’: significant at 0.05 level; ‘++’: significant at 0.001 level. Significance levels are computed according to the z-test described in [67].](/figures/table-8-statistical-significance-of-the-average-performance-1htyiuze.png)