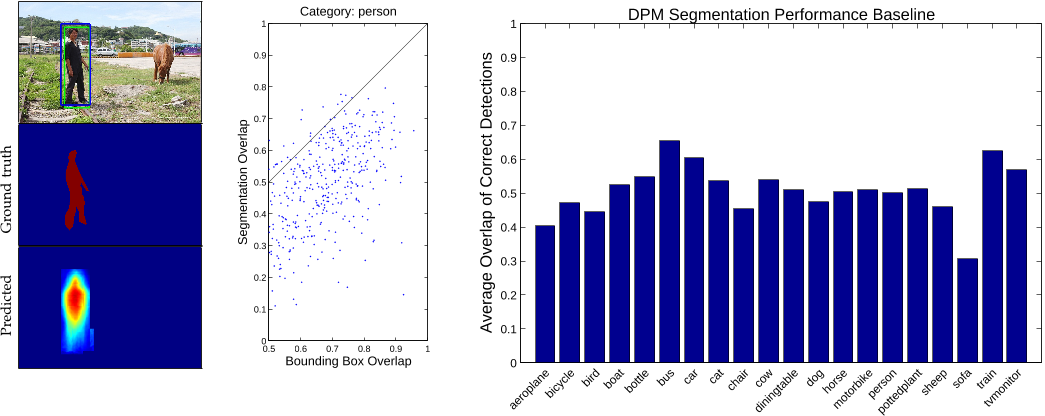

![TABLE 1: Top: Detection performance evaluated on PASCAL VOC 2012. DPMv5-P is the performance reported by Girshick et al. in VOC release 5. DPMv5-C uses the same implementation, but is trained with MS COCO. Bottom: Performance evaluated on MS COCO for DPM models trained with PASCAL VOC 2012 (DPMv5-P) and MS COCO (DPMv5-C). For DPMv5-C we used 5000 positive and 10000 negative training examples. While MS COCO is considerably more challenging than PASCAL, use of more training data coupled with more sophisticated approaches [5], [6], [7] should improve performance substantially.](/figures/table-1-top-detection-performance-evaluated-on-pascal-voc-1sypmu73.png)

...Region proposal methods typically rely on inexpensive features and economical inference schemes....

[...]

5,742 citations

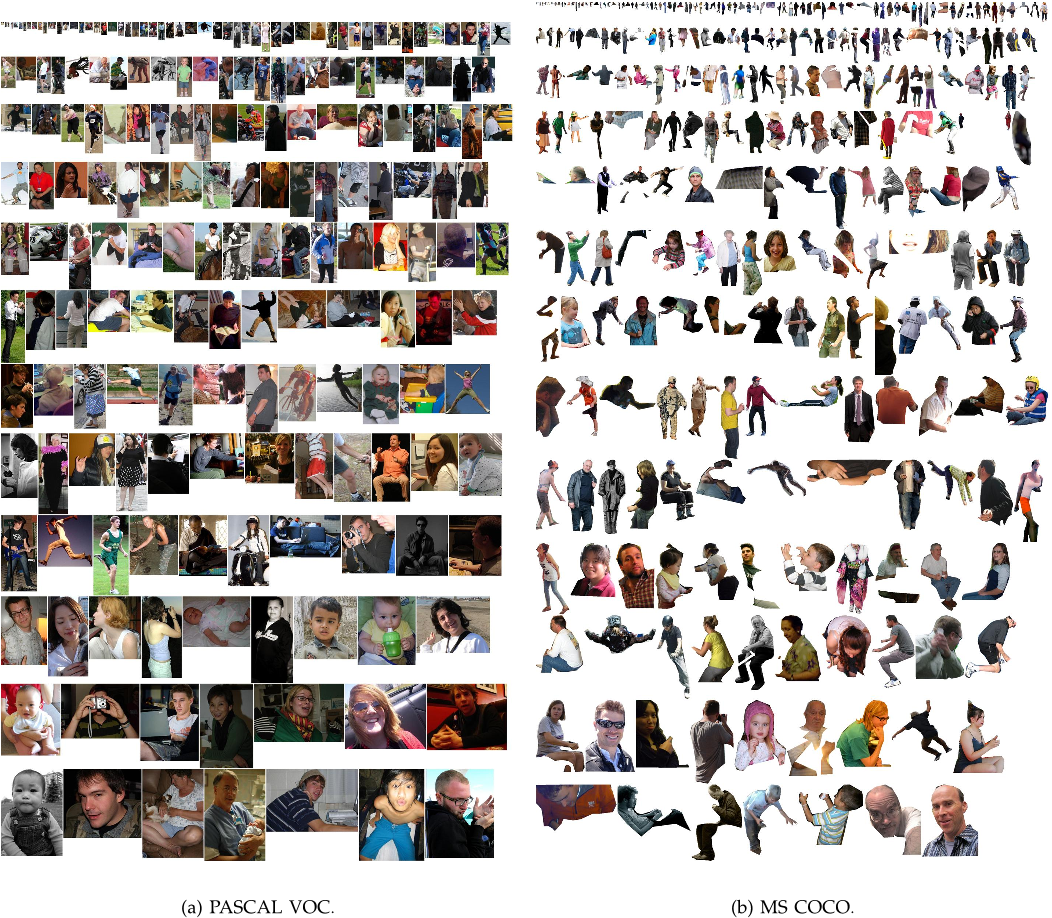

...ly represented by a bounding box, Figure 1(b). Early algorithms focused on face detection [27] using various ad hoc datasets. Later, more realistic and challenging face detection datasets were created [28]. Another popular challenge is the detection of pedestrians, for which several datasets have been created [29], [4]. The Caltech Pedestrian Dataset [4] contains 350,000 labeled instances with bounding ...

[...]

...The Berkeley Segmentation Data Set (BSDS500) [37] has been used extensively to evaluate both segmentation and edge detection algorithms....

[...]

4,827 citations

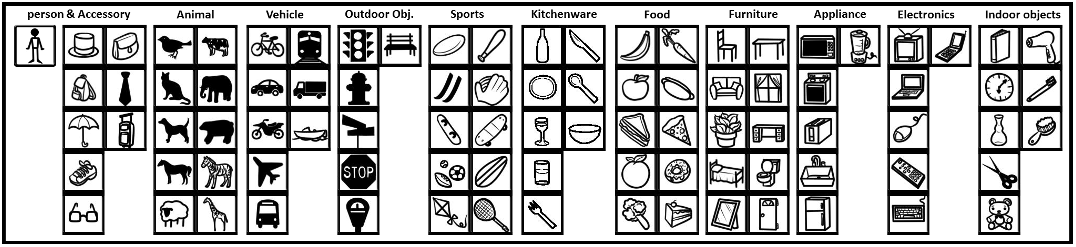

...The community has also created datasets containing object attributes [8], scene attributes [9], keypoints [10], and 3D scene information [11]....

[...]

...Some datasets also include depth information [11]....

[...]

...Datasets exist for both indoor [11] and outdoor [35], [14] scenes....

[...]

...Many object detection algorithms benefit from additional annotations, such as the amount an instance is occluded [4] or the location of keypoints on the object [10]....

[...]

...Another popular challenge is the detection of pedestrians for which several datasets have been created [24], [4]....

[...]

...The Caltech Pedestrian Dataset [4] contains 350,000 labeled instances with bounding boxes....

[...]

...The current object classification and detection datasets [1], [2], [3], [4] help us explore the first challenges related to scene understanding....

[...]

Since the detection of many objects such as sunglasses, cellphones or chairs is highly dependent on contextual information, it is important that detection datasets contain objects in their natural environments.

By observing how recall increased as annotators were added, the authors estimate that, in practice, over 99% of all object categories not later rejected as false positives are detected when 8 annotators are used.
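The estimate rests on a simple pooling rule: a category counts as found once any of the first k annotators labels it, so recall can only rise as k grows. The sketch below computes that curve for one image under stated assumptions: the function name, the set-based input format, and the toy labels are hypothetical, and "true categories" stands in for the categories that survive later false-positive rejection.

```python
from typing import List, Set


def recall_by_annotator_count(true_categories: Set[str],
                              annotator_labels: List[Set[str]]) -> List[float]:
    """Recall after pooling the first k annotators, for k = 1..len(annotator_labels).

    Hypothetical helper: a category is detected once any of the first k
    annotators labels it. Labels outside `true_categories` (false positives)
    do not contribute to recall.
    """
    if not true_categories:
        raise ValueError("need at least one true category")
    found: Set[str] = set()
    recalls: List[float] = []
    for labels in annotator_labels:
        found |= labels & true_categories  # pool this annotator's correct labels
        recalls.append(len(found) / len(true_categories))
    return recalls


# Hypothetical toy data for a single image (not taken from the paper).
truth = {"person", "dog", "frisbee", "bench"}
annotators = [{"person", "dog"}, {"person", "frisbee"}, {"dog", "cat"}, {"bench"}]
print(recall_by_annotator_count(truth, annotators))  # [0.5, 0.75, 0.75, 1.0]
```

Over a whole dataset one would average such curves across images and read off the k at which the curve flattens; this sketch shows only the per-image pooling step.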

Segmenting 2,500,000 object instances is an extremely time-consuming task, requiring over 22 worker hours per 1,000 segmentations.
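Multiplying the quoted rate by the instance count gives a rough lower bound on the effort spent on segmentation alone (a lower bound because the rate is "over 22" hours):

$$
2{,}500{,}000 \ \text{instances} \times \frac{22 \ \text{worker hours}}{1{,}000 \ \text{instances}} = 55{,}000 \ \text{worker hours}
$$

This figure is consistent with the total of over 70,000 worker hours cited for the whole collection, which also covers category labeling and instance spotting.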

The task of labeling semantic objects in a scene requires that each pixel of an image be labeled as belonging to a category, such as sky, chair, floor, or street.
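A dense semantic label map assigns one category index to every pixel and carries no instance identity, so two adjacent chairs would be indistinguishable. The minimal sketch below assumes a hypothetical four-category vocabulary and represents the map as a nested list; it simply tallies pixel area per category.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical vocabulary: pixel value i means CATEGORIES[i].
CATEGORIES = ["sky", "chair", "floor", "street"]


def pixel_area_per_category(label_map: List[List[int]]) -> Dict[str, int]:
    """Count pixels per category in a dense semantic label map.

    Every pixel holds exactly one category index; there are no instance ids,
    which is what separates semantic labeling from instance annotation.
    """
    counts = Counter(idx for row in label_map for idx in row)
    return {CATEGORIES[i]: n for i, n in sorted(counts.items())}


# A tiny 3x4 "image": sky on top, a chair standing on the floor below.
label_map = [
    [0, 0, 0, 0],
    [2, 1, 1, 2],
    [2, 2, 2, 2],
]
print(pixel_area_per_category(label_map))  # {'sky': 4, 'chair': 2, 'floor': 6}
```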

Using over 70,000 worker hours, a collection of roughly 2.5 million object instances was gathered, annotated, and organized to drive the advancement of object detection and segmentation algorithms.

Segmentations of insufficient quality were discarded and the corresponding instances added back to the pool of unsegmented objects.
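The discard-and-requeue step behaves like a work queue in which a failed review sends the instance back to the pool. In the sketch below, `segment` and `passes_review` are hypothetical stand-ins for the crowd worker and the quality check, and the `max_attempts` cap is an added assumption to guarantee termination; the source does not mention one.

```python
from collections import deque
from typing import Callable, Deque, Dict, Hashable, Iterable, List, Tuple


def segment_with_review(
    instances: Iterable[Hashable],
    segment: Callable[[Hashable, int], str],
    passes_review: Callable[[str], bool],
    max_attempts: int = 5,
) -> Tuple[Dict[Hashable, str], List[Hashable]]:
    """Segment a pool of instances, returning failed ones to the pool.

    Hypothetical sketch: `segment(instance, attempt)` models a worker's output
    and `passes_review` the quality check. Instances that still fail after
    `max_attempts` (an assumed cap) are reported as unresolved.
    """
    pool: Deque[Tuple[Hashable, int]] = deque((inst, 1) for inst in instances)
    accepted: Dict[Hashable, str] = {}
    unresolved: List[Hashable] = []
    while pool:
        inst, attempt = pool.popleft()
        seg = segment(inst, attempt)
        if passes_review(seg):
            accepted[inst] = seg
        elif attempt < max_attempts:
            # Discard the poor segmentation; the instance rejoins the pool.
            pool.append((inst, attempt + 1))
        else:
            unresolved.append(inst)
    return accepted, unresolved


# Toy worker: the first attempt on "dog" is sloppy, later attempts are fine.
def fake_segment(inst: Hashable, attempt: int) -> str:
    return "sloppy" if inst == "dog" and attempt == 1 else f"mask-{inst}-{attempt}"


accepted, unresolved = segment_with_review(["cat", "dog"], fake_segment,
                                           lambda s: s != "sloppy")
print(accepted, unresolved)  # {'cat': 'mask-cat-1', 'dog': 'mask-dog-2'} []
```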

After 10-15 instances of a category were segmented in an image, the remaining instances were marked as “crowds” using a single (possibly multipart) segment.

For the detection of basic object categories, a multiyear effort from 2005 to 2012 was devoted to the creation and maintenance of a series of widely adopted benchmark datasets (PASCAL VOC).

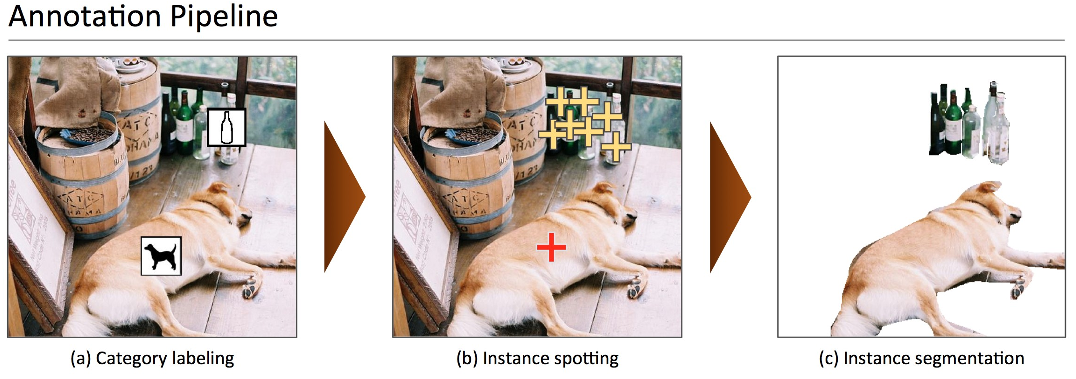

If a worker determines that instances from a super-category (e.g., animal) are present, then for each subordinate category present (dog, cat, etc.), the worker must drag that category’s icon onto the image over one instance of the category.
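The two-stage task can be simulated for an idealized worker: first check each super-category, then place one point per subordinate category that appears. The hierarchy below is a hypothetical subset (only "animal", "dog", and "cat" come from the text), and "dragging the icon" is modeled as recording the location of the first listed instance.

```python
from typing import Dict, List, Tuple

Point = Tuple[float, float]

# Hypothetical subset of a category hierarchy; the real list is larger.
HIERARCHY: Dict[str, List[str]] = {
    "animal": ["dog", "cat", "horse"],
    "vehicle": ["car", "bus", "bicycle"],
}


def label_image(instances: List[Tuple[str, Point]]) -> Dict[str, Point]:
    """Simulate the hierarchical labeling task for a perfect worker.

    Stage 1: skip any super-category with no member present.
    Stage 2: for each subordinate category present, place its icon on one
    instance (here, the first one listed), giving one point per category.
    """
    placed: Dict[str, Point] = {}
    for subcats in HIERARCHY.values():
        present = [(c, p) for c, p in instances if c in subcats]
        if not present:
            continue  # super-category absent: its subcategories are never asked
        for cat in subcats:
            hits = [p for c, p in present if c == cat]
            if hits:
                placed[cat] = hits[0]
    return placed


image = [("dog", (40.0, 55.0)), ("dog", (120.0, 60.0)), ("car", (200.0, 30.0))]
print(label_image(image))  # {'dog': (40.0, 55.0), 'car': (200.0, 30.0)}
```

Only one instance per category is marked at this stage, which is why a later step is still needed to find the remaining instances.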

Since the authors are primarily interested in precise localization of object instances, they decided to include only “thing” categories and not “stuff.”

For images containing 10 object instances or fewer of a given category, every instance was individually segmented (note that in some images up to 15 instances were segmented).
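Together with the crowd marking described earlier, this gives a per-image, per-category rule: segment everything up to a cap, then cover the rest with one crowd region. The sketch below uses a fixed cap of 10 from the stated threshold; the function name is hypothetical, instances are taken in list order, and it does not model the images that received up to 15 individual segmentations.

```python
from typing import List, Tuple


def plan_segmentation(instance_ids: List[int],
                      cap: int = 10) -> Tuple[List[int], List[int]]:
    """Split one category's instances in one image into individual vs. crowd.

    Returns (instances segmented individually, instances merged into a single
    possibly multipart "crowd" segment). Hypothetical sketch with a fixed cap.
    """
    if len(instance_ids) <= cap:
        return list(instance_ids), []
    return list(instance_ids[:cap]), list(instance_ids[cap:])


individual, crowd = plan_segmentation(list(range(25)))
print(len(individual), len(crowd))  # 10 15
```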

“Thing” categories include objects for which individual instances may be easily labeled (person, chair, car), whereas “stuff” categories include materials and objects with no clear boundaries (sky, street, grass).

Such examples may act as noise and pollute the learned model if the model is not rich enough to capture such appearance variability.

Another interesting observation is that only 10% of the images in MS COCO contain a single object category; in comparison, over 60% of images in ImageNet and PASCAL VOC contain a single object category.
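A statistic like this can be computed by grouping instance annotations by image and counting the images whose distinct categories number exactly one. The sketch assumes a flat list of (image_id, category) pairs, which is a simplification; with the released annotation files the pairs could come from each annotation's `image_id` and `category_id` fields. The toy data is hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple


def single_category_fraction(annotations: Iterable[Tuple[int, str]]) -> float:
    """Fraction of annotated images whose instances span exactly one category.

    Images with no annotations do not appear in the input and are not counted.
    """
    cats_per_image: Dict[int, Set[str]] = {}
    for image_id, category in annotations:
        cats_per_image.setdefault(image_id, set()).add(category)
    if not cats_per_image:
        return 0.0
    single = sum(1 for cats in cats_per_image.values() if len(cats) == 1)
    return single / len(cats_per_image)


# Hypothetical toy annotations: images 1 and 3 contain one category each.
anns = [(1, "person"), (1, "person"), (2, "person"), (2, "dog"),
        (3, "car"), (4, "cup"), (4, "fork"), (4, "pizza")]
print(single_category_fraction(anns))  # 0.5
```

Note that image 1 holds two person instances but still counts as single-category: the statistic is about distinct categories, not instance counts.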