

The main idea is to exploit intra- and inter-channel correlations simultaneously. The multi-channel EEG is arranged as a three-dimensional volume, and that volume is then represented in different ways for compression.

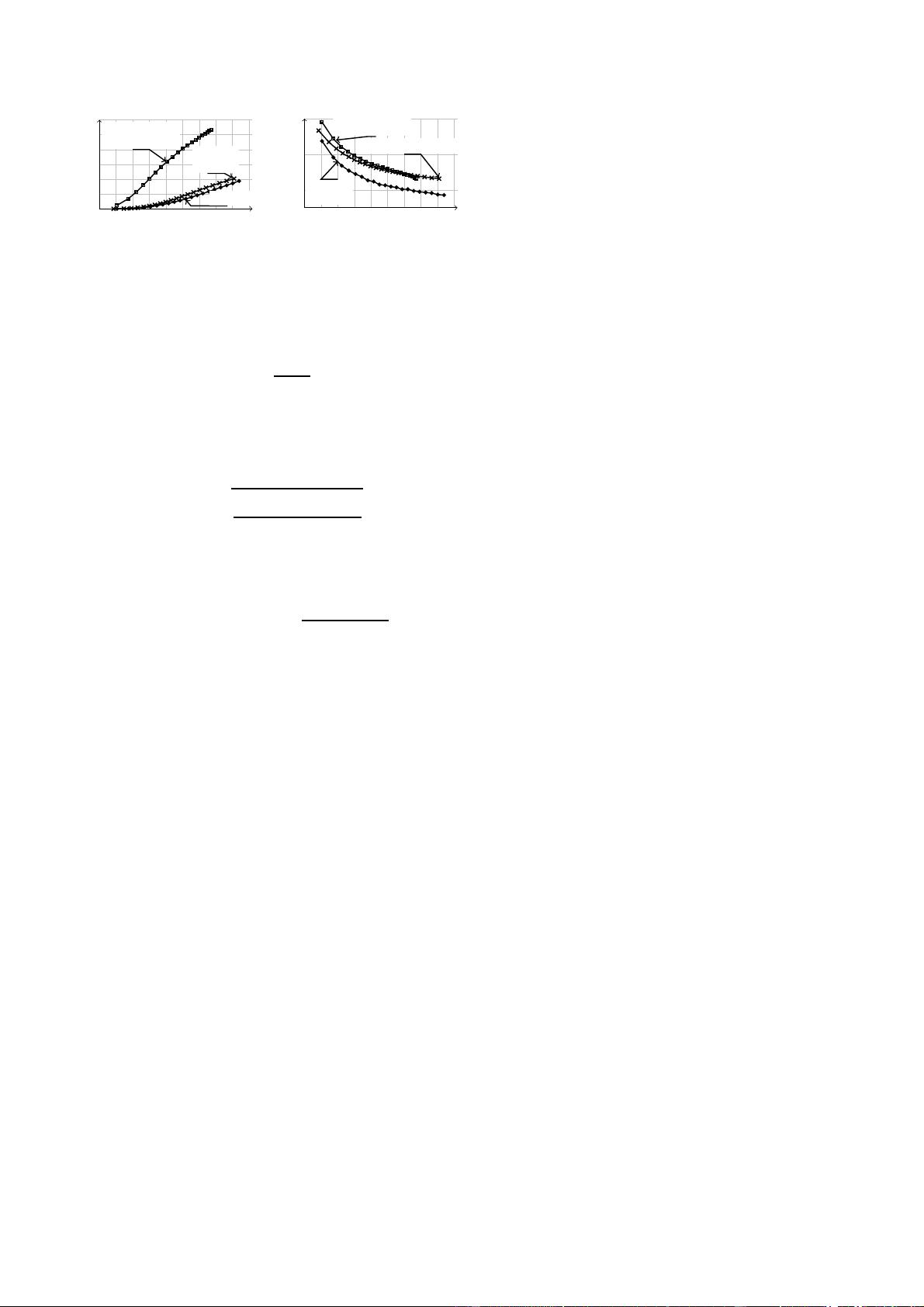

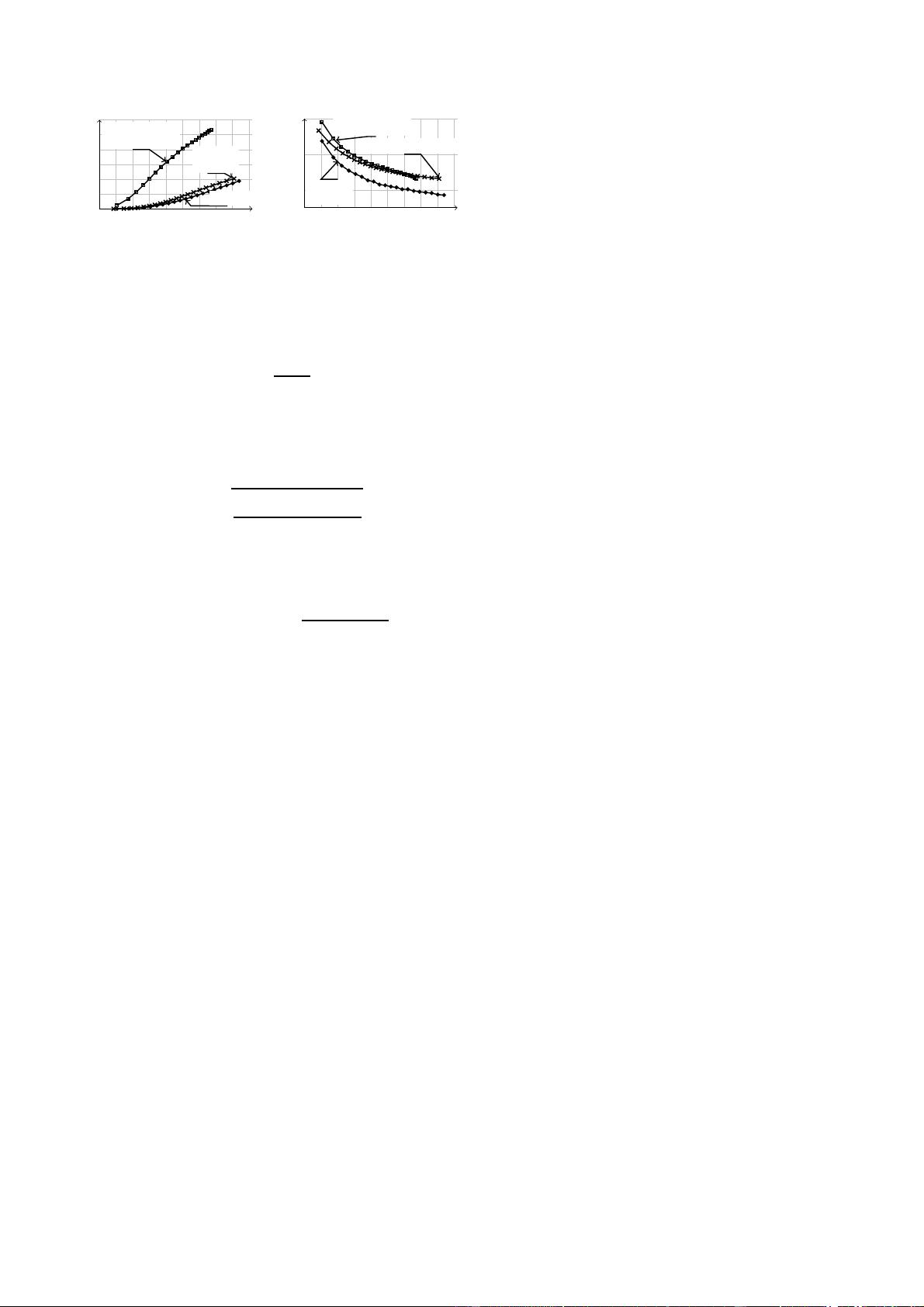

The tensor-based coding scheme yields a smaller worst-case (maximum absolute) error than both subband-specific coding and volumetric coding. Its average error is only slightly larger than that of subband-specific coding and much smaller than that of volumetric coding.

The authors consider segments of 1024 samples from each channel, arranged into volumes of a suitable size: 32 × 32 × 64 for the t/dt/s volume and 8 × 8 × 1024 for the s/s/t volume.
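The two arrangements can be sketched with NumPy reshapes. This is a minimal sketch under several assumptions. It assumes 64 channels, which follows from 8 × 8 = 64 and from 32 × 32 × 64 = 64 × 1024. It folds each channel's 1024 samples into a 32 × 32 matrix in row-major order. It maps channels onto the 8 × 8 grid in a hypothetical row-major order. The authors' actual sample ordering and electrode layout are not given here, and the function names are illustrative.

```python
import math

import numpy as np

N_CHANNELS = 64   # implied by the 8 x 8 spatial grid
SEG_LEN = 1024    # samples per channel in one segment


def tdt_s_volume(segment: np.ndarray) -> np.ndarray:
    """t/dt/s volume: fold each channel's samples into a square matrix
    (row-major fold, an assumption) and stack channels along the third axis."""
    n_ch, n = segment.shape
    side = math.isqrt(n)
    if side * side != n:
        raise ValueError("segment length must be a perfect square")
    # (channels, samples) -> (channels, side, side) -> (side, side, channels)
    return segment.reshape(n_ch, side, side).transpose(1, 2, 0)


def ss_t_volume(segment: np.ndarray, grid=(8, 8)) -> np.ndarray:
    """s/s/t volume: place channels on a 2D grid (hypothetical row-major
    electrode order) and keep time along the third axis."""
    n_ch, n = segment.shape
    if grid[0] * grid[1] != n_ch:
        raise ValueError("grid must hold exactly one cell per channel")
    return segment.reshape(grid[0], grid[1], n)


rng = np.random.default_rng(0)
segment = rng.standard_normal((N_CHANNELS, SEG_LEN))  # stand-in for real EEG
print(tdt_s_volume(segment).shape)  # (32, 32, 64)
print(ss_t_volume(segment).shape)   # (8, 8, 1024)
```

In a real s/s/t volume, the grid mapping would presumably follow the electrodes' scalp positions. That way, neighbouring grid cells hold correlated channels.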

The authors consider three lossy compression algorithms (Stage 1): (i) 3D wavelet volumetric coding, (ii) 3D wavelet subband-specific arithmetic coding, and (iii) coding based on tensor decomposition (PARAFAC).
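For stage (iii), a rank-R PARAFAC (CP) model approximates an I × J × K volume X as a sum of R rank-one terms, X[i, j, k] ≈ Σ_r A[i, r] B[j, r] C[k, r]. Only R(I + J + K) factor entries then need to be coded instead of IJK samples. The sketch below fits the factors with plain alternating least squares (ALS). It is a generic textbook ALS, not the authors' implementation. The rank, the iteration count, and any quantization or entropy coding of the factors are assumptions or omitted. For real use, TensorLy provides `tensorly.decomposition.parafac`.

```python
import numpy as np


def khatri_rao(a: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Column-wise Kronecker product: (I x R), (J x R) -> (I*J x R), row index i*J + j."""
    return (a[:, None, :] * b[None, :, :]).reshape(-1, a.shape[1])


def parafac_als(x: np.ndarray, rank: int, n_iter: int = 200, seed: int = 0):
    """Fit X[i,j,k] ~ sum_r A[i,r] B[j,r] C[k,r] by alternating least squares."""
    i, j, k = x.shape
    rng = np.random.default_rng(seed)
    a, b, c = (rng.standard_normal((n, rank)) for n in (i, j, k))
    # Mode unfoldings whose column order matches khatri_rao's row order.
    x1 = x.reshape(i, j * k)                     # column j*K + k
    x2 = x.transpose(1, 0, 2).reshape(j, i * k)  # column i*K + k
    x3 = x.transpose(2, 0, 1).reshape(k, i * j)  # column i*J + j
    for _ in range(n_iter):
        # Each update is the least-squares solution with the other two factors fixed.
        a = x1 @ np.linalg.pinv(khatri_rao(b, c).T)
        b = x2 @ np.linalg.pinv(khatri_rao(a, c).T)
        c = x3 @ np.linalg.pinv(khatri_rao(a, b).T)
    return a, b, c


def cp_reconstruct(a, b, c) -> np.ndarray:
    """Rebuild the volume from its factor matrices."""
    return np.einsum("ir,jr,kr->ijk", a, b, c)


# Usage: a synthetic rank-3 stand-in for a 32 x 32 x 64 t/dt/s volume, plus noise.
rng = np.random.default_rng(1)
true_factors = [rng.standard_normal((n, 3)) for n in (32, 32, 64)]
vol = cp_reconstruct(*true_factors) + 0.01 * rng.standard_normal((32, 32, 64))
A, B, C = parafac_als(vol, rank=3, n_iter=100)
rel_err = np.linalg.norm(vol - cp_reconstruct(A, B, C)) / np.linalg.norm(vol)
print(f"{3 * (32 + 32 + 64)} factor entries vs {vol.size} samples, relative error {rel_err:.4f}")
```

For a 32 × 32 × 64 volume, a rank-R model stores 128R factor entries against 65,536 samples. The choice of R therefore sets the rate-distortion trade-off.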

The authors consider two specific ways to extract volumetric data from multi-channel EEG. In each, the three axes capture spatial and temporal variations in a different form.

The quality of the reconstructed signal $\tilde{x}$ is assessed using the percent root-mean-square distortion, PRD (%):

$$\mathrm{PRD}\,(\%) = \sqrt{\frac{\sum_{i=1}^{N}\bigl(x(i)-\tilde{x}(i)\bigr)^{2}}{\sum_{i=1}^{N}x(i)^{2}}}\times 100. \tag{9}$$

The authors also use an alternative quantitative distortion measure, based on the maximum absolute difference between $x$ and $\tilde{x}$:

$$\mathrm{PSNR}(x,\tilde{x}) = 10\log_{10}\left(\frac{2^{Q}-1}{\max\lvert x-\tilde{x}\rvert}\right). \tag{10}$$
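Both distortion measures are straightforward to compute. The sketch below is in plain Python and assumes Q is the number of bits per sample of the original recording, since the text does not define Q here. `psnr_max_error` follows the PSNR expression exactly as written, with the maximum absolute error in the denominator.

```python
import math


def prd_percent(x, x_rec):
    """Eq. (9): percent root-mean-square distortion."""
    num = sum((a - b) ** 2 for a, b in zip(x, x_rec))
    den = sum(a ** 2 for a in x)
    return math.sqrt(num / den) * 100.0


def psnr_max_error(x, x_rec, q_bits):
    """PSNR as written in the text: 10*log10((2**Q - 1) / max|x - x_rec|).
    q_bits (Q) is read here as the bits per sample, which is an assumption."""
    max_err = max(abs(a - b) for a, b in zip(x, x_rec))
    if max_err == 0:
        return math.inf  # lossless reconstruction
    return 10.0 * math.log10((2 ** q_bits - 1) / max_err)


x = [3.0, 4.0, 0.0]
x_rec = [3.0, 3.0, 0.0]
print(prd_percent(x, x_rec))         # about 20.0, i.e. 100 * sqrt(1 / 25)
print(psnr_max_error(x, x_rec, 12))  # about 36.12, i.e. 10 * log10(4095 / 1)
```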

In their previous work [2], the authors introduced a pre-processing technique in which single-channel EEG is arranged as a matrix before compression; this representation improved the rate-distortion (R-D) performance over conventional compression schemes.

The energy threshold τ for subband-specific arithmetic coding is fixed at 50%, the value for which the authors obtained the best results.
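The summary does not spell out how τ enters subband-specific coding, so the following is only an illustration. It computes a one-level 3D Haar transform of a volume, measures each of the 8 subbands' share of the total energy, and applies a selection rule. Haar is swapped in as the simplest orthonormal wavelet; the authors' wavelet filter is not stated here. The selection rule, `select_by_energy`, is hypothetical: it keeps the highest-energy subbands until their cumulative share reaches τ = 0.5. Treat it as an assumption, not the authors' criterion.

```python
import numpy as np


def haar_split(v: np.ndarray, axis: int):
    """One orthonormal Haar analysis step along `axis` -> (low, high)."""
    v = np.moveaxis(v, axis, 0)
    even, odd = v[0::2], v[1::2]
    low = (even + odd) / np.sqrt(2.0)
    high = (even - odd) / np.sqrt(2.0)
    return np.moveaxis(low, 0, axis), np.moveaxis(high, 0, axis)


def haar3d_subbands(vol: np.ndarray) -> dict:
    """One-level separable 3D Haar transform -> 8 subbands keyed 'LLL' ... 'HHH'."""
    bands = {"": vol}
    for axis in range(3):
        bands = {key + tag: part
                 for key, arr in bands.items()
                 for tag, part in zip("LH", haar_split(arr, axis))}
    return bands


def energy_fractions(bands: dict) -> dict:
    """Share of the total energy (sum of squares) held by each subband."""
    energies = {k: float(np.sum(b ** 2)) for k, b in bands.items()}
    total = sum(energies.values())
    return {k: e / total for k, e in energies.items()}


def select_by_energy(fractions: dict, tau: float = 0.5) -> list:
    """HYPOTHETICAL rule: keep the highest-energy subbands until their
    cumulative share reaches tau."""
    chosen, acc = [], 0.0
    for key, frac in sorted(fractions.items(), key=lambda kv: kv[1], reverse=True):
        if acc >= tau:
            break
        chosen.append(key)
        acc += frac
    return chosen


rng = np.random.default_rng(0)
# Smooth stand-in volume (cumulative sums along the last axis), 32 x 32 x 64.
vol = np.cumsum(rng.standard_normal((32, 32, 64)), axis=2)
fracs = energy_fractions(haar3d_subbands(vol))
print(select_by_energy(fracs, tau=0.5))
```

The Haar step is orthonormal, so the subband energies sum to the energy of the input volume. This keeps the fractions well defined.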

The authors analyze the performance of the algorithms in terms of the compression ratio

$$\mathrm{CR} = \frac{L_{\mathrm{orig}}}{L_{\mathrm{comp}}}, \tag{8}$$

where $L_{\mathrm{orig}}$ and $L_{\mathrm{comp}}$ are the bit lengths of the original and compressed multi-channel EEG, respectively.
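Eq. (8) reduces to simple bit accounting. The sketch assumes raw samples are stored at Q bits each. Q = 12 and the 65,536-bit compressed size below are hypothetical numbers, chosen only to make the arithmetic easy to follow. In practice, L_comp would be the measured length of the encoded bitstream.

```python
def original_bits(n_channels: int, n_samples: int, q_bits: int) -> int:
    """Bit length of raw EEG when every sample is stored with q_bits bits (assumed)."""
    return n_channels * n_samples * q_bits


def compression_ratio(l_orig: int, l_comp: int) -> float:
    """Eq. (8): CR = L_orig / L_comp, both in bits."""
    if l_comp <= 0:
        raise ValueError("compressed length must be positive")
    return l_orig / l_comp


l_orig = original_bits(64, 1024, 12)     # 786432 bits for one 64-channel segment
print(compression_ratio(l_orig, 65536))  # 12.0 for a hypothetical 65,536-bit stream
```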