...• Decision update Researchers are exploring the use of online or incremental learning approaches to improve the decision boundary of the classifiers even in deployment phase [32,33]....

[...]

111 citations

85 citations

68 citations

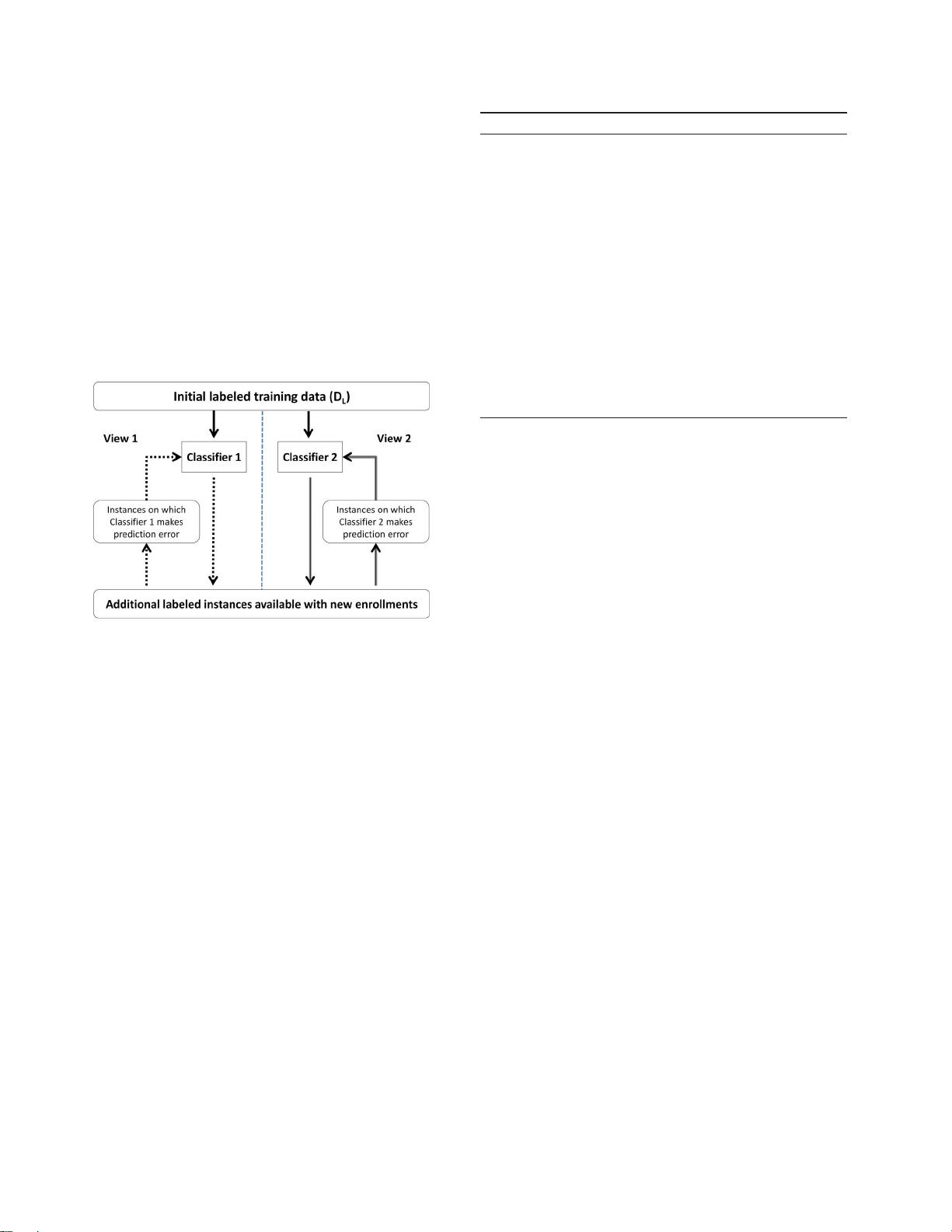

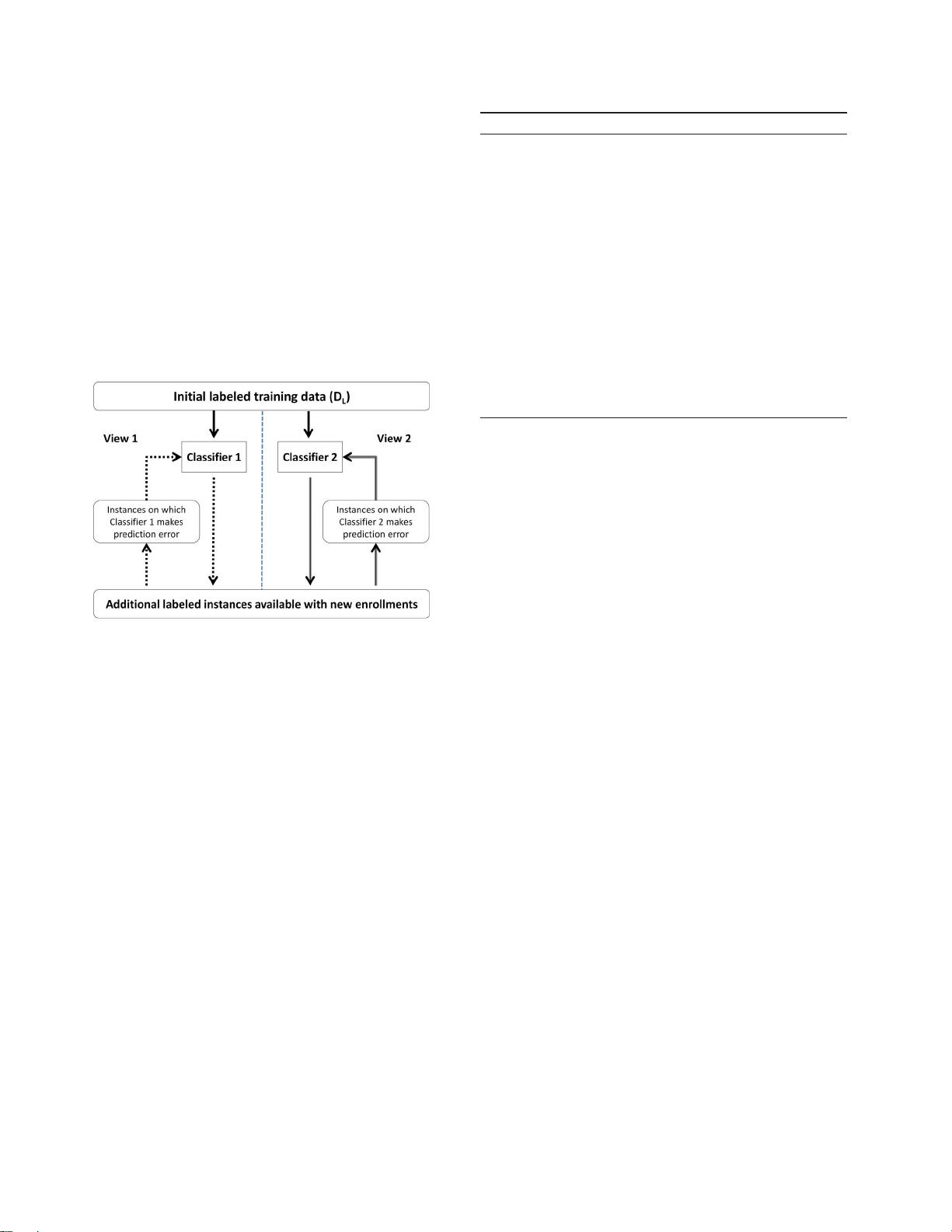

...Classifier update using co-training is explored by Bhatt et al. [38] where the biometric classifiers are updated using labeled as well as unlabeled instances....

[...]

...Besides subject-specific incremental improvements, new quality-controlled data can also be employed to improve biometric models via online learning [80][68]....

[...]

...In our experiments, SURF and UCLBP had genuine Pearson’s correlation of 0.58 and impostor Pearson’s correlation of 0.46....

[...]

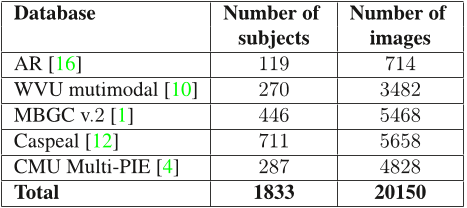

...UCLBP and SURF are used for facial feature extraction because they are fast, discriminating, rotation invariant, and robust to changes in gray level intensities due to illumination variations....

[...]

...Point-based Speeded Up Robust Features (SURF) [6] and texture-based Uniform Circular Local Binary Pattern (UCLBP) [3] are used as facial feature extractors along with χ² distance for matching....

[...]

...Two SVM classifiers, one for SURF (classifier1) and another for UCLBP (classifier2), are trained to classify the scores as “genuine” or “impostor”....

[...]

...Online learning [18] and co-training [7] are used to update the classifiers in real time and make them scalable....

[...]

...Blum and Mitchell [7] showed that two classifiers should have sufficient individual accuracy and should be conditionally independent of each other....

[...]

...In co-training, as proposed by Blum and Mitchell [7], two classifiers that are trained on separate views (features), co-train each other based on their confidence in predicting the labels....

[...]

As future work, the proposed framework can be extended to different stages of a biometric system that require regular updates. The authors also plan to incorporate the quality of the given gallery-probe pair in computing the confidence of prediction rather than making a decision based only on the distance from the hyperplane.

In the proposed framework, co-training leverages the availability of multiple classifiers and unlabeled instances to update the decision boundaries of both classifiers and to account for the wide intra-class variations introduced by the probe set.

During probe verification, whenever a user queries the system, co-training is used to update the classifiers using the unlabeled data.

For online learning, during enrolment, classifier1 was updated using 22,145 instances and classifier2 was updated using 31,846 instances.

New enrolments can lead to variations in genuine and impostor score distributions while probe images may introduce wide intra-class variations (due to temporal changes).

To maintain performance and to accommodate the variations caused by new enrolments and probes, biometric systems generally require re-training.

Online SVM classifiers have the significant advantage of reduced re-training time, because only the new sample points are used to update the decision boundary.

Since the corresponding labels (“genuine” or “impostor”) are available during enrolment, classifier update using online learning can be viewed as a supervised learning approach.

Unlabeled information obtained at probe level can be used to update the classifier using co-training.

In the SVM formulation, a mapping function maps the data space to the feature space, and $C$ is the trade-off parameter between the permissible error in the samples and the margin.
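For reference, the role of the trade-off parameter can be read off the standard soft-margin SVM primal. This is the textbook formulation, given here as an assumption rather than as the exact equation used by the authors; the symbols $\mathbf{w}$, $b$, $\xi_i$ and $\varphi$ are assumed notation, and $z_i \in \{+1, -1\}$ stands for the genuine or impostor label of instance $\mathbf{x}_i$.

$$
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \; \frac{1}{2}\lVert \mathbf{w} \rVert^{2} + C \sum_{i=1}^{N} \xi_i
\quad \text{subject to} \quad
z_i\left(\mathbf{w}^{\top} \varphi(\mathbf{x}_i) + b\right) \ge 1 - \xi_i, \qquad \xi_i \ge 0 .
$$

A larger $C$ penalises the slack terms $\xi_i$ more heavily and so tolerates fewer training errors, while a smaller $C$ favours a wider margin.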

Kim et al. [14] have shown that online learning algorithms can be used for biometric score fusion to address the computational problems that arise as the number of users increases.

- Iterate: $j = 1$ to number of views (number of classifiers)
  - Process: train classifier $c_j$ on the $j$th view of $D_L$
  - for $i = 1$ to $N$ do
    - Predict label: $c_j(x_{i,j}) \rightarrow y_i$
    - if $y_i \neq z_i$ then update $c_j$ with the labeled instance $\{x_{i,j}, z_i\}$
  - end for
- End iterate
- Output: updated classifiers $c_1$ and $c_2$.
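The mistake-driven update loop above can be sketched in Python. This is a minimal illustration, not the authors' implementation: `OnlineLinearClassifier` is a hypothetical stand-in for an online SVM (a linear model trained with single-sample hinge-loss steps), and the learning rate, regularisation constant and label encoding (+1 genuine, -1 impostor) are all assumptions.

```python
class OnlineLinearClassifier:
    """Hypothetical stand-in for an online SVM: a linear model updated
    one sample at a time with regularised hinge-loss steps."""

    def __init__(self, n_features, lr=0.1, reg=0.01):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = lr      # assumed learning rate
        self.reg = reg    # assumed regularisation strength

    def decision(self, x):
        # Signed score; its sign gives the predicted class.
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def predict(self, x):
        return 1 if self.decision(x) >= 0 else -1

    def update(self, x, z):
        # Compute the margin before touching the weights.
        margin = z * self.decision(x)
        # Weight decay, then a hinge-loss step if the margin is violated.
        self.w = [wi * (1 - self.lr * self.reg) for wi in self.w]
        if margin < 1:
            self.w = [wi + self.lr * z * xi for wi, xi in zip(self.w, x)]
            self.b += self.lr * z


def online_update(classifier, instances, labels):
    """Update the classifier only on instances it currently mislabels.

    Returns the number of instances that triggered an update.
    """
    n_updates = 0
    for x, z in zip(instances, labels):
        if classifier.predict(x) != z:
            classifier.update(x, z)
            n_updates += 1
    return n_updates
```

In a two-view setting, `online_update` would be called once per classifier, each with its own view of the newly enrolled, labeled instances. Because correctly classified instances are skipped, the number of updates is usually smaller than the number of instances seen.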

For the proposed framework, classifier1 was updated on 34,086 instances and classifier2 was updated on 42,102 instances using co-training during probe verification.

In co-training, as proposed by Blum and Mitchell [7], two classifiers trained on separate views (features) co-train each other based on their confidence in predicting the labels.

By varying the confidence threshold for a classifier, the number of sample points on which co-training is performed can be controlled.
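The effect of the confidence threshold can be illustrated with a short Python sketch. It is a simplified illustration under stated assumptions, not the authors' procedure: confidence is taken to be the absolute distance from the decision boundary, a classifier is updated only when the other classifier is confident and it is not, and `LinearScorer` is a hypothetical minimal classifier introduced only to make the example self-contained.

```python
class LinearScorer:
    """Hypothetical minimal linear classifier with an online update."""

    def __init__(self, w, b, lr=0.1):
        self.w, self.b, self.lr = list(w), b, lr

    def decision(self, x):
        return sum(wi * xi for wi, xi in zip(self.w, x)) + self.b

    def update(self, x, label):
        # Hinge-loss step: move only if the margin is violated.
        if label * self.decision(x) < 1:
            self.w = [wi + self.lr * label * xi for wi, xi in zip(self.w, x)]
            self.b += self.lr * label


def co_train(clf1, clf2, view1, view2, threshold=1.0):
    """One co-training pass over unlabeled instances.

    view1[i] and view2[i] are the two feature views of instance i.
    Returns [updates to clf1, updates to clf2].
    """
    updates = [0, 0]
    for x1, x2 in zip(view1, view2):
        d1, d2 = clf1.decision(x1), clf2.decision(x2)
        confident1 = abs(d1) >= threshold
        confident2 = abs(d2) >= threshold
        if confident1 and not confident2:
            # clf1 supplies a pseudo-label for clf2.
            clf2.update(x2, 1 if d1 >= 0 else -1)
            updates[1] += 1
        elif confident2 and not confident1:
            # clf2 supplies a pseudo-label for clf1.
            clf1.update(x1, 1 if d2 >= 0 else -1)
            updates[0] += 1
    return updates
```

Raising `threshold` makes fewer predictions count as confident, so fewer pseudo-labeled instances are used for updates; lowering it has the opposite effect, at the risk of training on wrong pseudo-labels.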