When used effectively, flexible macroblock ordering can significantly enhance robustness to data losses by managing the spatial relationship between the regions that are coded in each slice.
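To make the idea concrete, here is a minimal sketch of a dispersed (checkerboard-like) slice group map and a rough check of how many macroblocks in a lost slice group still have a correctly received neighbour to conceal from. The map formula follows our reading of the dispersed map type (slice_group_map_type 1) in the H.264/AVC specification and should be checked against the spec. The function names and the neighbour-count metric are hypothetical illustrations; actual error concealment is not shown.

```python
def dispersed_slice_group_map(width_mbs, height_mbs, num_groups):
    """Assign each macroblock (raster order) to a slice group in a dispersed pattern.

    Modeled on the dispersed map type; with two groups this yields a checkerboard.
    """
    return [((i % width_mbs) + ((i // width_mbs) * num_groups) // 2) % num_groups
            for i in range(width_mbs * height_mbs)]


def fraction_with_received_neighbour(group_map, width_mbs, lost_group):
    """Fraction of macroblocks in `lost_group` that have at least one 4-neighbour
    belonging to another (assumed received) slice group. Hypothetical metric."""
    height_mbs = len(group_map) // width_mbs
    lost = [i for i, g in enumerate(group_map) if g == lost_group]
    covered = 0
    for i in lost:
        y, x = divmod(i, width_mbs)
        for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            ny, nx = y + dy, x + dx
            if (0 <= ny < height_mbs and 0 <= nx < width_mbs
                    and group_map[ny * width_mbs + nx] != lost_group):
                covered += 1
                break
    return covered / len(lost)


checker = dispersed_slice_group_map(4, 4, 2)
halves = [0] * 8 + [1] * 8  # top half in group 0, bottom half in group 1
print(fraction_with_received_neighbour(checker, 4, 0))  # every lost MB has a neighbour
print(fraction_with_received_neighbour(halves, 4, 0))   # only the boundary row does
```

With the dispersed map, every macroblock of a lost group is surrounded by macroblocks from the other group, which is what makes spatial concealment effective; a contiguous split leaves most lost macroblocks without any received neighbour.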

Other services operate at lower bit rates and are distributed via file transfer, so they impose no delay constraints at all. Depending on various other system requirements, they can potentially be served by any of the three profiles. Such services include:

- 3GPP multimedia messaging services;
- video mail.

For transmitting the quantized transform coefficients, H.264/AVC employs a method that is more efficient than the single universal codeword table used for the other syntax elements: Context-Adaptive Variable Length Coding (CAVLC).
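The sketch below shows the symbols that CAVLC derives from a zigzag-scanned 4x4 block: the number of nonzero coefficients (TotalCoeff), the number of trailing ±1 values at the high-frequency end (TrailingOnes, capped at 3), the number of zeros before the last nonzero coefficient (TotalZeros), and the zero runs between coefficients (run_before). It assumes the 4x4 frame zigzag scan and omits the actual variable-length tables, the context-dependent table selection, and level coding; the function names are hypothetical.

```python
# Raster index (row * 4 + col) of each position in the 4x4 frame zigzag scan.
ZIGZAG_4X4 = [0, 1, 4, 8, 5, 2, 3, 6, 9, 12, 13, 10, 7, 11, 14, 15]


def zigzag(block):
    """Reorder a 4x4 block (list of rows) into zigzag scan order."""
    flat = [v for row in block for v in row]
    return [flat[i] for i in ZIGZAG_4X4]


def cavlc_symbols(scan):
    """Return (TotalCoeff, TrailingOnes, TotalZeros, run_before list).

    run_before is listed in reverse scan order (highest frequency first); the
    run for the lowest-frequency coefficient is implied and therefore omitted.
    """
    nz = [i for i, c in enumerate(scan) if c != 0]
    total_coeff = len(nz)
    trailing_ones = 0
    for i in reversed(nz):
        if abs(scan[i]) != 1 or trailing_ones == 3:
            break
        trailing_ones += 1
    total_zeros = nz[-1] + 1 - total_coeff if nz else 0
    run_before = [nz[k] - nz[k - 1] - 1 for k in range(len(nz) - 1, 0, -1)]
    return total_coeff, trailing_ones, total_zeros, run_before


block = [[0, 3, -1, 0],
         [0, -1, 1, 0],
         [1, 0, 0, 0],
         [0, 0, 0, 0]]
print(cavlc_symbols(zigzag(block)))
```

The small counts and short runs that result from typical quantized residuals are what the context-adaptive VLC tables are designed to code compactly.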

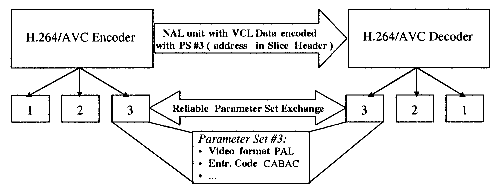

The sequence and picture parameter-set mechanism decouples the transmission of infrequently changing information from the transmission of coded representations of the values of the samples in the video pictures.
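A minimal sketch of this decoupling, assuming a simplified data model: each slice header carries only a picture parameter set identifier, the picture parameter set in turn points to a sequence parameter set, and the decoder resolves both from a store that may have been filled well before the slice arrives (for example over a more reliable channel). The class and field names are hypothetical and carry only a few illustrative fields, not the standard's full syntax.

```python
from dataclasses import dataclass


@dataclass
class SeqParamSet:
    sps_id: int
    width_mbs: int    # picture width in macroblocks (simplified field)
    height_mbs: int   # picture height in macroblocks (simplified field)


@dataclass
class PicParamSet:
    pps_id: int
    sps_id: int                # reference to the sequence parameter set
    entropy_coding_mode: int   # 0 = CAVLC, 1 = CABAC


@dataclass
class SliceHeader:
    pps_id: int     # the only parameter-set information carried per slice
    first_mb: int


class ParamSetStore:
    """Holds parameter sets received ahead of (or apart from) the slice data."""

    def __init__(self):
        self.sps = {}
        self.pps = {}

    def put_sps(self, sps):
        self.sps[sps.sps_id] = sps

    def put_pps(self, pps):
        self.pps[pps.pps_id] = pps

    def activate(self, header):
        """Resolve slice -> PPS -> SPS; raises KeyError if a set was never received."""
        pps = self.pps[header.pps_id]
        return self.sps[pps.sps_id], pps


store = ParamSetStore()
store.put_sps(SeqParamSet(sps_id=0, width_mbs=22, height_mbs=18))
store.put_pps(PicParamSet(pps_id=0, sps_id=0, entropy_coding_mode=1))
sps, pps = store.activate(SliceHeader(pps_id=0, first_mb=0))
print(sps.width_mbs * 16, sps.height_mbs * 16)  # picture size in luma samples
```

Because the slice carries only a small identifier, the rarely changing parameters are sent once rather than repeated in every slice.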

The samples at quarter-sample positions labeled a, c, d, n, f, i, k, and q are derived by averaging, with upward rounding, the two nearest samples at integer and half-sample positions, for example:

$$a = (G + b + 1) \gg 1$$

The samples at quarter-sample positions labeled e, g, p, and r are derived by averaging, with upward rounding, the two nearest samples at half-sample positions in the diagonal direction, for example:

$$e = (b + h + 1) \gg 1$$

The prediction values for the chroma component are always obtained by bilinear interpolation.
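The two rules above can be sketched as follows. The quarter-sample function is the "average with upward rounding" of two neighbouring values (integer or half-sample), and the chroma function is a bilinear weighting of the four surrounding integer chroma samples at eighth-sample precision, which assumes 4:2:0 sampling. Function names are hypothetical, and the half-sample inputs are assumed to have been computed already.

```python
def quarter_sample(p, q):
    """Average with upward rounding of two neighbouring samples, e.g. (G + b + 1) >> 1."""
    return (p + q + 1) >> 1


def chroma_bilinear(A, B, C, D, x_frac, y_frac):
    """Bilinear chroma prediction at eighth-sample offsets (assumes 4:2:0).

    A, B are the top-left/top-right and C, D the bottom-left/bottom-right
    integer chroma samples; x_frac, y_frac are in 0..7.
    """
    return ((8 - x_frac) * (8 - y_frac) * A
            + x_frac * (8 - y_frac) * B
            + (8 - x_frac) * y_frac * C
            + x_frac * y_frac * D
            + 32) >> 6


print(quarter_sample(100, 101))              # rounds the half up
print(chroma_bilinear(10, 20, 30, 40, 4, 4))  # centre of the four samples
```

Because the weights sum to 64, the shift by 6 with an offset of 32 rounds the weighted average to the nearest integer.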

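In prior standards, pictures encoded with certain methods (namely, bi-predictively encoded pictures) could not be used as references for the prediction of other pictures in the video sequence. H.264/AVC removes this restriction, giving the encoder more flexibility in choosing reference pictures.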

The prediction values at half-sample positions are obtained by applying a one-dimensional 6-tap FIR filter, with tap values (1, -5, 20, 20, -5, 1), horizontally and vertically.
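A minimal sketch of the luma half-sample filter, assuming 8-bit samples. For a half-sample position between two horizontal (or vertical) neighbours, the six taps are applied to the three integer samples on each side, then the result is rounded with (b1 + 16) >> 5 and clipped to the sample range. For the centre position between four integer samples, the vertical filter is applied to the unrounded horizontal intermediates and the result is rounded with (j1 + 512) >> 10. Function names are hypothetical, and picture-boundary padding is not handled.

```python
TAPS = (1, -5, 20, 20, -5, 1)


def clip1(x, bit_depth=8):
    """Clip to the valid sample range [0, 2**bit_depth - 1]."""
    return max(0, min((1 << bit_depth) - 1, x))


def half_sample(row, x):
    """Half-sample value between row[x] and row[x + 1]; uses row[x - 2] .. row[x + 3]."""
    b1 = sum(t * row[x - 2 + k] for k, t in enumerate(TAPS))
    return clip1((b1 + 16) >> 5)


def centre_half_sample(block, y, x):
    """Centre value between rows y, y + 1 and columns x, x + 1.

    Filters rows y - 2 .. y + 3 horizontally without rounding, then filters
    those intermediates vertically and rounds once at the end.
    """
    inter = [sum(t * block[y - 2 + r][x - 2 + k] for k, t in enumerate(TAPS))
             for r in range(6)]
    j1 = sum(t * v for t, v in zip(TAPS, inter))
    return clip1((j1 + 512) >> 10)


print(half_sample([0, 0, 0, 255, 255, 255], 2))  # edge between 0 and 255
print(half_sample([0, 0, 0, 0, 255, 255], 2))    # negative lobe is clipped to 0
```

Deferring the rounding for the centre position keeps the full intermediate precision of the first filtering pass.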

The H.264/AVC standard enables this in two ways: 1) by using a hierarchical transform to extend the effective block size used for low-frequency chroma information to an 8×8 array, and 2) by allowing the encoder to select a special coding type for intra coding, which extends the length of the luma transform for low-frequency information to a 16×16 block size in a manner very similar to that applied to the chroma.
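The chroma case can be sketched as follows. An 8×8 chroma residual is split into four 4×4 blocks, each block is transformed with the 4×4 forward integer core transform, and the four resulting DC coefficients are then transformed again with a 2×2 Hadamard transform. This is a simplified illustration: the scaling and quantization that follow in a real encoder are omitted, and the helper names are hypothetical.

```python
# 4x4 forward integer core transform matrix used in H.264/AVC.
CF = [[1, 1, 1, 1],
      [2, 1, -1, -2],
      [1, -1, -1, 1],
      [1, -2, 2, -1]]

H2 = [[1, 1],
      [1, -1]]


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(m):
    return [list(r) for r in zip(*m)]


def core_transform_4x4(x):
    """Forward core transform CF * X * CF^T (no scaling)."""
    return matmul(matmul(CF, x), transpose(CF))


def chroma_dc_hadamard(block8):
    """Collect the DC terms of the four 4x4 sub-blocks of an 8x8 block and
    apply the 2x2 Hadamard transform to them."""
    dc = [[0, 0], [0, 0]]
    for by in range(2):
        for bx in range(2):
            sub = [row[4 * bx:4 * bx + 4] for row in block8[4 * by:4 * by + 4]]
            dc[by][bx] = core_transform_4x4(sub)[0][0]
    return matmul(matmul(H2, dc), H2)


flat = [[1] * 8 for _ in range(8)]
print(chroma_dc_hadamard(flat))  # all energy in a single coefficient
```

For a smooth 8×8 region the second stage compacts the four DC terms into essentially one coefficient, which is the benefit of the larger effective block size.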

While arithmetic coding was previously available as an optional feature of H.263, H.264/AVC makes more effective use of this technique to create a very powerful entropy coding method known as CABAC (context-adaptive binary arithmetic coding).
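The sketch below illustrates, in a deliberately simplified form, the two ideas behind CABAC's name: non-binary symbols are first binarized, and each bin is then coded with a probability model chosen by context and updated as data is coded. Several simplifications apply. The sketch uses plain unary binarization and a count-based estimator instead of the standard's binarization schemes and its 64-state probability state machine. It reports the ideal code length, which is what an ideal arithmetic coder would approach, rather than running an actual arithmetic coder. All names are hypothetical.

```python
import math


def unary_binarize(value):
    """Unary binarization: value v becomes v ones followed by a terminating zero."""
    return [1] * value + [0]


class AdaptiveBinaryContext:
    """Count-based probability estimate for one context (simplified stand-in)."""

    def __init__(self):
        self.counts = [0.5, 0.5]  # pseudo-counts for bin values 0 and 1

    def cost_and_update(self, bin_value):
        """Return the ideal cost in bits of coding bin_value, then adapt."""
        p = self.counts[bin_value] / (self.counts[0] + self.counts[1])
        self.counts[bin_value] += 1
        return -math.log2(p)


def ideal_adaptive_bits(symbols, num_contexts=3):
    """Ideal bit cost of coding the symbols; context chosen by bin index."""
    contexts = [AdaptiveBinaryContext() for _ in range(num_contexts)]
    bits = 0.0
    for v in symbols:
        for i, b in enumerate(unary_binarize(v)):
            bits += contexts[min(i, num_contexts - 1)].cost_and_update(b)
    return bits


print(ideal_adaptive_bits([0] * 100))  # far fewer than 100 bits
```

A fixed code would spend at least one bit per bin, here 100 bits, whereas the adaptive model learns that zeros dominate and drives the cost per bin well below one bit. Arithmetic coding is what allows such fractional bit costs to be realized.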

In other applications (see Fig. 3), it can be advantageous to convey the parameter sets “out-of-band” using a more reliable transport mechanism than the video channel itself.