Because manual binding minimizes remote memory accesses, and local memory offers higher bandwidth and lower latency than remote memory, some workloads benefit from it.

When processes are not bound to specific NUMA nodes, the OS makes all placement decisions, including reactive migrations to balance the load.

Because main memory is connected to the processor through separate links for read and write operations (two links for memory reads and one link for memory writes), the system has asymmetric read and write bandwidth.
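To make the asymmetry concrete, the sketch below models aggregate bandwidth for a given read/write mix. It is a simplified model under stated assumptions, not a description of the actual hardware: each of the three links is assumed to have the same bandwidth `link_bw`, and the links are assumed to operate independently and in parallel. The function name is hypothetical.

```python
def effective_bandwidth(read_fraction: float, link_bw: float) -> float:
    """Aggregate bandwidth (same unit as link_bw) for a given read/write mix.

    Simplified model (assumption): two read links and one write link, all with
    the same per-link bandwidth link_bw, working independently in parallel.
    """
    if not 0.0 <= read_fraction <= 1.0:
        raise ValueError("read_fraction must be in [0, 1]")
    if link_bw <= 0:
        raise ValueError("link_bw must be positive")
    read_capacity = 2 * link_bw   # two links serve reads
    write_capacity = 1 * link_bw  # one link serves writes
    # Time to move one unit of traffic is bounded by the busier side.
    time_per_unit = max(read_fraction / read_capacity,
                        (1.0 - read_fraction) / write_capacity)
    return 1.0 / time_per_unit
```

Under this model a read-only stream can use 2x the per-link bandwidth and a write-only stream only 1x, while a 2:1 read/write mix keeps all three links busy and reaches 3x.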

Most of the real-time information from the experimental runs has been collected from hardware counters using the perfmon2 [19] library.

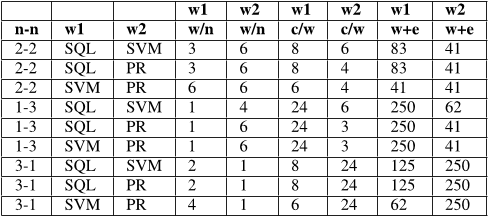

The numactl command has been used to bind a workload to a set of CPUs or to memory regions (e.g., a NUMA node). Binding configurations are named with the nB nomenclature, where n is the number of assigned NUMA nodes: 1B for workloads bound to one NUMA node, 2B for workloads bound to two NUMA nodes, and so on.
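A minimal sketch of how an nB binding could be expressed as a numactl invocation, binding both CPUs (`--cpunodebind`) and memory (`--membind`) to the same set of nodes. The helper names and the `./run-workload.sh` command are hypothetical placeholders; the exact numactl options used in the experiments are not stated in the text.

```python
import shlex
from typing import List, Sequence


def numactl_command(nodes: Sequence[int], workload: Sequence[str]) -> List[str]:
    """Build a numactl command that binds CPUs and memory to `nodes`.

    --cpunodebind restricts execution to the CPUs of the given NUMA nodes;
    --membind restricts memory allocation to those same nodes.
    """
    if not nodes:
        raise ValueError("at least one NUMA node is required")
    node_list = ",".join(str(n) for n in sorted(set(nodes)))
    return ["numactl", f"--cpunodebind={node_list}", f"--membind={node_list}", *workload]


def binding_label(nodes: Sequence[int]) -> str:
    """nB nomenclature: n is the number of distinct NUMA nodes assigned."""
    return f"{len(set(nodes))}B"


if __name__ == "__main__":
    # Hypothetical 2B configuration: bind to NUMA nodes 0 and 1.
    cmd = numactl_command([0, 1], ["./run-workload.sh"])
    print(binding_label([0, 1]), shlex.join(cmd))
```

The list form can be passed directly to `subprocess.run`, which avoids shell quoting issues.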

In particular, for each evaluated pair of workloads, the authors run both of them in a continuous loop for 90 minutes; the first 15 minutes are treated as a warm-up period and the last 15 minutes as a cool-down period, leaving a 60-minute measurement window.
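The trimming rule can be sketched as a filter over timestamped samples. This assumes samples are `(minute, value)` pairs measured from the start of the run; the function name and sample format are illustrative assumptions.

```python
from typing import List, Tuple

RUN_MINUTES = 90
WARMUP_MINUTES = 15
COOLDOWN_MINUTES = 15


def measurement_window(samples: List[Tuple[float, float]]) -> List[Tuple[float, float]]:
    """Keep only (minute, value) samples between warm-up and cool-down."""
    start = WARMUP_MINUTES
    end = RUN_MINUTES - COOLDOWN_MINUTES  # minute 75
    return [(t, v) for t, v in samples if start <= t < end]
```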

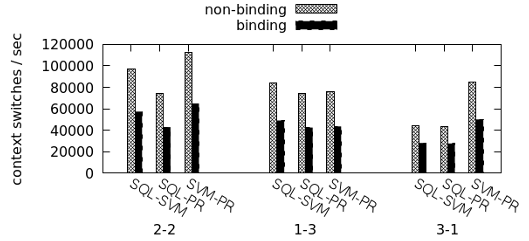

The results show that binding Spark processes to specific NUMA nodes can speed up the completion time of co-located workloads by up to 1.39x, owing to reduced interconnect traffic, fewer remote memory accesses, fewer context switches, and lower CPI.
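For reference, the metrics named above can be derived from run times and raw counter totals as sketched below. The counter inputs (cycles, instructions, local and remote accesses) are generic placeholders, not the specific perfmon2 event names used in the study, and the values in the usage note are illustrative only.

```python
def speedup(baseline_seconds: float, bound_seconds: float) -> float:
    """Completion-time speedup of a bound run over an unbound baseline."""
    return baseline_seconds / bound_seconds


def cpi(cycles: int, instructions: int) -> float:
    """Cycles per instruction from raw hardware-counter totals."""
    return cycles / instructions


def remote_access_ratio(remote_accesses: int, local_accesses: int) -> float:
    """Fraction of memory accesses served by a remote NUMA node."""
    total = remote_accesses + local_accesses
    return remote_accesses / total if total else 0.0
```

For example, a hypothetical workload finishing in 100 s when bound versus 139 s unbound would have `speedup(139.0, 100.0)` of 1.39.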

As can be observed, SVM is constrained by its high CPU usage: user CPU time alone reaches around 80%, and adding system and wait CPU time brings total CPU usage to about 100%, which is the actual performance bottleneck.
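A breakdown of this kind can be obtained on Linux from two snapshots of the aggregate `cpu` line in `/proc/stat`. The sketch below assumes that source (the text does not say how CPU time was sampled) and sums user, system, and iowait time, mirroring the sum described above. The snapshot strings in the test are made up.

```python
from typing import Dict, List

# Field order of the aggregate "cpu" line in Linux /proc/stat (in jiffies).
FIELDS = ["user", "nice", "system", "idle", "iowait", "irq", "softirq", "steal"]


def parse_cpu_line(line: str) -> List[int]:
    parts = line.split()
    if parts[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    return [int(x) for x in parts[1:1 + len(FIELDS)]]


def cpu_breakdown(before: str, after: str) -> Dict[str, float]:
    """Percentage of time spent in each state between two snapshots."""
    deltas = [b - a for a, b in zip(parse_cpu_line(before), parse_cpu_line(after))]
    total = sum(deltas)
    pct = {name: 100.0 * d / total for name, d in zip(FIELDS, deltas)}
    # User + system + wait, as summed in the analysis of SVM.
    pct["user_system_wait"] = pct["user"] + pct["system"] + pct["iowait"]
    return pct
```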

For 4B, the optimal configurations are 12 cores per worker and 1 worker per node for SQL, 8 cores per worker and 3 workers per node for SVM, and 6 cores per worker and 3 workers per node for PageRank.
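The total number of cores (and thus task threads) each layout requests can be computed as cores per worker × workers per node × nodes. This sketch assumes that "node" here means a NUMA node and that each core runs one task thread; both are assumptions about the setup. Under that reading, the SQL layout totals 48 cores.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SparkLayout:
    cores_per_worker: int
    workers_per_node: int
    numa_nodes: int  # n in the nB nomenclature

    def total_cores(self) -> int:
        # Assumption: one task thread per core, workers replicated per NUMA node.
        return self.cores_per_worker * self.workers_per_node * self.numa_nodes


# Optimal 4B layouts as reported in the text.
OPTIMAL_4B = {
    "SQL": SparkLayout(cores_per_worker=12, workers_per_node=1, numa_nodes=4),
    "SVM": SparkLayout(cores_per_worker=8, workers_per_node=3, numa_nodes=4),
    "PageRank": SparkLayout(cores_per_worker=6, workers_per_node=3, numa_nodes=4),
}
```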

SQL is a more interesting case: CPU and memory bandwidth usage are very low in the fastest configuration, and no other resource appears to be acting as a bottleneck.

Some memory is intentionally left free for the OS and other processes (e.g., the Spark driver and master) to avoid slowdowns unrelated to NUMA effects.
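The reservation can be expressed as a simple budget: memory per worker is what remains on a node after the reserve, divided among its workers. The amounts actually reserved are not given in the text; the function name and the values in the test are hypothetical, and a per-node reserve is an assumption.

```python
def worker_memory_gb(node_memory_gb: float, reserved_gb: float, workers_per_node: int) -> float:
    """Memory available per worker after reserving some for the OS, driver and master."""
    if workers_per_node <= 0:
        raise ValueError("workers_per_node must be positive")
    if reserved_gb >= node_memory_gb:
        raise ValueError("reserve must be smaller than the node's memory")
    return (node_memory_gb - reserved_gb) / workers_per_node
```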

This final experiment explores the benefits of workload co-location and process binding (cores and memory) as mechanisms to improve system throughput and increase resource utilization.

Only a third of the hardware threads are in use, which is why the average CPU utilization appears low: many hardware threads are idle.

Workload co-location is well known to potentially slow down interference-sensitive applications; however, the impact of co-scheduling Spark workloads on NUMA systems is still not fully understood.

Using the formula (current nodes + new nodes) / current nodes, allocating one new NUMA node to an application that currently runs on one node yields a theoretical speedup of (1 + 1) / 1 = 2x.
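The formula can be written as a small function. It gives the ideal linear-scaling bound only, which measured speedups are not expected to reach exactly; the function name is illustrative.

```python
def theoretical_speedup(current_nodes: int, new_nodes: int) -> float:
    """Ideal speedup (current + new) / current when adding NUMA nodes."""
    if current_nodes <= 0 or new_nodes < 0:
        raise ValueError("need current_nodes > 0 and new_nodes >= 0")
    return (current_nodes + new_nodes) / current_nodes
```

Going from 1B to 2B gives 2.0, and so does going from 2B to 4B, while adding one node to a 2B workload gives only 1.5.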

These configurations use too few cores or workers to fully utilize the available compute resources, and therefore do not produce optimal results.

In practice, what prevents total CPU usage from going higher is that the number of threads spawned in this configuration (only 48) is well below the number of hardware threads the system offers.
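The ceiling this imposes can be estimated as spawned threads divided by available hardware threads, assuming each thread keeps at most one hardware thread busy. The machine's hardware-thread count is not restated here; the value 144 in the test is purely illustrative (it is the count at which 48 threads would occupy a third).

```python
def max_cpu_utilization(spawned_threads: int, hardware_threads: int) -> float:
    """Upper bound on average CPU utilization if each thread busies one hardware thread."""
    if hardware_threads <= 0:
        raise ValueError("hardware_threads must be positive")
    return min(spawned_threads, hardware_threads) / hardware_threads
```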