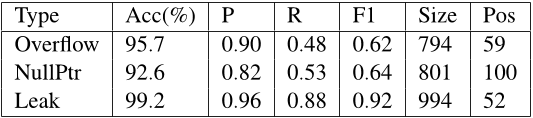

![Table 8.1: R2Fix Appendix: Fix Patterns. [[]] represents an optional part, and [[]]* means the pattern may appear 0 or more times. “Size” and “len” are often part of a buffer length variable name; once a buffer length variable is identified, the buffer length can be extracted simply by checking the right-hand side of “=”. To extract the line number for memory leak bugs, R2Fix takes the number after “:” or “at line” in the bug report. Note that the line number is required for only one memory leak subpattern and is not needed for any other subpattern.](/figures/table-8-1-r2fix-appendix-fix-patterns-represents-optional-3q0ogkro.png)
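
The line-number rule in the caption (take the number after “:” or “at line”) can be illustrated with a minimal C sketch. This is not R2Fix's implementation: the function name `extract_line_number`, the order in which the two markers are tried, and the `-1` return value are all assumptions made for illustration.

```c
#include <ctype.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical helper (not from R2Fix): returns the number that follows
   "at line" or ':' in a bug report, or -1 if no such number is found. */
long extract_line_number(const char *report) {
    /* Try the "at line <N>" form first (assumed priority). */
    const char *p = strstr(report, "at line");
    if (p != NULL) {
        p += strlen("at line");
        while (*p == ' ') {
            p++;  /* skip spaces between "at line" and the number */
        }
        if (isdigit((unsigned char)*p)) {
            return strtol(p, NULL, 10);
        }
    }
    /* Otherwise take the first ':' that is directly followed by a digit,
       as in "foo.c:128". */
    for (p = strchr(report, ':'); p != NULL; p = strchr(p + 1, ':')) {
        if (isdigit((unsigned char)p[1])) {
            return strtol(p + 1, NULL, 10);
        }
    }
    return -1;
}
```

For instance, under these assumptions a report containing `foo.c:128` yields 128, and one containing `at line 42` yields 42.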

127 citations

...Some techniques mine historical data [25, 28, 30] or analyze documents [27, 58] to rank the repair candidates....

[...]

...Some recent works leverage document analysis [27, 58], anti-patterns [53], test generation [57], and location selection with test pruning [38] to enhance repair....

[...]

...Existing automated repair techniques have utilized various supportive resources to improve the repair efficacy, such as historical data [25, 30], documents [27, 58], anti-patterns [53] and test generation [57]....

[...]

123 citations

...R2Fix closes the loop between bug report submission and patch generation by automatically classifying the type of bug discussed in a bug report and extracting pattern parameters to generate fixes based on several predefined fix patterns [38]....

[...]

120 citations

...If a bug report ID was found in a commit log, they consider the commit a bug-fixing commit for the bug report....

[...]

...However, several research questions have not been studied at all or in depth, and answers to these questions can guide the design of techniques and tools for addressing performance bugs in the following ways: • Based on maxims such as “premature optimization is the root of all evil” [27], it is…...

[...]

116 citations

...For example, Mozilla bug repository receives an average of 135 new bug reports each day ( Liu et al., 2013 )....

[...]

105 citations

...[20] learn from bug reports to fix bugs....

[...]

9,295 citations

...Following machine learning methods [33], classifiers are built using a small training set of manually labelled bug reports....

[...]

9,185 citations

...%) and precisions (86.0–90.0%)....

[...]

813 citations

...Mark Weiser studies programmers’ behavior and finds that programmers usually break apart large programs into slices for the ease of debugging [43]....

[...]

In the future, the authors plan to generate patches for new types of bug reports, and to extend R2Fix to take the output of existing bug detection tools as input to improve the effectiveness of patch generation.

R2Fix does not use the “Comment” fields of the bug reports, because the authors want to apply R2Fix as soon as a bug is reported, to maximize the time and effort that R2Fix can save developers in fixing bugs.

The authors estimate that it will take you approximately 3 minutes to complete this short survey.

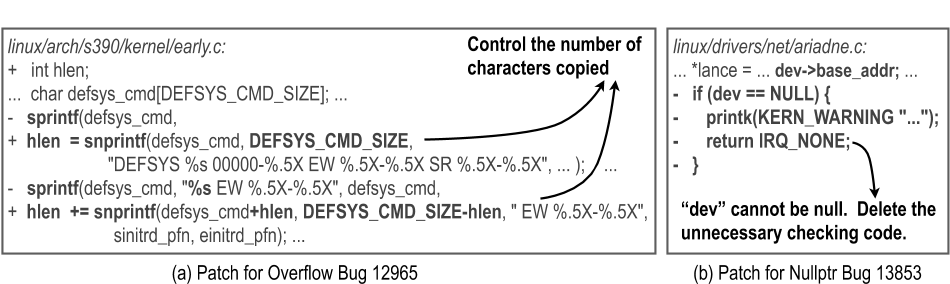

The patch deletes the line that writes 5 bytes to the buffer `state` (denoted by `- strcpy(state, "off ");`), and adds a new line that writes only 4 bytes to `state` (`+ strcpy(state, "off");`), which fixes the overflow bug.

The developers first need to understand this bug report by reading it together with the relevant code: the buffer `state` holds only 4 bytes, but 5 bytes, “off␣\0”, were written to it, where ␣ denotes one space character and the single character ‘\0’ is needed to mark the end of the string.
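
The byte counts in this example can be checked with a minimal C sketch. It assumes the buffer is declared as `char state[4]`, which the text implies but does not show; `bytes_needed` and `copy_if_fits` are hypothetical helpers introduced only for illustration, not part of R2Fix or of the patched code.

```c
#include <stddef.h>
#include <string.h>

/* Bytes that strcpy() writes for s: its characters plus the terminating '\0'. */
size_t bytes_needed(const char *s) {
    return strlen(s) + 1;
}

/* Hypothetical helper: copy src into dst only if it fits in dst_size bytes.
   Returns 1 on success, 0 if the copy would overflow the buffer. */
int copy_if_fits(char *dst, size_t dst_size, const char *src) {
    if (bytes_needed(src) > dst_size) {
        return 0;  /* e.g. "off " needs 5 bytes but the buffer has only 4 */
    }
    strcpy(dst, src);
    return 1;
}
```

With a 4-byte `state`, `copy_if_fits(state, sizeof state, "off ")` refuses the copy because the string with its trailing space needs 5 bytes, while `"off"` needs exactly 4 and fits, which is the change the patch makes.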

My dear mother, the first teacher and the role model in my life, gives me confidence to explore new things, especially in a different country far away from my homeland.

Developers’ bug-fixing process is primarily manual; therefore the time required for producing a fix and its accuracy depend on the skill and experience of individuals.

Developers often spend days, weeks, or even months diagnosing the root cause of a bug by reading the relevant source code, using a debugger to observe and modify the program execution on different inputs, etc.

After a developer determines the root cause, typically the developer needs to figure out how to modify the buggy code to fix the bug, check out the buggy version of the software, apply the fix, and generate the patch.

I am thankful to readers of the thesis, Prof. Patrick Lam and Prof. Mahesh V. Tripunitara, for spending their valuable time to review the thesis and give valuable comments.