Abstract— In this paper, model-based approaches for real-time 3D soccer ball tracking are proposed, using image sequences from multiple fixed cameras as input. The main challenges include filtering false alarms, tracking through missing observations, and estimating 3D positions from single or multiple cameras. The key innovations are: i) incorporating motion cues and temporal hysteresis thresholding in ball detection; ii) modeling each ball trajectory as curve segments in successive virtual vertical planes, so that the 3D position of the ball can be determined from a single camera view; iii) introducing four motion phases (rolling, flying, in possession, and out of play) and employing phase-specific models to estimate ball trajectories, which allows high-level semantics to be applied in low-level tracking. In addition, unreliable or missing ball observations are recovered using spatio-temporal constraints and temporal filtering. The accuracy and robustness of the system are evaluated by comparing the estimated ball positions and phases with manual ground-truth data from real soccer sequences.

Index Terms— Motion analysis, video signal processing, geometric modeling, tracking, multiple cameras, three-dimensional vision.

I. INTRODUCTION

With the development of computer vision and multimedia technologies, many important applications have been developed in automatic soccer video analysis and content-based indexing, retrieval and visualization [1-3]. By accurately tracking the players and the ball, a number of innovative applications can be derived for the automatic comprehension of sports events. These include annotation of video content, summarization, team strategy analysis and verification of referee decisions, as well as the 2D or 3D reconstruction and visualization of action [3-16]. More recent work on tracking players and the ball can also be found in [27-29].

Manuscript received Dec 20, 2005. This work was supported in part by the European Commission under Project IST-2001-37422.

J. Ren is with the School of Informatics, University of Bradford, BD7 1DP, U.K., on leave from the School of Computers, Northwestern Polytechnic University, Xi'an, 710072, China (email: j.ren@bradford.ac.uk; npurjc@yahoo.com).

J. Orwell and G. A. Jones are with the Digital Imaging Research Centre, Kingston University, Surrey, KT1 2EE, U.K. (email: j.orwell@kingston.ac.uk; g.jones@kingston.ac.uk).

M. Xu is with the Signal Processing Lab, Engineering Department, Cambridge University, CB2 1PZ, U.K. (email: mx204@cam.ac.uk).

Copyright (c) 2007 IEEE. Personal use of this material is permitted. However, permission to use this material for any other purposes must be obtained from the IEEE by sending an email to pubs-permissions@ieee.org.

In a soccer match, the ball is invariably the focus of attention. Players can be successfully detected and tracked on the basis of color and shape [1, 10, 12]. However, similar methods cannot be extended to ball detection and tracking, for several reasons. First, the ball is small and exhibits irregular shape, variable size and inconsistent color when moving rapidly, as illustrated in Figure 1. Second, the ball is frequently occluded by players or lies outside the fields of view (FOV) of all cameras, for example when it is kicked high into the air. Finally, the ball often leaves the ground surface, and its 3D position cannot be uniquely determined without measurements from at least two cameras with overlapping fields of view. Therefore, 3D ball position estimation and tracking is, arguably, the most important challenge in soccer video analysis. The problem investigated in this paper is automatic ball tracking from multiple fixed cameras.

A. Related Work

TV broadcast cameras and fixed cameras around the stadium are the two usual sources of soccer image streams. TV imagery generally provides high-resolution data of the ball in the image centre. However, the complex camera movements and partial views of the field make it hard to obtain accurate camera parameters for on-field ball positioning. Fixed cameras, on the other hand, are easily calibrated. Their wide-angle field of view makes ball detection more difficult, though, since the ball is often represented by only a small number of pixels.

In the soccer domain, fully automatic methods for limited scene understanding have been proposed. Examples include recognition of replays from cinematic features extracted from broadcast TV data [1] and detection of the ball in broadcast TV data [1, 2, 4-9]. Gong et al [1] used white color and circular shape to detect the ball in image sequences. In Yow et al [2], the ball is detected by template matching in each reference frame and then tracked between each pair of these reference frames. Seo et al [4] applied template matching and a Kalman filter to track the ball after manual initialization. Tong et al [5] employed indirect ball detection by eliminating non-ball regions using color and shape constraints. In Yamada et al [6], white regions are taken as ball candidates after players and field lines have been removed. In Yu et al [7, 8], candidate balls are first identified by size range, color and shape, and these candidates are then verified by trajectory mining with a Kalman filter. D'Orazio et al [9] detected the ball using a modified Hough transform along with a neural classifier.

Real-time Modeling of 3D Soccer Ball Trajectories from Multiple Fixed Cameras

Jinchang Ren, James Orwell, Graeme A Jones and Ming Xu

Fig. 1. Ball samples in various sizes, shapes and colors.

For soccer sequences from fixed cameras, the estimation and tracking of 3D ball positions usually involves two steps. First, the ball is detected and tracked in each single view independently. Then, 2D ball positions from different camera views are integrated to obtain 3D positions using known motion models [10-12]. Ohno et al arranged eight cameras to attain a full view of the pitch [10]. They modeled the 3D ball trajectory by considering air friction and gravity, and this model depends on an unknown initial velocity. Matsumoto et al [11] used four cameras in their optimized viewpoint determination system, which also applies template matching for ball detection. Bebie and Bieri [12] employed two cameras for soccer game reconstruction and modeled 3D trajectory segments with Hermite spline curves. However, about one-fifth of the ball positions must be set manually before estimation. In Kim et al [13] and Reid and North [14], reference players and shadows were used to estimate 3D ball positions. These methods are unlikely to be robust, because shadow positions depend more on light source positions than on camera projections.

B. Contributions of This Work

In this paper, a system is presented for model-based 3D ball tracking from real soccer videos. The main contributions can be summarized as follows.

Firstly, a motion-based thresholding process is used along with temporal filtering to detect the ball. This process has proved robust to the inevitable variations in ball color and size that result from its rapid movement. In addition, a probability measure is defined to capture the likelihood that any specific detected moving object represents the ball.

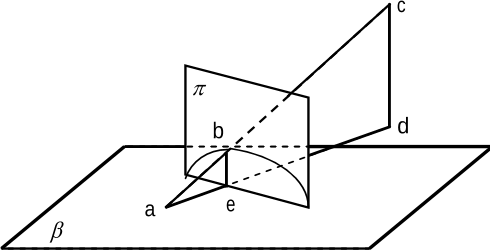

Secondly, the 3D ball motion is modeled as a series of planar curves, each residing in a vertical virtual plane (VVP). This allows geometry-based vision techniques to be used for 3D ball positioning. To determine each vertical plane, at least two observed positions of the ball with reliable height estimates are required. These reliable estimates are obtained either by recognizing a bounce on the ground in a single view, or by triangulating from multiple views. Based on these VVPs, the 3D ball positions are determined by projection in single camera views. Ball positions for frames without any valid observations are estimated by polynomial interpolation, so that a continuous 3D ball trajectory can be generated.
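The single-view use of a VVP can be illustrated with a minimal geometric sketch. Three inputs are assumed: the camera centre `camera` is known from calibration, `ground_pt` is the ground-plane point obtained from the bounding box under the $z = 0$ assumption (Section II-D), and `p1`, `p2` are the ground projections of two reliable ball positions. Because the ball lies somewhere on the line joining the camera centre and `ground_pt`, its 3D position is taken here as the intersection of that line with the vertical plane through `p1` and `p2`. The function names and this ray-plane formulation are illustrative assumptions, not the paper's exact implementation.

```python
from typing import Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def vvp_normal(p1: Vec2, p2: Vec2) -> Vec3:
    """Horizontal normal of the vertical plane through ground points p1 and p2."""
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    if dx == 0 and dy == 0:
        raise ValueError("p1 and p2 must be distinct to define a plane")
    # Perpendicular both to the in-plane direction (dx, dy, 0) and to the z-axis.
    return (-dy, dx, 0.0)


def ball_on_vvp(camera: Vec3, ground_pt: Vec3, p1: Vec2, p2: Vec2) -> Vec3:
    """Intersect the camera-to-ground-point line with the VVP through p1 and p2."""
    n = vvp_normal(p1, p2)
    direction = _sub(ground_pt, camera)
    denom = _dot(n, direction)
    if abs(denom) < 1e-9:
        raise ValueError("viewing ray is parallel to the vertical plane")
    # Line: X(s) = camera + s * direction; plane: n . (X - P1) = 0.
    s = _dot(n, _sub((p1[0], p1[1], 0.0), camera)) / denom
    return (camera[0] + s * direction[0],
            camera[1] + s * direction[1],
            camera[2] + s * direction[2])
```

Consider a camera at (0, -20, 10) m and a plane y = 0 through (0, 0) and (10, 0). The ground point (6.25, 5, 0) then maps back to the airborne position (5, 0, 2).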

Thirdly, the ball trajectories are modeled as one of four phases of ball motion: rolling, flying, in-possession and out-of-play. These phase types were chosen because each requires a different model in trajectory recovery. For the first two types, phase-specific models are employed to estimate ball positions along linear and parabolic trajectories, respectively. It is shown that two 3D points are sufficient to estimate the parabolic trajectory of a flying ball. In addition, the transitions from one phase to another provide useful semantic insight into the progression of the game, because they coincide with the passes, kicks, etc. that constitute the play.
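The claim that two 3D points suffice for a flying ball can be checked with a small sketch, under simplifying assumptions. Air resistance is ignored, gravity is fixed at $g = 9.81\,\text{m/s}^2$, and the times `t1`, `t2` of the two observations are known, for example from frame indices. The horizontal velocity then follows directly from the displacement, and the vertical velocity follows from $z_2 = z_1 + v_z\,\Delta t - \tfrac{1}{2} g\,\Delta t^2$. The name `fit_flight` is hypothetical.

```python
from typing import Callable, Tuple

Vec3 = Tuple[float, float, float]
GRAVITY = 9.81  # m/s^2; air resistance is ignored in this sketch


def fit_flight(p1: Vec3, t1: float, p2: Vec3, t2: float,
               g: float = GRAVITY) -> Callable[[float], Vec3]:
    """Return a ballistic trajectory passing through p1 at t1 and p2 at t2."""
    dt = t2 - t1
    if dt <= 0:
        raise ValueError("t2 must be later than t1")
    # Constant horizontal velocity within the vertical plane.
    vx = (p2[0] - p1[0]) / dt
    vy = (p2[1] - p1[1]) / dt
    # Solve z2 = z1 + vz*dt - g*dt^2/2 for the initial vertical velocity.
    vz = (p2[2] - p1[2]) / dt + 0.5 * g * dt

    def position(t: float) -> Vec3:
        s = t - t1
        return (p1[0] + vx * s, p1[1] + vy * s, p1[2] + vz * s - 0.5 * g * s * s)

    return position
```

The returned function can fill in frames with missing observations. A ball leaving the ground at (0, 0, 0) and landing at (10, 0, 0) two seconds later reaches its apex of about 4.9 m at t = 1 s.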

C. Structure of the Paper

The remainder of the paper is organized as follows. Section II describes the method used for detecting and tracking moving objects, which is based on Gaussian mixtures [17] and calibrated cameras [19]. Section III presents a method for identifying the ball among these objects. These methods operate in the image plane of each camera separately. In Section IV, the data from multiple cameras are integrated to provide a segment-based model of the ball trajectory over the entire pitch, estimating 3D ball positions from either single or multiple views. Section V introduces a technique for recognizing the different phases of ball motion and for applying phase-specific models for robust ball tracking. Experimental results are presented in Section VI, and conclusions are drawn in Section VII.

II. MOVING OBJECTS DETECTION AND TRACKING

To locate and track the players and the soccer ball, a multi-modal adaptive background model is used, which provides robust foreground detection by image differencing [17]. This detection process is applied only to visible pitch pixels of the appropriate color. Grouped foreground connected components (i.e. blobs) are tracked by a Kalman filter, which estimates their 2D position, velocity and dimensions. These 2D positions and dimensions are then converted to 3D coordinates on the pitch. Greater detail is given in the subsections below.

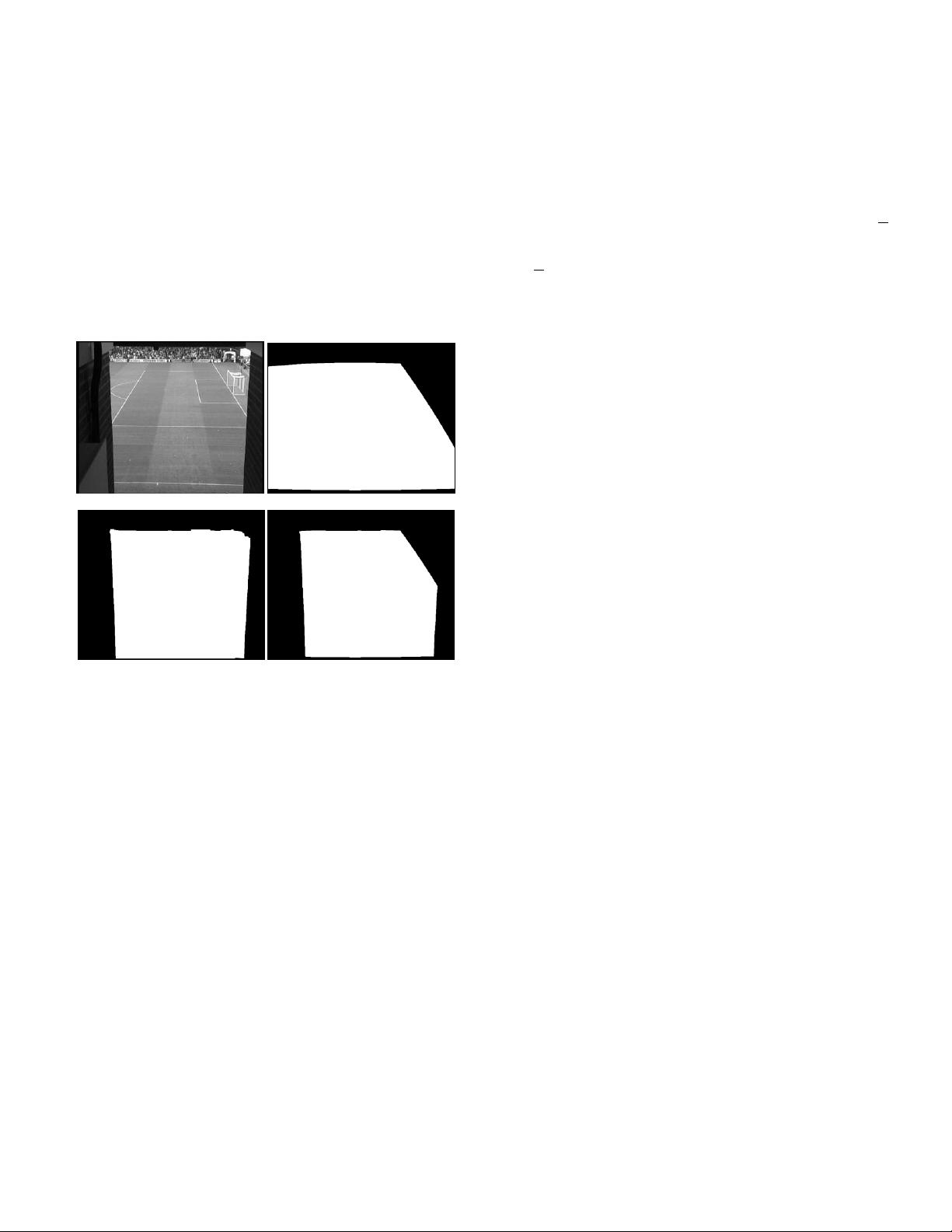

A. Determining Pitch Masks

Rather than processing the whole image, a pitch mask is constructed to avoid processing pixels containing spectators. This mask is defined as the intersection of the geometry-based mask $M_g$ and the color-based mask $M_c$, as shown in Figure 2. The geometry-based mask restricts processing to pixels that lie on the pitch. It can be easily derived from the coordinate transform between the image plane and the ground plane, as follows. For each image pixel $p$, compute its corresponding ground-plane point $P$. If $P$ lies within the pitch, then $p$ is set to 255 in $M_g$; otherwise it is set to 0. Note, however, that parts of the pitch can be occluded by foreground spectators or parts of the stadium. Thus, a color-based mask is used to exclude these elements from the overall pitch mask (i.e. the region to be processed).
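The geometry-based mask $M_g$ can be sketched as follows, assuming the image-to-ground mapping is available as a 3×3 homography `H`. This is a simplification for illustration: the paper uses Tsai's calibration (Section II-D), whose radial lens distortion a pure homography cannot represent. The pitch dimensions of 105 m × 68 m are a common size used here only as default parameters, and all names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Homography = Sequence[Sequence[float]]


def image_to_ground(H: Homography, u: float, v: float) -> Optional[Tuple[float, float]]:
    """Map pixel (u, v) to ground-plane coordinates (metres) with a 3x3 homography."""
    X = H[0][0] * u + H[0][1] * v + H[0][2]
    Y = H[1][0] * u + H[1][1] * v + H[1][2]
    W = H[2][0] * u + H[2][1] * v + H[2][2]
    if abs(W) < 1e-12:
        return None  # pixel maps to the horizon (point at infinity)
    return X / W, Y / W


def geometry_mask(H: Homography, width: int, height: int,
                  pitch_length: float = 105.0, pitch_width: float = 68.0) -> List[List[int]]:
    """M_g: 255 where the back-projected ground point P lies on the pitch, else 0."""
    mask = [[0] * width for _ in range(height)]
    for v in range(height):
        for u in range(width):
            P = image_to_ground(H, u, v)
            if P is not None and 0.0 <= P[0] <= pitch_length and 0.0 <= P[1] <= pitch_width:
                mask[v][u] = 255
    return mask
```

Because the mask depends only on the fixed camera geometry, it needs to be computed once per camera rather than per frame.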

The hue component of the HSV color space is used to identify the region of the background image representing the pitch, since hue is robust to shadows and other variations in the appearance of the grass. The pitch region is assumed to have an approximately uniform color and to occupy the dominant area of the background image. Pixels belonging to the pitch will therefore contribute to the largest peak in the hue histogram. Lower and upper hue thresholds $H_1$ and $H_2$ delimit an interval around the position $H_0$ of this maximum. They are defined as the positions on either side of $H_0$ at which the histogram has decreased by 90% of the peak frequency (i.e. to 10% of the peak). Image pixels whose hue lies within $[H_1, H_2]$ are included in the color-based mask $M_c$.
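The threshold selection can be sketched directly from the histogram. The sketch assumes that `hist` is a hue histogram of the background image and that `hue_image` holds per-pixel hue bin indices. It also ignores hue wrap-around, which is harmless for green grass, far from the red end of the hue circle. The interval is taken as the contiguous run of bins around the peak that stay above 10% of the peak frequency. Function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def hue_interval(hist: Sequence[float], drop: float = 0.9) -> Tuple[int, int, int]:
    """Return (H1, H0, H2): the peak bin H0 and the run of bins above (1 - drop) * peak."""
    h0 = max(range(len(hist)), key=lambda i: hist[i])
    level = (1.0 - drop) * hist[h0]  # a 90% decrease leaves 10% of the peak frequency
    h1 = h0
    while h1 > 0 and hist[h1 - 1] > level:
        h1 -= 1
    h2 = h0
    while h2 < len(hist) - 1 and hist[h2 + 1] > level:
        h2 += 1
    return h1, h0, h2


def color_mask(hue_image: Sequence[Sequence[int]], h1: int, h2: int) -> List[List[int]]:
    """Initial M_c (before closing): 255 where the pixel hue lies in [H1, H2], else 0."""
    return [[255 if h1 <= h <= h2 else 0 for h in row] for row in hue_image]
```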

A morphological closing operation is performed on $M_c$ to bridge the gaps caused by the white field lines in the initial color-based mask. The final mask $M$ is then generated as follows:

$$M_c = \{(u,v) \mid H(u,v) \in [H_1, H_2]\} \bullet B \tag{1}$$

$$M = M_g \cap M_c \tag{2}$$

where $\bullet$ denotes the morphological closing operation, $H(u,v)$ is the hue of pixel $(u,v)$, and $B$ is a square structuring element of size $6 \times 6$.
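Equations (1) and (2) can be sketched in plain Python on 2D lists, with closing implemented as dilation followed by erosion using the same square element. Three choices here are assumptions not stated in the paper. The even-sized element is anchored at offset `k // 2`. Pixels outside the image count as set during erosion, so that the borders are not eaten away. The output uses 1/0 rather than 255/0.

```python
from typing import List, Sequence

Mask = List[List[int]]


def _square(k: int):
    """Offsets of a k x k square structuring element B, anchored at k // 2."""
    r = k // 2
    return [(dy, dx) for dy in range(-r, k - r) for dx in range(-r, k - r)]


def dilate(mask: Sequence[Sequence[int]], k: int) -> Mask:
    h, w = len(mask), len(mask[0])
    offsets = _square(k)
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # p is set if p - b falls on a set input pixel for some offset b in B.
            out[y][x] = int(any(0 <= y - dy < h and 0 <= x - dx < w and mask[y - dy][x - dx]
                                for dy, dx in offsets))
    return out


def erode(mask: Sequence[Sequence[int]], k: int) -> Mask:
    h, w = len(mask), len(mask[0])
    offsets = _square(k)
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # p survives if p + b is set for every b in B (outside the image counts as set).
            out[y][x] = int(all(not (0 <= y + dy < h and 0 <= x + dx < w) or mask[y + dy][x + dx]
                                for dy, dx in offsets))
    return out


def closing(mask: Sequence[Sequence[int]], k: int = 6) -> Mask:
    """Eq. (1): dilation followed by erosion with the same k x k element B."""
    return erode(dilate(mask, k), k)


def final_mask(m_g: Sequence[Sequence[int]], m_c: Sequence[Sequence[int]], k: int = 6) -> Mask:
    """Eq. (2): M = M_g intersected with the closed color mask (1 = process, 0 = skip)."""
    closed = closing(m_c, k)
    return [[int(bool(g) and bool(c)) for g, c in zip(rg, rc)] for rg, rc in zip(m_g, closed)]
```

For example, a one-pixel-wide vertical "field line" missing from an otherwise solid color mask is filled in by `closing(m_c, 6)`.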

B. Detecting Moving Objects

Within the mask $M$ obtained above, foreground pixels are located using the robust multi-modal adaptive background model [17]. First, an initial background image is determined by a per-pixel Gaussian Mixture Model. The background image is then progressively updated using a running average algorithm for efficiency.

Each per-pixel Gaussian Mixture Model is represented as $\{(\mu_k^{(j)}, \sigma_k^{(j)}, \omega_k^{(j)})\}$. Here $\mu_k^{(j)}$, $\sigma_k^{(j)}$ and $\omega_k^{(j)}$ are the mean, the root of the trace of the covariance matrix, and the weight of the $j$th distribution at frame $k$. The distribution that matches each new pixel observation $I_k$ is updated as follows:

$$\begin{aligned}
\mu_k &= (1-\alpha)\,\mu_{k-1} + \alpha\, I_k \\
\sigma_k^2 &= (1-\alpha)\,\sigma_{k-1}^2 + \alpha\,(I_k - \mu_k)^{\mathrm{T}}(I_k - \mu_k)
\end{aligned} \tag{3}$$

where $\alpha$ is the updating rate, satisfying $0 < \alpha < 1$. For each unmatched distribution, the parameters remain the same but the weight decreases. At each pixel, the initial background image is taken from the distribution with the greatest weight.
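A per-pixel sketch of the update in Eq. (3) follows. The paper does not give several details, so the sketch adopts common Stauffer–Grimson-style conventions and labels them as assumptions. A component matches when the observation lies within $2.5\sigma$ of its mean. Weights follow $\omega \leftarrow (1-\alpha)\,\omega + \alpha M$, with $M = 1$ for the match and $0$ otherwise, and are then renormalized. When nothing matches, the weakest component is recycled around the observation with hypothetical initial values `init_sigma` and `init_weight`.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Component:
    mean: List[float]  # mu^(j)
    sigma: float       # root of the covariance trace, sigma^(j)
    weight: float      # omega^(j)


def update_pixel(components: List[Component], pixel: Sequence[float], alpha: float = 0.01,
                 match_k: float = 2.5, init_sigma: float = 30.0, init_weight: float = 0.05) -> bool:
    """Apply eq. (3) to the first matching component; return True if one matched."""
    matched = None
    for c in components:
        if math.dist(pixel, c.mean) < match_k * c.sigma:
            matched = c
            break
    # Matched weight is reinforced; unmatched weights decay with parameters unchanged.
    for c in components:
        c.weight = (1 - alpha) * c.weight + (alpha if c is matched else 0.0)
    if matched is not None:
        matched.mean = [(1 - alpha) * m + alpha * i for m, i in zip(matched.mean, pixel)]
        sq = sum((i - m) ** 2 for i, m in zip(pixel, matched.mean))  # uses the updated mean mu_k
        matched.sigma = math.sqrt((1 - alpha) * matched.sigma ** 2 + alpha * sq)
    else:
        # Assumed fallback: recycle the weakest component around the new observation.
        weakest = min(components, key=lambda c: c.weight)
        weakest.mean, weakest.sigma, weakest.weight = list(pixel), init_sigma, init_weight
    total = sum(c.weight for c in components)
    for c in components:
        c.weight /= total
    return matched is not None


def background_value(components: List[Component]) -> List[float]:
    """Background estimate: mean of the component with the greatest weight."""
    return max(components, key=lambda c: c.weight).mean
```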

Given the input image $I_k$, the foreground binary mask $F_k$ is generated by comparing $\|I_k - \mu_{k-1}\|$ against a threshold, i.e. $2.5\sigma_k$. To accelerate the updating of the background image, a running average algorithm is then employed once the initial background and foreground have been estimated:

$$\mu_k = F_k\left[(1-\alpha_L)\,\mu_{k-1} + \alpha_L I_k\right] + \bar{F}_k\left[(1-\alpha_H)\,\mu_{k-1} + \alpha_H I_k\right] \tag{4}$$

where $\bar{F}_k$ is the complement of $F_k$. The use of two update weights (with $0 < \alpha_L < \alpha_H < 1$) ensures that the background image is updated slowly in the presence of foreground regions. Updating is required even when a pixel is flagged as moving, so that the system can overcome mistakes in the initial background estimate.
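A per-pixel sketch of the foreground test and the dual-rate running average of Eq. (4) is shown below. Pixel values are treated as color vectors. The factor 2.5 follows the text, while the default rates `alpha_low` and `alpha_high` are hypothetical values chosen only to satisfy $0 < \alpha_L < \alpha_H < 1$.

```python
import math
from typing import List, Sequence


def is_foreground(pixel: Sequence[float], mean_prev: Sequence[float], sigma: float,
                  k: float = 2.5) -> bool:
    """F_k: flag the pixel if ||I_k - mu_{k-1}|| exceeds k * sigma."""
    return math.dist(pixel, mean_prev) > k * sigma


def running_average(mean_prev: Sequence[float], pixel: Sequence[float], foreground: bool,
                    alpha_low: float = 0.01, alpha_high: float = 0.05) -> List[float]:
    """Eq. (4): slow update (alpha_L) on foreground pixels, faster (alpha_H) elsewhere."""
    if not 0.0 < alpha_low < alpha_high < 1.0:
        raise ValueError("require 0 < alpha_L < alpha_H < 1")
    a = alpha_low if foreground else alpha_high
    return [(1.0 - a) * m + a * i for m, i in zip(mean_prev, pixel)]
```

With the defaults, a background value of 100 observed as 200 moves to about 101 if the pixel is flagged as foreground, and to about 105 otherwise. The background therefore still adapts under moving objects, only more slowly.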

Inside these foreground masks, a set of foreground regions is generated using connected component analysis. Each region is represented by its centroid $(r_0, c_0)$, its area $a$, and its bounding box, where $(r_1, c_1)$ and $(r_2, c_2)$ are the top-left and bottom-right corners of the bounding box.
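The region description can be sketched with a breadth-first flood fill over the binary foreground mask. The paper does not state which connectivity is used, so 4-connectivity is an assumption here. The area is counted in pixels, before the conversion to metres described in Section II-D.

```python
from collections import deque
from typing import Dict, List, Sequence


def regions(mask: Sequence[Sequence[int]]) -> List[Dict[str, object]]:
    """Label 4-connected foreground regions and return centroid, area and bounding box."""
    height = len(mask)
    width = len(mask[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    found = []
    for r in range(height):
        for c in range(width):
            if not mask[r][c] or seen[r][c]:
                continue
            seen[r][c] = True
            queue, pixels = deque([(r, c)]), []
            while queue:  # breadth-first flood fill of one component
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if 0 <= ny < height and 0 <= nx < width and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            rows = [p[0] for p in pixels]
            cols = [p[1] for p in pixels]
            area = len(pixels)
            found.append({
                "centroid": (sum(rows) / area, sum(cols) / area),  # (r0, c0)
                "area": area,                                       # a, in pixels
                "top_left": (min(rows), min(cols)),                 # (r1, c1)
                "bottom_right": (max(rows), max(cols)),             # (r2, c2)
            })
    return found
```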

C. Tracking Moving Objects

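A Kalman tracker is used in the image plane to filter noisy measurements and to split merged objects, since players and the ball frequently occlude one another. The state $\mathbf{x}_I$ and measurement $\mathbf{z}_I$ are given by:

$$\mathbf{x}_I = [\,r_0\;\; c_0\;\; \dot{r}_0\;\; \dot{c}_0\;\; \Delta r_1\;\; \Delta c_1\;\; \Delta r_2\;\; \Delta c_2\,]^{\mathrm{T}} \tag{5}$$

$$\mathbf{z}_I = [\,r_0\;\; c_0\;\; r_1\;\; c_1\;\; r_2\;\; c_2\,]^{\mathrm{T}} \tag{6}$$

where $(r_0, c_0)$ is the centroid and $(\dot{r}_0, \dot{c}_0)$ is the velocity. $(r_1, c_1)$ and $(r_2, c_2)$ are the top-left and bottom-right corners of the bounding box respectively (such that $r_1 < r_2$ and $c_1 < c_2$). $(\Delta r_1, \Delta c_1)$ and $(\Delta r_2, \Delta c_2)$ are the positions of these two opposite corners relative to the centroid. The state transition and measurement equations of the Kalman filter are:

$$\begin{aligned}
\mathbf{x}_I(k+1) &= \mathbf{A}_I\,\mathbf{x}_I(k) + \mathbf{w}_I(k) \\
\mathbf{z}_I(k) &= \mathbf{H}_I\,\mathbf{x}_I(k) + \mathbf{v}_I(k)
\end{aligned} \tag{7}$$

where $\mathbf{w}_I$ and $\mathbf{v}_I$ are the image-plane process noise and measurement noise, and $\mathbf{A}_I$ and $\mathbf{H}_I$ are the state transition matrix and measurement matrix, respectively:

$$\mathbf{A}_I = \begin{bmatrix} I_2 & T I_2 & O_2 & O_2 \\ O_2 & I_2 & O_2 & O_2 \\ O_2 & O_2 & I_2 & O_2 \\ O_2 & O_2 & O_2 & I_2 \end{bmatrix}, \quad
\mathbf{H}_I = \begin{bmatrix} I_2 & O_2 & O_2 & O_2 \\ I_2 & O_2 & I_2 & O_2 \\ I_2 & O_2 & O_2 & I_2 \end{bmatrix} \tag{8}$$

In equation (8), $I_2$ and $O_2$ represent the $2 \times 2$ identity and zero matrices, and $T$ is the time interval between frames. Further detail on the method for data association and handling of occlusions can be found in [18].

Fig. 2. Extraction of pitch masks based on both color and geometry: (a) original background image, (b) geometry-based mask of the pitch, (c) color-based mask of the pitch, and (d) final mask obtained.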
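The block structure of Eq. (8) can be made concrete by building $\mathbf{A}_I$ and $\mathbf{H}_I$ explicitly. The sketch orders the state as in Eq. (5) and shows only the deterministic parts of Eq. (7): one noise-free prediction and the corresponding predicted measurement. The noise covariances, Kalman gain and covariance update of a full filter are omitted, and all helper names are hypothetical.

```python
from typing import List, Sequence

Matrix = List[List[float]]

I2: Matrix = [[1.0, 0.0], [0.0, 1.0]]
O2: Matrix = [[0.0, 0.0], [0.0, 0.0]]


def _blocks(rows_of_blocks: Sequence[Sequence[Matrix]]) -> Matrix:
    """Assemble a full matrix from rows of 2x2 blocks."""
    out: Matrix = []
    for block_row in rows_of_blocks:
        for i in range(2):
            out.append([v for block in block_row for v in block[i]])
    return out


def transition_matrix(T: float) -> Matrix:
    """A_I of eq. (8): constant-velocity centroid, constant corner offsets."""
    TI = [[T * v for v in row] for row in I2]
    return _blocks([[I2, TI, O2, O2],
                    [O2, I2, O2, O2],
                    [O2, O2, I2, O2],
                    [O2, O2, O2, I2]])


# H_I of eq. (8): measure the centroid and both corners (corner = centroid + offset).
H_I = _blocks([[I2, O2, O2, O2],
               [I2, O2, I2, O2],
               [I2, O2, O2, I2]])


def matvec(m: Matrix, v: Sequence[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]
```

Take a centroid at (10, 20) moving by (1, 2) per frame, with corner offsets (-3, -4) and (3, 4). The measurement matrix then recovers the bounding-box corners (7, 16) and (13, 24), and one step of $\mathbf{A}_I$ with $T = 1$ shifts only the centroid.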

D. Computing Ground Plane Positions

Using Tsai's algorithm for camera calibration [19], the measurements are transformed from image co-ordinates into world co-ordinates. The method in [19] employs the pin-hole model of 3D-to-2D perspective projection to estimate a total of 11 intrinsic and extrinsic camera parameters. In addition, the effective pixel dimensions in the horizontal and vertical directions are obtained as two fixed intrinsic constants. These two constants are then used to calculate world co-ordinate measurements of the objects from their detected image-plane bounding boxes. Let $(x, y, z)$ denote the 3D object position in world co-ordinates. Then $x$ and $y$ are estimated using the center point of the bottom line of each bounding box, and $z$ is initialized to zero. Until Section IV, all objects are assumed to lie on the ground plane. (This assumption is usually true for players, but the ball could be anywhere on the line between that ground-plane point and the camera position.) For each tracked object, a position measurement vector and an attribute measurement vector are defined as $\mathbf{p}_i = [x\;\, y\;\, z\;\, v_x\;\, v_y]^{\mathrm{T}}$ and $\mathbf{a}_i = [w\;\, h\;\, a\;\, n]^{\mathrm{T}}$. The ground-plane velocity $(v_x, v_y)$ is estimated by projecting the image-plane velocity (obtained from the image-plane tracking process) onto the ground plane. Note that this ground-plane velocity is not intended to estimate the real velocity when the ball is off the ground. The attributes $w$, $h$ and $a$ are an object's width, height and area. They are also measured in meters (and square meters) and calculated by assuming that the object touches the ground plane. Each object is validated before further processing, provided that its size satisfies $w \ge 0.1\,\text{m}$, $h \ge 0.1\,\text{m}$ and $a \ge 0.03\,\text{m}^2$. Finally, $n$ is the longevity of the tracked object, measured in frames.

III. DETECTING BALL-LIKE FEATURES

To identify ball-like features in a single-view process, each tracked object is assigned a likelihood $l$ that it represents the ball. The two elementary properties that distinguish the ball from players and other false alarms are its size and color. Three simple features describe the size of the object: its width, height and area. These are measured in real-world units for robustness against the variable size of the ball in the image plane. A fourth feature, derived from the object's color appearance, measures the proportion of its area that is white.

To discriminate the ball from other objects, a straightforward process is to apply fixed thresholds to these features. However, this approach suffers from several difficulties. Firstly, false alarms such as fragmented field lines or fragments of players (especially socks) cannot always be discriminated. Secondly, if no information is available about the height of the ball, the estimate of its dimensions may be inaccurate. For example, if the ball is assumed to touch the ground plane, an airborne ball will appear to be a larger object. Thirdly, the image of a fast-moving ball is affected by motion blur, which renders it larger and less white than a stationary (or slower-moving) ball.

A key observation from soccer videos is that the ball in play is nearly always moving, which suggests that velocity may be a useful additional discriminant. Since field markings are stationary, most of them can be discriminated from the ball by thresholding both the size and the absolute velocity of the detected object.

Another category of false alarms arises when part of a player becomes temporarily disassociated from the rest of the player. A typical cause of this phenomenon is imperfect foreground segmentation. However, such transitory artifacts generally persist for no more than a couple of frames, after which the correct representation is resumed. Therefore, this category of false alarm can be discriminated by discarding all short-lived objects, i.e. those whose longevity is less than five frames.

Fig. 3. Tracked ball with ID and assigned likelihood: (a) Id=7, l=0.9; (b) l=0.0, the ball is moving out of the current camera view; (c) Id=16, l=0.9; and (d) the ball is merged with player 9; in frames #977, #990, #1044 and #1056, respectively.

Features describing the velocity and longevity of the observations are used to address the three difficulties described above. These features (derived from tracking) are employed alongside the size and color features to help discriminate the ball from other objects. The velocity feature is also useful when the size of the detected ball is overestimated. This can happen either through a motion-blur effect (proportional to the shutter duration) or through a range-error effect (incorrectly assuming that the object lies on the ground plane). Here, the key innovation is to allow the size threshold to vary as a function of the estimated ground-plane velocity. There is a simple rationale for the motion-blur effect: the expected increase in apparent size is directly proportional to the image-plane speed. The range-error effect is more complicated, as the ball may travel directly towards the camera, generating zero velocity in the image plane. In general, however, a ball moving rapidly in the image plane can be assumed more likely to be above the ground plane. The size threshold should therefore be increased to accommodate the consequent over-estimation of the ball size.

A standard soccer ball has a constant diameter $d_0$ (between $0.216\,\text{m}$ and $0.226\,\text{m}$) and a great-circle area $a_0$ of about $0.04\,\text{m}^2$. To account for the over-estimated ball size during fast movement, two thresholds for the width and height of the ball, $w_0$ and $h_0$, are defined by

$$\begin{aligned}
w_0 &= d_0 + |v_x|\,T \\
h_0 &= d_0 + |v_y|\,T
\end{aligned} \tag{9}$$

For robustness, the size of a valid ball is required to satisfy $|w - w_0| \le d_0/5$, $|h - h_0| \le d_0/5$ and $|a - a_0| \le a_0/8 + |v_x v_y|\,T^2$. In addition, white must make up no less than 30% of the object's area. All objects whose size or color falls outside these prescribed thresholds are assigned a likelihood of zero and excluded from further processing. Each remaining object is classed as a ball candidate and assigned an estimate of the likelihood that it represents the ball. The proposed form for this estimate incorporates both the object's absolute velocity $v_i$ and its longevity $n$:

$$l_i = \frac{v_i}{v_{\max}}\left(1 - e^{-n/t_0}\right) \tag{10}$$

where $v_{\max}$ is the maximum absolute velocity of all the objects detected in the given camera at a given frame (including the ball, if visible, and also non-ball objects), and $t_0$ is a constant parameter. Thus, faster-moving objects are considered more likely to be the ball, because in the professional game the ball normally moves faster than other objects.
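The candidate filtering and Eq. (10) can be sketched for all objects seen by one camera in one frame. Several choices here are assumptions for illustration, not values from the paper. $d_0 = 0.22\,\text{m}$ is picked from the stated range. The absolute velocity is taken as the magnitude of the ground-plane velocity. `T` and `t0` are supplied by the caller, for example $T = 0.04\,\text{s}$ at 25 frames/s. Only the width, height, whiteness and longevity tests are applied, and the area test is omitted for brevity. Object dictionaries and function names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple

D0 = 0.22          # ball diameter in metres, within the 0.216-0.226 m range
MIN_WHITE = 0.3     # minimum proportion of white pixels
MIN_LONGEVITY = 5   # frames; shorter-lived objects are discarded


def size_thresholds(vx: float, vy: float, T: float, d0: float = D0) -> Tuple[float, float]:
    """Eq. (9): expected width and height grow with ground-plane speed."""
    return d0 + abs(vx) * T, d0 + abs(vy) * T


def passes_appearance(obj: Dict[str, float], T: float, d0: float = D0) -> bool:
    w0, h0 = size_thresholds(obj["vx"], obj["vy"], T, d0)
    return (abs(obj["w"] - w0) <= d0 / 5 and abs(obj["h"] - h0) <= d0 / 5
            and obj["white"] >= MIN_WHITE)


def likelihood(v: float, v_max: float, n: float, t0: float) -> float:
    """Eq. (10): l = (v / v_max) * (1 - exp(-n / t0))."""
    if v_max <= 0.0:
        return 0.0
    return (v / v_max) * (1.0 - math.exp(-n / t0))


def score_objects(objects: Sequence[Dict[str, float]], T: float, t0: float) -> List[float]:
    """Likelihood for every object in one camera view at one frame (0 if rejected)."""
    speeds = [math.hypot(o["vx"], o["vy"]) for o in objects]
    v_max = max(speeds, default=0.0)  # over all objects, ball and non-ball alike
    return [likelihood(s, v_max, o["n"], t0)
            if o["n"] >= MIN_LONGEVITY and passes_appearance(o, T) else 0.0
            for o, s in zip(objects, speeds)]
```

For instance, suppose a small, white, 10-frame-old object moves at 5 m/s and is the fastest object in view. With $t_0 = 5$ it scores $1 - e^{-2} \approx 0.86$, whereas a tall, mostly non-white player blob scores zero.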

Fig. 4. Thirty seconds of single-camera tracking data from camera #1 (a), and filtered results for the ball in (b) and (c). Time $t$ runs from left to right, and the x-coordinate $c_0$ of the objects is plotted on the y-axis. In (b) and (c), the most likely ball is labeled in black; (b) is the result of filtering on appearance and velocity, and (c) is the result after temporal filtering.

Figure 3 shows partial views of camera #1 with the detected ball at frames 977, 990, 1044 and 1056, respectively. Each player or the ball is assigned a unique ID unless it is near the