...Software solutions to this problem include thread mapping [48] and scheduling [15], cache partitioning [18], and compile-time code transformations that optimize cache behavior [33][36]....

...Methods that reduce cache pollution through compiler-directed use of non-temporal move instructions [29] and non-temporal prefetch instructions [30] have also been proposed....

...However, instead of using LRU stack distances, they use OPT stack distances, which require expensive simulation....

...A natural starting point for modeling LRU caches is the stack distance [11]....
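
The stack distance of an access can be computed from an address trace by maintaining an LRU stack. The sketch below is a naive O(N·M) Python illustration, assuming a trace of cache-line identifiers and the hypothetical helper name `lru_stack_distances`; it is not the statistical approach used by StatStack, only the exact definition it approximates.

```python
import math

def lru_stack_distances(trace):
    """Return the LRU stack distance of every access in `trace`.

    The distance is the 1-based position of the line in the LRU stack
    (1 = most recently used), i.e. the number of distinct lines touched
    since its previous use, counting the line itself. First-time
    accesses (cold misses) get math.inf.
    """
    stack = []  # index 0 holds the most recently used line
    distances = []
    for line in trace:
        if line in stack:
            pos = stack.index(line)
            distances.append(pos + 1)
            stack.pop(pos)
        else:
            distances.append(math.inf)
        stack.insert(0, line)  # the accessed line becomes MRU
    return distances

print(lru_stack_distances(["A", "B", "C", "A", "B", "B"]))
# [inf, inf, inf, 3, 3, 1]
```

With this 1-based convention, an access hits in a fully associative LRU cache of C lines exactly when its distance is at most C.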

...Wong et al. [3] propose a method to identify non-temporal memory accesses based on Mattson’s optimal replacement algorithm (OPT) [11]....

...To detect non-temporal data, they introduce a set of shadow tags [15] used to count the number of hits to a cache line that would have occurred if the thread had been allocated all ways in the cache set....

...Several researchers have proposed hardware improvements to the LRU replacement algorithm [5], [6], [7], [8]....

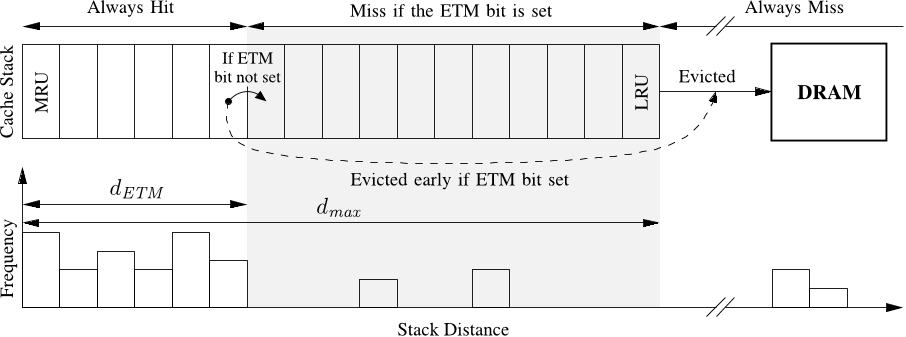

...[5] propose an insertion policy (DIP) in which, on a cache miss, non-temporal data is installed in the LRU position of the LRU stack instead of the MRU position....
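
The effect of LRU insertion can be illustrated with a single fully associative set modeled as an LRU stack. This is a minimal Python sketch, assuming a software hint (the hypothetical `non_temporal` flag) marks streaming accesses; it does not model how DIP chooses dynamically between insertion policies, only where a missing line is installed. `LRUSet` and `count_hits` are illustrative names.

```python
class LRUSet:
    """One fully associative cache set modeled as an LRU stack."""

    def __init__(self, ways):
        self.ways = ways
        self.stack = []  # index 0 = MRU position, index -1 = LRU position

    def access(self, line, non_temporal=False):
        """Return True on a hit. Misses flagged non_temporal are installed
        in the LRU position instead of the MRU position."""
        if line in self.stack:
            self.stack.remove(line)
            self.stack.insert(0, line)  # hits are promoted to MRU
            return True
        if len(self.stack) == self.ways:
            self.stack.pop()  # evict the current LRU line
        if non_temporal:
            self.stack.append(line)  # LRU insertion: first victim in this set
        else:
            self.stack.insert(0, line)  # conventional MRU insertion
        return False


def count_hits(use_hint, rounds=10, ways=4):
    """Three reused lines (A, B, C) interleaved with two streaming lines per round."""
    cache = LRUSet(ways)
    hits = 0
    for r in range(rounds):
        for hot in ("A", "B", "C"):
            hits += cache.access(hot)
        for k in range(2):
            hits += cache.access(f"S{2 * r + k}", non_temporal=use_hint)
    return hits

print(count_hits(use_hint=False), count_hits(use_hint=True))  # 0 27
```

With MRU insertion, the two streaming lines per round push the reused lines out before they are touched again, so every access misses. With LRU insertion, each streaming line only displaces the previous one, and A, B and C hit from the second round on.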

...A recent extension [8] introduces an additional policy that installs cache lines in the MRU−1 position....

...Several hardware methods that dynamically identify non-temporal data have been proposed [1], [3], [6], [14]....

...[14] propose a replacement policy, PIPP, that effectively way-partitions a shared cache and explicitly handles non-temporal (streaming) data....

...Focus has recently started to shift towards shared caches [6], [14]....

Future work will explore other hardware mechanisms for handling non-temporal data hints from software, as well as possible applications in scheduling.

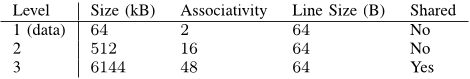

The authors used the processor's performance counters, accessed through the perf framework provided by recent Linux kernels, to measure cycle and instruction counts.

Because the authors use StatStack, they implicitly assume that caches can be modeled as fully associative, i.e., that conflict misses are insignificant.

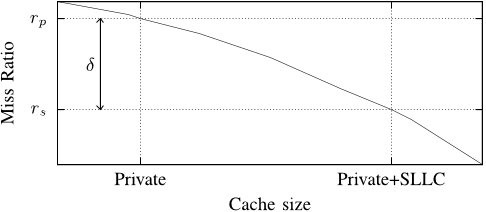

The speedup when running with applications from the two victim categories can largely be attributed to a reduction in the total bandwidth requirement of the mix.

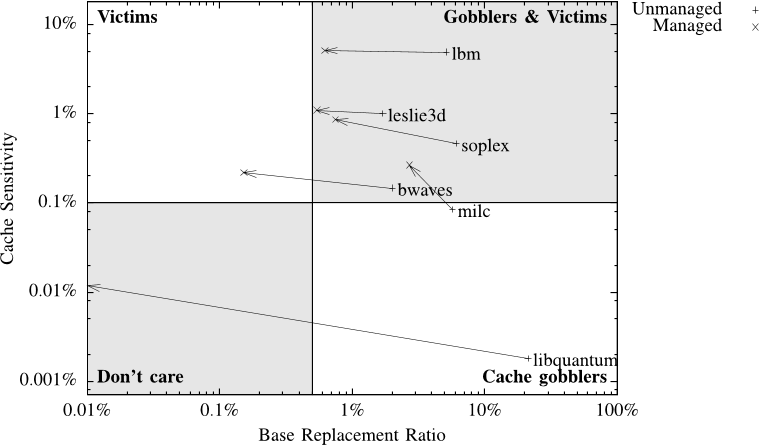

Managing the cache for these applications is likely to improve throughput, both when they are running in isolation and in a mix with other applications.

Most hardware implementations of cache management instructions allow non-temporal data to reside in parts of the cache hierarchy, such as the L1, before it is evicted to memory.

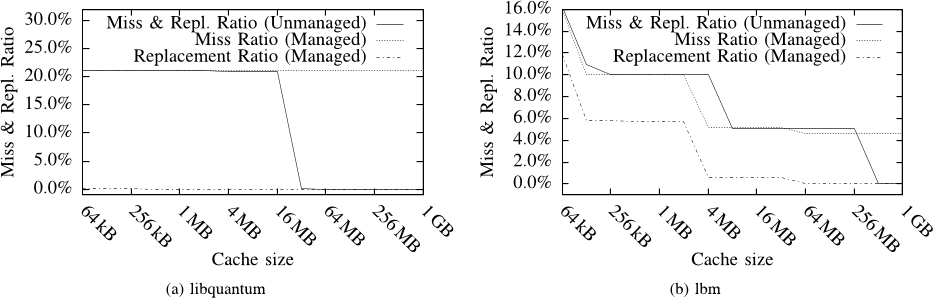

The stack distance distribution enables the application’s miss ratio to be computed for any given cache size, simply as the fraction of memory accesses with a stack distance greater than that cache size.
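
As a worked illustration, the computation reduces to a sum over a histogram of stack distances. This Python sketch assumes a fully associative LRU cache, 1-based stack distances measured in cache lines, cold misses recorded as `math.inf`, and a made-up example distribution; `miss_ratio` is a hypothetical name.

```python
import math

def miss_ratio(histogram, cache_lines):
    """Miss ratio of a fully associative LRU cache holding `cache_lines` lines.

    `histogram` maps stack distance -> number of accesses; cold misses use
    math.inf. An access misses when its stack distance exceeds the cache size.
    """
    total = sum(histogram.values())
    misses = sum(n for d, n in histogram.items() if d > cache_lines)
    return misses / total

hist = {1: 50, 4: 30, 16: 15, math.inf: 5}  # made-up example distribution
for size in (2, 8, 32):
    print(size, miss_ratio(hist, size))
```

For this distribution, the miss ratios are 0.5, 0.2 and 0.05 for caches of 2, 8 and 32 lines. A single histogram yields the whole miss-ratio curve without re-simulating each cache size.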

Using a modified StatStack implementation, the authors can reclassify applications based on their replacement ratios after cache management has been applied; this allows them to reason about how cache management impacts performance.

By looking at the forward stack distances of an instruction, the authors can determine whether the next access to the data used by that instruction will be a cache miss, i.e., whether the instruction is non-temporal.
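
The classification can be sketched on an exact trace. This Python example is an assumption-laden illustration, not the authors' implementation. It assumes a trace of hypothetical `(pc, line)` pairs and a fully associative LRU cache of `cache_lines` lines. It also assumes the simplest rule: an instruction is flagged only if every one of its accesses has a forward stack distance greater than the cache size. A practical tool would more likely use sampled data and a threshold fraction.

```python
import math
from collections import defaultdict

def forward_stack_distances(lines):
    """For each access, the stack distance its line will have at its next use:
    distinct lines touched before the reuse, plus the line itself.
    math.inf if the line is never touched again."""
    out = []
    for i, line in enumerate(lines):
        seen = set()
        dist = math.inf
        for later in lines[i + 1:]:
            if later == line:
                dist = len(seen) + 1
                break
            seen.add(later)
        out.append(dist)
    return out

def non_temporal_pcs(trace, cache_lines):
    """Flag instructions (PCs) whose every access would miss on next use.

    trace: list of (pc, line) pairs. A PC is flagged only if all of its
    accesses have a forward stack distance greater than cache_lines.
    """
    fwd = forward_stack_distances([line for _, line in trace])
    all_miss = defaultdict(lambda: True)
    for (pc, _), dist in zip(trace, fwd):
        all_miss[pc] = all_miss[pc] and dist > cache_lines
    return {pc for pc, flag in all_miss.items() if flag}

# A loop reusing two "hot" lines while streaming through unique lines.
trace = []
for r in range(4):
    trace += [("hot", "H0"), ("hot", "H1"), ("stream", f"S{r}")]

print(non_temporal_pcs(trace, cache_lines=4))  # {'stream'}
```

Here the hot lines are reused at forward stack distance 3, so they fit in a 4-line cache, while the streaming lines are never reused. With a 2-line cache, the hot accesses would also miss on reuse, and both instructions would be flagged.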