Space-Efficient Private Search

with Applications to Rateless Codes

George Danezis and Claudia Diaz

K.U. Leuven, ESAT/COSIC,

Kasteelpark Arenberg 10,

B-3001 Leuven-Heverlee, Belgium

{george.danezis, claudia.diaz}@esat.kuleuven.be

Abstract. Private keyword search is a technique that allows for search-

ing and retrieving documents matching certain keywords without reveal-

ing the search criteria. We improve the space efficiency of the Ostrovsky

et al. Private Search [9] scheme, by describing methods that require considerably

shorter buffers for returning the results of the search. Our basic decoding

scheme, recursive extraction, requires buffers of length less

than twice the number of returned results and is still simple and highly

efficient. Our extended decoding schemes rely on solving systems of si-

multaneous equations, and in special cases can uncover documents in

buffers that are close to 95% full. Finally we note the similarity between

our decoding techniques and the ones used to decode rateless codes, and

show how such codes can be extracted from encrypted documents.

1 Introduction

Private search allows for keyword searching on a stream of documents (typical

of online environments) without revealing the search criteria. Its applications

include intelligence gathering, medical privacy, private information retrieval and

financial applications. Financial applications that can benefit from this technique

are, for example, corporate searches on a patents database, searches for financial

transactions meeting specific but private criteria and periodic updates of filtered

financial news or stock values.

Rafail Ostrovsky et al. presented in [9] a scheme that allows a server to filter a

stream of documents, based on matching keywords, and only return the relevant

documents without gaining any information about the query string. This allows

searching to be outsourced, and only relevant results to be returned, economising

on communications costs. The authors of [9] show that the communication cost

is linear in the number of results expected. We extend their scheme to improve

the space-efficiency of the returned results considerably by using more efficient

coding and decoding techniques.

Our first key contribution is a method called recursive extraction for effi-

ciently decoding encrypted buffers resulting from the Ostrovsky et al. scheme.

The second method, based on solving systems of linear equations, is applied af-

ter recursive extraction and allows for the recovery of extra matching documents

S. Dietrich and R. Dhamija (Eds.): FC 2007 and USEC 2007, LNCS 4886, pp. 148–162, 2007.

© IFCA/Springer-Verlag Berlin Heidelberg 2007


from the encrypted buffers. Recursive extraction results in the full decoding of

buffers of length twice the size of the expected number of matches, and has a

linear time-complexity. Shorter buffers can also be decrypted with high

probability. Solving the remaining equations at colliding buffer positions allows for

even more documents to be retrieved from short buffers, and in the special case

of documents only matching one keyword, we can decode buffers that are only

10% longer than the expected matches, with high probability. We present simula-

tions to assess the decoding performance of our techniques, and estimate optimal

parameters for our schemes.

In this work we also present some observations that may be of general interest

beyond the context of private search. We show how arrays of small integers

can be represented in a space-efficient manner using Paillier ciphertexts, while

maintaining the homomorphic properties of the scheme. These techniques can be

used to make private search more space-efficient, but also implement other data

structures like Bloom filters, or vectors in a compact way. Finally we show how

rateless codes, block based erasure resistant multi-source codes, can be extracted

from encrypted documents, while maintaining all their desirable properties.

This paper is structured as follows: We introduce the related work in Section 2;

present in more detail, in Section 3, the original Ostrovsky scheme whose efficiency

we are trying to improve; and explain in Section 4 the required modifications.

Sections 5 and 6 present the proposed efficient decoding techniques, which are

evaluated in Section 7. In Section 8 we explain how our techniques can be applied

to rateless codes; and we present our conclusions in Section 9.

2 Related Work

Our results can be applied to improve the decoding efficiency of the Private

Search scheme proposed by Rafail Ostrovsky et al. in [9]. This scheme is described

in detail in Section 3. Danezis and Diaz proposed in [5] some preliminary ideas on

how to improve the decoding efficiency of the Ostrovsky Private Search scheme,

which are elaborated in this paper.

Bethencourt et al. [1,2] have independently proposed several modifications to

the Ostrovsky private search scheme which include solving a system of linear equations

to recover the documents. As such, the time complexity of their approach is

$O(n^3)$, while our base technique, recursive extraction, is $O(n)$. Their technique

also requires some changes to the original scheme [9], such as the addition of an

encrypted buffer that acts as a Bloom filter [3]. This buffer by itself increases the data

returned by 50%. Some of our techniques presented in Section 6.2, which allow

for efficient space representation of concatenated data, are complementary to

their work, and would greatly benefit the efficiency of their techniques.

The rateless codes for big downloads proposed by Maymounkov and Mazières

in [8] use a technique similar to ours for efficient decoding, indicating that our

ideas can be applied beyond private search applications. We explore this

relation further in Section 8, where we show how homomorphic encryption can be used

to create rateless codes for encrypted data.


Pfitzmann and Wainer [13] also notice that collisions in DC networks [4] do

not destroy all information transmitted. They use this observation to allow n

messages to be transmitted in n steps despite collisions.

3 Private Search

The Private Search scheme proposed by Ostrovsky et al. [9] is based on the prop-

erties of the homomorphic Paillier public key cryptosystem [10], in which the

multiplication of two ciphertexts leads to the encryption of the sum of the corre-

sponding plaintexts (E(x) · E(y)=E(x + y)). Constructions with El-Gamal [6]

are also possible but do not allow for full recovery of documents.
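
The additive property can be checked on a toy instance. The following is a minimal sketch, assuming a textbook Paillier construction with generator n + 1 and two small Mersenne primes chosen purely for illustration; the names `encrypt` and `decrypt` are ours, and the key size is far too small for any real use.

```python
import math
import secrets

# Toy key (assumption): two Mersenne primes, nowhere near a secure size.
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P - 1, Q - 1)
MU = pow(LAM, -1, N)  # modular inverse; needs Python 3.8 or later


def encrypt(m: int) -> int:
    """Return a randomised Paillier encryption of m (0 <= m < N)."""
    r = secrets.randbelow(N - 1) + 1
    while math.gcd(r, N) != 1:
        r = secrets.randbelow(N - 1) + 1
    return pow(N + 1, m, N2) * pow(r, N, N2) % N2


def decrypt(c: int) -> int:
    """Recover the plaintext from a Paillier ciphertext."""
    return (pow(c, LAM, N2) - 1) // N * MU % N


# Multiplying ciphertexts adds the plaintexts: E(3) * E(4) = E(7).
total = decrypt(encrypt(3) * encrypt(4) % N2)

# Raising a ciphertext to a known integer scales the plaintext: E(3)^5 = E(15).
scaled = decrypt(pow(encrypt(3), 5, N2))
```

The second identity is the one that lets a party holding a plaintext document multiply it into an encrypted counter without learning the counter.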

The searching party provides a dictionary of terms and a corresponding Paillier ciphertext, that is the encryption of one ($t_i = E(1)$), if the term is to be matched, or the encryption of zero ($t_i = E(0)$) if the term is of no interest. Because of the semantic security properties of the Paillier cryptosystem this leaks no information about the matching criteria.

The dictionary ciphertexts corresponding to the terms in the document $d_j$ are multiplied together to form $g_j = \prod_k t_k = E(m_j)$, where $m_j$ is the number of matching words in document $d_j$. A tuple $(g_j, g_j^{d_j})$ is then computed. The second term will be an encryption of zero ($E(0)$) if there has been no match, and the encryption $E(m_j d_j)$ otherwise. Note that repeated words in the document are not taken into account, meaning that each matching word is counted only once, and $m_j$ represents the number of different matching words found in a document.
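
The construction of a document tuple can be sketched as follows. This is an illustration under stated assumptions rather than the authors' implementation: it uses a toy Paillier instance (generator n + 1, two small Mersenne primes), represents a document as an integer, and skips words that are absent from the dictionary. The helper names `encrypt`, `decrypt` and `document_tuple` are hypothetical.

```python
import math
import secrets

# Toy Paillier key (assumption); far too small for real use.
P, Q = 2**31 - 1, 2**61 - 1
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)
MU = pow(LAM, -1, N)  # needs Python 3.8 or later


def encrypt(m: int) -> int:
    r = secrets.randbelow(N - 1) + 1
    while math.gcd(r, N) != 1:
        r = secrets.randbelow(N - 1) + 1
    return pow(N + 1, m, N2) * pow(r, N, N2) % N2


def decrypt(c: int) -> int:
    return (pow(c, LAM, N2) - 1) // N * MU % N


def document_tuple(dictionary, words, doc):
    """Server side: return (g, g^doc) without learning which terms match."""
    g = encrypt(0)
    for word in set(words):  # each distinct word is counted only once
        if word in dictionary:
            g = g * dictionary[word] % N2
    return g, pow(g, doc, N2)  # decrypts to (m, m * doc)


# Searcher side: E(1) marks a keyword of interest, E(0) everything else.
dictionary = {"alpha": encrypt(1), "beta": encrypt(0), "gamma": encrypt(1)}

doc = int.from_bytes(b"hello", "big")
g, h = document_tuple(dictionary, ["alpha", "gamma", "alpha", "beta"], doc)
m = decrypt(g)               # number of distinct matching words
recovered = decrypt(h) // m  # divide the second term by the first

# A document with no matching word yields encryptions of zero.
g0, h0 = document_tuple(dictionary, ["beta"], doc)
```

The product $m_j d_j$ has to stay below the Paillier modulus for the division to recover the document, which is why the toy document here is only a few bytes long.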

Each document tuple is then multiplied into a set of l random positions in a

buffer of size b (smaller than the total number of searched documents, but bigger

than the number of matching documents). All buffer positions are initialized with

tuples (E(0), E(0)). The documents that do not match any of the keywords do

not contribute to changing the contents of these positions in the buffer (since

zero is being added to the plaintexts), but the matched documents do.

Collisions will occur when two matching documents are inserted at the same

position in the buffer. These collisions can be detected by adding some redun-

dancy to the documents. The color survival theorem [9] can be used to show

that the probability that all copies of a single document are overwritten becomes

negligibly small as the number of copies l and the size of the buffer b

increase (the suggested buffer length is $b = 2 \cdot l \cdot M$, where $M$ is the expected

number of matching documents). The searcher can decode all positions, ignoring

the collisions, and dividing the second term of the tuples by the first term to

retrieve the documents.
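
To get a feel for how many documents this collision-ignoring decoding recovers, the buffer can be simulated on plaintext indices. The sketch below is only an illustration: it assumes uniformly random positions, treats a document as recovered when at least one buffer position holds copies of that document alone, and uses the hypothetical name `surviving_documents`.

```python
import random


def surviving_documents(num_matches: int, l: int, b: int, rng: random.Random) -> set:
    """Return the documents with at least one collision-free buffer position."""
    slots = {}
    for doc in range(num_matches):
        for _ in range(l):  # l copies, each at a random position
            slots.setdefault(rng.randrange(b), set()).add(doc)
    # A position is decodable when only one distinct document landed in it.
    return {next(iter(docs)) for docs in slots.values() if len(docs) == 1}


M, l = 10, 3
b = 2 * l * M  # suggested buffer length from the text
survivors = surviving_documents(M, l, b, random.Random(1))
```

Repeating the run with different seeds and smaller values of `b` shows how quickly documents start to be lost once collisions are simply discarded.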

4 Modifications to the Original Scheme

A prerequisite for more efficient decoding schemes is to reduce the uncertainty of

the party that performs the decoding. At the same time, the party performing


the search should gain no additional information with respect to the original

scheme. In order to make sure of this, we note that the modifications to the

original scheme involve only information flows from the searching (encoding)

party back to the matching (decoding) party, and therefore cannot introduce

any additional vulnerabilities in this respect.

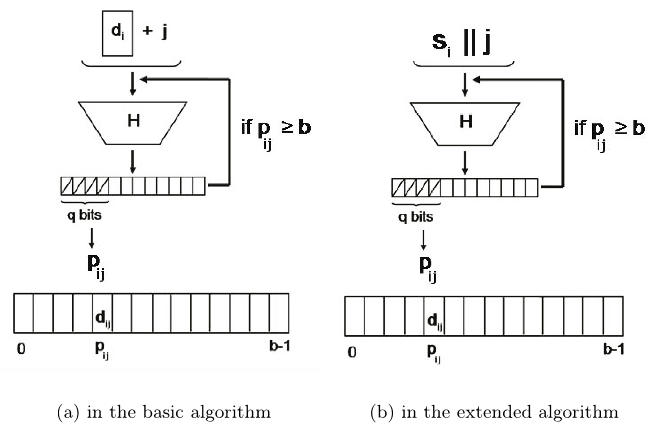

Our basic decoding algorithm (presented in Section 5) only requires that the

document copies are stored in buffer positions known to the decoder. In practice,

the mapping of documents to buffer positions can be done using a good hash

function H(·) that can be agreed by both parties or fixed by the protocol. We

give an example of how this function can be constructed.

Notation:

– $l$ is the total number of copies stored per document;

– $d_{ij}$ is the $j$-th copy of document $d_i$ ($j = 1 \ldots l$); note that all copies of $d_i$ are equal;

– $b$ is the size of the buffer;

– $q$ is the number of bits needed to represent $b$ ($2^{q-1} < b \le 2^q$);

– $p_{ij}$ is the position of document copy $d_{ij}$ in the buffer ($0 \le p_{ij} < b$).

The hash function is applied to the sum of the document $d_i$ and the copy number $j$, $H(d_i + j)$. The position $p_{ij}$ is then represented by the $q$ most significant bits of the result of the hash. If there is index overflow (i.e., $b \le p_{ij}$), then we apply the hash function again ($H(H(d_i + j))$) and repeat the process, until we obtain a result $p_{ij} < b$. This is illustrated in Figure 1(a).

With this method, once the decoding party sees a copy of a matched document, $d_i$, it can compute the positions of the buffer where all $l$ copies of $d_i$ have been stored (and thus extract them from those positions) by applying the function to $d_i + j$, with $j = 1 \ldots l$.
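
The position function described above can be sketched as follows. The text only asks for a good hash function, so the choice of SHA-256, the big-endian integer encoding of $d_i + j$, and the name `position` are all assumptions made for this illustration.

```python
import hashlib


def position(doc: int, copy: int, b: int) -> int:
    """Map copy number `copy` (1..l) of document `doc` to a slot in [0, b)."""
    q = (b - 1).bit_length()  # smallest q with b <= 2**q
    value = doc + copy
    data = value.to_bytes((value.bit_length() + 7) // 8, "big")
    digest = hashlib.sha256(data).digest()
    while True:
        # Keep the q most significant bits of the 256-bit digest.
        p = int.from_bytes(digest, "big") >> (256 - q)
        if p < b:
            return p
        # Index overflow: hash the previous digest again and retry.
        digest = hashlib.sha256(digest).digest()


# All l = 3 positions of one document in a buffer of size 24.
slots = [position(12345, j, 24) for j in (1, 2, 3)]
```

Because $2^q < 2b$, each attempt lands inside the buffer with probability greater than one half, so the rehash loop is expected to finish after fewer than two iterations on average.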

We present in Section 6 an extension to our decoding algorithm that further improves its decoding efficiency. The extension requires that the total number $N$ of searched documents is known to the decoder, and that the positions of all (not just matched) searched documents are known by the decoder. This can be achieved by adding a serial number $s_i$ to the documents, and then deriving the position $p_{ij}$ of the document copies as a function of the document serial number and the number of the copy $H(s_i || j)$, as shown in Figure 1(b). We then take the $q$ most significant bits of the result and proceed as in the previous case.
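
With serial numbers, the decoder can tabulate the positions of every searched document before looking at the buffer. The sketch below assumes SHA-256 as the hash and a fixed-width encoding of $s_i || j$ (8 bytes for the serial number, 4 bytes for the copy number); these choices and the names `copy_position` and `position_table` are illustrative only.

```python
import hashlib


def copy_position(serial: int, copy: int, b: int) -> int:
    """Slot of copy `copy` of the document with serial number `serial`."""
    q = (b - 1).bit_length()
    # Assumed encoding of s_i || j: fixed-width big-endian concatenation.
    data = serial.to_bytes(8, "big") + copy.to_bytes(4, "big")
    digest = hashlib.sha256(data).digest()
    while True:
        p = int.from_bytes(digest, "big") >> (256 - q)
        if p < b:
            return p
        digest = hashlib.sha256(digest).digest()  # rehash on index overflow


def position_table(n_docs: int, l: int, b: int) -> dict:
    """Positions of all l copies for serial numbers 1..n_docs."""
    return {
        s: [copy_position(s, j, b) for j in range(1, l + 1)]
        for s in range(1, n_docs + 1)
    }


table = position_table(50, 3, 24)
```

The table depends only on $N$, $l$ and $b$, so the decoder can build it without knowing which documents matched.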

With respect to the original Ostrovsky scheme, our basic algorithm only re-

quires the substitution of the random function U[0,b−1] used to select the buffer

positions for the document copies by a pseudorandom function dependent on the

document and the copy number, that can be computed by the decoder.

The extension requires that the encoder transmits to the decoder the total

number N of documents searched. The encoder should also append a serial

number to the documents (before encrypting them). We assume that the serial

numbers take values between 1 and N (i.e., $s_i = i$).


Fig. 1. Function to determine the position of a document copy $d_{ij}$: (a) in the basic algorithm; (b) in the extended algorithm

5 Basic Decoding Algorithm: Recursive Extraction

Given the minor modifications above, we note that much more efficient decoding

algorithms can be used, that would allow the use of significantly smaller buffers

for the same recovery probability.

While collisions are ignored in the original Ostrovsky scheme, our key in-

tuition is that collisions are in fact not destroying all information, but merely

adding together the encrypted plaintexts. This property can be used to recover

a plaintext if the values of the other plaintexts with which it collides are known.

The decoder decrypts the buffer, and thanks to the redundancy included in

the documents, it can discern three states of a particular buffer position: whether

it is empty, contains a single document, or contains a collision.

In this basic scheme, the empty buffer positions are of no interest to the decoder (they do provide useful information in the extended algorithm, as we shall see in the next section). If a position contains a single document $d_i$, then the document can be recovered. By applying the hash function as described in Section 4 to $d_i + j$ with $j = 1 \ldots l$, the decoder can locate all the other copies of $d_i$ and extract them from the buffer. This hopefully uncovers some new buffer positions containing only one document. This simple algorithm is repeated multiple times until all documents are recovered or no more progress can be made.
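
The loop described above can be sketched on an already decrypted buffer. This is a simplified illustration, not the authors' implementation: each slot holds the plaintext pair (sum of $m_j$, sum of $m_j d_j$), the redundancy used to tell single documents from collisions is assumed to be an appended CRC32, positions come from an assumed SHA-256 based function, and the buffer is rescanned on every pass rather than processed in linear time. All function names are hypothetical.

```python
import hashlib
import zlib


def position(doc: int, copy: int, b: int) -> int:
    """Assumed mapping: q top bits of SHA-256(doc + copy), rehash on overflow."""
    q = (b - 1).bit_length()
    value = doc + copy
    digest = hashlib.sha256(value.to_bytes((value.bit_length() + 7) // 8, "big")).digest()
    while True:
        p = int.from_bytes(digest, "big") >> (256 - q)
        if p < b:
            return p
        digest = hashlib.sha256(digest).digest()


def wrap(payload: int) -> int:
    """Append a CRC32 to a payload below 2**64 as redundancy (an assumption)."""
    return (payload << 32) | zlib.crc32(payload.to_bytes(8, "big"))


def is_valid(doc: int) -> bool:
    payload, check = doc >> 32, doc & 0xFFFFFFFF
    return 0 <= payload < 2**64 and zlib.crc32(payload.to_bytes(8, "big")) == check


def encode(matches, l: int, b: int):
    """Plaintext stand-in for the buffer: matches is a list of (doc, m) pairs."""
    buf = [[0, 0] for _ in range(b)]
    for doc, m in matches:
        for j in range(1, l + 1):
            p = position(doc, j, b)
            buf[p][0] += m
            buf[p][1] += m * doc
    return buf


def recursive_extraction(buf, l: int) -> set:
    """Peel single documents out of the buffer until no progress is made."""
    b = len(buf)
    recovered = set()
    progress = True
    while progress:
        progress = False
        for p in range(b):
            count, total = buf[p]
            if count == 0 or total % count != 0:
                continue  # empty slot, or certainly a collision
            doc = total // count
            if not is_valid(doc):
                continue  # redundancy check fails: treat as a collision
            slots = [position(doc, j, b) for j in range(1, l + 1)]
            if p not in slots:
                continue  # candidate does not belong in this slot
            m = count // slots.count(p)  # copies of doc may share a slot
            for slot in slots:  # remove every copy of doc from the buffer
                buf[slot][0] -= m
                buf[slot][1] -= m * doc
            recovered.add(doc)
            progress = True
    return recovered


matches = [(wrap(i), 1 + i % 3) for i in range(1, 10)]  # 9 documents
found = recursive_extraction(encode(matches, l=3, b=24), l=3)
```

Whether all nine documents are recovered depends on where the hash places the copies; decoding stops early when every remaining document has all of its copies in collision with others.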

In the example shown in Figure 2(a), we match 9 documents and store 3

copies of each in a buffer of size 24. Documents ‘3’, ‘5’, ‘7’ and ‘8’ can be trivially

recovered (note that these four documents would be the only ones recovered in

the original scheme). All copies of these documents are located and extracted