
In this paper, the authors explore the use of sparse-representation-based methods specifically for restoring degraded document images.

To avoid blocky artifacts in the reconstructed image, the authors use overlapping patches for restoration, and the final reconstructed image is obtained by averaging over the overlapping regions.
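The overlap-and-average step can be sketched as follows. This is a minimal illustration and not the authors' implementation; the function names `extract_patches` and `average_overlapping`, the square patch shape, and the `stride` parameter are assumptions made for the example.

```python
import numpy as np

def extract_patches(image, patch_size, stride):
    """Collect overlapping square patches and their top-left coordinates."""
    h, w = image.shape
    patches, coords = [], []
    for r in range(0, h - patch_size + 1, stride):
        for c in range(0, w - patch_size + 1, stride):
            patches.append(image[r:r + patch_size, c:c + patch_size].copy())
            coords.append((r, c))
    return patches, coords

def average_overlapping(patches, coords, shape, patch_size):
    """Rebuild an image by averaging every patch that covers a pixel."""
    acc = np.zeros(shape, dtype=float)     # sum of patch values per pixel
    weight = np.zeros(shape, dtype=float)  # number of patches per pixel
    for patch, (r, c) in zip(patches, coords):
        acc[r:r + patch_size, c:c + patch_size] += patch
        weight[r:r + patch_size, c:c + patch_size] += 1.0
    # Pixels not covered by any patch keep the value 0 (avoids division by zero).
    weight[weight == 0] = 1.0
    return acc / weight

# Usage: in a real pipeline each patch would be restored before averaging.
image = np.random.rand(16, 16)
patches, coords = extract_patches(image, patch_size=8, stride=4)
reconstructed = average_overlapping(patches, coords, image.shape, patch_size=8)
```

With a stride smaller than the patch size, each interior pixel is covered by several patches, so independent errors in the individual restored patches are smoothed out rather than showing up as block boundaries.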

Techniques such as (i) greedy methods (matching pursuit [12]) or (ii) convex relaxation (l1-norm) can be used to solve the above problem.
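As an illustration of the greedy option, here is a minimal sketch of plain matching pursuit. It assumes that the columns of `D` are unit-norm atoms and that `y` is a flattened patch; the function name and the parameters `max_atoms` and `tol` are hypothetical and are not taken from the paper.

```python
import numpy as np

def matching_pursuit(D, y, max_atoms, tol=1e-6):
    """Greedy sparse coding of y over a dictionary D with unit-norm columns."""
    alpha = np.zeros(D.shape[1])
    residual = np.asarray(y, dtype=float).copy()
    for _ in range(max_atoms):
        if np.linalg.norm(residual) <= tol:
            break  # residual is already within the error tolerance
        correlations = D.T @ residual
        k = int(np.argmax(np.abs(correlations)))  # best-matching atom
        alpha[k] += correlations[k]               # update its coefficient
        residual -= correlations[k] * D[:, k]     # remove its contribution
    return alpha

# Usage with a random dictionary that is four times overcomplete.
rng = np.random.default_rng(0)
D = rng.standard_normal((16, 64))
D /= np.linalg.norm(D, axis=0)        # normalize atoms to unit norm
y = 2.0 * D[:, 3] - 1.5 * D[:, 40]    # patch built from two atoms
alpha = matching_pursuit(D, y, max_atoms=10)
```

Each iteration selects one atom, so the result has at most `max_atoms` non-zero coefficients. Plain matching pursuit may pick the same atom more than once; orthogonal variants re-fit all selected coefficients at each step instead.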

To maintain overcompleteness and recover a sparse representation [5], the size of the dictionary is usually fixed to four times the size of the patch.

A document image patch (y) that the authors would like to represent using a dictionary D should be computed as:

$$y = g(D, \alpha), \tag{3}$$

where α is a set of parameters and g is a non-linear function that maps from the binary document dictionary elements to a valid binary document image or patch.

Their un-optimized implementation takes about 12 seconds to restore a document of size 157 × 663 on a system with 2 GB of RAM and a 3.30 GHz Intel(R) Core(TM) i3-2120 processor.

(3) Noise in document images usually contains a mixture of degradations coming from independent processes such as erosion, cuts, bleeds, etc.

One of the fundamental assumptions in such a representation is that the elements of the dictionary span the subspace of images of interest and that any linear combination of a sparse subset of dictionary elements is indeed a valid image.

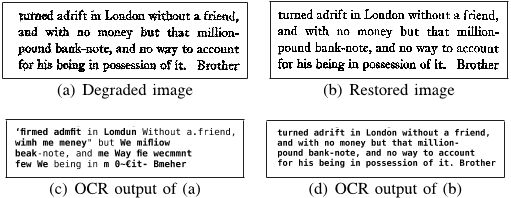

The recognition error on the degraded page was due to erosion and low printing quality, which can confuse the OCR when noise fills the gap between two characters in a word.

Texture blending simulates effects such as textured or stained paper; it was produced by linearly blending the document with a texture image at various degrees of blending.
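A linear blend of this kind can be sketched as a convex combination of the two images. The function name `blend_with_texture`, the parameter `blend`, and the [0, 1] intensity range are assumptions for illustration; the exact blending levels used in the experiments are not given here.

```python
import numpy as np

def blend_with_texture(document, texture, blend):
    """Linear blend: blend=0 returns the document, blend=1 returns the texture."""
    if document.shape != texture.shape:
        raise ValueError("document and texture must have the same shape")
    if not 0.0 <= blend <= 1.0:
        raise ValueError("blend must lie in [0, 1]")
    return (1.0 - blend) * document + blend * texture

# Usage: generate increasingly degraded versions of one page.
document = np.ones((32, 32))        # stand-in for a clean page (white = 1.0)
texture = np.random.rand(32, 32)    # stand-in for a paper-texture image
degraded = [blend_with_texture(document, texture, b) for b in (0.1, 0.3, 0.5)]
```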

With the advent of the internet, one can easily obtain clean documents in any font; e.g., a simple search for ‘gothic text’ returns many high-quality documents that can be used to restore gothic texts.

The authors used the sparse coding technique proposed in [3], treating the missing pixels (cuts) as infinite noise, and restored the image after learning a dictionary from a large number of clean text and natural image patches.
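One common way to read "infinite noise" is that missing pixels get zero weight in the data-fit term, so only observed pixels influence the sparse code. The sketch below illustrates that idea with an orthogonal-matching-pursuit-style loop restricted to the observed rows of the dictionary. It is not the algorithm of [3]; the function name `inpaint_patch`, the boolean mask `observed`, and the parameter `max_atoms` are all assumptions.

```python
import numpy as np

def inpaint_patch(D, y, observed, max_atoms):
    """Code y using only observed pixels, then reconstruct the full patch."""
    D_obs = D[observed]                       # dictionary rows at known pixels
    y_obs = np.asarray(y, dtype=float)[observed]
    norms = np.linalg.norm(D_obs, axis=0)
    norms[norms == 0] = 1.0                   # guard atoms with no observed energy
    support, coef = [], np.zeros(0)
    residual = y_obs.copy()
    for _ in range(max_atoms):
        if np.linalg.norm(residual) < 1e-10:
            break
        scores = np.abs(D_obs.T @ residual) / norms
        if support:
            scores[support] = -1.0            # never reselect an atom
        support.append(int(np.argmax(scores)))
        # Re-fit all selected coefficients on the observed pixels only.
        coef, *_ = np.linalg.lstsq(D_obs[:, support], y_obs, rcond=None)
        residual = y_obs - D_obs[:, support] @ coef
    alpha = np.zeros(D.shape[1])
    if support:
        alpha[support] = coef
    return D @ alpha, alpha                   # full patch, including missing pixels

# Usage: a two-atom dictionary; pixel 1 of the patch is lost to a cut.
s = 1.0 / np.sqrt(2.0)
D = np.array([[s, 0.0], [s, 0.0], [0.0, s], [0.0, s]])
clean = 3.0 * D[:, 0]
damaged = clean.copy()
damaged[1] = 0.0
observed = np.array([True, False, True, True])
restored, alpha = inpaint_patch(D, damaged, observed, max_atoms=1)
```

Because the reconstruction uses the full atoms, the missing pixels are filled in by whatever the selected atoms predict there.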

If the patch size is large, the dictionary elements may overfit the training data, reducing the range of degraded images that can be restored.

It is observed in [1], [13], [3] that a very large dictionary leads to overfitting, i.e., learnt atoms may correspond to individual patches instead of generalizing to a large number of patches, while a very small dictionary leads to underfitting.

The fundamental elements that constitute documents are strokes, curves, glyphs, etc., and their method automatically learns these elements.

Another kind of degradation is fading, which results in near cuts, as seen in the character ‘a’ in the word ‘sanguinary’, which is restored with high resolution.

i.e.,

$$x \approx D\alpha \quad \text{s.t.} \quad \|\alpha\|_0 \le L, \tag{1}$$

where α is the sparse representation of the image patch, ||.||0 is the l0 pseudo-norm, which counts the number of non-zero entries in a vector, and the constant L defines the required sparsity level.

The sparse representation of x is recovered from y as

$$\hat{\alpha} = \arg\min_{\alpha} \|\alpha\|_0 \quad \text{s.t.} \quad \|y - D\alpha\|_2 \le \epsilon, \tag{2}$$

where ε is a constant that can be tuned according to the application at hand.