![Fig. 4. The recall vs. (1-precision) graph for different binning methods and two different matching strategies, tested on the ‘Graffiti’ sequence (images 1 and 3) with a view change of 30 degrees and compared to the current descriptors. The interest points are computed with our ‘Fast Hessian’ detector. Note that the interest points are not affine invariant, so the results are not comparable to those in [8]. SURF-128 corresponds to the extended descriptor. Left: similarity-threshold-based matching strategy. Right: nearest-neighbour-ratio matching strategy (see Section 5).](/figures/fig-4-the-recall-vs-1-precision-graph-for-different-binning-2hh70ttd.png)

...This has led to an intensive search for replacements with lower computation cost; arguably the best of these is SURF [2]....

...There are various ways to describe the orientation of a keypoint; many of these involve histograms of gradient computations, for example in SIFT [17] and the approximation by block patterns in SURF [2]....

...In order to make the paper more self-contained, we succinctly discuss the concept of integral images, as defined by [23]....

...Focusing on speed, Lowe [12] approximated the Laplacian of Gaussian (LoG) by a Difference of Gaussians (DoG) filter....
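
A short numerical check makes this approximation concrete. Because the Gaussian satisfies the heat-diffusion equation ∂G/∂σ = σ∇²G, a finite difference in σ gives G(x, y, kσ) − G(x, y, σ) ≈ (k − 1)σ²∇²G: a DoG is a scaled LoG plus an error that grows with k. The plain-Python sketch below samples both sides on an integer grid and reports the worst deviation relative to the peak of the scaled LoG. The function names, σ = 1.6, the grid radius and the tested values of k are illustrative assumptions, not taken from Lowe's implementation.

```python
import math

def gaussian(r2: float, sigma: float) -> float:
    """2-D isotropic Gaussian G(x, y, sigma) at squared radius r2 = x^2 + y^2."""
    return math.exp(-r2 / (2.0 * sigma**2)) / (2.0 * math.pi * sigma**2)

def laplacian_of_gaussian(r2: float, sigma: float) -> float:
    """Analytic Laplacian of the 2-D Gaussian: G * (r^2 - 2 sigma^2) / sigma^4."""
    return gaussian(r2, sigma) * (r2 - 2.0 * sigma**2) / sigma**4

def dog_vs_scaled_log_error(sigma: float, k: float, radius: int = 10) -> float:
    """Max |DoG - (k-1) sigma^2 LoG| over the grid, relative to max |(k-1) sigma^2 LoG|."""
    max_diff = 0.0
    max_ref = 0.0
    for x in range(-radius, radius + 1):
        for y in range(-radius, radius + 1):
            r2 = float(x * x + y * y)
            dog = gaussian(r2, k * sigma) - gaussian(r2, sigma)
            ref = (k - 1.0) * sigma**2 * laplacian_of_gaussian(r2, sigma)
            max_diff = max(max_diff, abs(dog - ref))
            max_ref = max(max_ref, abs(ref))
    return max_diff / max_ref

# The relative error shrinks as the scale ratio k approaches 1.
for k in (1.01, 1.1, 2 ** (1 / 3)):
    print(f"k = {k:.3f}: relative error {dog_vs_scaled_log_error(1.6, k):.3f}")
```

For the scale ratios typically used between neighbouring DoG levels, the DoG therefore tracks the shape of the scale-normalised LoG closely but not exactly.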

Future work will aim at optimising the code for additional speed-up.

The benefit of avoiding the overkill of rotation invariance in such cases is not only increased speed, but also increased discriminative power.

Only 64 dimensions are used, reducing the time for feature computation and matching while simultaneously increasing robustness.

Using the determinant of the Hessian matrix rather than its trace (the Laplacian) seems advantageous, as it fires less on elongated, ill-localised structures.
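
To see why, note that the determinant of the Hessian H = [[Lxx, Lxy], [Lxy, Lyy]] is the product of its two eigenvalues, while the trace is their sum. On a blob both eigenvalues are large, so both measures respond; across a straight ridge one eigenvalue is zero, so the trace still fires at every point along the ridge while the determinant vanishes. The sketch below checks this on two synthetic intensity functions using finite differences. It is illustrative only: the helper names and the width S are assumptions, and SURF itself evaluates the determinant on box-filter approximations Dxx, Dyy, Dxy (with a relative weight of 0.9 on the mixed term) rather than on exact derivatives.

```python
import math

def hessian(f, x, y, h=1e-3):
    """Central finite-difference estimate of (Lxx, Lyy, Lxy) for a smooth image function f(x, y)."""
    lxx = (f(x + h, y) - 2.0 * f(x, y) + f(x - h, y)) / h**2
    lyy = (f(x, y + h) - 2.0 * f(x, y) + f(x, y - h)) / h**2
    lxy = (f(x + h, y + h) - f(x + h, y - h) - f(x - h, y + h) + f(x - h, y - h)) / (4.0 * h**2)
    return lxx, lyy, lxy

def det_and_trace(f, x, y):
    """Determinant and trace (Laplacian) of the Hessian of f at (x, y)."""
    lxx, lyy, lxy = hessian(f, x, y)
    return lxx * lyy - lxy * lxy, lxx + lyy

S = 2.0  # width of the synthetic structures (arbitrary choice)

def blob(x, y):
    """Isotropic bright blob centred at the origin."""
    return math.exp(-(x * x + y * y) / (2.0 * S * S))

def ridge(x, y):
    """Bright line along the y-axis: elongated, with no preferred position along y."""
    return math.exp(-(x * x) / (2.0 * S * S))

print("blob  at (0, 0):", det_and_trace(blob, 0.0, 0.0))
for y in (0.0, 3.0, 10.0):
    # Same trace everywhere on the ridge, determinant zero: no unique location to fire on.
    print(f"ridge at (0, {y}):", det_and_trace(ridge, 0.0, y))
```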

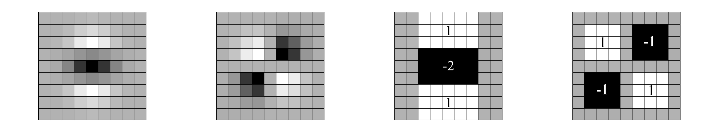

To arrive at these SURF descriptors, the authors experimented with fewer and more wavelet features, using dx² and dy², higher-order wavelets, PCA, median values, average values, etc.

The most valuable property of an interest point detector is its repeatability, i.e. whether it reliably finds the same interest points under different viewing conditions.
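
When the ground-truth homography between two views is known, a simplified, point-based version of this criterion can be computed: map each point from the first image into the second and count it as repeated if an unused detection lies within ε pixels. The sketch below assumes exactly that setup; the function names, the tolerance eps = 1.5, the greedy one-to-one assignment and the normalisation by the smaller point count are illustrative choices. Published evaluations typically apply a stricter region-overlap test and restrict the count to the image area visible in both views, neither of which is reproduced here.

```python
from __future__ import annotations

import math

def apply_homography(H: list[list[float]], p: tuple[float, float]) -> tuple[float, float]:
    """Map a point through a 3x3 homography using homogeneous coordinates."""
    x, y = p
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return u / w, v / w

def repeatability(points1, points2, H, eps: float = 1.5) -> float:
    """Share of points that reappear within eps pixels after mapping by H (greedy one-to-one)."""
    if not points1 or not points2:
        return 0.0
    unused = list(points2)
    repeated = 0
    for p in points1:
        mapped = apply_homography(H, p)
        best = min(unused, key=lambda q: math.dist(mapped, q), default=None)
        if best is not None and math.dist(mapped, best) <= eps:
            unused.remove(best)  # each detection in image 2 may be used only once
            repeated += 1
    return repeated / min(len(points1), len(points2))

# Hypothetical example: the second view is the first shifted by (+5, -2) pixels.
shift = [[1.0, 0.0, 5.0], [0.0, 1.0, -2.0], [0.0, 0.0, 1.0]]
pts1 = [(0.0, 0.0), (10.0, 10.0), (20.0, 5.0)]
pts2 = [(5.4, -2.0), (15.0, 9.0), (40.0, 40.0)]
print(repeatability(pts1, pts2, shift))  # 2 of 3 points are found again
```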

For the extraction of the descriptor, the first step consists of constructing a square region centered around the interest point, and oriented along the orientation selected in the previous section.
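
In the SURF paper this window has side 20s, where s is the scale at which the interest point was detected, and it is split into 4 × 4 sub-regions, each sampled at 5 × 5 regularly spaced points, so the sample spacing is s. A minimal sketch of the resulting sample positions follows. The function name, the half-step-centred offsets and the row/column layout are assumptions made for illustration, and interpolating image values at these (generally non-integer) positions is left out.

```python
import math

def oriented_sample_grid(cx, cy, s, theta):
    """20x20 sample positions covering a square window of side 20*s centred on (cx, cy)
    and rotated by theta (radians). Returns grid[row][col] = (x, y); sub-region (i, j),
    with i, j in 0..3, covers rows 5*i..5*i+4 and columns 5*j..5*j+4."""
    c, sn = math.cos(theta), math.sin(theta)
    grid = []
    for row in range(20):
        v = (row - 9.5) * s          # offset along the window's local y-axis
        line = []
        for col in range(20):
            u = (col - 9.5) * s      # offset along the window's local x-axis
            # Rotate the local offset (u, v) by theta and translate to the keypoint.
            line.append((cx + u * c - v * sn, cy + u * sn + v * c))
        grid.append(line)
    return grid

grid = oriented_sample_grid(100.0, 50.0, 2.0, math.radians(30))
print(grid[0][0], grid[19][19])  # opposite corners of the rotated window
```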

Probably the most widely used detector is the Harris corner detector [10], proposed back in 1988 and based on the eigenvalues of the second-moment matrix.
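
The Harris detector avoids an explicit eigen-decomposition of the second-moment matrix M by using det(M) = λ1λ2 and trace(M) = λ1 + λ2 in the cornerness measure R = det(M) − κ·trace(M)². R is positive at corners (two large eigenvalues), negative on edges (one large eigenvalue) and zero in flat regions. The pure-Python sketch below is illustrative: the function name is hypothetical, it uses central-difference gradients and an unweighted box window instead of the Gaussian window of the original formulation, and κ = 0.04 is a commonly used value rather than one taken from this document.

```python
def harris_response(img, x, y, radius=2, kappa=0.04):
    """Harris cornerness R = det(M) - kappa * trace(M)^2 at pixel (x, y).

    img is a list of rows (img[y][x]); M sums gradient products over a
    (2*radius+1)^2 box window (the original uses a Gaussian window)."""
    h, w = len(img), len(img[0])

    def grad(px, py):
        # Central differences; zero outside the valid interior.
        if not (0 < px < w - 1 and 0 < py < h - 1):
            return 0.0, 0.0
        gx = (img[py][px + 1] - img[py][px - 1]) / 2.0
        gy = (img[py + 1][px] - img[py - 1][px]) / 2.0
        return gx, gy

    sxx = syy = sxy = 0.0
    for py in range(y - radius, y + radius + 1):
        for px in range(x - radius, x + radius + 1):
            gx, gy = grad(px, py)
            sxx += gx * gx
            syy += gy * gy
            sxy += gx * gy
    det = sxx * syy - sxy * sxy   # = lambda1 * lambda2
    trace = sxx + syy             # = lambda1 + lambda2
    return det - kappa * trace * trace

# Synthetic image: a bright quadrant whose corner sits at pixel (10, 10).
img = [[1.0 if (x >= 10 and y >= 10) else 0.0 for x in range(20)] for y in range(20)]
print("corner:", harris_response(img, 10, 10))   # positive
print("edge:  ", harris_response(img, 10, 16))   # negative
print("flat:  ", harris_response(img, 3, 3))     # zero
```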

Each sub-region has a four-dimensional descriptor vector v for its underlying intensity structure: v = (∑dx, ∑dy, ∑|dx|, ∑|dy|). Concatenating v over all 4 × 4 sub-regions yields a descriptor vector of length 64.
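
Given the Haar wavelet responses dx and dy at the 20 × 20 sample points of the oriented window (expressed relative to the selected orientation), the four sums per sub-region can be concatenated over the 4 × 4 sub-regions. A minimal sketch, assuming the responses are already computed; the function name is hypothetical and any Gaussian weighting of the responses is omitted. The final normalisation to unit length makes the result invariant to a contrast change (a common scale factor on all responses).

```python
import math

def surf_like_descriptor(dx, dy):
    """64-D vector from 20x20 grids of Haar responses (grid[row][col]).

    For each 5x5-sample sub-region: (sum dx, sum dy, sum |dx|, sum |dy|)."""
    if len(dx) != 20 or len(dy) != 20 or any(len(r) != 20 for r in dx + dy):
        raise ValueError("expected 20x20 response grids")
    desc = []
    for by in range(4):
        for bx in range(4):
            sdx = sdy = sadx = sady = 0.0
            for y in range(by * 5, by * 5 + 5):
                for x in range(bx * 5, bx * 5 + 5):
                    sdx += dx[y][x]
                    sdy += dy[y][x]
                    sadx += abs(dx[y][x])
                    sady += abs(dy[y][x])
            desc.extend([sdx, sdy, sadx, sady])
    # Unit length: scaling all responses by a constant leaves the result unchanged.
    norm = math.sqrt(sum(v * v for v in desc))
    return [v / norm for v in desc] if norm > 0 else desc

# A uniform horizontal gradient: only the dx-related entries are non-zero.
d = surf_like_descriptor([[1.0] * 20 for _ in range(20)], [[0.0] * 20 for _ in range(20)])
print(len(d), d[:4])
```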

Examples are the salient region detector proposed by Kadir and Brady [13], which maximises the entropy within the region, and the edge-based region detector proposed by Jurie et al. [14].

Once IΣ has been calculated, it takes only four look-ups and three additions or subtractions to calculate the sum of the intensities over any upright, rectangular area, independent of its size.
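
The integral image stores at each location the sum of all pixels above and to the left of it, so any rectangle sum reduces to a constant number of look-ups. The plain-Python sketch below illustrates this; it is not the paper's implementation, the function names are hypothetical, and the extra zero row and column are a convenience so that rectangles touching the image border need no special cases.

```python
def integral_image(img):
    """ii[y][x] = sum of img[0..y-1][0..x-1]; padded with a leading zero row and column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum  # sum of rows above + current row prefix
    return ii

def box_sum(ii, x0, y0, x1, y1):
    """Sum of img[y][x] for y0 <= y < y1 and x0 <= x < x1.

    Four look-ups and three +/- operations, whatever the rectangle's size."""
    return ii[y1][x1] - ii[y0][x1] - ii[y1][x0] + ii[y0][x0]

img = [[1, 2, 3, 4],
       [5, 6, 7, 8],
       [9, 10, 11, 12]]
ii = integral_image(img)
print(box_sum(ii, 1, 1, 3, 3))  # 6 + 7 + 10 + 11, prints 34
```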

Anisotropic scaling and perspective effects are assumed to be second-order effects that are covered to some degree by the overall robustness of the descriptor.

For example, their 27 × 27 filter, three times the size of the base 9 × 9 filter (σ = 1.2), corresponds to σ = 3 × 1.2 = 3.6 = s. Furthermore, as the Frobenius norm remains constant for their filters, they are already scale normalised [26].
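
The same proportionality gives the scale of any box filter: for a filter of side L, s = 1.2 × L / 9. The helper below is hypothetical and simply applies that rule to the first-octave filter sizes used by SURF (9, 15, 21 and 27).

```python
BASE_SIZE = 9      # side length of the smallest box filter
BASE_SIGMA = 1.2   # Gaussian scale approximated by the 9 x 9 filter

def box_filter_scale(size: int) -> float:
    """Gaussian scale s approximated by a box filter of side `size` (hypothetical helper)."""
    if size <= 0:
        raise ValueError("filter size must be positive")
    return BASE_SIGMA * size / BASE_SIZE

for size in (9, 15, 21, 27):
    print(size, round(box_filter_scale(size), 2))  # 1.2, 2.0, 2.8, 3.6
```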

This PCA-SIFT yields a 36-dimensional descriptor that is fast for matching, but it proved less distinctive than SIFT in a second comparative study by Mikolajczyk et al. [8], and its slower feature computation reduces the benefit of fast matching.

Due to space limitations, only results on similarity-threshold-based matching are shown in Fig. 7, as this technique is better suited to represent the distribution of the descriptor in its feature space [8] and is in more general use.
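
The two matching strategies compared in Fig. 4 differ in what they accept: similarity-threshold matching keeps every pair of descriptors closer than a threshold t, so one descriptor may match several others, while nearest-neighbour-ratio matching keeps only the nearest neighbour, and only when it is clearly closer than the second-nearest one. A brute-force sketch using Euclidean distance follows; the function names, t and the ratio value are free, hypothetical parameters rather than values from the paper.

```python
import math

def threshold_matches(desc1, desc2, t):
    """All pairs (i, j) closer than t; one descriptor may match several others."""
    return [(i, j)
            for i, a in enumerate(desc1)
            for j, b in enumerate(desc2)
            if math.dist(a, b) < t]

def nn_ratio_matches(desc1, desc2, ratio):
    """Pairs (i, j) where j is i's nearest neighbour and d1 / d2 < ratio."""
    if len(desc2) < 2:
        return []
    matches = []
    for i, a in enumerate(desc1):
        ranked = sorted((math.dist(a, b), j) for j, b in enumerate(desc2))
        (d1, j1), (d2, _) = ranked[0], ranked[1]
        if d2 > 0 and d1 / d2 < ratio:  # reject ambiguous nearest neighbours
            matches.append((i, j1))
    return matches

desc1 = [(0.0, 0.0), (5.0, 5.0)]
desc2 = [(0.1, 0.0), (0.12, 0.0), (5.0, 5.1), (9.0, 9.0)]
print(threshold_matches(desc1, desc2, t=0.5))     # [(0, 0), (0, 1), (1, 2)]
print(nn_ratio_matches(desc1, desc2, ratio=0.8))  # [(1, 2)]
```

The first query point has two almost equally close candidates: the threshold rule accepts both, whereas the ratio rule rejects the point as ambiguous.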

The authors also propose an upright version of their descriptor (USURF) that is not invariant to image rotation and is therefore faster to compute and better suited to applications where the camera remains more or less horizontal.

The SIFT descriptor still seems to be the most appealing descriptor for practical uses, and hence also the most widely used nowadays.