Q2. Why do both protocols emphasize hop-by-hop error recovery?

Because the cost of end-to-end repair is exponential with the path length, both protocols emphasize hop-by-hop error recovery, where loss detection and recovery are limited to a small number of hops (ideally one).

Q3. How do the authors measure the timing of the UART message?

By opening up sockets to each node from a desktop computer, the authors timestamp each UART message with precision on the order of milliseconds and track the propagation of each page.

Q4. How much does spatial multiplexing limit a node’s broadcast rate?

Spatial multiplexing limits a node’s broadcast rate to no greater than one-third the maximum rate due to the single-channel, broadcast medium.

Q5. What is the way to reduce the propagation time?

One might suggest that starting the propagation in the center might help to eliminate the behavior of following the edge and also decrease the propagation time by about half.

Q6. What did the authors do with the suppression of data packets?

The authors experimented with suppressing the transmission of data packets if k redundant data packets were overheard while in TX, where lower values of k represented more aggressive suppression.
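The suppression rule described here can be sketched as a small counter. This is only an illustration, not the authors' implementation: the class name `DataSuppressor`, its methods, and the choice to count every overheard data packet as redundant are assumptions made for the example.

```python
class DataSuppressor:
    """Hypothetical sketch: suppress data transmission after k overheard packets."""

    def __init__(self, k: int) -> None:
        if k < 1:
            raise ValueError("k must be at least 1")
        self.k = k            # suppression threshold; lower k is more aggressive
        self.overheard = 0    # redundant data packets overheard while in TX

    def on_overheard_data(self) -> None:
        # Assumption: every overheard data packet counts as redundant.
        self.overheard += 1

    def should_transmit(self) -> bool:
        # Transmit only while fewer than k redundant packets have been overheard.
        return self.overheard < self.k


suppressor = DataSuppressor(k=2)
suppressor.on_overheard_data()
still_sending = suppressor.should_transmit()   # one packet overheard, below k
suppressor.on_overheard_data()
now_suppressed = not suppressor.should_transmit()  # threshold k reached
```

With `k=1` a single overheard packet is enough to suppress transmission, which corresponds to the most aggressive setting.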

Q7. What is the cause of the propagation of a single page along good links?

One cause is Deluge’s depth-first tendency, where propagation of a single page along good links is not blocked by delays caused by poor links.

Q8. How does the propagation rate increase along the diagonal?

By doubling τr, the propagation rate along the diagonal improves by about 2.7 times while the propagation rate along the edge remains nearly identical, leading to an improvement in overall propagation performance.

Q9. How does Deluge take advantage of the quick edges?

Even though placing the source at the center effectively reduces the diameter by about half, Deluge is unable to take advantage of the quick edges since nodes in the center experience a greater number of collisions.

Q10. What is the expected time to transmit just the data packets?

The expected time required to transmit just the data packets is

$$E[T_{tx}] = E[N_{tPkt}] \cdot T_{tPkt} \cdot N, \tag{2}$$

where $T_{tPkt}$ is the transmission time for a single packet.
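A short worked example of expression (2) follows. The answer above defines only the per-packet transmission time, so two readings are assumed here: E[NtPkt] is taken to be the expected number of transmissions per packet, and N the number of packets being sent. The function name and the numeric values are hypothetical and chosen only to show the arithmetic.

```python
def expected_tx_time(expected_tx_per_packet: float,
                     packet_time_s: float,
                     num_packets: int) -> float:
    """Evaluate E[Ttx] = E[NtPkt] * TtPkt * N.

    Assumed meanings (not stated in the text above):
      expected_tx_per_packet -- E[NtPkt], expected transmissions per packet
      num_packets            -- N, number of data packets to send
    packet_time_s is TtPkt, the transmission time for a single packet.
    """
    return expected_tx_per_packet * packet_time_s * num_packets


# Hypothetical values: 1.25 transmissions per packet on average,
# 20 ms per packet, 48 packets.
example_time_s = expected_tx_time(1.25, 0.020, 48)
```

With these illustrative inputs the product is 1.25 × 0.020 s × 48 = 1.2 s.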

Q11. What is the only trigger that causes R to request data from S?

The only trigger that causes R to request data from S is the receipt of an advertisement stating the availability of a needed page.

Q12. What is the effect of the SNACK constraint on the rate of requests from R?

The rate of requests from R will decrease with the decreasing advertisement rate in the steady state since S will not know that R is not up-to-date.

Q13. Why is TOSSIM a good choice for simulating the network at the bit level?

The main advantage with simulating at the bit-level is that the transmission and reception of bits govern the actions of each layer, rather than modeling each layer with its own set of parameters.

Q14. How many nodes did the authors use to evaluate and investigate the behavior of Deluge?

The authors used TOSSIM to evaluate and investigate the behavior of Deluge with network sizes on the order of hundreds of nodes and tens of hops.

Q15. How many pages does Deluge take to disseminate?

For the linear case, the simulations show that Deluge takes about 40 seconds to disseminate each page to 152 nodes across 15 hops.

Q16. Why is it unlikely for many senders in a region to begin transmitting data?

Because requests are unicast to the node that most recently advertised, it is unlikely for many senders in a region to begin transmitting data.

Q17. What is the behavior of the propagation of a square topology?

With this topology, the propagation behaves as expected: the propagation progresses at a fairly constant rate in a nice wavefront pattern from corner to corner.

Q18. What is the way to improve the propagation time for a single page?

This structured approach should improve the propagation time for a single page, but inhibits the use of pipelining since it is more difficult to minimize interference between transfers of different pages.

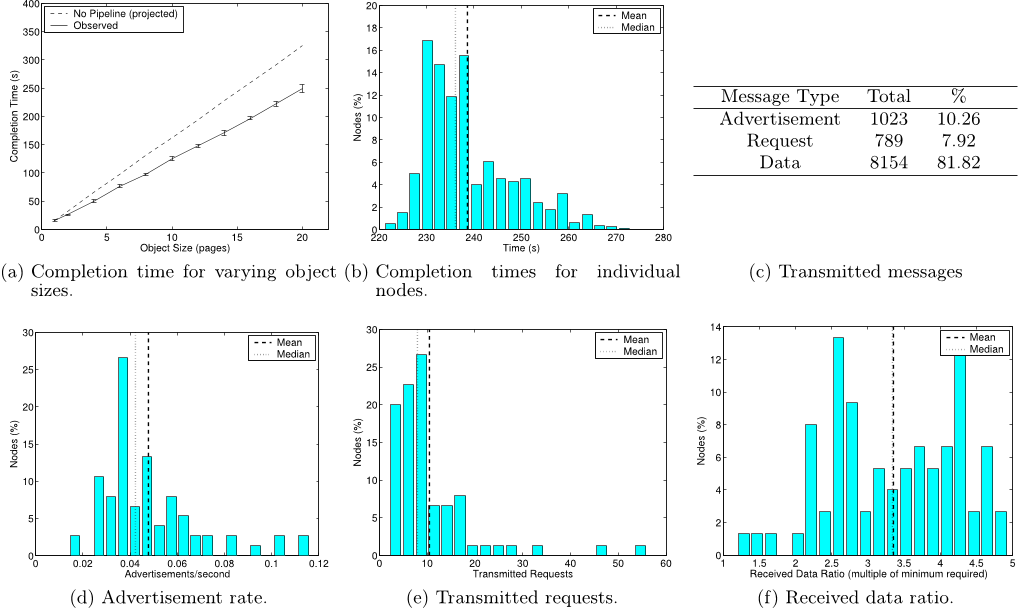

Q19. How do the authors plot the average advertisement rate for each node?

Using the transmission count information on advertisements, the authors plot a histogram of the average advertisement rate for each node by dividing the count by the completion time.
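The computation described here (advertisement count divided by completion time, then binned) can be sketched as below. This is an illustrative sketch rather than the authors' analysis script: the function names, the use of a single network-wide completion time, and the bin width are all assumptions.

```python
from collections import Counter


def advertisement_rates(adv_counts: dict, completion_time_s: float) -> dict:
    """Average advertisement rate per node: count / completion time."""
    if completion_time_s <= 0:
        raise ValueError("completion time must be positive")
    return {node: count / completion_time_s for node, count in adv_counts.items()}


def histogram(values, bin_width: float) -> dict:
    """Count how many values fall into each bin of the given width."""
    bins = Counter(int(v // bin_width) for v in values)
    return dict(sorted(bins.items()))


# Hypothetical data: advertisement counts for three nodes over a 60 s run.
counts = {1: 30, 2: 60, 3: 90}
rates = advertisement_rates(counts, completion_time_s=60.0)
bins = histogram(rates.values(), bin_width=1.0)
```

Here bin index `i` covers rates in the half-open interval from `i * bin_width` up to `(i + 1) * bin_width` advertisements per second.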