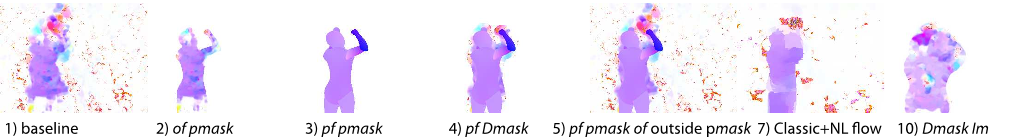

![Figure 1. Overview of our annotation and evaluation. (a-d) A video frame annotated by a puppet model [36]. (a) image frame, (b) puppet flow [35], (c) puppet mask, (d) joint positions and relations. Three types of joint relations are used: 1) distance and 2) orientation of the vector connecting pairs of joints; i.e. the magnitude and the direction of the vector u. 3) Inner angle spanned by two vectors connecting triples of joints; i.e. the angle between the two vectors u and v. (e-h) From left to right, we gradually provide the baseline algorithm (e) with different levels of ground truth from (b) to (d). The trajectories are displayed in green.](/figures/figure-1-overview-of-our-annotation-and-evaluation-a-d-a-3jrgh78x.png)
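
The three joint relations named in the caption (distance, orientation, and inner angle) can be illustrated with a short sketch. This is a minimal illustration under stated assumptions, not the authors' implementation: joints are taken to be 2D `(x, y)` pixel coordinates, the function names are hypothetical, and the inner angle is assumed to be measured at the middle joint of a triple, which the caption does not specify.

```python
import math


def joint_vector(a, b):
    """Vector from joint a to joint b; each joint is an (x, y) pair."""
    return (b[0] - a[0], b[1] - a[1])


def distance(a, b):
    """Relation 1: magnitude of the vector u connecting a pair of joints."""
    u = joint_vector(a, b)
    return math.hypot(u[0], u[1])


def orientation(a, b):
    """Relation 2: direction of u in radians, in the range (-pi, pi]."""
    u = joint_vector(a, b)
    return math.atan2(u[1], u[0])


def inner_angle(a, b, c):
    """Relation 3: angle between u = a - b and v = c - b, in radians.

    Assumption: the angle is measured at the middle joint b.
    """
    u = joint_vector(b, a)
    v = joint_vector(b, c)
    norm_u = math.hypot(u[0], u[1])
    norm_v = math.hypot(v[0], v[1])
    if norm_u == 0 or norm_v == 0:
        raise ValueError("inner angle is undefined for coincident joints")
    cos_angle = (u[0] * v[0] + u[1] * v[1]) / (norm_u * norm_v)
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, cos_angle))
    return math.acos(cos_angle)
```

For example, `inner_angle(shoulder, elbow, wrist)` would give the elbow angle for three hypothetical joint positions.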

2,372 citations

...6M” [10] that includes images and 3D poses of people but are captured in the controlled indoor environments, whereas our dataset includes real-world images but provides 2D poses only....

[...]

850 citations

...We keep the softmax loss on JHMDB as it is the default loss used by previous methods on this dataset....

[...]

...To demonstrate the competitiveness of our baseline methods, we also apply them to the JHMDB dataset [18] and compare the results against the previous state-of-the-art....

[...]

...A few datasets, such as CMU [20], MSR Actions [37], UCF Sports [29] and JHMDB [18] provide spatio-temporal annotations in each frame for short trimmed videos....

[...]

...One key difference between AVA and JHMDB (as well as many other action datasets) is that action labels in AVA are not mutually exclusive, i.e., multiple labels can be assigned to one bounding box....
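
The practical consequence of mutually exclusive versus non-exclusive labels can be sketched as follows. This is an illustrative sketch only: the excerpts state that softmax is used on JHMDB, but the per-class sigmoid with a 0.5 threshold shown here is one common way to handle non-exclusive labels and is an assumption, not something the excerpt confirms. All function names are hypothetical.

```python
import math


def softmax(scores):
    """Convert raw class scores into one distribution that sums to 1."""
    shift = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def predict_exclusive(scores):
    """Mutually exclusive labels: return the single best class index."""
    probs = softmax(scores)
    return max(range(len(probs)), key=probs.__getitem__)


def predict_multilabel(scores, threshold=0.5):
    """Non-exclusive labels: score each class independently and keep
    every class whose probability reaches the (assumed) threshold."""
    return [i for i, s in enumerate(scores) if sigmoid(s) >= threshold]
```

With the same scores for one bounding box, `predict_exclusive` always returns exactly one label, while `predict_multilabel` may return several or none.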

[...]

...We can also see that using Deep Flow extracted flows and stacking multiple flows are both helpful for JHMDB as well as AVA....

[...]

809 citations

[...]

694 citations

684 citations

...We report performance on two widely adopted video action detection datasets: JHMDB [52] and UCF-101-24 [45]....

[...]

40,826 citations

...The multi-class classification is done by LIBSVM [4] using a one-vs-all approach....

[...]

31,952 citations

...HOG: Histograms of oriented gradients [5] of 8 bins are computed in a 32-pixels × 32-pixels × 15-frames spatiotemporal volume surrounding the trajectory....

[...]

5,670 citations

3,833 citations

...Commonly considered sources for action recognition are sport activities [18], YouTube videos [21], or movie scenes [14, 16]....

[...]

...HOF: Histograms of optical flow [16] are computed similarly as HOG except that there are 9 bins with the additional one corresponding to pixels with optical flow magnitude lower than a threshold....
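
The 9-bin layout described above (8 orientation bins plus one bin for low-magnitude flow) can be sketched as below. This is a simplified illustration, not the cited descriptor: each flow vector casts one unweighted vote, the threshold value is a placeholder, and the spatiotemporal cell structure is omitted. The function name and parameters are hypothetical.

```python
import math


def hof_histogram(flow_vectors, threshold=1.0, n_orientation_bins=8):
    """Bin (dx, dy) flow vectors into orientation bins plus one extra bin.

    The last bin counts vectors whose magnitude is below `threshold`.
    Assumption: unweighted votes; the threshold default is a placeholder.
    """
    hist = [0] * (n_orientation_bins + 1)
    bin_width = 2 * math.pi / n_orientation_bins
    for dx, dy in flow_vectors:
        if math.hypot(dx, dy) < threshold:
            hist[-1] += 1  # extra bin for near-zero motion
            continue
        angle = math.atan2(dy, dx) % (2 * math.pi)  # map to [0, 2*pi)
        # The modulo guards against an angle that rounds up to 2*pi.
        index = int(angle / bin_width) % n_orientation_bins
        hist[index] += 1
    return hist
```

Dropping the magnitude test and the extra bin leaves a plain 8-bin orientation histogram, which mirrors the bin count quoted for HOG, although HOG is computed on image gradients rather than optical flow.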

[...]

3,533 citations

...Since HMDB51 [14] is the most challenging dataset among the current movie datasets [30], we build on it to create J-HMDB....

[...]

...Training and testing splits are generated as in [14]....

[...]

...According to [30], the HMDB51 dataset [14] is the most challenging dataset for vision algorithms, with the best method achieving only 48% accuracy....

[...]

...The HMDB51 database [14] contains more than 5,100 clips of 51 different human actions collected from movies or the Internet....

[...]

...We focus on one of the most challenging datasets for action recognition (HMDB51 [14]) and on the approach that achieves the best performance on this dataset (Dense Trajectories [30])....

[...]