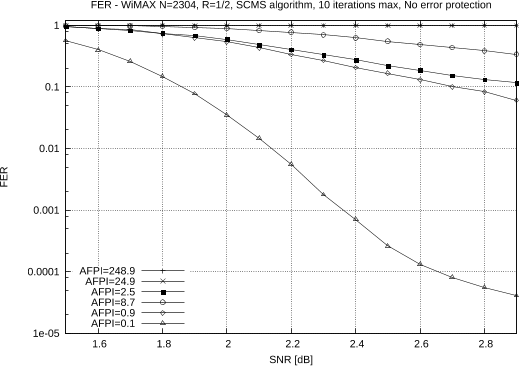

Abstract: Author(s): Huang, Chu-Hsiang | Advisor(s): Dolecek, Lara | Abstract: In traditional information processing systems, inference algorithms are designed to collect and process information subject to noisy transmission. It is saliently assumed that the inference algorithms themselves have error-free implementations. However, with the scaling of process technologies and the increase in process variations, nano-devices will be inherently unreliable. Producing reliable decisions in systems with unreliable components thus becomes an important and challenging problem. In this dissertation, we provide a novel information theoretic approach to analyze and develop robust system design for iterative information processing algorithms running on noisy hardware. We characterize the fundamental performance limits of the systems under the joint effect of communication/environment noise and hardware noise. Based on this analysis, we then propose new theory-guided methods that guarantee reliable performance under hardware errors of varied characteristics. The proposed methods successfully explore the inherent robustness of the information processing algorithms and leverage the error-tolerance of the considered applications to minimize the overhead introduced in robust system design. We investigate a wide range of iterative information processing systems implemented on noisy hardware via the proposed information theoretic approach. Starting from iterative message passing decoders, we study different decoder implementations including finite-precision and infinite precision decoders subject to various types of hardware errors. We identify the performance-critical components in the iterative decoders via a theoretical analysis and develop robust system designs to assign computation units with different error characteristics to different components in the decoder. Then, we apply the proposed analysis and design methodology to general inference problems on probabilistic graphical models and develop robust implementations of the general belief propagation algorithms by noise cancellation based on averaging. For certain applications with error-tolerance, e.g. image processing and classification based on machine learning, we propose theory-guided adaptive coding schemes inspired by approximate computing to correct errors without additional hardware redundancy. The redundant free codes have the same performance as the traditional codes. Our algorithm-guided approach offers up to 100x reduction in the error rates relative to the nominal system designs.