HAL Id: hal-00749180

https://hal.archives-ouvertes.fr/hal-00749180

Submitted on 6 Nov 2012


Constrained Epsilon-Minimax Test for Simultaneous Detection and Classification

Lionel Fillatre

To cite this version:

Lionel Fillatre. Constrained Epsilon-Minimax Test for Simultaneous Detection and Classification. IEEE Transactions on Information Theory, Institute of Electrical and Electronics Engineers, 2011, 57 (12), pp. 8055-8071. DOI: 10.1109/TIT.2011.2170114. hal-00749180.

IEEE TRANSACTIONS ON INFORMATION THEORY, VOL. 57, NO. 12, DECEMBER 2011 8055


Abstract—A constrained epsilon-minimax test is proposed to detect and classify nonorthogonal vectors in Gaussian noise with a general covariance matrix, in the presence of linear interferences. This test is epsilon-minimax in the sense that it incurs only a small loss of optimality with respect to the purely theoretical and incalculable constrained minimax test, which minimizes the maximum classification error probability subject to a constraint on the false alarm probability. This loss becomes increasingly negligible as the signal-to-noise ratio grows. Furthermore, it is also an epsilon-equalizer test, since its classification error probabilities are equalized up to a negligible difference. When the signal-to-noise ratio is sufficiently large, an asymptotically equivalent test with a very simple form is proposed. This equivalent test coincides with the generalized likelihood ratio test when the vectors to classify are strongly separated in terms of Euclidean distance. Numerical experiments on active user identification in a multiuser system confirm the theoretical findings.

Index Terms—Constrained minimax test, generalized likelihood ratio test, linear nuisance parameters, multiple hypothesis testing, statistical classification, user activity detection.

I. INTRODUCTION

The problem of detecting and classifying a vector in noisy measurements, under uncertainty about the presence of that vector, often appears in engineering applications, including radar and sonar signal processing [1], image processing [2], speech segmentation [3], [4], integrity monitoring of navigation systems [5], quantitative nondestructive testing [6], network monitoring [7] and digital communication [8], among others. This paper deals with the following detection and classification problem. It is assumed that a measurement vector $y \in \mathbb{R}^n$ consists either of a vector of interest (for example, a target or an anomaly) plus an unknown nuisance vector in additive Gaussian noise, or of an unknown nuisance vector in additive Gaussian noise only. If present, the vector of interest must be detected and classified. The unknown nuisance vector belongs to the nuisance parameter subspace spanned by the columns of a known $n \times q$ matrix $H$. Hence, the observation model has the form

$$y = \theta + Hx + \xi \qquad (1)$$

Manuscript received November 29, 2009; revised June 08, 2011; accepted June 28, 2011. Date of current version December 07, 2011. This work was supported in part by the French National Agency of Research under Grant ANR-08-SECU-013-02.

The author is with the ICD, LM2S, Université de Technologie de Troyes (UTT), UMR STMR, CNRS 6279, BP 2060, 10010, Troyes, France (e-mail: lionel.fillatre@utt.fr).

Communicated by M. Lops, Associate Editor for Detection and Estimation.

Color versions of one or more of the figures in this paper are available online at http://ieeexplore.ieee.org.

Digital Object Identifier 10.1109/TIT.2011.2170114

where both the nuisance parameter vector $x \in \mathbb{R}^q$ and the vector $\theta \in \mathbb{R}^n$ are unknown and deterministic. The zero-mean Gaussian noise vector $\xi$ has the known positive definite general covariance matrix $\Sigma$. The vector $\theta$ belongs to the known set of $K+1$ different vectors $\{\theta_0 = 0, \theta_1, \ldots, \theta_K\}$, also called the vector constellation. The relation between the dimension $n$ of the observed vector and the number $K$ of non-null vectors $\theta_l$ is arbitrary, but it is assumed that $n > q$.

Three objectives are to be achieved: i) vector detection, which consists of deciding whether $\theta \neq 0$ for a given false alarm probability $\alpha$ (the probability of declaring an alarm when the observation vector is anomaly-free); ii) vector classification, which consists of specifying the actual index $l$ of the vector $\theta = \theta_l$; and iii) insensitivity to the nuisance parameters, which consists of making the simultaneous detection/classification decision independently of the value of the unknown vector $x$.

A. Relation to Previous Work

From the statistical point of view, this problem of simultaneous detection/classification can be viewed as a testing problem between several composite hypotheses [9], [10]. The goal is to design a statistical test that achieves the three objectives above according to a prefixed optimality criterion.

The first approach to the design of statistical detection and classification tests is the uncoupled design strategy, in which detection performance is optimized under the false alarm constraint and classification is gated by this optimal detection. On the one hand, the classical Neyman-Pearson criterion of vector detection [10] states that it is desirable to minimize the probability of missing the target subject to a constraint on the false alarm probability. On the other hand, in terms of target classification, it is desirable to minimize the probabilities of misclassifying the target. All the above-mentioned probabilities generally vary as a function of both the vector $\theta$ and the nuisance parameter $x$. Hence, the uniform minimization of these probabilities with respect to $\theta$ and $x$ is in general impossible, and there is no guarantee that the global performance of this uncoupled strategy will be acceptable. Consequently, a different approach must be taken, namely coupled design strategies for detection and classification. These strategies have been studied by only a few authors. Pioneering works include the papers [11]–[14]. The common ground of these studies is the Bayesian point of view, i.e., prior probabilities are assigned to all the parameters so that the average performance can be optimized. The problem of simultaneous detection and classification using a combination of a generalized likelihood ratio test and a maximum-likelihood classifier is studied in [15], [16]. This strategy is optimal only in some cases.

0018-9448/$26.00 © 2011 IEEE


Contrary to a purely Bayesian criterion, which needs a complete statistical description of the problem, the minimax criterion is well adapted to detection problems where some parameters are deterministic and unknown (typically the nuisance parameter $x$) and the appearance probability of each vector is unknown. This criterion consists of minimizing the largest probability of making a decision error (typically a missed detection or a classification error). In [17], the generalized likelihood ratio test approach is extended to multiple composite hypothesis testing by breaking the problem into a sequence of binary composite hypothesis tests; in some cases, sufficient conditions for the minimax optimality of this strategy are provided. Lastly, a general framework to design minimax tests with a prefixed false alarm level, namely the constrained minimax tests, between multiple hypotheses composed of a finite number of parameters is established in [18].

It should be mentioned that some papers, such as [19], [20], study the asymptotic performance of Bayesian tests between multiple hypotheses in the absence of constraints on the false alarm probability. When the number of observations is very large, these papers show that the error probabilities depend only on the Kullback-Leibler information between the hypotheses. These results have not yet been extended to multiple hypothesis testing with a constraint on the false alarm probability.

B. Motivation of the Study

The design of the optimal constrained minimax test mainly depends on three points: 1) the geometric complexity of the vector constellation, 2) the covariance matrix of the Gaussian noise and 3) the presence of nuisance parameters. To underline the importance of these points, the following three cases must be distinguished.

In the simplest case, the vectors $\theta_l$ are orthogonal and have the same norm (the least complex vector constellation), the covariance matrix $\Sigma$ is the identity matrix (possibly multiplied by a known scalar) and there is no nuisance parameter $x$. The optimal solution of the problem is given in [9], [21]: this is the so-called $K$-slippage problem.

In a more difficult case, the vectors $\theta_l$ are not orthogonal and/or have different norms (the most complex vector constellation), the Gaussian noise has a general covariance matrix $\Sigma$ (not necessarily diagonal) and there is no nuisance parameter $x$. The theoretically optimal solution is given by the constrained minimax test [18], but it is generally intractable. This optimal test compares the maximum of weighted likelihood ratios with a threshold to make its decision. Although the existence of the optimal solution is established, this solution depends on some unknown coefficients, namely the optimal weights and the threshold. Furthermore, a general covariance matrix $\Sigma$ plays a significant role in the calculation of the optimal weights, even if the vector constellation is simple.

In fact, it is always possible to obtain a diagonal covariance matrix after prefiltering, but this operation may make the vector constellation more complex. For example, orthogonal vectors of interest may no longer be orthogonal after prefiltering. For all these reasons, it is often impossible to calculate the optimal weights easily, or even to reduce the number of weights to be determined by using invariance principles [10], [22]. The optimization problem to be solved for calculating the optimal weights is highly nonlinear and leads to a combinatorial explosion.
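To illustrate how prefiltering can destroy orthogonality, the following Python sketch whitens two orthogonal vectors with the inverse Cholesky factor $L^{-1}$ of a covariance matrix $\Sigma = LL^T$. This is a valid prefilter because $L^{-T}L^{-1} = \Sigma^{-1}$, although it is not the symmetric square root $\Sigma^{-1/2}$; both give the same inner products $\theta_1^T\Sigma^{-1}\theta_2$. The $2 \times 2$ matrix and the vectors are hypothetical values chosen for illustration.

```python
import math

def cholesky(sigma):
    """Return the lower-triangular L such that sigma = L L^T (sigma SPD)."""
    n = len(sigma)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][i] = math.sqrt(sigma[i][i] - s)
            else:
                L[i][j] = (sigma[i][j] - s) / L[j][j]
    return L

def forward_solve(L, b):
    """Solve L v = b by forward substitution, i.e. return v = L^{-1} b."""
    v = []
    for i in range(len(b)):
        v.append((b[i] - sum(L[i][k] * v[k] for k in range(i))) / L[i][i])
    return v

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

# Hypothetical correlated noise and two orthogonal vectors of interest.
sigma = [[1.0, 0.5], [0.5, 1.0]]
theta1, theta2 = [1.0, 0.0], [0.0, 1.0]

L = cholesky(sigma)
w1, w2 = forward_solve(L, theta1), forward_solve(L, theta2)
print(dot(theta1, theta2))  # 0.0: orthogonal before prefiltering
print(dot(w1, w2))          # about -0.667: no longer orthogonal after prefiltering
```

Here the whitened inner product equals the off-diagonal entry of $\Sigma^{-1}$, namely $-0.5/0.75 = -2/3$, so any nonzero correlation in $\Sigma$ couples the two vectors.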

Example 1 (Slippage Problem With Unstructured Noise): This example is directly related to the important problem of detecting outliers in multivariate normal data [23]. Let $y \in \mathbb{R}^n$ be a Gaussian random vector with zero mean and a known general covariance matrix $\Sigma$ under hypothesis $\mathcal{H}_0$. Under hypothesis $\mathcal{H}_l$, the $l$th component of $y$ has the known mean $a_l$. Hence, the vector to detect and classify is $\theta_l = a_l e_l$, where $e_l$ denotes the vector with a 1 in the $l$th coordinate and 0's elsewhere. Since the covariance is known but differs from the identity matrix, it is no longer possible to use the well-known principle of invariance to solve such a slippage problem [9], and the optimal solution is still unknown. Example 6 shows that the results proposed in this paper can be used to solve this slippage problem.
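A minimal Python sketch of the Example 1 setting follows. It assumes hypothetical amplitudes $a_l$ and a hypothetical equicorrelated covariance matrix, builds the constellation $\theta_0 = 0$, $\theta_l = a_l e_l$, and draws observations $y = \theta_l + Lu$ with $u \sim \mathcal{N}(0, I_n)$, so that the noise covariance is $LL^T = \Sigma$.

```python
import math
import random

def cholesky(sigma):
    """Return the lower-triangular L such that sigma = L L^T (sigma SPD)."""
    n = len(sigma)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                L[i][i] = math.sqrt(sigma[i][i] - s)
            else:
                L[i][j] = (sigma[i][j] - s) / L[j][j]
    return L

def slippage_constellation(a):
    """Return [theta_0, ..., theta_n] with theta_0 = 0 and theta_l = a_l e_l."""
    n = len(a)
    thetas = [[0.0] * n]
    for l in range(n):
        theta = [0.0] * n
        theta[l] = a[l]
        thetas.append(theta)
    return thetas

def sample(theta, L, rng):
    """Draw y = theta + L u with u ~ N(0, I_n); the noise covariance is L L^T."""
    u = [rng.gauss(0.0, 1.0) for _ in theta]
    return [theta[i] + sum(L[i][k] * u[k] for k in range(i + 1))
            for i in range(len(theta))]

# Hypothetical parameters: n = 3, equicorrelated noise, known slippage amplitudes.
a = [2.0, 3.0, 2.5]
sigma = [[1.0, 0.4, 0.4], [0.4, 1.0, 0.4], [0.4, 0.4, 1.0]]
thetas = slippage_constellation(a)
L = cholesky(sigma)
rng = random.Random(0)
y = sample(thetas[2], L, rng)  # one observation under hypothesis H_2
```

Because $\Sigma$ is not diagonal, a shift in one coordinate is statistically entangled with the other coordinates, which is why the invariance argument of the classical slippage problem no longer applies.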

In the most difficult case, the vectors $\theta_l$ are not orthogonal and/or have different norms, the Gaussian noise has a general covariance matrix $\Sigma$ and there is an unknown nuisance parameter $x$. To our knowledge, the optimal constrained minimax test is unknown in this case. The main reasons for this lack of results are the following. First, the presence of linear nuisance parameters complicates the mutual geometry between the vectors. Next, there is an unavoidable antagonism between the detection and classification performances of the test: for example, to get small classification errors, it is necessary to accept a loss of sensitivity in the probability of detection. The tradeoff between these two requirements is essentially based on the worst case of detection and the worst case of classification, which are generally difficult to identify. Finally, as underlined in [24]–[27], the analytic calculation of the miss detection probability and of the classification error probabilities is intractable, which makes the derivation of an optimal test difficult.

Example 2 (Integrity Monitoring of Navigation Systems): Let $y$ be a Gaussian random vector with the known covariance matrix $\Sigma$. Under hypothesis $\mathcal{H}_0$, its mean is $Hx$, where $x$ is the vector of unknown user parameters and $H$ is a matrix describing the measurement system [5]. Under hypothesis $\mathcal{H}_l$, $y$ is contaminated by a scalar error with intensity $a_l$. The common solution, namely the parity space approach, involves two steps. First, the unknown user parameters are eliminated by projecting $y$ onto the null space of $H^T$, i.e., the orthogonal complement of the column space of $H$. This null space is called the parity space in the analytical redundancy literature [28]. Next, the error is detected and classified (isolated) directly in the parity space. Unfortunately, the first step may create linear dependencies between the possible error signatures in the parity space, and in this case the problem has no known theoretical solution. Example 7 shows how the method proposed in this paper solves this problem.
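The parity space step, and the way it can create linear dependencies between error signatures, can be illustrated with a short Python sketch. It assumes, hypothetically, $n = 3$ measurements, a single unknown common parameter ($H$ is a column of ones) and error signatures $e_1, e_2, e_3$. The projector $P = I_n - H(H^TH)^{-1}H^T$ onto the parity space is applied through an orthonormal basis of the column space of $H$.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def orthonormal_basis(cols):
    """Gram-Schmidt on the (linearly independent) columns of H."""
    basis = []
    for c in cols:
        v = list(c)
        for q in basis:
            p = dot(q, v)
            v = [vi - p * qi for vi, qi in zip(v, q)]
        norm = math.sqrt(dot(v, v))
        basis.append([vi / norm for vi in v])
    return basis

def parity_project(v, basis):
    """Return P v = v - Q Q^T v, the component of v in the parity space."""
    for q in basis:
        p = dot(q, v)
        v = [vi - p * qi for vi, qi in zip(v, q)]
    return v

H_cols = [[1.0, 1.0, 1.0]]   # hypothetical: one unknown common bias
Q = orthonormal_basis(H_cols)
x = 2.5                      # arbitrary nuisance value
print(parity_project([x * h for h in H_cols[0]], Q))  # ~[0, 0, 0]: Hx is removed

signatures = [[1.0 if i == l else 0.0 for i in range(3)] for l in range(3)]
projected = [parity_project(s, Q) for s in signatures]
total = [sum(p[i] for p in projected) for i in range(3)]
print(total)  # ~[0, 0, 0]: P e_1 + P e_2 + P e_3 = 0, a linear dependence
```

The projected signatures remain pairwise distinct, since $P(e_l - e_j) = e_l - e_j \neq 0$, so the hypotheses stay distinguishable; yet the three of them are linearly dependent in the parity space, which is the situation described above.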

Example 3 (New User Identification in a Multiuser System): In a multiuser system, after chip-matched filtering and chip-rate sampling, the received signal vector under hypothesis $\mathcal{H}_0$ is modeled as $y = SAb + \xi$, where the $k$th column $s_k$ of the matrix $S$ is the normalized unit-energy signature waveform vector of user $k$, $A$ is the diagonal matrix of user amplitudes and $b$ is the vector whose $k$th component is the antipodal symbol, $-1$ or $1$, transmitted by user $k$ [29], [30]. The random vector $\xi$ has zero mean and the known covariance matrix $\sigma^2 I_n$, where $I_n$ is the identity matrix of size $n$. Under hypothesis $\mathcal{H}_l$, the vector $a\beta s_l$ is added to $y$, i.e., a new user with the signature $s_l$ emits the symbol $\beta$ with the amplitude $a$. It is assumed that $s_l$ belongs to a finite set of predefined nonorthogonal signatures. The multiple-access interferences $SAb$ can be eliminated by using the above-mentioned parity space approach [31]. The goal is to detect the arrival of the new user and to identify the waveform $s_l$. This is a difficult problem, especially when the common length $n$ of each user's signature is shorter than the total number of simultaneously active users [32]. Section VI will further elaborate upon this example.
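The multiuser model can be simulated with a short Python sketch. All dimensions, amplitudes, noise level and signatures below are hypothetical, and the names `a_new`, `b_new` and `s_new` for the new user's amplitude, symbol and signature are illustrative only. Note that the multiple-access interference $SAb = S(Ab)$ lies in the column space of $S$, so it plays the role of the linear nuisance term $Hx$ with $H = S$ and $x = Ab$.

```python
import math
import random

def unit(v):
    """Scale a signature vector to unit energy (unit Euclidean norm)."""
    norm = math.sqrt(sum(vi * vi for vi in v))
    return [vi / norm for vi in v]

def received(S_cols, amps, symbols, noise_std, rng, new=None):
    """Return y = S A b + xi, plus a_new * b_new * s_new when new = (a_new, b_new, s_new)."""
    n = len(S_cols[0])
    y = [rng.gauss(0.0, noise_std) for _ in range(n)]   # xi ~ N(0, noise_std^2 I_n)
    for s, a, b in zip(S_cols, amps, symbols):            # interference S A b
        for i in range(n):
            y[i] += a * b * s[i]
    if new is not None:                                   # arrival of a new user
        a_new, b_new, s_new = new
        for i in range(n):
            y[i] += a_new * b_new * s_new[i]
    return y

rng = random.Random(0)
n, users = 8, 3  # hypothetical chip length and number of active users
S_cols = [unit([rng.choice((-1.0, 1.0)) for _ in range(n)]) for _ in range(users)]
amps = [1.0, 0.8, 1.2]
symbols = [rng.choice((-1, 1)) for _ in range(users)]
candidates = [unit([rng.choice((-1.0, 1.0)) for _ in range(n)]) for _ in range(4)]
l = 2  # index of the new user's signature in the hypothetical candidate set
y0 = received(S_cols, amps, symbols, 0.5, rng)                               # under H_0
yl = received(S_cols, amps, symbols, 0.5, rng, new=(1.0, 1, candidates[l]))  # under H_l
```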

When the optimal statistical test is unknown or intractable, it is often assumed that the optimal weights are equal (since this is the least informative a priori choice) and the threshold is tuned to satisfy the false alarm constraint. The resulting test is called the $M$-ary Generalized Likelihood Ratio Test (MGLRT) between equally probable hypotheses. It must be noted that the optimality of the MGLRT is still an open problem.
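The MGLRT can be sketched in Python under the following assumptions: identity noise covariance (after prefiltering), nuisance parameters handled by maximizing the likelihood over $x$, which yields the log-statistics $y^T P\theta_l - \tfrac12 \|P\theta_l\|^2$ with $P$ the projector onto the orthogonal complement of the column space of $H$, equal weights, and a threshold $h$ set to the empirical $(1-\alpha)$ quantile of the largest statistic under $\mathcal{H}_0$. Since $(Hx)^T P\theta_l = 0$, the statistics do not depend on $x$ and the calibration can be run with $x = 0$. The class name, constellation, $H$ and $\alpha$ are hypothetical.

```python
import math
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def orthonormal_basis(cols):
    """Gram-Schmidt on the linearly independent columns of H."""
    basis = []
    for c in cols:
        v = list(c)
        for q in basis:
            p = dot(q, v)
            v = [vi - p * qi for vi, qi in zip(v, q)]
        norm = math.sqrt(dot(v, v))
        basis.append([vi / norm for vi in v])
    return basis

def reject(v, basis):
    """Apply P = I - Q Q^T (projection onto the complement of span(H))."""
    for q in basis:
        p = dot(q, v)
        v = [vi - p * qi for vi, qi in zip(v, q)]
    return v

class MGLRT:
    def __init__(self, thetas, H_cols):
        basis = orthonormal_basis(H_cols)
        self.proj = [reject(t, basis) for t in thetas]  # P theta_l, l = 1..K
        self.h = math.inf

    def stats(self, y):
        # Generalized log-likelihood ratios y^T P theta_l - ||P theta_l||^2 / 2.
        return [dot(y, p) - 0.5 * dot(p, p) for p in self.proj]

    def calibrate(self, alpha, n_mc, rng):
        # Empirical (1 - alpha) quantile of the largest statistic under H_0 (x = 0).
        n = len(self.proj[0])
        s = sorted(max(self.stats([rng.gauss(0.0, 1.0) for _ in range(n)]))
                   for _ in range(n_mc))
        self.h = s[math.ceil((1.0 - alpha) * n_mc) - 1]

    def decide(self, y):
        # 0 = no alarm; otherwise the index l (1-based) of the largest statistic.
        s = self.stats(y)
        m = max(s)
        return 0 if m <= self.h else s.index(m) + 1

# Hypothetical setup: n = 4, common unknown bias, theta_l = 3 e_l for l = 1, 2, 3.
H_cols = [[1.0, 1.0, 1.0, 1.0]]
thetas = [[3.0 if i == l else 0.0 for i in range(4)] for l in range(3)]
mglrt = MGLRT(thetas, H_cols)
mglrt.calibrate(alpha=0.05, n_mc=20000, rng=random.Random(0))
```

Equal weights make the decision depend only on the geometry of the projected vectors $P\theta_l$; the weighted tests discussed in this paper replace them with hypothesis-specific weights.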

C. Contribution and Organization of the Paper

The first contribution is the design of a constrained $\varepsilon$-minimax detection/classification test that solves the detection/classification problem for nonorthogonal vectors with linear nuisance parameters and additive Gaussian noise with a known general covariance matrix. This test is based on the maximum of weighted likelihood ratios, i.e., it is a Bayesian test associated with some specific weights. It is $\varepsilon$-optimal (under mild assumptions) in the sense that it loses only a small part, say $\varepsilon$, of optimality with respect to the purely theoretical minimax test. This loss of optimality, which is theoretically bounded, is unavoidable since the purely theoretical minimax test is intractable due to the difficulties mentioned above, and it becomes increasingly negligible as the signal-to-noise ratio grows. It is also shown that this test coincides with a constrained $\varepsilon$-equalizer Bayesian test, which equalizes the classification error probabilities over the alternative hypotheses up to a constant $\varepsilon$.

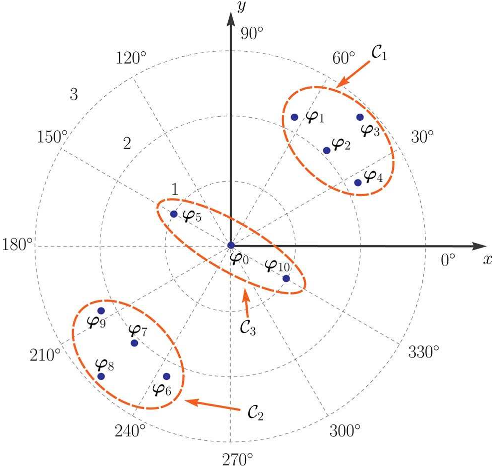

Secondly, an algorithm is proposed to compute the optimal weights of the proposed test with a reasonable numerical complexity. This algorithm is based on a graph, namely the separability map, which describes the mutual geometry between the vectors. The separability map serves to identify the least separable vectors, which makes it possible to design the associated constrained $\varepsilon$-minimax test. This map is also used to calculate in advance the asymptotic maximum classification error probability of the constrained $\varepsilon$-minimax test as the Signal-to-Noise Ratio (SNR) tends to infinity. Moreover, for large SNR, an asymptotically equivalent test is proposed whose optimal weights have a very simple form.

Finally, it is shown that the MGLRT is $\varepsilon$-optimal when the mutual geometry between the hypotheses is very simple, i.e., when each vector has at most one other vector nearest to it in terms of Euclidean distance. In general, the MGLRT is suboptimal and the loss of optimality may be significant.

The paper is organized as follows. Section II starts with the problem statement and introduces the statistical framework used in this paper, including the constrained $\varepsilon$-minimax criterion. Section III describes the general methodology to reduce the detection/classification problem between multiple hypotheses with nuisance parameters to a detection/classification problem between multiple hypotheses without nuisance parameters. This reduction is based on the fact that it is sufficient to design a constrained $\varepsilon$-equalizer Bayesian test to obtain a constrained $\varepsilon$-minimax one. Section IV defines the separability map and states the main theorem of this paper, which establishes the constrained $\varepsilon$-equalizer Bayesian test. The proof of this theorem is given in Appendix A, and the false alarm probability of this test is calculated in Appendix B. Section V gives a test that is asymptotically equivalent to the constrained $\varepsilon$-minimax test as the SNR tends to infinity; its derivation is given in Appendix C. It is also shown that the MGLRT is asymptotically $\varepsilon$-optimal when the mutual geometry between the hypotheses is very simple. Section VI deals with a practical problem, namely the identification of a new active user in a multiuser system, and shows the efficiency of the proposed $\varepsilon$-optimal test. Finally, Section VII concludes this paper.

II. PROBLEM STATEMENT

This section presents the multiple hypothesis testing problem, which consists of detecting and classifying a vector in the presence of linear nuisance parameters. A new optimality criterion, namely the constrained $\varepsilon$-minimax criterion, is introduced and motivated.

A. Multiple Hypotheses Testing

The observation model has the form (1). Without loss of generality, it is assumed that the noise vector $\xi$ follows a zero-mean Gaussian distribution $\mathcal{N}(0, I_n)$. Indeed, it is always possible to multiply (1) on the left by the inverse square-root matrix $\Sigma^{-1/2}$ of $\Sigma$ to obtain the linear Gaussian model with the vector $\Sigma^{-1/2}\theta$, the nuisance matrix $\Sigma^{-1/2}H$ and a Gaussian noise with covariance matrix $I_n$. It is also assumed that $H$ has full column rank. If the matrix $H$ does not satisfy this assumption, it suffices to keep a maximal set of linearly independent columns to obtain a full-column-rank matrix spanning the same linear space. It is then desirable to solve the multiple Gaussian hypothesis testing problem between the statistical hypotheses

$$\underline{\mathcal{H}}_l : \; y \sim \mathcal{N}(\theta_l + Hx, I_n), \quad x \in \mathbb{R}^q, \qquad l = 0, \ldots, K \qquad (2)$$

where $\theta_0 = 0$. The following separability condition is assumed to be satisfied:

$$\theta_l - \theta_j \notin \mathcal{R}(H) \quad \text{for all } 0 \le j \neq l \le K, \qquad (3)$$

where $\mathcal{R}(H)$ denotes the column space of $H$.

In other words, it is assumed that the intersection of the two linear manifolds $\{\theta_l + Hx : x \in \mathbb{R}^q\}$ and $\{\theta_j + Hx : x \in \mathbb{R}^q\}$ (which are parallel to each other) is empty for all $l \neq j$ (two parallel linear manifolds with a nonempty intersection are equal). Here, the parameter set $\{\theta_l + Hx : x \in \mathbb{R}^q\}$ associated with hypothesis $\underline{\mathcal{H}}_l$ is not a singleton; hence, $\underline{\mathcal{H}}_l$ is called a composite hypothesis [33]. Otherwise, the hypothesis is simple and it is identified by the absence of an underline, say $\mathcal{H}_l$. The set of decision strategies for the $(K+1)$-ary hypothesis testing problem (2) is specified by the set of test functions.
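The separability assumption (3), i.e., the requirement that the manifolds associated with $\theta_l$ and $\theta_j$ never intersect, holds exactly when $P(\theta_l - \theta_j) \neq 0$ for all $l \neq j$, where $P$ is the orthogonal projector onto the complement of the column space of $H$. A Python sketch of this check follows; the tolerance `tol` is an arbitrary numerical choice and the test constellations are hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def orthonormal_basis(cols):
    """Modified Gram-Schmidt on the linearly independent columns of H."""
    basis = []
    for c in cols:
        v = list(c)
        for q in basis:
            p = dot(q, v)
            v = [vi - p * qi for vi, qi in zip(v, q)]
        norm = math.sqrt(dot(v, v))
        basis.append([vi / norm for vi in v])
    return basis

def reject(v, basis):
    """Apply P = I - Q Q^T (projection onto the complement of span(H))."""
    for q in basis:
        p = dot(q, v)
        v = [vi - p * qi for vi, qi in zip(v, q)]
    return v

def is_separable(thetas, H_cols, tol=1e-9):
    """Check that theta_l - theta_j does not lie in span(H) for any l != j."""
    basis = orthonormal_basis(H_cols)
    for l in range(len(thetas)):
        for j in range(l):
            d = reject([a - b for a, b in zip(thetas[l], thetas[j])], basis)
            if math.sqrt(dot(d, d)) <= tol:
                return False
    return True

H_cols = [[1.0, 1.0, 1.0]]  # hypothetical nuisance: one unknown common bias
print(is_separable([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]], H_cols))  # True
print(is_separable([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [2.0, 1.0, 1.0]], H_cols))  # False
```

In the second call, $\theta_2 - \theta_1 = (1, 1, 1)^T$ lies in the column space of $H$, so the two hypotheses cannot be told apart whatever the test.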

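Definition 1: A test function $\delta(y) = (\delta_0(y), \ldots, \delta_K(y))$ for the multiple hypotheses $\underline{\mathcal{H}}_0, \ldots, \underline{\mathcal{H}}_K$ is a $(K+1)$-dimensional vector function defined on $\mathbb{R}^n$ such that $\delta_l(y) \in \{0, 1\}$ and $\sum_{l=0}^{K} \delta_l(y) = 1$.

Given $y$, the test function $\delta$ decides the hypothesis $\underline{\mathcal{H}}_l$ if and only if $\delta_l(y) = 1$; in this case, the other components $\delta_j(y)$, $j \neq l$, are zero. Randomized test functions (a randomized test function satisfies $0 < \delta_l(y) < 1$ for some $l$) are not considered in this paper, since the probability distribution of $y$ is continuous whatever the true hypothesis. The average performance of a particular test function $\delta$ is determined by the functions $\alpha_{j,l}(\delta; x) = \mathbb{E}_{j,x}[\delta_l(y)]$, $0 \le j, l \le K$, where $\mathbb{E}_{j,x}$ stands for the expectation of $\delta_l(y)$ when $y$ follows the distribution $\mathcal{N}(\theta_j + Hx, I_n)$. The false alarm probability function is given by $\alpha_l(\delta; x) = 1 - \alpha_{l,l}(\delta; x)$ when $l = 0$. The function $\alpha_{l,0}(\delta; x)$ for $l \neq 0$ describes the probability of miss detection. When $l \neq 0$, $\alpha_l(\delta; x) = 1 - \alpha_{l,l}(\delta; x)$ is the classification error probability. The maximum classification error probability for the test function $\delta$ is denoted

$$\bar{\alpha}(\delta) = \max_{1 \le l \le K} \; \sup_{x \in \mathbb{R}^q} \alpha_l(\delta; x).$$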

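For a test $\delta^{a}$, where $a$ is a given superscript, all the above-mentioned notations are completed by the superscript $a$. Let $\mathcal{C}_\alpha$ be the set of test functions whose maximum false alarm probability is less than or equal to $\alpha$:

$$\mathcal{C}_\alpha = \Big\{ \delta : \sup_{x \in \mathbb{R}^q} \alpha_0(\delta; x) \le \alpha \Big\}.$$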
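These error probabilities can be estimated by Monte Carlo simulation. The following Python sketch assumes simple hypotheses $y \sim \mathcal{N}(\theta_l, I_n)$ (no nuisance parameter) and a decision rule passed as a function returning the index of the accepted hypothesis. For each $l$ it estimates the probability of not deciding the true hypothesis: the false alarm probability for $l = 0$ and, for $l \ge 1$, the probability of a wrong decision (missed detection or misclassification). The rule `simple_rule`, its threshold and the constellation are hypothetical.

```python
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def error_probabilities(decide, thetas, n_mc, rng):
    """Monte Carlo estimate of P_l(decision != l) for y ~ N(theta_l, I_n), l = 0..K."""
    errors = []
    for l, theta in enumerate(thetas):
        wrong = 0
        for _ in range(n_mc):
            y = [t + rng.gauss(0.0, 1.0) for t in theta]
            wrong += decide(y) != l
        errors.append(wrong / n_mc)
    return errors

# Hypothetical constellation theta_0 = 0, theta_1 = 4 e_1, theta_2 = 4 e_2.
thetas = [[0.0, 0.0], [4.0, 0.0], [0.0, 4.0]]

def simple_rule(y, h=0.0):
    """Hypothetical rule: alarm if max_l (theta_l^T y - ||theta_l||^2 / 2) > h, then argmax."""
    s = [dot(t, y) - 0.5 * dot(t, t) for t in thetas[1:]]
    m = max(s)
    return 0 if m <= h else s.index(m) + 1

alphas = error_probabilities(simple_rule, thetas, n_mc=20000, rng=random.Random(0))
false_alarm, max_class_error = alphas[0], max(alphas[1:])
```

For this rule, an alarm under $\mathcal{H}_0$ occurs when $\max(y_1, y_2) > 2$, so the false alarm probability is $1 - \Phi(2)^2 \approx 0.045$, which the estimate should approach as `n_mc` grows.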

B. Constrained Epsilon-Minimax Test

As mentioned in the introduction, the constrained minimax criterion given in [18] is a very natural criterion for problem (2). Unfortunately, as underlined in [18], since the geometry of the set $\{\theta_0, \ldots, \theta_K\}$ may be very complex, it is impossible to infer the structure of the minimax test. Hence, to overcome this difficulty, it makes sense to consider constrained $\varepsilon$-minimax tests, i.e., tests that approximate the optimal minimax test with a small loss of optimality, say $\varepsilon$.

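Definition 2: A test function $\delta^{\star}$ is a constrained $\varepsilon$-minimax test in the class $\mathcal{C}_\alpha$ between the hypotheses $\underline{\mathcal{H}}_0, \ldots, \underline{\mathcal{H}}_K$ if the following conditions are fulfilled:

i) $\delta^{\star} \in \mathcal{C}_\alpha$;

ii) There exists a positive function $\varepsilon$ satisfying $\varepsilon \to 0$ as the SNR tends to infinity such that $\bar{\alpha}(\delta^{\star}) \le \bar{\alpha}(\delta) + \varepsilon$ for any other test function $\delta \in \mathcal{C}_\alpha$.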

Obviously, Definition 2 assumes that the positive constant $\varepsilon$ is (very) small. In some cases, it is possible to obtain $\varepsilon = 0$ (see Section VI) but, in general, $\varepsilon > 0$ because of the complexity of the vector constellation. Contrary to a purely constrained minimax test, the design of a constrained $\varepsilon$-minimax test tolerates small errors on the classification error probabilities. Hence, it becomes possible to use lower and upper bounds on these probabilities to evaluate the statistical performance of the test. As underlined in [25], the exact calculation of these probabilities is generally intractable.

III. EPSILON-MINIMAX TEST FOR COMPOSITE HYPOTHESES

This section introduces the constrained $\varepsilon$-equalizer Bayesian test of level $\alpha$. Proposition 1 shows that such a test is necessarily a constrained $\varepsilon$-minimax one. The first step in designing the constrained $\varepsilon$-equalizer Bayesian test between composite hypotheses in the presence of nuisance parameters consists of eliminating these unknown parameters. Proposition 2 shows that this elimination, based on the rejection of the nuisance parameters, leads to a reduced decision problem between simple statistical hypotheses.
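One way to carry out such a rejection is sketched below in Python, assuming $H$ has full column rank: build a matrix $W$ whose $n - q$ rows form an orthonormal basis of the orthogonal complement of the column space of $H$, so that $WH = 0$ and $WW^T = I_{n-q}$. The reduced observation $z = Wy$ then follows $\mathcal{N}(W\theta_l, I_{n-q})$ whatever $x$, i.e., a simple hypothesis. This is one possible construction, not necessarily the one used in Proposition 2; the function names and the bias-plus-drift matrix $H$ are hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def complement_basis(H_cols, n, tol=1e-10):
    """Rows of W: orthonormal basis of the orthogonal complement of span(H).
    Assumes H has full column rank, so its q columns are all kept first."""
    basis = []
    candidates = [list(c) for c in H_cols]
    candidates += [[1.0 if i == k else 0.0 for i in range(n)] for k in range(n)]
    for c in candidates:
        v = c
        for q in basis:
            p = dot(q, v)
            v = [vi - p * qi for vi, qi in zip(v, q)]
        norm = math.sqrt(dot(v, v))
        if norm > tol:                 # skip vectors already in the current span
            basis.append([vi / norm for vi in v])
    return basis[len(H_cols):]         # drop the orthonormal basis of span(H)

def reject_nuisance(W, y):
    """Reduced observation z = W y."""
    return [dot(w, y) for w in W]

# Hypothetical nuisance model: unknown bias and linear drift over n = 4 samples.
n = 4
H_cols = [[1.0, 1.0, 1.0, 1.0], [0.0, 1.0, 2.0, 3.0]]
W = complement_basis(H_cols, n)

y = [0.3, -1.2, 2.0, 0.7]
x = (5.0, -2.0)
y_shifted = [yi + x[0] * h0 + x[1] * h1 for yi, h0, h1 in zip(y, *H_cols)]
print(reject_nuisance(W, y))          # reduced observation z = W y
print(reject_nuisance(W, y_shifted))  # same z up to rounding: x has been rejected
```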

A. Constrained Epsilon-Equalizer Test

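Let us recall the definition of the constrained Bayesian test before introducing the definition of the constrained $\varepsilon$-equalizer test. Let $\pi$ be a probability distribution over the parameters of the hypotheses $\underline{\mathcal{H}}_0, \ldots, \underline{\mathcal{H}}_K$, called the a priori distribution. For all $l$, this distribution induces some a priori distributions $\pi_l$ on the linear manifolds $\{\theta_l + Hx : x \in \mathbb{R}^q\}$ and some a priori probabilities $q_l$ such that

(4)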

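Let $f_{\theta}(y)$ be the probability density function (pdf) of the observation vector $y$ following the distribution $\mathcal{N}(\theta, I_n)$. With each hypothesis $\underline{\mathcal{H}}_l$ is associated the weighted pdf (see details in [10]) defined by

$$\bar{f}_l(y) = \int_{\mathbb{R}^q} f_{\theta_l + Hx}(y) \, d\pi_l(x).$$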

Let $\Lambda_l(y)$ be the weighted log-likelihood ratio defined by

(5)

for $1 \le l \le K$. The constrained Bayesian test function $\delta^{\pi}$ of level $\alpha$ associated with $\pi$ is given by

(6)

and, for $1 \le l \le K$,

(7)
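As recalled in Section I-B, a test of this type compares the maximum of weighted log-likelihood ratios with a threshold. The following Python sketch is only illustrative and rests on assumptions not taken from (5), (6) and (7): the hypotheses are simple, $y \sim \mathcal{N}(\theta_l, I_n)$ (for instance after nuisance rejection), and the weights enter as additive terms, $\Lambda_l(y) = \log w_l + \theta_l^T y - \tfrac12\|\theta_l\|^2$. The threshold $h$ is set by Monte Carlo so that the empirical false alarm probability is about $\alpha$; the weights `log_w` are hypothetical inputs that an equalizer design would tune.

```python
import math
import random

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def weighted_llr(y, thetas, log_w):
    """Assumed form: Lambda_l(y) = log w_l + theta_l^T y - ||theta_l||^2 / 2, l = 1..K."""
    return [lw + dot(t, y) - 0.5 * dot(t, t) for t, lw in zip(thetas, log_w)]

def calibrate_threshold(thetas, log_w, alpha, n_mc, rng):
    """Empirical (1 - alpha) quantile of max_l Lambda_l(y) under H_0: y ~ N(0, I_n)."""
    n = len(thetas[0])
    s = sorted(max(weighted_llr([rng.gauss(0.0, 1.0) for _ in range(n)], thetas, log_w))
               for _ in range(n_mc))
    return s[math.ceil((1.0 - alpha) * n_mc) - 1]

def decide(y, thetas, log_w, h):
    """Return 0 if max_l Lambda_l(y) <= h, else the maximizing index l (1-based)."""
    s = weighted_llr(y, thetas, log_w)
    m = max(s)
    return 0 if m <= h else s.index(m) + 1

# Hypothetical nonorthogonal vectors with different norms and hypothetical weights.
thetas = [[3.0, 0.0], [2.5, 2.5]]
log_w = [0.0, -0.5]
h = calibrate_threshold(thetas, log_w, alpha=0.05, n_mc=20000, rng=random.Random(0))

rng = random.Random(1)
errors = []
for l, t in enumerate(thetas, start=1):
    wrong = sum(decide([ti + rng.gauss(0.0, 1.0) for ti in t], thetas, log_w, h) != l
                for _ in range(10000))
    errors.append(wrong / 10000)
print(h, errors)  # the two error probabilities generally differ; tuning log_w trades them off
```

Changing `log_w` (and recalibrating `h`) shifts the decision regions between hypotheses, which is the lever an equalizer-type design would use to balance the classification error probabilities.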